
Wrapped Distribution

In probability theory and directional statistics, a wrapped probability distribution is a continuous probability distribution that describes data points that lie on a unit ''n''-sphere. In one dimension, a wrapped distribution consists of points on the unit circle. If \phi is a random variate in the interval (-\infty,\infty) with probability density function (PDF) p(\phi), then z = e^{i\phi} is a circular variable distributed according to the wrapped distribution p_{wz}(\theta) and \theta = \arg(z) is an angular variable in the interval (-\pi,\pi] distributed according to the wrapped distribution p_w(\theta). Any probability density function p(\phi) on the line can be "wrapped" around the circumference of a circle of unit radius. That is, the PDF of the wrapped variable :\theta=\phi \mod 2\pi in some interval of length 2\pi is : p_w(\theta)=\sum_{k=-\infty}^\infty p(\theta+2\pi k), which is a periodic sum of period 2\pi. The preferred interval is generally (-\pi<\theta\le\pi) for which ...
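
The periodic sum above can be approximated numerically by truncating it to finitely many terms. The following is a minimal sketch under stated assumptions: the helper names `wrap_pdf`, `normal_pdf` and `wrap_angle`, the truncation bound `k_max`, and the choice of a normal density as the example are all illustrative and do not come from the article.

```python
import math

def wrap_pdf(pdf, theta, k_max=50):
    """Approximate p_w(theta) = sum_k pdf(theta + 2*pi*k) using |k| <= k_max.

    The truncation is an assumption: it is only accurate when the tails of
    `pdf` decay fast enough for the omitted terms to be negligible.
    """
    return sum(pdf(theta + 2.0 * math.pi * k) for k in range(-k_max, k_max + 1))

def normal_pdf(x, mu=0.0, sigma=2.0):
    """Density of a normal distribution on the line (illustrative example)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def wrap_angle(phi):
    """Map a real number phi to the interval (-pi, pi]."""
    theta = math.fmod(phi, 2.0 * math.pi)  # result lies in (-2*pi, 2*pi)
    if theta > math.pi:
        theta -= 2.0 * math.pi
    elif theta <= -math.pi:
        theta += 2.0 * math.pi
    return theta

def integrate_over_circle(f, n=2000):
    """Midpoint-rule integral of f over (-pi, pi)."""
    h = 2.0 * math.pi / n
    return h * sum(f(-math.pi + (i + 0.5) * h) for i in range(n))

if __name__ == "__main__":
    total = integrate_over_circle(lambda t: wrap_pdf(normal_pdf, t))
    print("integral of wrapped density:", total)
    print("7.0 wrapped to (-pi, pi]:", wrap_angle(7.0))
```

If the truncation is adequate, the wrapped density should integrate to approximately 1 over any interval of length 2\pi, which gives a quick sanity check.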

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability ...

Sequence

In mathematics, a sequence is an enumerated collection of objects in which repetitions are allowed and order matters. Like a set, it contains members (also called ''elements'', or ''terms''). The number of elements (possibly infinite) is called the ''length'' of the sequence. Unlike a set, the same elements can appear multiple times at different positions in a sequence, and unlike a set, the order does matter. Formally, a sequence can be defined as a function from natural numbers (the positions of elements in the sequence) to the elements at each position. The notion of a sequence can be generalized to an indexed family, defined as a function from an ''arbitrary'' index set. For example, (M, A, R, Y) is a sequence of letters with the letter 'M' first and 'Y' last. This sequence differs from (A, R, M, Y). Also, the sequence (1, 1, 2, 3, 5, 8), which contains the number 1 at two different positions, is a valid sequence. Sequences can be ''finite'', as in these examples, or ''infi ...

Wrapped Exponential Distribution

In probability theory and directional statistics, a wrapped exponential distribution is a wrapped probability distribution that results from the "wrapping" of the exponential distribution around the unit circle. Definition The probability density function of the wrapped exponential distribution is : f_{WE}(\theta;\lambda)=\sum_{k=0}^\infty \lambda e^{-\lambda(\theta+2\pi k)}=\frac{\lambda e^{-\lambda\theta}}{1-e^{-2\pi\lambda}}, for 0 \le \theta < 2\pi, where \lambda > 0 is the rate parameter of the unwrapped distribution. This is identical to the truncated distribution obtained by restricting observed values ''X'' from the exponential distribution with rate parameter ''λ'' to the range 0\le X < 2\pi. Characteristic function The characteristic function of the wrapped exponential is just the characteristic function of the exponential distribution evaluated at integer arguments: : \varphi_n(\lambda)=\frac{1}{1-in/\lambda} which yields an alternate expression for the wrapped exponentia ...
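
The equality between the wrapped sum and its closed form follows from summing a geometric series with ratio e^{-2\pi\lambda}. A small numerical check can make this concrete. This is only a sketch: the function names and the truncation bound `k_max` are illustrative assumptions, and the truncated sum is accurate only when e^{-2\pi\lambda} is not too close to 1.

```python
import math

def wrapped_exp_sum(theta, lam, k_max=200):
    """Truncated wrapped sum: sum_{k=0}^{k_max} lam * exp(-lam * (theta + 2*pi*k))."""
    return sum(lam * math.exp(-lam * (theta + 2.0 * math.pi * k))
               for k in range(k_max + 1))

def wrapped_exp_closed(theta, lam):
    """Closed form lam * exp(-lam*theta) / (1 - exp(-2*pi*lam)) for 0 <= theta < 2*pi."""
    return lam * math.exp(-lam * theta) / (1.0 - math.exp(-2.0 * math.pi * lam))

if __name__ == "__main__":
    theta, lam = 1.0, 0.5
    print(wrapped_exp_sum(theta, lam), wrapped_exp_closed(theta, lam))
```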

Wrapped Cauchy Distribution

In probability theory and directional statistics, a wrapped Cauchy distribution is a wrapped probability distribution that results from the "wrapping" of the Cauchy distribution around the unit circle. The Cauchy distribution is sometimes known as a Lorentzian distribution, and the wrapped Cauchy distribution may sometimes be referred to as a wrapped Lorentzian distribution. The wrapped Cauchy distribution is often found in the field of spectroscopy where it is used to analyze diffraction patterns (e.g. see Fabry–Pérot interferometer). Description The probability density function of the wrapped Cauchy distribution is: : f_{WC}(\theta;\mu,\gamma)=\sum_{n=-\infty}^\infty \frac{\gamma}{\pi(\gamma^2+(\theta-\mu+2\pi n)^2)}\qquad -\pi<\theta<\pi where \gamma is the scale factor and \mu is the peak position of the "unwrapped" distribution. Expressing the above pdf in terms of the ...
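
As an illustration of the wrapped sum, the sketch below evaluates a truncated version of it and compares the result with the standard closed form \frac{1}{2\pi}\frac{\sinh\gamma}{\cosh\gamma-\cos(\theta-\mu)}. That closed form is not stated in the truncated text above and is brought in here as an assumption for comparison; the function names and the bound `n_max` are likewise illustrative. Because the Cauchy tails decay only like 1/n^2, the truncated sum converges slowly and many terms are needed.

```python
import math

def wrapped_cauchy_sum(theta, mu, gamma, n_max=20000):
    """Truncated wrapped sum over |n| <= n_max of the Cauchy density."""
    total = 0.0
    for n in range(-n_max, n_max + 1):
        x = theta - mu + 2.0 * math.pi * n
        total += gamma / (math.pi * (gamma * gamma + x * x))
    return total

def wrapped_cauchy_closed(theta, mu, gamma):
    """Standard closed form, used here only as a reference value."""
    return math.sinh(gamma) / (2.0 * math.pi * (math.cosh(gamma) - math.cos(theta - mu)))

if __name__ == "__main__":
    print(wrapped_cauchy_sum(1.0, 0.3, 1.0), wrapped_cauchy_closed(1.0, 0.3, 1.0))
```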

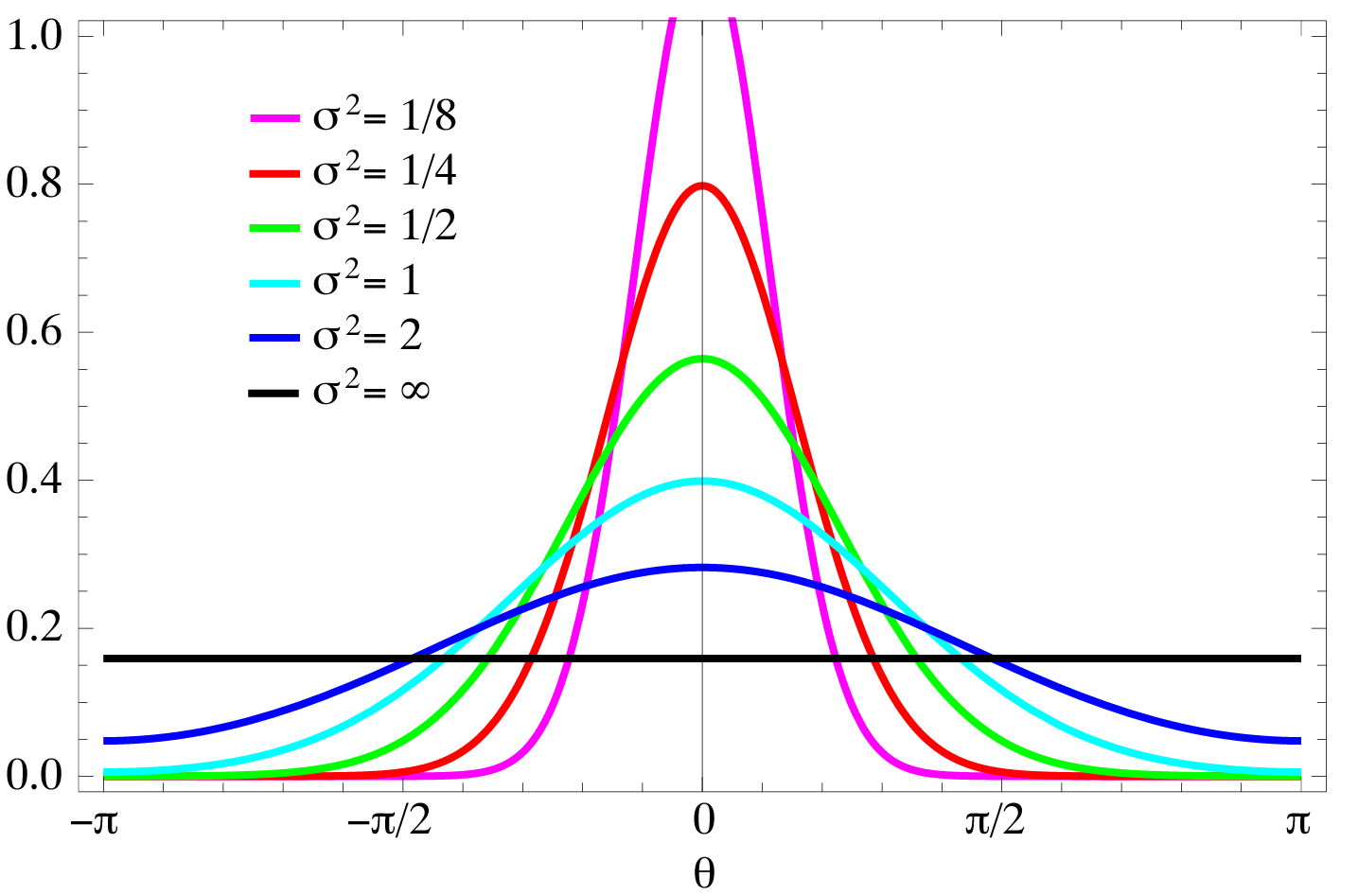

Wrapped Normal Distribution

In probability theory and directional statistics, a wrapped normal distribution is a wrapped probability distribution that results from the "wrapping" of the normal distribution around the unit circle. It finds application in the theory of Brownian motion and is a solution to the heat equation for periodic boundary conditions. It is closely approximated by the von Mises distribution, which, due to its mathematical simplicity and tractability, is the most commonly used distribution in directional statistics. Definition The probability density function of the wrapped normal distribution is : f_{WN}(\theta;\mu,\sigma)=\frac{1}{\sigma\sqrt{2\pi}} \sum^{\infty}_{k=-\infty} \exp \left[\frac{-(\theta - \mu + 2\pi k)^2}{2\sigma^2} \right] where ''μ'' and ''σ'' are the mean and standard deviation of the unwrapped distribution, respectively. Expressing the above density function in terms of the characteristic function of the normal distribution yields: : f_{WN}(\theta;\mu,\sigma)=\frac{1}{2\pi}\sum_{n=-\infty}^\infty e^{-\sigma^2n^2/2+in(\theta-\mu)} =\frac{1}{2\pi}\vartheta\left(\frac{\theta-\mu}{2\pi},\frac{i\sigma^2}{2\pi}\right) , where \vartheta(\theta,\tau) ...
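
The two series for the wrapped normal density can be compared numerically. The sketch below is illustrative only: the function names and truncation bounds are assumptions, and the Fourier form is written in its real-valued version, \frac{1}{2\pi}\left(1+2\sum_{n\ge 1}e^{-\sigma^2n^2/2}\cos(n(\theta-\mu))\right), obtained by pairing the terms n and -n.

```python
import math

def wn_direct(theta, mu, sigma, k_max=20):
    """Truncated wrapped sum of normal densities, |k| <= k_max."""
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return norm * sum(
        math.exp(-((theta - mu + 2.0 * math.pi * k) ** 2) / (2.0 * sigma ** 2))
        for k in range(-k_max, k_max + 1)
    )

def wn_fourier(theta, mu, sigma, n_max=50):
    """Truncated Fourier (characteristic-function) series, real-valued form."""
    series = sum(
        math.exp(-0.5 * sigma ** 2 * n ** 2) * math.cos(n * (theta - mu))
        for n in range(1, n_max + 1)
    )
    return (1.0 + 2.0 * series) / (2.0 * math.pi)

if __name__ == "__main__":
    print(wn_direct(0.7, 0.2, 1.0), wn_fourier(0.7, 0.2, 1.0))
```

In practice the direct sum tends to converge faster for small ''σ'' and the Fourier series for large ''σ'', so which form to truncate is a design choice that depends on the parameter range.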

Closed Form Expression

In mathematics, a closed-form expression is a mathematical expression that uses a finite number of standard operations. It may contain constants, variables, certain well-known operations (e.g., + − × ÷), and functions (e.g., ''n''th root, exponent, logarithm, trigonometric functions, and inverse hyperbolic functions), but usually no limit, differentiation, or integration. The set of operations and functions may vary with author and context. Example: roots of polynomials The solutions of any quadratic equation with complex coefficients can be expressed in closed form in terms of addition, subtraction, multiplication, division, and square root extraction, each of which is an elementary function. For example, the quadratic equation :ax^2+bx+c=0, is tractable since its solutions can be expressed as a closed-form expression, i.e. in terms of elementary functions: :x=\frac{-b\pm\sqrt{b^2-4ac}}{2a}. Similarly, solutions of cubic and quartic (third and fourth degree) equations can be expressed u ...
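
Because the quadratic formula is a closed-form expression, it translates directly into a few arithmetic operations and one square root. The sketch below is a minimal illustration, assuming complex coefficients are allowed; the function name `quadratic_roots` is hypothetical.

```python
import cmath

def quadratic_roots(a, b, c):
    """Return both roots of a*x**2 + b*x + c = 0 using the closed-form formula.

    cmath.sqrt is used so that a negative or complex discriminant is handled.
    """
    if a == 0:
        raise ValueError("a must be nonzero for a quadratic equation")
    d = cmath.sqrt(b * b - 4 * a * c)
    return (-b + d) / (2 * a), (-b - d) / (2 * a)

if __name__ == "__main__":
    print(quadratic_roots(1, -3, 2))  # real roots
    print(quadratic_roots(1, 0, 1))   # purely imaginary roots
```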

Orthogonal Basis

In mathematics, particularly linear algebra, an orthogonal basis for an inner product space V is a basis for V whose vectors are mutually orthogonal. If the vectors of an orthogonal basis are normalized, the resulting basis is an orthonormal basis. As coordinates Any orthogonal basis can be used to define a system of orthogonal coordinates V. Orthogonal (not necessarily orthonormal) bases are important due to their appearance from curvilinear orthogonal coordinates in Euclidean spaces, as well as in Riemannian and pseudo-Riemannian manifolds. In functional analysis In functional analysis, an orthogonal basis is any basis obtained from an orthonormal basis (or Hilbert basis) using multiplication by nonzero scalars. Extensions Symmetric bilinear form The concept of an orthogonal basis is applicable to a vector space V (over any field) equipped with a symmetric bilinear form \langle \cdot, \cdot \rangle, where ''orthogonality'' of two vectors v and w means \langle v, w \rangle = ...

Integral Transform

In mathematics, an integral transform maps a function from its original function space into another function space via integration, where some of the properties of the original function might be more easily characterized and manipulated than in the original function space. The transformed function can generally be mapped back to the original function space using the ''inverse transform''. General form An integral transform is any transform ''T'' of the following form: :(Tf)(u) = \int_{t_1}^{t_2} f(t)\, K(t, u)\, dt The input of this transform is a function ''f'', and the output is another function ''Tf''. An integral transform is a particular kind of mathematical operator. There are numerous useful integral transforms. Each is specified by a choice of the function K of two variables, the kernel function, integral kernel or nucleus of the transform. Some kernels have an associated ''inverse kernel'' K^{-1}(u, t) which (roughly speaking) yields an inverse transform: :f(t) = \int_{u_1}^{u_2} (Tf ...
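
To make the general form concrete, the sketch below approximates (Tf)(u) with a midpoint rule for a user-supplied kernel. The example kernel K(t, u) = e^{-ut} on [0, \infty), which gives the Laplace transform, is chosen here purely for illustration; the function names, the finite upper limit `t2 = 40.0` standing in for infinity, and the step count are all assumptions.

```python
import math

def integral_transform(f, kernel, u, t1, t2, n=40000):
    """Midpoint-rule approximation of (Tf)(u) = integral_{t1}^{t2} f(t) K(t, u) dt."""
    h = (t2 - t1) / n
    return h * sum(
        f(t1 + (i + 0.5) * h) * kernel(t1 + (i + 0.5) * h, u) for i in range(n)
    )

def laplace_kernel(t, u):
    """Kernel of the Laplace transform, K(t, u) = exp(-u * t)."""
    return math.exp(-u * t)

if __name__ == "__main__":
    # For f(t) = exp(-t) the exact Laplace transform is 1 / (1 + u).
    f = lambda t: math.exp(-t)
    approx = integral_transform(f, laplace_kernel, 2.0, 0.0, 40.0)
    print(approx, 1.0 / 3.0)
```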

Entropy (Information Theory)

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable X, which takes values in the alphabet \mathcal{X} and is distributed according to p: \mathcal{X}\to[0, 1]: \mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)], where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory defi ...
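
The definition can be evaluated directly for a finite probability vector. The following sketch is illustrative: the function name `entropy`, the validation tolerance, and the convention that outcomes with probability 0 contribute nothing (the usual limit 0 \log 0 = 0) are assumptions made here.

```python
import math

def entropy(probs, base=2.0):
    """Shannon entropy -sum p(x) log p(x) of a discrete distribution.

    base=2 gives bits, base=math.e gives nats, base=10 gives hartleys.
    Zero-probability outcomes are skipped (0 * log 0 is taken as 0).
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probs must be non-negative and sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)

if __name__ == "__main__":
    print(entropy([0.5, 0.5]))            # fair coin, in bits
    print(entropy([0.25] * 4))            # uniform over four outcomes, in bits
    print(entropy([0.5, 0.5], math.e))    # fair coin, in nats
```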

Kronecker Delta

In mathematics, the Kronecker delta (named after Leopold Kronecker) is a function of two variables, usually just non-negative integers. The function is 1 if the variables are equal, and 0 otherwise: \delta_{ij} = \begin{cases} 0 &\text{if } i \neq j, \\ 1 &\text{if } i=j. \end{cases} or with use of Iverson brackets: \delta_{ij} = [i=j], where the Kronecker delta is a piecewise function of variables i and j. For example, \delta_{12} = 0, whereas \delta_{33} = 1. The Kronecker delta appears naturally in many areas of mathematics, physics and engineering, as a means of compactly expressing its definition above. In linear algebra, the n \times n identity matrix I has entries equal to the Kronecker delta: I_{ij} = \delta_{ij} where i and j take the values 1, 2, \dots, n, and the inner product of vectors can be written as \mathbf{a}\cdot\mathbf{b} = \sum_{i,j=1}^n a_{i}\delta_{ij}b_{j} = \sum_{i=1}^n a_{i} b_{i}. Here the Euclidean vectors are defined as n-tuples: \mathbf{a} = (a_1, a_2, \dots, a_n) and \mathbf{b}= (b_1, b_2, ..., b_n) and the last step is obtained by using the values of the Kronecker delta ...
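
The identity-matrix and inner-product identities can be checked in a few lines. This is a sketch with hypothetical helper names; note that Python indices start at 0 rather than 1, which does not affect the identities.

```python
def kronecker_delta(i, j):
    """Return 1 if i == j and 0 otherwise."""
    return 1 if i == j else 0

def identity_matrix(n):
    """Build the n-by-n identity matrix entry by entry as I[i][j] = delta_ij."""
    return [[kronecker_delta(i, j) for j in range(n)] for i in range(n)]

def dot_via_delta(a, b):
    """Inner product written as the double sum of a_i * delta_ij * b_j."""
    n = len(a)
    return sum(a[i] * kronecker_delta(i, j) * b[j] for i in range(n) for j in range(n))

if __name__ == "__main__":
    print(identity_matrix(3))
    print(dot_via_delta([1, 2, 3], [4, 5, 6]))
```

The double sum collapses to the ordinary single-sum inner product because every term with i different from j is multiplied by zero.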

Residue Theorem

In complex analysis, the residue theorem, sometimes called Cauchy's residue theorem, is a powerful tool to evaluate line integrals of analytic functions over closed curves; it can often be used to compute real integrals and infinite series as well. It generalizes the Cauchy integral theorem and Cauchy's integral formula. From a geometrical perspective, it can be seen as a special case of the generalized Stokes' theorem. Statement The statement is as follows: Let U be a simply connected open subset of the complex plane containing a finite list of points a_1, \ldots, a_n, U_0 = U \setminus \{a_1, \ldots, a_n\}, and a function f defined and holomorphic on U_0. Let \gamma be a closed rectifiable curve in U_0, and denote the winding number of \gamma around a_k by \operatorname{I}(\gamma, a_k). The line integral of f around \gamma is equal to 2\pi i times the sum of residues of f at the points, each counted as many times as \gamma winds around the point: \oint_\gamma f(z)\, dz = 2\pi i \sum_{k=1}^n \operatorname{I}(\gamma, a_k) \operatorname{Res}( f, a_k ). If \gamma is a positively oriented simple closed curve, if i ...
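
A numerical contour integral gives a simple check of the statement for one special case. The sketch below assumes a positively oriented circle traversed once, so every enclosed point has winding number 1; the function name, the test function and the number of sample points are illustrative choices, not part of the theorem.

```python
import cmath
import math

def contour_integral_circle(f, center=0j, radius=1.0, n=2000):
    """Approximate the integral of f over the circle |z - center| = radius.

    Uses z(t) = center + radius * exp(i t), dz = i * radius * exp(i t) dt,
    sampled at n equally spaced values of t in [0, 2*pi).
    """
    total = 0j
    dt = 2.0 * math.pi / n
    for k in range(n):
        e = cmath.exp(1j * k * dt)
        total += f(center + radius * e) * (1j * radius * e) * dt
    return total

if __name__ == "__main__":
    # Simple poles at z = 0.5 (residue 1) and z = 2 (residue 3).
    f = lambda z: 1.0 / (z - 0.5) + 3.0 / (z - 2.0)
    # The unit circle encloses only z = 0.5, so the theorem predicts 2*pi*i * 1.
    print(contour_integral_circle(f), 2j * math.pi)
    # A circle of radius 3 encloses both poles, so it predicts 2*pi*i * (1 + 3).
    print(contour_integral_circle(f, radius=3.0), 8j * math.pi)
```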

Fourier Transform

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly functions of time or space are transformed, which will output a function depending on temporal frequency or spatial frequency respectively. That process is also called ''analysis''. An example application would be decomposing the waveform of a musical chord into terms of the intensity of its constituent pitches. The term ''Fourier transform'' refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid with that ...
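
The idea of reading off frequency content from magnitudes can be illustrated with the discrete Fourier transform, the sampled analogue of the continuous transform described above. This is a sketch under stated assumptions: the naive O(N^2) `dft` function, the 16-sample signal and the chosen frequency are illustrative, and a practical implementation would use a fast Fourier transform instead.

```python
import cmath
import math

def dft(x):
    """Naive discrete Fourier transform: X[k] = sum_t x[t] * exp(-2*pi*i*k*t/N)."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]

if __name__ == "__main__":
    n = 16
    # A cosine completing exactly 3 cycles over the 16 samples.
    signal = [math.cos(2.0 * math.pi * 3 * t / n) for t in range(n)]
    spectrum = dft(signal)
    for k, value in enumerate(spectrum):
        print(k, round(abs(value), 6))
```

For a real cosine the energy appears in two bins, k = 3 and its mirror k = N - 3, each with magnitude N/2, while the remaining bins are zero up to rounding error.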