
Turbo Codes

In information theory, turbo codes (originally in French ''Turbocodes'') are a class of high-performance forward error correction (FEC) codes developed around 1990–91 but first published in 1993. They were the first practical codes to closely approach the maximum channel capacity, or Shannon limit, a theoretical maximum for the code rate at which reliable communication is still possible given a specific noise level. Turbo codes are used in 3G/4G mobile communications (e.g., in UMTS and LTE) and in (deep-space) satellite communications, as well as in other applications where designers seek reliable information transfer over bandwidth- or latency-constrained communication links in the presence of data-corrupting noise. Turbo codes compete with low-density parity-check (LDPC) codes, which provide similar performance. The name "turbo code" arose from the feedback loop used during normal turbo code decoding, which was analogized to the exhaust feedback used for engine tur ...

Information Theory

Information theory is the scientific study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome of a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual informat ...
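
The coin-versus-die comparison can be checked numerically. The following is a minimal sketch that assumes the standard Shannon entropy formula H = -sum(p * log2(p)), measured in bits; the function name `entropy_bits` is chosen here for illustration only.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

coin = [1 / 2] * 2  # fair coin: two equally likely outcomes
die = [1 / 6] * 6   # fair die: six equally likely outcomes

print(f"coin: {entropy_bits(coin):.3f} bits")  # coin: 1.000 bits
print(f"die:  {entropy_bits(die):.3f} bits")   # die:  2.585 bits
```

The die carries more entropy because its outcome is spread over more equally likely values.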

Repeat-accumulate Code

In computer science, repeat-accumulate codes (RA codes) are a low-complexity class of error-correcting codes. They were devised so that their ensemble weight distributions are easy to derive. RA codes were introduced by Divsalar ''et al.'' In an RA code, an information block of length N is repeated q times, scrambled by an interleaver of size qN, and then encoded by a rate-1 accumulator. The accumulator can be viewed as a truncated rate-1 recursive convolutional encoder with transfer function 1/(1 + D), but Divsalar ''et al.'' prefer to think of it as a block code whose input block (z_1, \ldots, z_n) and output block (x_1, \ldots, x_n) are related by the formula x_1 = z_1 and x_i = x_{i-1} + z_i for i > 1. The encoding time for RA codes is linear and their rate is 1/q. They are nonsystematic.

Irregular repeat-accumulate (IRA) codes build on the ideas of RA codes. IRA replaces the outer code in an RA code with a low-density generator matrix code. IRA codes first repeat information bits different times, ...
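
The three RA encoding stages (repeat, interleave, accumulate) can be sketched as below. This is an illustration under stated assumptions rather than a reference implementation: the function name `ra_encode`, the seeded random permutation used as the interleaver, and the choice q = 3 are all hypothetical, and addition is taken modulo 2 (XOR).

```python
import random

def ra_encode(info_bits, q, permutation):
    """Repeat the block q times, interleave it, then accumulate with a running XOR."""
    repeated = list(info_bits) * q                    # repetition: length q * N
    interleaved = [repeated[j] for j in permutation]  # scramble with the interleaver
    out, acc = [], 0
    for z in interleaved:
        acc ^= z          # x_i = x_{i-1} + z_i (mod 2), with x_0 taken as 0
        out.append(acc)
    return out

info = [1, 0, 1, 1]                 # hypothetical information block, N = 4
q = 3                               # hypothetical repetition factor
perm = list(range(q * len(info)))
random.Random(0).shuffle(perm)      # assumed interleaver: a fixed pseudo-random permutation
codeword = ra_encode(info, q, perm)
print(len(info), "->", len(codeword), "bits")
```

Each stage is a single pass over the data, which is consistent with the linear encoding time noted above, and the output is q times longer than the input, giving rate 1/q.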

BCJR Algorithm

The BCJR algorithm is an algorithm for maximum a posteriori decoding of error-correcting codes defined on trellises (principally convolutional codes). The algorithm is named after its inventors: Bahl, Cocke, Jelinek and Raviv (L. Bahl, J. Cocke, F. Jelinek, and J. Raviv, "Optimal Decoding of Linear Codes for minimizing symbol error rate", IEEE Transactions on Information Theory, vol. IT-20(2), pp. 284-287, March 1974). This algorithm is critical to modern iteratively decoded error-correcting codes, including turbo codes and low-density parity-check codes.

Steps involved, based on the trellis:
* Compute forward probabilities \alpha
* Compute backward probabilities \beta
* Compute smoothed probabilities based on other information (e.g. noise variance for AWGN, bit crossover probability for a binary symmetric channel)

Variations:
* SBGT BCJR: Berrou, Glavieux and Thitimajshima simplification.
* Log-MAP BCJR: P. Robertson, P. Hoeher and E. Villebrun, "Optim ...
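
The forward, backward, and combining steps can be illustrated on a deliberately tiny trellis. The sketch below is not taken from the cited paper: it assumes a two-state accumulator code (output x_i = x_{i-1} XOR u_i, state equal to the previous output), a binary symmetric channel with crossover probability `p`, equally likely input bits, and an unterminated trellis. The function name `bcjr_accumulator` is hypothetical, and probabilities are left unnormalized, which is only safe for short blocks.

```python
def bcjr_accumulator(received, p, prior1=0.5):
    """Return P(u_i = 1 | received) for each input bit of the toy accumulator code."""
    n = len(received)

    def gamma(s, u, y):
        # Branch metric: P(u) * P(y | x), where x = s XOR u is the transmitted bit.
        x = s ^ u
        likelihood = (1 - p) if x == y else p
        return likelihood * (prior1 if u else 1 - prior1)

    # Forward probabilities alpha[i][s]: in state s after i steps, jointly with y_1..y_i.
    alpha = [[0.0, 0.0] for _ in range(n + 1)]
    alpha[0][0] = 1.0  # the encoder is assumed to start in state 0
    for i in range(n):
        for s in (0, 1):
            for u in (0, 1):
                alpha[i + 1][s ^ u] += alpha[i][s] * gamma(s, u, received[i])

    # Backward probabilities beta[i][s]: future observations given state s at step i.
    beta = [[0.0, 0.0] for _ in range(n + 1)]
    beta[n] = [1.0, 1.0]  # unterminated trellis: any final state is allowed
    for i in range(n - 1, -1, -1):
        for s in (0, 1):
            for u in (0, 1):
                beta[i][s] += gamma(s, u, received[i]) * beta[i + 1][s ^ u]

    # Combine alpha, gamma, and beta over every branch labelled with each input value.
    posteriors = []
    for i in range(n):
        w = [0.0, 0.0]
        for s in (0, 1):
            for u in (0, 1):
                w[u] += alpha[i][s] * gamma(s, u, received[i]) * beta[i + 1][s ^ u]
        posteriors.append(w[1] / (w[0] + w[1]))
    return posteriors

print(bcjr_accumulator([1, 1, 0, 1], p=0.1))
```

Practical decoders usually normalize \alpha and \beta at each step or work in the log domain to avoid numerical underflow on long blocks.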

Additive White Gaussian Noise

Additive white Gaussian noise (AWGN) is a basic noise model used in information theory to mimic the effect of many random processes that occur in nature. The modifiers denote specific characteristics:
* ''Additive'' because it is added to any noise that might be intrinsic to the information system.
* ''White'' refers to the idea that it has uniform power across the frequency band for the information system. It is an analogy to the color white, which has uniform emissions at all frequencies in the visible spectrum.
* ''Gaussian'' because it has a normal distribution in the time domain with an average time domain value of zero.

Wideband noise comes from many natural noise sources, such as the thermal vibrations of atoms in conductors (referred to as thermal noise or Johnson–Nyquist noise), shot noise, black-body radiation from the earth and other warm objects, and from celestial sources such as the Sun. The central limit theorem of probability theory indicates that the summation ...
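
A discrete-time version of this model can be sketched by adding independent zero-mean Gaussian samples to a signal. The example below is illustrative only: the function name `awgn`, the BPSK mapping (bit 0 to +1, bit 1 to -1), the noise standard deviation of 0.5, and the fixed seed are all assumptions.

```python
import random

def awgn(signal, sigma, rng):
    """Add an independent zero-mean Gaussian sample (std. dev. sigma) to each value."""
    return [s + rng.gauss(0.0, sigma) for s in signal]

rng = random.Random(1)                     # fixed seed so the run is repeatable
bits = [rng.randint(0, 1) for _ in range(10000)]
tx = [1.0 - 2.0 * b for b in bits]         # assumed BPSK mapping: 0 -> +1, 1 -> -1
rx = awgn(tx, 0.5, rng)

noise = [r - t for r, t in zip(rx, tx)]    # additive: received = sent + noise
mean = sum(noise) / len(noise)
var = sum((v - mean) ** 2 for v in noise) / len(noise)
print(f"noise mean ~ {mean:.3f}, variance ~ {var:.3f}")
```

Because each noise sample is drawn independently, the sequence has no correlation between samples, which is the discrete-time counterpart of a flat ("white") spectrum.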

Turbo Decoder

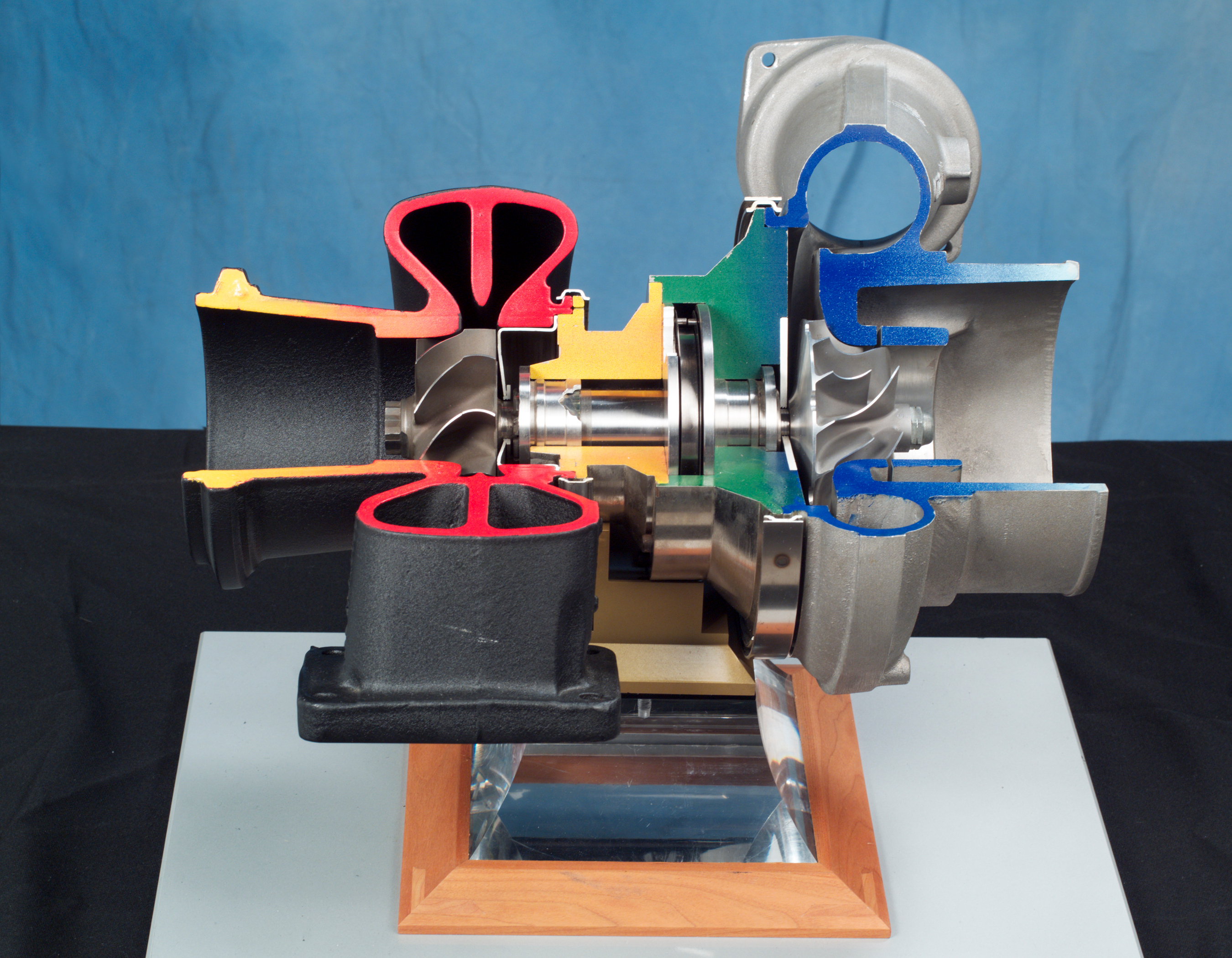

In an internal combustion engine, a turbocharger (often called a turbo) is a forced induction device that is powered by the flow of exhaust gases. It uses this energy to compress the intake gas, forcing more air into the engine in order to produce more power for a given displacement. The current categorisation is that a turbocharger is powered by the kinetic energy of the exhaust gases, whereas a supercharger is mechanically powered (usually by a belt from the engine's crankshaft). However, up until the mid-20th century, a turbocharger was called a "turbosupercharger" and was considered a type of supercharger. Prior to the invention of the turbocharger, ...

Interleaver

In computing, telecommunication, information theory, and coding theory, an error correction code (ECC), sometimes called an error-correcting code, is used for controlling errors in data over unreliable or noisy communication channels. The central idea is that the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a h ...
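
The Hamming (7,4) code mentioned above makes the idea of correcting without retransmission concrete. The sketch below assumes one common bit layout, with parity bits at positions 1, 2 and 4 of the 7-bit codeword; the function names are chosen for illustration.

```python
def hamming74_encode(data):
    """Encode 4 data bits as [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # covers codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # covers codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(codeword):
    """Correct at most one flipped bit, then return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3   # syndrome = 1-based index of the bad bit, 0 if none
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]

sent = hamming74_encode([1, 0, 1, 1])
corrupted = list(sent)
corrupted[4] ^= 1                     # the channel flips one bit
print(hamming74_decode(corrupted))    # [1, 0, 1, 1]
```

Three parity bits protect four data bits, which is the fixed overhead the passage refers to: every 4-bit message costs 7 transmitted bits.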

Permutation

In mathematics, a permutation of a set is, loosely speaking, an arrangement of its members into a sequence or linear order, or if the set is already ordered, a rearrangement of its elements. The word "permutation" also refers to the act or process of changing the linear order of an ordered set. Permutations differ from combinations, which are selections of some members of a set regardless of order. For example, written as tuples, there are six permutations of the set {1, 2, 3}, namely (1, 2, 3), (1, 3, 2), (2, 1, 3), (2, 3, 1), (3, 1, 2), and (3, 2, 1). These are all the possible orderings of this three-element set. Anagrams of words whose letters are different are also permutations: the letters are already ordered in the original word, and the anagram is a reordering of the letters. The study of permutations of finite sets is an important topic in the fields of combinatorics and group theory. Permutations are used in almost every branch of mathematics, and in many other fields of scie ...
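
The six orderings listed above can be enumerated directly. This short sketch uses Python's standard `itertools.permutations`; the variable names are arbitrary.

```python
from itertools import permutations

perms = list(permutations([1, 2, 3]))
print(len(perms))  # 6
print(perms)       # [(1, 2, 3), (1, 3, 2), (2, 1, 3), (2, 3, 1), (3, 1, 2), (3, 2, 1)]
```

For a set of n distinct elements the count is n!, so three elements give 3! = 6 orderings.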

Punctured Code

In coding theory, puncturing is the process of removing some of the parity bits after encoding with an error-correction code. This has the same effect as encoding with an error-correction code with a higher rate, or less redundancy. However, with puncturing the same decoder can be used regardless of how many bits have been punctured, so puncturing considerably increases the flexibility of the system without significantly increasing its complexity. In some cases, a predefined puncturing pattern is used in the encoder; the inverse operation, known as depuncturing, is then implemented by the decoder. Puncturing is used in UMTS during the rate matching process. It is also used in Wi-Fi, Wi-SUN, GPRS, EDGE, DVB-T and DAB, as well as in the DRM standards. Puncturing is often used with the Viterbi algorithm in coding systems. During the Radio Resource Control (RRC) connection setup procedure, when the NBAP radio link setup message is sent, the uplink puncturing limit is sent to ...
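
Puncturing and depuncturing with a fixed pattern can be sketched as follows. The pattern `[1, 1, 1, 0]`, the function names, and the use of `None` as an erasure marker are assumptions for illustration and are not taken from any of the standards named above.

```python
def puncture(bits, pattern):
    """Keep bits[i] only where the repeating pattern has a 1."""
    return [b for i, b in enumerate(bits) if pattern[i % len(pattern)]]

def depuncture(received, pattern, length, erasure=None):
    """Put an erasure marker back at every punctured position."""
    it = iter(received)
    return [next(it) if pattern[i % len(pattern)] else erasure
            for i in range(length)]

coded = [1, 0, 1, 1, 0, 0, 1, 0]    # e.g. 4 information bits after a rate-1/2 code
pattern = [1, 1, 1, 0]              # hypothetical pattern: drop every fourth coded bit

sent = puncture(coded, pattern)
print(sent)                                     # [1, 0, 1, 0, 0, 1]
print(depuncture(sent, pattern, len(coded)))    # [1, 0, 1, None, 0, 0, 1, None]
```

Here 8 coded bits shrink to 6, raising the rate from 1/2 to 2/3. The decoder sees a full-length sequence again and treats the erased positions as carrying no information, which is why the same decoder can serve several rates.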

Robert G

The name Robert is an ancient Germanic given name, from Proto-Germanic elements meaning "fame" and "bright" (''Hrōþiberhtaz''). Compare Old Dutch ''Robrecht'' and Old High German ''Hrodebert'' (a compound of ''Hruod'' (Old Norse: ''Hróðr'') "fame, glory, honour, praise, renown" and ''berht'' "bright, light, shining"). It is the second most frequently used given name of ancient Germanic origin. It is also in use as a surname. Another commonly used form of the name is Rupert. After becoming widely used in Continental Europe, it entered England in its Old French form ''Robert'', where an Old English cognate form (''Hrēodbēorht'', ''Hrodberht'', ''Hrēodbēorð'', ''Hrœdbœrð'', ''Hrœdberð'', ''Hrōðberχtŕ'') had existed before the Norman Conquest. The feminine version is Roberta. The Italian, Portuguese, and Spanish form is Roberto. Robert is also a common name in many Germanic languages, including English, German, Dutch, Norwegian, Swedish, Scots, Danish, and Icelandic. It can be use ...

Convolutional Code

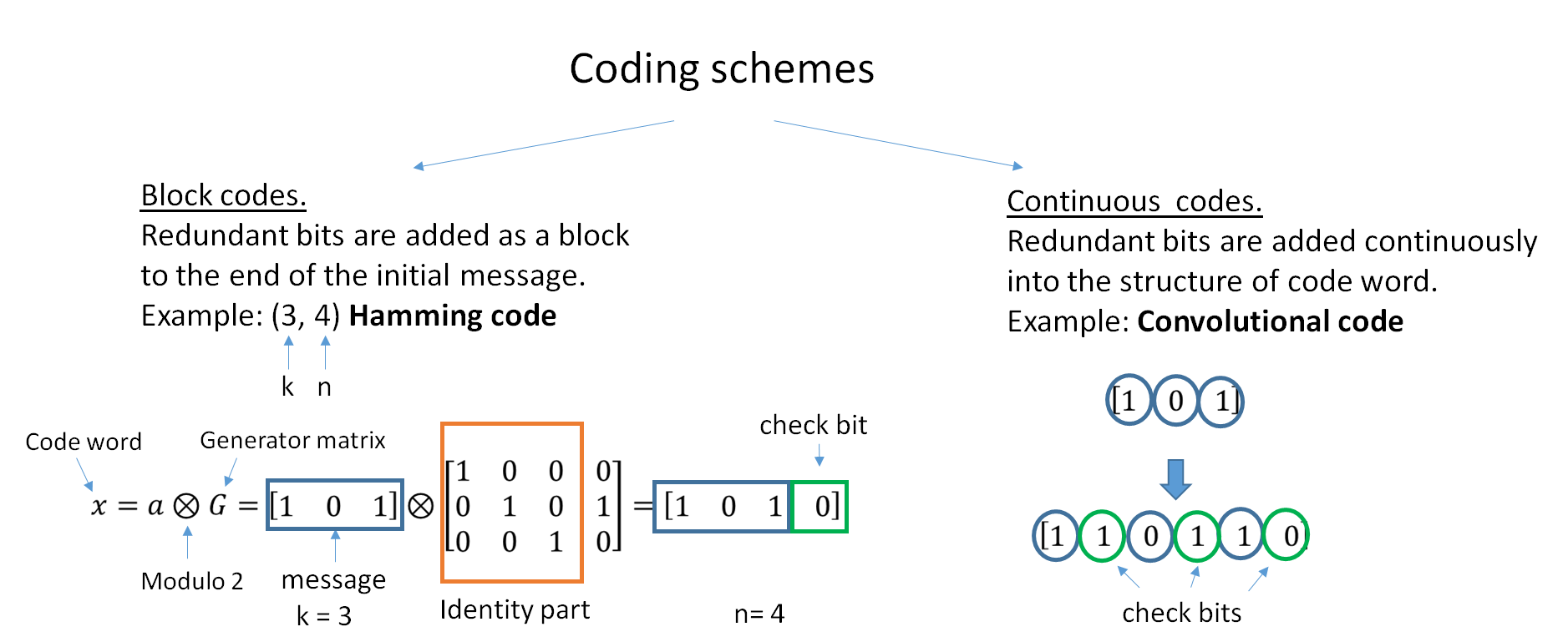

In telecommunication, a convolutional code is a type of error-correcting code that generates parity symbols via the sliding application of a boolean polynomial function to a data stream. The sliding application represents the 'convolution' of the encoder over the data, which gives rise to the term 'convolutional coding'. The sliding nature of convolutional codes facilitates trellis decoding using a time-invariant trellis. Time-invariant trellis decoding allows convolutional codes to be maximum-likelihood soft-decision decoded with reasonable complexity. The ability to perform economical maximum-likelihood soft-decision decoding is one of the major benefits of convolutional codes. This is in contrast to classic block codes, which are generally represented by a time-variant trellis and therefore are typically hard-decision decoded. Convolutional codes are often characterized by the base code rate and the depth (or memory) of the encoder, [n, k, K]. The base code rate is ty ...
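
The "sliding application of a boolean polynomial" can be illustrated with a small encoder. The sketch below assumes a rate-1/2 code with constraint length 3 and the widely used textbook generator polynomials 7 and 5 (octal); these parameters and the function name `conv_encode` are assumptions for the example, not values taken from the text.

```python
def conv_encode(bits, generators=(0b111, 0b101), constraint_length=3):
    """Slide a K-bit window over the input and emit one parity bit per generator."""
    mask = (1 << constraint_length) - 1
    window = 0   # shift register holding the K most recent input bits
    out = []
    for b in bits:
        window = ((window << 1) | b) & mask
        for g in generators:
            # Parity (XOR) of the window bits selected by this generator polynomial.
            out.append(bin(window & g).count("1") % 2)
    return out

print(conv_encode([1, 0, 1, 1]))  # [1, 1, 1, 0, 0, 0, 0, 1]
```

Each input bit produces two output bits, and the same window-and-XOR rule is applied at every step, which is what makes the trellis time-invariant.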