|

Tagged Architecture

In computer science, a tagged architecture is a type of computer architecture where every word (data type), word of memory constitutes a tagged union, being divided into a number of bits of data, and a ''tag'' section that describes the type of the data: how it is to be interpreted, and, if it is a reference, the type of the object that it points to. Precursors Some early systems use tagging of data in memory but do not have all of the characteristics now consider to be part of tagged architectures. RCA 601 The RCA 601 has a 3-bit tag register and a 3-bit tag for every 24-bit half-word. Every instruction can request a test for equal or unequal tag, and cause a maskable interrupt if the specified match fails. There is no architectural connection between the tag and the contents of the half-word; it is strictly determined by the software. Burroughs B5000, B5500 and B5700 The Burroughs B5000, B5500 and B5700 have 48-bit words with no appended tag field. However, while there are ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans Theoretical computer science, theoretical disciplines (such as algorithms, theory of computation, and information theory) to Applied science, applied disciplines (including the design and implementation of Computer architecture, hardware and Software engineering, software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Wayback Machine

The Wayback Machine is a digital archive of the World Wide Web founded by Internet Archive, an American nonprofit organization based in San Francisco, California. Launched for public access in 2001, the service allows users to go "back in time" to see how websites looked in the past. Founders Brewster Kahle and Bruce Gilliat developed the Wayback Machine to provide "universal access to all knowledge" by preserving archived copies of defunct web pages. The Wayback Machine's earliest archives go back at least to 1995, and by the end of 2009, more than 38.2 billion webpages had been saved. As of November 2024, the Wayback Machine has archived more than 916 billion web pages and well over 100 petabytes of data. History The Internet Archive has been archiving cached web pages since at least 1995. One of the earliest known pages was archived on May 8, 1995. Internet Archive founders Brewster Kahle and Bruce Gilliat launched the Wayback Machine in San Francisco, California ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Executable-space Protection

In computer security, executable-space protection marks memory regions as non-executable, such that an attempt to execute machine code in these regions will cause an exception. It relies on hardware features such as the NX bit (no-execute bit), or on software emulation when hardware support is unavailable. Software emulation often introduces a performance cost, or overhead (extra processing time or resources), while hardware-based NX bit implementations have no measurable performance impact. The Burroughs large systems, starting with the Burroughs 5000 introduced in 1961, implemented executable-space protection using a tagged architecture. All accesses to code and data took place through descriptors, which had memory tags preventing them from being modified; descriptors for code did not allow the code to be modified, and descriptors for data did not allow the data ta be executed as code. Today, operating systems use executable-space protection to mark writable memory areas, s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Supercomputers

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2022, supercomputers have existed which can perform over 1018 FLOPS, so called exascale supercomputers. For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (1011) to tens of teraFLOPS (1013). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in various fields, including quantum mechan ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Elbrus (computer)

The Elbrus () is a line of Soviet and Russian computer systems developed by the Lebedev Institute of Precision Mechanics and Computer Engineering. These computers are used in the space program, nuclear weapons research, and defense systems, as well as for theoretical and researching purposes, such as an experimental Refal and CLU translators. History Historically, computers under the ''Elbrus'' brand comprised several different instruction set architectures (ISAs). The first of them was the line of the large fourth-generation computers, developed by Vsevolod Burtsev. These were heavily influenced by the Burroughs large systems and similarly to them implemented tagged architecture and a variant of ALGOL-68 as system programming language. After that Burtsev retired, and new Lebedev's chief developer, Boris Babayan, introduced the completely new system architecture. Differing completely from the architecture of both Elbrus 1 and Elbrus 2, it employed a very long instructio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

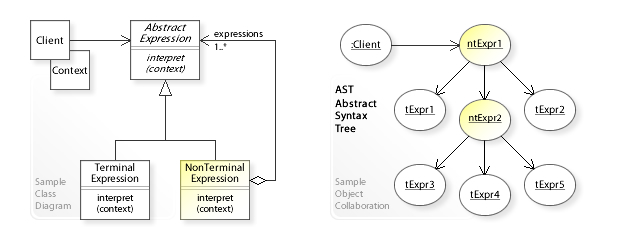

Interpreter (computing)

In computer science, an interpreter is a computer program that directly executes instructions written in a programming or scripting language, without requiring them previously to have been compiled into a machine language program. An interpreter generally uses one of the following strategies for program execution: # Parse the source code and perform its behavior directly; # Translate source code into some efficient intermediate representation or object code and immediately execute that; # Explicitly execute stored precompiled bytecode made by a compiler and matched with the interpreter's virtual machine. Early versions of Lisp programming language and minicomputer and microcomputer BASIC dialects would be examples of the first type. Perl, Raku, Python, MATLAB, and Ruby are examples of the second, while UCSD Pascal is an example of the third type. Source programs are compiled ahead of time and stored as machine independent code, which is then linked at run-ti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

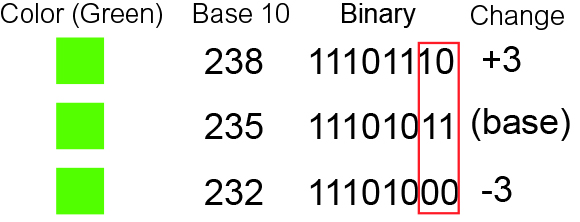

Bit Shift

In computer programming, a bitwise operation operates on a bit string, a bit array or a binary numeral (considered as a bit string) at the level of its individual bits. It is a fast and simple action, basic to the higher-level arithmetic operations and directly supported by the processor. Most bitwise operations are presented as two-operand instructions where the result replaces one of the input operands. On simple low-cost processors, typically, bitwise operations are substantially faster than division, several times faster than multiplication, and sometimes significantly faster than addition. While modern processors usually perform addition and multiplication just as fast as bitwise operations due to their longer instruction pipelines and other architectural design choices, bitwise operations do commonly use less power because of the reduced use of resources. Bitwise operators In the explanations below, any indication of a bit's position is counted from the right (least si ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Memory Alignment

Data structure alignment is the way data is arranged and accessed in computer memory. It consists of three separate but related issues: data alignment, data structure padding, and packing. The CPU in modern computer hardware performs reads and writes to memory most efficiently when the data is ''naturally aligned'', which generally means that the data's memory address is a multiple of the data size. For instance, in a 32-bit architecture, the data may be aligned if the data is stored in four consecutive bytes and the first byte lies on a 4-byte boundary. ''Data alignment'' is the aligning of elements according to their natural alignment. To ensure natural alignment, it may be necessary to insert some ''padding'' between structure elements or after the last element of a structure. For example, on a 32-bit machine, a data structure containing a 16-bit value followed by a 32-bit value could have 16 bits of ''padding'' between the 16-bit value and the 32-bit value to align the 32 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Least-significant Bit

In computing, bit numbering is the convention used to identify the bit positions in a binary number. Bit significance and indexing In computing, the least significant bit (LSb) is the bit position in a binary integer representing the lowest-order place of the integer. Similarly, the most significant bit (MSb) represents the highest-order place of the binary integer. The LSb is sometimes referred to as the ''low-order bit''. Due to the convention in positional notation of writing less significant digits further to the right, the LSb also might be referred to as the ''right-most bit''. The MSb is similarly referred to as the ''high-order bit'' or ''left-most bit''. In both cases, the LSb and MSb correlate directly to the least significant digit and most significant digit of a decimal integer. Bit indexing correlates to the positional notation of the value in base 2. For this reason, bit index is not affected by how the value is stored on the device, such as the value's byte ord ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

High-level Language

A high-level programming language is a programming language with strong abstraction from the details of the computer. In contrast to low-level programming languages, it may use natural language ''elements'', be easier to use, or may automate (or even hide entirely) significant areas of computing systems (e.g. memory management), making the process of developing a program simpler and more understandable than when using a lower-level language. The amount of abstraction provided defines how "high-level" a programming language is. In the 1960s, a high-level programming language using a compiler was commonly called an '' autocode''. Examples of autocodes are COBOL and Fortran. The first high-level programming language designed for computers was Plankalkül, created by Konrad Zuse. However, it was not implemented in his time, and his original contributions were largely isolated from other developments due to World War II, aside from the language's influence on the "Superplan" langua ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

High-level Language Computer Architecture

A high-level language computer architecture (HLLCA) is a computer architecture designed to be targeted by a specific high-level programming language (HLL), rather than the architecture being dictated by hardware considerations. It is accordingly also termed language-directed computer design, coined in and primarily used in the 1960s and 1970s. HLLCAs were popular in the 1960s and 1970s, but largely disappeared in the 1980s. This followed the dramatic failure of the Intel 432 (1981) and the emergence of optimizing compilers and reduced instruction set computer (RISC) architectures and RISC-like complex instruction set computer (CISC) architectures, and the later development of just-in-time compilation (JIT) for HLLs. A detailed survey and critique can be found in . HLLCAs date almost to the beginning of HLLs, in the Burroughs large systems (1961), which were designed for ALGOL 60 (1960), one of the first HLLs. The best known HLLCAs may be the Lisp machines of the 1970s and 1980s, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |