|

Two-step M-estimator

Two-step M-estimators deals with M-estimation problems that require preliminary estimation to obtain the parameter of interest. Two-step M-estimation is different from usual M-estimation problem because asymptotic distribution of the second-step estimator generally depends on the first-step estimator. Accounting for this change in asymptotic distribution is important for valid inference. Description The class of two-step M-estimators includes Heckman's sample selection estimator, weighted non-linear least squares, and ordinary least squares with generated regressors.Wooldridge, J.M., Econometric Analysis of Cross Section and Panel Data, MIT Press, Cambridge, Mass. To fix ideas, let \^n_ \subseteq R^d be an i.i.d. sample. \Theta and \Gamma are subsets of Euclidean spaces R^p and R^q , respectively. Given a function m(;;;): R^d \times \Theta \times \Gamma\rightarrow R , two-step M-estimator \hat\theta is defined as: :\hat \theta:=\arg\max_\frac\sum_m\bigl(W_,\theta,\hat\gamm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

M-estimation

In statistics, M-estimators are a broad class of extremum estimators for which the objective function is a sample average. Both non-linear least squares and maximum likelihood estimation are special cases of M-estimators. The definition of M-estimators was motivated by robust statistics, which contributed new types of M-estimators. However, M-estimators are not inherently robust, as is clear from the fact that they include maximum likelihood estimators, which are in general not robust. The statistical procedure of evaluating an M-estimator on a data set is called M-estimation. The "M" initial stands for "maximum likelihood-type". More generally, an M-estimator may be defined to be a zero of an estimating function. This estimating function is often the derivative of another statistical function. For example, a maximum-likelihood estimate is the point where the derivative of the likelihood function with respect to the parameter is zero; thus, a maximum-likelihood estimator is a crit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Maximum Likelihood Estimator

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when the random errors are assumed to have normal distributions with the same variance. From the perspective of Bayesian inference ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Estimator

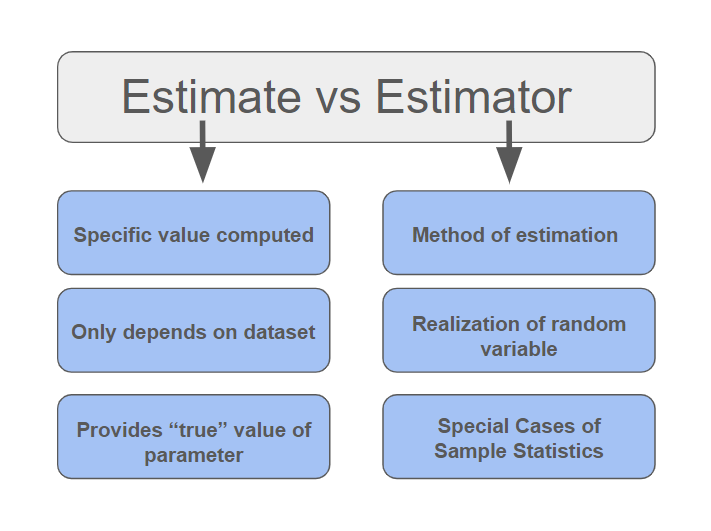

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on Sample (statistics), observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point estimator, point and interval estimators. The point estimators yield single-valued results. This is in contrast to an interval estimator, where the result would be a range of plausible values. "Single value" does not necessarily mean "single number", but includes vector valued or function valued estimators. ''Estimation theory'' is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. Howeve ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

M-estimators

In statistics, M-estimators are a broad Class (mathematics), class of extremum estimators for which the objective function is a sample average. Both non-linear least squares and maximum likelihood estimation are special cases of M-estimators. The definition of M-estimators was motivated by robust statistics, which contributed new types of M-estimators. However, M-estimators are not inherently robust, as is clear from the fact that they include maximum likelihood estimators, which are in general not robust. The statistical procedure of evaluating an M-estimator on a data set is called M-estimation. The "M" initial stands for "maximum likelihood-type". More generally, an M-estimator may be defined to be a zero of an estimating equations, estimating function. This estimating function is often the derivative of another statistical function. For example, a maximum likelihood estimation, maximum-likelihood estimate is the point where the derivative of the likelihood function with respect ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Adaptive Estimator

In statistics, an adaptive estimator is an estimator in a parametric or semiparametric model with nuisance parameters such that the presence of these nuisance parameters does not affect efficiency of estimation. Definition Formally, let parameter ''θ'' in a parametric model consists of two parts: the parameter of interest , and the nuisance parameter . Thus . Then we will say that \scriptstyle\hat\nu_n is an adaptive estimator of ''ν'' in the presence of ''η'' if this estimator is regular, and efficient for each of the submodels : \mathcal_\nu(\eta_0) = \big\. Adaptive estimator estimates the parameter of interest equally well regardless whether the value of the nuisance parameter is known or not. The necessary condition for a regular parametric model to have an adaptive estimator is that : I_(\theta) = \operatorname , z_\nu z_\eta' \,= 0 \quad \text\theta, where ''z''''ν'' and ''z''''η'' are components of the score function corresponding to parameters ''ν ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Generalized Method Of Moments

In econometrics and statistics, the generalized method of moments (GMM) is a generic method for estimating parameters in statistical models. Usually it is applied in the context of semiparametric models, where the parameter of interest is finite-dimensional, whereas the full shape of the data's distribution function may not be known, and therefore maximum likelihood estimation is not applicable. The method requires that a certain number of ''moment conditions'' be specified for the model. These moment conditions are functions of the model parameters and the data, such that their expectation is zero at the parameters' true values. The GMM method then minimizes a certain norm of the sample averages of the moment conditions, and can therefore be thought of as a special case of minimum-distance estimation. The GMM estimators are known to be consistent, asymptotically normal, and most efficient in the class of all estimators that do not use any extra information aside from that conta ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Generalized Least Squares

In statistics, generalized least squares (GLS) is a method used to estimate the unknown parameters in a Linear regression, linear regression model. It is used when there is a non-zero amount of correlation between the Residual (statistics), residuals in the regression model. GLS is employed to improve efficiency_(statistics), statistical efficiency and reduce the risk of drawing erroneous inferences, as compared to conventional least squares and weighted least squares methods. It was first described by Alexander Aitken in 1935. It requires knowledge of the covariance matrix for the residuals. If this is unknown, estimating the covariance matrix gives the method of feasible generalized least squares (FGLS). However, FGLS provides fewer guarantees of improvement. Method In standard linear regression models, one observes data \_ on ''n'' statistical units with ''k'' − 1 predictor values and one response value each. The response values are placed in a vector,\mathbf ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Generated Regressor

In least squares estimation problems, sometimes one or more regressors specified in the model are not observable. One way to circumvent this issue is to estimate or generate regressors from observable data. This generated regressor method is also applicable to unobserved instrumental variables. Under some regularity conditions, consistency and asymptotic normality of least squares estimator is preserved, but asymptotic variance has a different form in general. Suppose the model of interest is the following: :y_=g(x_,x_,\beta)+u_ where g is a conditional mean function and its form is known up to finite-dimensional parameter β. Here x_ is not observable, but we know that x_=h(w_,\gamma) for some function ''h'' known up to parameter \gamma, and a random sample y_=g(x_,x_,\beta)+u_ is available. Suppose we have a consistent estimator \hat\gamma of \gamma that uses the observation w_'s. Then, β can be estimated by (Non-Linear) Least Squares using \hat=h(w_,\hat\gamma). Some exampl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Conditional Independence

In probability theory, conditional independence describes situations wherein an observation is irrelevant or redundant when evaluating the certainty of a hypothesis. Conditional independence is usually formulated in terms of conditional probability, as a special case where the probability of the hypothesis given the uninformative observation is equal to the probability without. If A is the hypothesis, and B and C are observations, conditional independence can be stated as an equality: :P(A\mid B,C) = P(A \mid C) where P(A \mid B, C) is the probability of A given both B and C. Since the probability of A given C is the same as the probability of A given both B and C, this equality expresses that B contributes nothing to the certainty of A. In this case, A and B are said to be conditionally independent given C, written symbolically as: (A \perp\!\!\!\perp B \mid C). The concept of conditional independence is essential to graph-based theories of statistical inference, as it estab ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Asymptotic Efficiency

In statistics, efficiency is a measure of quality of an estimator, of an experimental design, or of a hypothesis testing procedure. Essentially, a more efficient estimator needs fewer input data or observations than a less efficient one to achieve the Cramér–Rao bound. An ''efficient estimator'' is characterized by having the smallest possible variance, indicating that there is a small deviance between the estimated value and the "true" value in the L2 norm sense. The relative efficiency of two procedures is the ratio of their efficiencies, although often this concept is used where the comparison is made between a given procedure and a notional "best possible" procedure. The efficiencies and the relative efficiency of two procedures theoretically depend on the sample size available for the given procedure, but it is often possible to use the asymptotic relative efficiency (defined as the limit of the relative efficiencies as the sample size grows) as the principal comparison ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

First-order Condition

In calculus, a derivative test uses the derivatives of a function to locate the critical points of a function and determine whether each point is a local maximum, a local minimum, or a saddle point. Derivative tests can also give information about the concavity of a function. The usefulness of derivatives to find extrema is proved mathematically by Fermat's theorem of stationary points. First-derivative test The first-derivative test examines a function's monotonic properties (where the function is increasing or decreasing), focusing on a particular point in its domain. If the function "switches" from increasing to decreasing at the point, then the function will achieve a highest value at that point. Similarly, if the function "switches" from decreasing to increasing at the point, then it will achieve a least value at that point. If the function fails to "switch" and remains increasing or remains decreasing, then no highest or least value is achieved. One can examine a funct ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Heckman Correction

The Heckman correction is a statistical technique to correct bias from non-randomly selected samples or otherwise incidentally truncated dependent variables, a pervasive issue in quantitative social sciences when using observational data. Conceptually, this is achieved by explicitly modelling the individual sampling probability of each observation (the so-called selection equation) together with the conditional expectation of the dependent variable (the so-called outcome equation). The resulting likelihood function is mathematically similar to the tobit model for censored dependent variables, a connection first drawn by James Heckman in 1974. Heckman also developed a two-step control function approach to estimate this model, which avoids the computational burden of having to estimate both equations jointly, albeit at the cost of inefficiency. Heckman received the Nobel Memorial Prize in Economic Sciences in 2000 for his work in this field. Method Statistical analyses b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |