
Structured Prediction

Structured prediction or structured (output) learning is an umbrella term for supervised machine learning techniques that involve predicting structured objects, rather than scalar discrete or real values. Similar to commonly used supervised learning techniques, structured prediction models are typically trained by means of observed data in which the true prediction value is used to adjust model parameters. Due to the complexity of the model and the interrelations of predicted variables, prediction using a trained model and training itself are often computationally infeasible, so approximate inference and learning methods are used. Applications: For example, the problem of translating a natural language sentence into a syntactic representation such as a parse tree can be seen as a structured prediction problem in which the structured output domain is the set of all possible parse trees. Structured prediction is also used in a wide variety of application domains i ...

Umbrella Term

In linguistics, semantics, general semantics, and ontologies, hyponymy is a semantic relation between a hyponym denoting a subtype and a hypernym or hyperonym (sometimes called umbrella term or blanket term) denoting a supertype. In other words, the semantic field of the hyponym is included within that of the hypernym. In simpler terms, a hyponym is in a *type-of* relationship with its hypernym. For example, *pigeon*, *crow*, *eagle*, and *seagull* are all hyponyms of *bird*, their hypernym, which itself is a hyponym of *animal*, its hypernym. Hyponyms and hypernyms: Hyponymy shows the relationship between a generic term (hypernym) and a specific instance of it (hyponym). A hyponym is a word or phrase whose semantic field is more specific than its hypernym. The semantic field of a hypernym, also known as a superordinate, is broader than that of a hyponym. An approach to the relationship between hyponyms and hypernyms is to view a hypernym as consisting of hypo ...

Noun

A noun is a word that generally functions as the name of a specific object or set of objects, such as living creatures, places, actions, qualities, states of existence, or ideas. Example nouns for:

* Living creatures (including people, alive, dead or imaginary): *mushrooms, dogs, Afro-Caribbeans, rosebushes, Nelson Mandela, bacteria, Klingons*, etc.
* Physical objects: *hammers, pencils, Earth, guitars, atoms, stones, boots, shadows*, etc.
* Places: *closets, temples, rivers, Antarctica, houses, Grand Canyon, utopia*, etc.
* Actions: *swimming, exercises, diffusions, explosions, flight, electrification, embezzlement*, etc.
* Qualities: *colors, lengths, deafness, weights, roundness, symmetry, warp speed*, etc.
* Mental or physical states of existence: *jealousy, sleep, heat, joy, stomachache, confusion, mind meld*, etc.

Lexical categories (parts of speech) are defined in terms of the ways in which their members combine with other kinds of expressions. The syn ...

Markov Logic Network

A Markov logic network (MLN) is a probabilistic logic which applies the ideas of a Markov network to first-order logic, enabling uncertain inference. Markov logic networks generalize first-order logic, in the sense that, in a certain limit, all unsatisfiable statements have a probability of zero, and all tautologies have probability one. History: Work in this area was begun in 2003 by Pedro Domingos and Matt Richardson, who began to use the term MLN to describe it. Description: Briefly, it is a collection of formulas from first-order logic, to each of which is assigned a real number, the weight. Taken as a Markov network, the vertices of the network graph are atomic formulas, and the edges are the logical connectives used to construct the formula. Each formula is considered to be a clique, and the Markov blanket is the set of formulas in which a given atom appears. A potential function is associated with each formula, and takes the value of one when the formula is true, and zero when ...
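
The weighted-formula idea can be illustrated with a tiny sketch. It assumes the usual log-linear form of an MLN, in which a world's probability is proportional to the exponential of the summed weights of the ground formulas it satisfies; the excerpt above is cut off before stating this. The formula (Smokes implies Cancer, for a single person), the weight `1.5`, and all function names are hypothetical choices made for illustration.

```python
import math
from itertools import product

WEIGHT = 1.5  # hypothetical weight attached to the single formula


def formula_holds(smokes, cancer):
    """Ground formula: Smokes(A) => Cancer(A)."""
    return (not smokes) or cancer


def world_probabilities(weight=WEIGHT):
    """Enumerate all truth assignments and normalise exp(weight * satisfied)."""
    worlds = list(product((False, True), repeat=2))  # (smokes, cancer)
    scores = {
        world: math.exp(weight if formula_holds(*world) else 0.0)
        for world in worlds
    }
    z = sum(scores.values())  # partition function
    return {world: score / z for world, score in scores.items()}


mln_probs = world_probabilities()
violating = mln_probs[(True, False)]  # the only world that breaks the formula
```

The world that violates the formula is less likely than the others but not impossible. Calling `world_probabilities(weight=50.0)` drives its probability toward zero, which is one way to read the "certain limit" in which hard first-order logic is recovered.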

Structured SVM

The structured support-vector machine is a machine learning algorithm that generalizes the Support-Vector Machine (SVM) classifier. Whereas the SVM classifier supports binary classification, multiclass classification and regression, the structured SVM allows training of a classifier for general structured output labels. As an example, a sample instance might be a natural language sentence, and the output label is an annotated parse tree. Training a classifier consists of showing it pairs of sample instances and their correct output labels. After training, the structured SVM model allows one to predict for new sample instances the corresponding output label; that is, given a natural language sentence, the classifier can produce the most likely parse tree. Training: For a set of $n$ training instances $(\boldsymbol{x}_i, y_i) \in \mathcal{X}\times\mathcal{Y}$, $i=1,\dots,n$, from a sample space $\mathcal{X}$ and label space $\mathcal{Y}$, the structured SVM minimizes the following regularized risk function. :\underse ...

Case-based Reasoning

In artificial intelligence and philosophy, case-based reasoning (CBR), broadly construed, is the process of solving new problems based on the solutions of similar past problems. In everyday life, an auto mechanic who fixes an engine by recalling another car that exhibited similar symptoms is using case-based reasoning. A lawyer who advocates a particular outcome in a trial based on legal precedents or a judge who creates case law is using case-based reasoning. So, too, an engineer copying working elements of nature (practicing biomimicry), is treating nature as a database of solutions to problems. Case-based reasoning is a prominent type of analogy solution making. It has been argued that case-based reasoning is not only a powerful method for computer reasoning, but also a pervasive behavior in everyday human problem solving; or, more radically, that all reasoning is based on past cases personally experienced. This view is related to prototype theory, which is most deepl ...

Random Field

In physics and mathematics, a random field is a random function over an arbitrary domain (usually a multi-dimensional space such as $\mathbb{R}^n$). That is, it is a function $f(x)$ that takes on a random value at each point $x \in \mathbb{R}^n$ (or some other domain). It is also sometimes thought of as a synonym for a stochastic process with some restriction on its index set. That is, by modern definitions, a random field is a generalization of a stochastic process where the underlying parameter need no longer be real or integer valued "time" but can instead take values that are multidimensional vectors or points on some manifold. Formal definition: Given a probability space $(\Omega, \mathcal{F}, P)$, an *X*-valued random field is a collection of *X*-valued random variables indexed by elements in a topological space *T*. That is, a random field *F* is a collection $\{F_t : t \in T\}$ where each $F_t$ is an *X*-valued random variable. Examples: In its discrete version, a random field is a list of rando ...

Bayesian Network

A Bayesian network (also known as a Bayes network, Bayes net, belief network, or decision network) is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). Bayesian networks are ideal for taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases. Efficient algorithms can perform inference and learning in Bayesian networks. Bayesian networks that model sequences of variables (e.g. speech signals or protein sequences) are called dynamic Bayesian networks. Generalizations of Bayesian networks that can represent and solve decision problems under uncertainty are called influence diagrams. Graphical mode ...
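
The disease and symptom example can be made concrete with a minimal sketch of inference by enumeration. The three-node network (Flu and Cold as independent causes of Fever) and every probability in it are invented for illustration and do not come from the article; the function names are likewise hypothetical.

```python
from itertools import product

# Hypothetical network: Flu -> Fever <- Cold. All numbers are made up.
P_FLU = 0.1
P_COLD = 0.2
P_FEVER_GIVEN = {  # P(fever = True | flu, cold)
    (True, True): 0.95,
    (True, False): 0.90,
    (False, True): 0.40,
    (False, False): 0.05,
}


def joint(flu, cold, fever):
    """Joint probability, factorised along the edges of the DAG."""
    p = (P_FLU if flu else 1 - P_FLU) * (P_COLD if cold else 1 - P_COLD)
    p_fever = P_FEVER_GIVEN[(flu, cold)]
    return p * (p_fever if fever else 1 - p_fever)


def posterior_flu_given_fever():
    """P(flu | fever), summing the joint over the unobserved variable."""
    numerator = sum(joint(True, cold, True) for cold in (True, False))
    evidence = sum(
        joint(flu, cold, True) for flu, cold in product((True, False), repeat=2)
    )
    return numerator / evidence


p_flu = posterior_flu_given_fever()
```

Observing the symptom raises the probability of flu from the prior of 0.1 to roughly 0.46 under these made-up numbers. Enumeration is exponential in the number of variables, so it is only suitable for toy networks like this one.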

Graphical Model

A graphical model or probabilistic graphical model (PGM) or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. Graphical models are commonly used in probability theory, statistics (particularly Bayesian statistics), and machine learning. Types of graphical models: Generally, probabilistic graphical models use a graph-based representation as the foundation for encoding a distribution over a multi-dimensional space and a graph that is a compact or factorized representation of a set of independences that hold in the specific distribution. Two branches of graphical representations of distributions are commonly used, namely, Bayesian networks and Markov random fields. Both families encompass the properties of factorization and independences, but they differ in the set of independences they can encode and the factorization of the distribution that they induce ...

Viterbi Algorithm

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states, called the Viterbi path, that results in a sequence of observed events, especially in the context of Markov information sources and hidden Markov models (HMM). The algorithm has found universal application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. History: The Viterbi a ...
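
The dynamic program can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production decoder: the two-state health model, its probabilities, and the function and variable names are all assumptions chosen for the example, and probabilities are multiplied directly instead of being summed in log space.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (probability, path) of the most likely hidden-state sequence."""
    # best[t][s]: probability of the best path that ends in state s at time t
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    back = [{}]  # back[t][s]: predecessor of s on that best path
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, prob = max(
                ((r, best[t - 1][r] * trans_p[r][s]) for r in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = prob * emit_p[s][observations[t]]
            back[t][s] = prev
    # Follow the back-pointers from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return best[-1][last], path


# Hypothetical toy model: hidden health state, observed symptoms.
states = ("Healthy", "Fever")
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {
    "Healthy": {"Healthy": 0.7, "Fever": 0.3},
    "Fever": {"Healthy": 0.4, "Fever": 0.6},
}
emit_p = {
    "Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
    "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6},
}
prob, path = viterbi(("normal", "cold", "dizzy"), states, start_p, trans_p, emit_p)
# path is ["Healthy", "Healthy", "Fever"], with probability about 0.01512
```

The running time is proportional to the sequence length times the square of the number of states. For long sequences, a log-space variant avoids numerical underflow.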

Conditional Random Field

Conditional random fields (CRFs) are a class of statistical modeling methods often applied in pattern recognition and machine learning and used for structured prediction. Whereas a classifier predicts a label for a single sample without considering "neighbouring" samples, a CRF can take context into account. To do so, the predictions are modelled as a graphical model, which represents the presence of dependencies between the predictions. What kind of graph is used depends on the application. For example, in natural language processing, "linear chain" CRFs are popular, for which each prediction is dependent only on its immediate neighbours. In image processing, the graph typically connects locations to nearby and/or similar locations to enforce that they receive similar predictions. Other examples where CRFs are used are: labeling or parsing of sequential data for natural language processing or biological sequences, part-of-speech tagging, shallow parsing, named entity recogni ...
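
The difference between an independent classifier and a linear-chain CRF can be sketched with a toy scorer. The labels, the per-position (emission) scores, and the neighbour (transition) scores below are invented for illustration; a real CRF would learn them from data and would use dynamic programming rather than the brute-force enumeration used here.

```python
import math
from itertools import product

LABELS = ("N", "V")
# Hypothetical per-position scores for a three-token input.
EMISSION = [
    {"N": 2.0, "V": 0.5},
    {"N": 0.5, "V": 2.0},
    {"N": 0.5, "V": 0.8},
]
# Hypothetical scores for adjacent label pairs.
TRANSITION = {("N", "N"): 0.0, ("N", "V"): 1.0, ("V", "N"): 1.0, ("V", "V"): -1.0}


def score(labels):
    """Sum of per-position scores plus scores of neighbouring label pairs."""
    total = sum(EMISSION[i][y] for i, y in enumerate(labels))
    total += sum(TRANSITION[(a, b)] for a, b in zip(labels, labels[1:]))
    return total


def sequence_probabilities():
    """Normalise exp(score) over every possible label sequence."""
    sequences = list(product(LABELS, repeat=len(EMISSION)))
    weights = {seq: math.exp(score(seq)) for seq in sequences}
    z = sum(weights.values())  # partition function
    return {seq: w / z for seq, w in weights.items()}


crf_probs = sequence_probabilities()
crf_best = max(crf_probs, key=crf_probs.get)
# Baseline that ignores neighbours: pick the best label at each position.
independent_best = tuple(max(LABELS, key=e.get) for e in EMISSION)
```

With these numbers the independent baseline labels the last token `V`, while the chain model prefers `N` there because a `V` followed by another `V` is penalised. This is the sense in which the CRF takes context into account.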

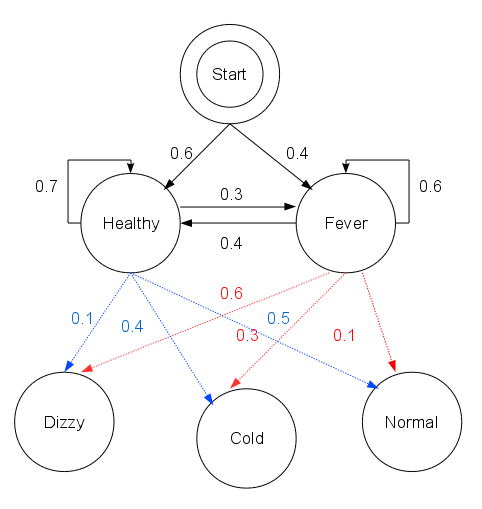

Hidden Markov Model

A hidden Markov model (HMM) is a statistical Markov model in which the system being modeled is assumed to be a Markov process (call it X) with unobservable ("*hidden*") states. As part of the definition, an HMM requires that there be an observable process Y whose outcomes are "influenced" by the outcomes of X in a known way. Since X cannot be observed directly, the goal is to learn about X by observing Y. An HMM has the additional requirement that the outcome of Y at time $t = t_0$ must be "influenced" exclusively by the outcome of X at $t = t_0$, and that the outcomes of X and Y at $t < t_0$ must be conditionally independent of Y at $t = t_0$ given X at $t = t_0$. ... Applications include handwriting recognition, gesture recognition, part-of-speech tagging, musical score following, partial discharges and bioinformatics. Definition: Let $X_n$ and $Y_n$ be discrete-time stochastic processes and $n \geq 1$. The pair $(X_n, Y_n)$ is a *hidden Markov model* if

* $X_n$ is a Markov process whose behavior is not directly observable ("hidden");
* \operatorname\bigl(Y_n \i ...
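
Learning about the hidden process X from the observed process Y can be illustrated with the forward algorithm, which computes the likelihood of an observation sequence and the posterior over the current hidden state. The sketch below is only an illustration: the weather model, its probabilities, and all names are assumptions, not part of the definition above.

```python
def forward(observations, states, start_p, trans_p, emit_p):
    """Return (likelihood of the observations, posterior over the final state)."""
    # alpha[s]: joint probability of the observations so far and being in s now
    alpha = {s: start_p[s] * emit_p[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {
            s: sum(alpha[r] * trans_p[r][s] for r in states) * emit_p[s][obs]
            for s in states
        }
    likelihood = sum(alpha.values())
    posterior = {s: alpha[s] / likelihood for s in states}
    return likelihood, posterior


# Hypothetical toy model: hidden weather (X), observed activity (Y).
hmm_states = ("Rainy", "Sunny")
hmm_start = {"Rainy": 0.6, "Sunny": 0.4}
hmm_trans = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
hmm_emit = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}
likelihood, posterior = forward(
    ("walk", "shop", "clean"), hmm_states, hmm_start, hmm_trans, hmm_emit
)
# likelihood is about 0.033612; the final state is most probably "Rainy"
```

Each emission factor depends only on the hidden state at the same time step, which mirrors the requirement that Y at a given time is influenced exclusively by X at that time.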