
Structural Risk Minimization

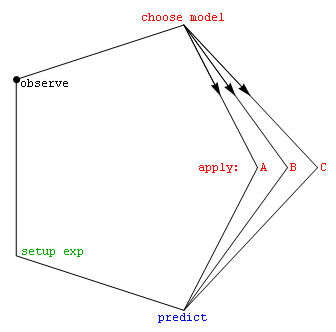

Structural risk minimization (SRM) is an inductive principle used in machine learning. Commonly in machine learning, a generalized model must be selected from a finite data set, with the consequent problem of overfitting: the model becomes too strongly tailored to the particularities of the training set and generalizes poorly to new data. The SRM principle addresses this problem by balancing the model's complexity against its success at fitting the training data. This principle was first set out in a 1974 paper by Vladimir Vapnik and Alexey Chervonenkis and uses the VC dimension. In practical terms, structural risk minimization is implemented by minimizing $E_{train} + \beta H(W)$, where $E_{train}$ is the training error, the function $H(W)$ is called a regularization function, and $\beta$ is a constant. $H(W)$ is chosen such that it takes large values on parameters $W$ that belong to high-capacity subsets of the parameter space. Minimizing $H(W)$ in effect limits the capacity of the accessible subset ...
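
As a rough illustration of the penalized objective described above, the following sketch minimizes training error plus $\beta H(W)$ for a one-parameter model y = w * x. The choice of squared error as the training error, H(w) = w^2 as the regularization function, and all function names and data are assumptions made for this example; the excerpt does not prescribe any of them.

```python
def train_error(w, xs, ys):
    """Mean squared error of the one-parameter model y = w * x (assumed loss)."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def regularizer(w):
    """H(W): here the squared weight, an assumed choice that grows with capacity."""
    return w * w


def srm_objective(w, xs, ys, beta):
    """Training error plus beta times the regularization function."""
    return train_error(w, xs, ys) + beta * regularizer(w)


def fit(xs, ys, beta):
    """Closed-form minimizer of srm_objective for this one-parameter model.

    Setting the derivative to zero gives w = sum(x*y) / (sum(x*x) + n*beta).
    """
    n = len(xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + n * beta)


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # made-up data
    for beta in (0.0, 0.1, 1.0):
        w = fit(xs, ys, beta)
        print(beta, w, srm_objective(w, xs, ys, beta))
```

With beta = 0 the fit reduces to ordinary least squares; larger values of beta pull the weight toward zero, trading some training error for a lower-capacity model.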

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or infeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making predicti ...

Overfitting

In mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitted model is a mathematical model that contains more parameters than can be justified by the data. The essence of overfitting is to have unknowingly extracted some of the residual variation (i.e., the noise) as if that variation represented underlying model structure. Underfitting occurs when a mathematical model cannot adequately capture the underlying structure of the data. An underfitted model is a model in which some parameters or terms that would appear in a correctly specified model are missing. Underfitting would occur, for example, when fitting a linear model to non-linear data. Such a model will tend to have poor predictive performance. The possibility of overfitting exists because the criterion used for selecting the model is no ...
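
To make the idea of "more parameters than can be justified by the data" concrete, here is a minimal sketch comparing a two-parameter straight line with a polynomial that has as many coefficients as there are training points. The data set (a linear trend y = 2x with alternating noise of 0.5), the helper names, and the use of Lagrange interpolation for the high-capacity model are all assumptions chosen for illustration.

```python
def fit_line(xs, ys):
    """Least-squares straight line; returns a prediction function."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def fit_interpolant(xs, ys):
    """Polynomial through every training point (Lagrange form)."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return predict


def mse(model, xs, ys):
    """Mean squared error of a prediction function on a data set."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data: underlying trend y = 2x, training labels carry noise.
TRAIN_X = [0.0, 1.0, 2.0, 3.0, 4.0]
TRAIN_Y = [0.5, 1.5, 4.5, 5.5, 8.5]
TEST_X = [0.5, 1.5, 2.5, 3.5]
TEST_Y = [2.0 * x for x in TEST_X]  # noise-free held-out points

if __name__ == "__main__":
    for name, model in (("line", fit_line(TRAIN_X, TRAIN_Y)),
                        ("interpolant", fit_interpolant(TRAIN_X, TRAIN_Y))):
        print(name, mse(model, TRAIN_X, TRAIN_Y), mse(model, TEST_X, TEST_Y))
```

The interpolant reproduces the training labels exactly, noise included, and does worse than the line on the held-out points, which is the pattern the excerpt describes.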

Vladimir Vapnik

Vladimir Naumovich Vapnik (Russian: Владимир Наумович Вапник; born 6 December 1936) is one of the main developers of the Vapnik–Chervonenkis theory of statistical learning, and the co-inventor of the support-vector machine method and the support-vector clustering algorithm. Early life and education: Vladimir Vapnik was born to a Jewish family in the Soviet Union. He received his master's degree in mathematics from the Uzbek State University, Samarkand, Uzbek SSR in 1958 and his Ph.D. in statistics at the Institute of Control Sciences, Moscow in 1964. He worked at this institute from 1961 to 1990 and became Head of the Computer Science Research Department. Academic career: At the end of 1990, Vladimir Vapnik moved to the USA and joined the Adaptive Systems Research Department at AT&T Bell Labs in Holmdel, New Jersey. While at AT&T, Vapnik and his colleagues worked on the support-vector machine, on which he had also worked much earlier, before moving to the USA. They de ...

Alexey Chervonenkis

Alexey Yakovlevich Chervonenkis (Russian: Алексей Яковлевич Червоненкис; 7 September 1938 – 22 September 2014) was a Soviet and Russian mathematician. Along with Vladimir Vapnik, he was one of the main developers of the Vapnik–Chervonenkis theory, also known as the "fundamental theory of learning", an important part of computational learning theory. Chervonenkis held joint appointments with the Russian Academy of Sciences and Royal Holloway, University of London. Alexey Chervonenkis got lost in Losiny Ostrov National Park on 22 September 2014, and was later found dead during a search operation near Mytishchi, a suburb of Moscow. He had died of hypothermia.

VC Dimension

VC may refer to:

Military decorations:

* Victoria Cross, a military decoration awarded by the United Kingdom and also by certain Commonwealth nations
  * Victoria Cross for Australia
  * Victoria Cross (Canada)
  * Victoria Cross for New Zealand
* Victorious Cross, Idi Amin's self-bestowed military decoration

Organisations:

* Ocean Airlines (IATA airline designator 2003-2008), Italian cargo airline
* Voyageur Airways (IATA airline designator since 1968), Canadian charter airline
* Visual Communications, an Asian-Pacific-American media arts organization in Los Angeles, US
* Viet Cong (also Victor Charlie or Vietnamese Communists), a political and military organization from the Vietnam War (1959–1975)

Education:

* Vanier College, Canada
* Vassar College, US
* Velez College, Philippines
* Virginia College, US

Places:

* Saint Vincent and the Grenadines (ISO country code), a state in the Caribbean
* Sri Lanka (ICAO airport prefix code)
* Watsonian vice-counties, subdivisions of Great Brita ...

Vapnik–Chervonenkis Theory

Vapnik–Chervonenkis theory (also known as VC theory) was developed during 1960–1990 by Vladimir Vapnik and Alexey Chervonenkis. The theory is a form of computational learning theory, which attempts to explain the learning process from a statistical point of view.

Introduction: VC theory covers at least four parts (as explained in *The Nature of Statistical Learning Theory*):

* Theory of consistency of learning processes
  * What are (necessary and sufficient) conditions for consistency of a learning process based on the empirical risk minimization principle?
* Nonasymptotic theory of the rate of convergence of learning processes
  * How fast is the rate of convergence of the learning process?
* Theory of controlling the generalization ability of learning processes
  * How can one control the rate of convergence (the generalization ability) of the learning process?
* Theory of constructing learning machines
  * How can one construct algorithms that can control the generalization abilit ...

Support Vector Machines

In machine learning, support vector machines (SVMs, also support vector networks) are supervised learning models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories by Vladimir Vapnik with colleagues (Boser et al., 1992, Guyon et al., 1993, Cortes and Vapnik, 1995, Vapnik et al., 1997), SVMs are one of the most robust prediction methods, being based on statistical learning frameworks or VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). Given a set of training examples, each marked as belonging to one of two categories, an SVM training algorithm builds a model that assigns new examples to one category or the other, making it a non-probabilistic binary linear classifier (although methods such as Platt scaling exist to use SVM in a probabilistic classification setting). SVM maps training examples to points in space so as to maximise the width of the gap between the two categories. New ...
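
The following is a minimal sketch of a linear two-class classifier trained in the spirit of the description above. It is not the solver used in the cited papers: it swaps in plain full-batch subgradient descent on an L2-regularized hinge loss, which is one common way to approximate a wide-margin separator. The function names, hyperparameter values, and toy data are assumptions for illustration.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Subgradient descent on lam/2 * |w|^2 + mean hinge loss.

    points: list of feature lists; labels: list of +1 / -1.
    Returns the weight vector w and bias b.
    """
    dim = len(points[0])
    n = len(points)
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularizer
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wj * xj for wj, xj in zip(w, x)) + b)
            if margin < 1.0:  # point is inside the margin or misclassified
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Assign x to category +1 or -1 by the side of the hyperplane it falls on."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Made-up, linearly separable toy data.
POINTS = [[2.0, 2.0], [3.0, 3.0], [2.0, 3.0],
          [-2.0, -2.0], [-3.0, -3.0], [-2.0, -3.0]]
LABELS = [1, 1, 1, -1, -1, -1]

if __name__ == "__main__":
    w, b = train_linear_svm(POINTS, LABELS)
    print(w, b, [predict(w, b, x) for x in POINTS])
```

The hinge condition `margin < 1.0` is what pushes the separator away from the nearest examples of each category, while the `lam` term keeps the weights small; together they approximate the "widest gap" behaviour described in the excerpt.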

Model Selection

Model selection is the task of selecting a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). It has been stated that "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, it has been said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting a few representative models from a large set of computational models for the purpose of decision making or optimization under uncertainty. Introduction: In its most basic forms, model selection is one ...

Occam Learning

In computational learning theory, Occam learning is a model of algorithmic learning where the objective of the learner is to output a succinct representation of received training data. This is closely related to probably approximately correct (PAC) learning, where the learner is evaluated on its predictive power on a test set. Occam learnability implies PAC learning, and for a wide variety of concept classes, the converse is also true: PAC learnability implies Occam learnability. Introduction: Occam learning is named after Occam's razor, which is a principle stating that, all other things being equal, a shorter explanation for observed data should be favored over a lengthier explanation. The theory of Occam learning is a formal and mathematical justification for this principle. It was first shown by Blumer et al. that Occam learning implies PAC learning, which is the standard model of learning in computational learning theory. In other words, *parsimony* (of the output ...

Empirical Risk Minimization

Empirical risk minimization (ERM) is a principle in statistical learning theory which defines a family of learning algorithms and is used to give theoretical bounds on their performance. The core idea is that we cannot know exactly how well an algorithm will work in practice (the true "risk") because we don't know the true distribution of data that the algorithm will work on, but we can instead measure its performance on a known set of training data (the "empirical" risk). Background: Consider the following situation, which is a general setting of many supervised learning problems. We have two spaces of objects $X$ and $Y$ and would like to learn a function $h: X \to Y$ (often called a *hypothesis*) which outputs an object $y \in Y$, given $x \in X$. To do so, we have at our disposal a *training set* of $n$ examples $(x_1, y_1), \ldots, (x_n, y_n)$ where $x_i \in X$ is an input and $y_i \in Y$ is the corresponding response that we wish to get from $h(x_i)$. To put it more formally, we assume ...
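
The setting above can be sketched in a few lines: given a training set and a small family of candidate hypotheses, pick the one with the lowest average loss on the training examples. The 0-1 loss, the family of threshold classifiers, the function names, and the data below are assumptions made for this illustration; the excerpt is cut off before it fixes any of them.

```python
def make_threshold_hypothesis(t):
    """Hypothesis h_t: X -> Y with h_t(x) = 1 if x >= t, else 0."""
    return lambda x: 1 if x >= t else 0


def empirical_risk(h, xs, ys):
    """Average 0-1 loss of h on the training set (x_1, y_1), ..., (x_n, y_n)."""
    return sum(1 for x, y in zip(xs, ys) if h(x) != y) / len(xs)


def erm(thresholds, xs, ys):
    """Return the candidate threshold whose hypothesis has the lowest empirical risk."""
    return min(thresholds,
               key=lambda t: empirical_risk(make_threshold_hypothesis(t), xs, ys))


# Made-up training set and candidate hypotheses.
TRAIN_X = [0.1, 0.4, 0.6, 0.9]
TRAIN_Y = [0, 0, 1, 1]
CANDIDATES = [0.0, 0.25, 0.5, 0.75, 1.0]

if __name__ == "__main__":
    best = erm(CANDIDATES, TRAIN_X, TRAIN_Y)
    print(best, empirical_risk(make_threshold_hypothesis(best), TRAIN_X, TRAIN_Y))
```

Note that the selected hypothesis is only guaranteed to do well on the training set; how far its empirical risk can be from the true risk is exactly what the theoretical bounds mentioned above are meant to control.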