|

Strongly Normalizing

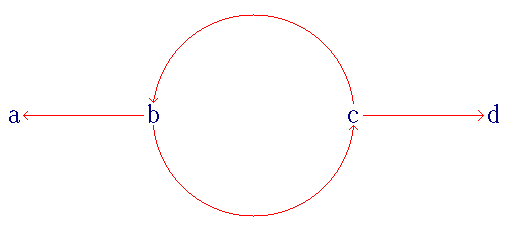

In abstract rewriting, an object is in normal form if it cannot be rewritten any further, i.e. it is irreducible. Depending on the rewriting system, an object may rewrite to several normal forms or none at all. Many properties of rewriting systems relate to normal forms. Definitions Stated formally, if (''A'',→) is an abstract rewriting system, ''x''∈''A'' is in normal form if no ''y''∈''A'' exists such that ''x''→''y'', i.e. ''x'' is an irreducible term. An object ''a'' is weakly normalizing if there exists at least one particular sequence of rewrites starting from ''a'' that eventually yields a normal form. A rewriting system has the weak normalization property or is ''(weakly) normalizing'' (WN) if every object is weakly normalizing. An object ''a'' is strongly normalizing if every sequence of rewrites starting from ''a'' eventually terminates with a normal form. An abstract rewriting system is ''strongly normalizing'', ''terminating'', ''noetherian'', or has the (stro ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Abstract Rewriting

In mathematical logic and theoretical computer science, an abstract rewriting system (also (abstract) reduction system or abstract rewrite system; abbreviated ARS) is a formalism that captures the quintessential notion and properties of rewriting systems. In its simplest form, an ARS is simply a set (of "objects") together with a binary relation, traditionally denoted with \rightarrow; this definition can be further refined if we index (label) subsets of the binary relation. Despite its simplicity, an ARS is sufficient to describe important properties of rewriting systems like normal forms, termination, and various notions of confluence. Historically, there have been several formalizations of rewriting in an abstract setting, each with its idiosyncrasies. This is due in part to the fact that some notions are equivalent, see below in this article. The formalization that is most commonly encountered in monographs and textbooks, and which is generally followed here, is due to Gérard ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Calculus Of Constructions

In mathematical logic and computer science, the calculus of constructions (CoC) is a type theory created by Thierry Coquand. It can serve as both a typed programming language and as constructive foundation for mathematics. For this second reason, the CoC and its variants have been the basis for Coq and other proof assistants. Some of its variants include the calculus of inductive constructions (which adds inductive types), the calculus of (co)inductive constructions (which adds coinduction), and the predicative calculus of inductive constructions (which removes some impredicativity). General traits The CoC is a higher-order typed lambda calculus, initially developed by Thierry Coquand. It is well known for being at the top of Barendregt's lambda cube. It is possible within CoC to define functions from terms to terms, as well as terms to types, types to types, and types to terms. The CoC is strongly normalizing, and hence consistent. Usage The CoC has been developed alongsi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Rewriting Systems

In mathematics, computer science, and logic, rewriting covers a wide range of methods of replacing subterms of a formula with other terms. Such methods may be achieved by rewriting systems (also known as rewrite systems, rewrite engines, or reduction systems). In their most basic form, they consist of a set of objects, plus relations on how to transform those objects. Rewriting can be non-deterministic. One rule to rewrite a term could be applied in many different ways to that term, or more than one rule could be applicable. Rewriting systems then do not provide an algorithm for changing one term to another, but a set of possible rule applications. When combined with an appropriate algorithm, however, rewrite systems can be viewed as computer programs, and several theorem provers and declarative programming languages are based on term rewriting. Example cases Logic In logic, the procedure for obtaining the conjunctive normal form (CNF) of a formula can be implemented as a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Formal Languages

In logic, mathematics, computer science, and linguistics, a formal language consists of string (computer science), words whose symbol (formal), letters are taken from an alphabet (formal languages), alphabet and are well-formedness, well-formed according to a specific set of rules. The alphabet of a formal language consists of symbols, letters, or tokens that concatenate into strings of the language. Each string concatenated from symbols of this alphabet is called a word, and the words that belong to a particular formal language are sometimes called ''well-formed words'' or ''well-formed formulas''. A formal language is often defined by means of a formal grammar such as a regular grammar or context-free grammar, which consists of its formation rules. In computer science, formal languages are used among others as the basis for defining the grammar of programming languages and formalized versions of subsets of natural languages in which the words of the language represent concepts ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computability Theory

Computability theory, also known as recursion theory, is a branch of mathematical logic, computer science, and the theory of computation that originated in the 1930s with the study of computable functions and Turing degrees. The field has since expanded to include the study of generalized computability and definability. In these areas, computability theory overlaps with proof theory and effective descriptive set theory. Basic questions addressed by computability theory include: * What does it mean for a function on the natural numbers to be computable? * How can noncomputable functions be classified into a hierarchy based on their level of noncomputability? Although there is considerable overlap in terms of knowledge and methods, mathematical computability theorists study the theory of relative computability, reducibility notions, and degree structures; those in the computer science field focus on the theory of subrecursive hierarchies, formal methods, and formal languages. I ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Normalization By Evaluation

In programming language semantics, normalisation by evaluation (NBE) is a style of obtaining the normal form of terms in the λ-calculus by appealing to their denotational semantics. A term is first ''interpreted'' into a denotational model of the λ-term structure, and then a canonical (β-normal and η-long) representative is extracted by ''reifying'' the denotation. Such an essentially semantic, reduction-free, approach differs from the more traditional syntactic, reduction-based, description of normalisation as reductions in a term rewrite system where β-reductions are allowed deep inside λ-terms. NBE was first described for the simply typed lambda calculus. It has since been extended both to weaker type systems such as the untyped lambda calculus using a domain theoretic approach, and to richer type systems such as several variants of Martin-Löf type theory. Outline Consider the simply typed lambda calculus, where types τ can be basic types (α), function types (→ ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Newman's Lemma

In mathematics, in the theory of rewriting systems, Newman's lemma, also commonly called the diamond lemma, states that a terminating (or strongly normalizing) abstract rewriting system (ARS), that is, one in which there are no infinite reduction sequences, is confluent if it is locally confluent. In fact a terminating ARS is confluent precisely when it is locally confluent. Equivalently, for every binary relation with no decreasing infinite chains and satisfying a weak version of the diamond property, there is a unique minimal element in every connected component of the relation considered as a graph. Today, this is seen as a purely combinatorial result based on well-foundedness due to a proof of Gérard Huet in 1980. Newman's original proof was considerably more complicated. Diamond lemma In general, Newman's lemma can be seen as a combinatorial result about binary relations → on a set ''A'' (written backwards, so that ''a'' → ''b'' means that ''b'' is below ''a'') wit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Barendregt–Geuvers–Klop Conjecture

__NOTOC__ In the branches of mathematical logic known as proof theory and type theory, a pure type system (PTS), previously known as a generalized type system (GTS), is a form of typed lambda calculus that allows an arbitrary number of sorts and dependencies between any of these. The framework can be seen as a generalisation of Barendregt's lambda cube, in the sense that all corners of the cube can be represented as instances of a PTS with just two sorts. In fact, Barendregt (1991) framed his cube in this setting. Pure type systems may obscure the distinction between ''types'' and ''terms'' and collapse the type hierarchy, as is the case with the calculus of constructions, but this is not generally the case, e.g. the simply typed lambda calculus allows only terms to depend on terms. Pure type systems were independently introduced by Stefano Berardi (1988) and Jan Terlouw (1989). Barendregt discussed them at length in his subsequent papers. In his PhD thesis, Berardi defined a cube ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Total Functional Programming

Total functional programming (also known as strong functional programming, to be contrasted with ordinary, or ''weak'' functional programming) is a programming paradigm that restricts the range of programs to those that are provably terminating. Restrictions Termination is guaranteed by the following restrictions: # A restricted form of recursion, which operates only upon 'reduced' forms of its arguments, such as Walther recursion, substructural recursion, or "strongly normalizing" as proven by abstract interpretation of code. # Every function must be a total (as opposed to partial) function. That is, it must have a definition for everything inside its domain. #* There are several possible ways to extend commonly used partial functions such as division to be total: choosing an arbitrary result for inputs on which the function is normally undefined (such as \forall x \in \mathbb. x \div 0 = 0 for division); adding another argument to specify the result for those inputs; or exclu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Rewriting

In mathematics, computer science, and logic, rewriting covers a wide range of methods of replacing subterms of a well-formed formula, formula with other terms. Such methods may be achieved by rewriting systems (also known as rewrite systems, rewrite engines, or reduction systems). In their most basic form, they consist of a set of objects, plus relations on how to transform those objects. Rewriting can be non-deterministic algorithm, non-deterministic. One rule to rewrite a term could be applied in many different ways to that term, or more than one rule could be applicable. Rewriting systems then do not provide an algorithm for changing one term to another, but a set of possible rule applications. When combined with an appropriate algorithm, however, rewrite systems can be viewed as computer programs, and several automated theorem proving, theorem provers and declarative programming languages are based on term rewriting. Example cases Logic In logic, the procedure for obtaini ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Typed Lambda Calculus

A typed lambda calculus is a typed formalism that uses the lambda-symbol (\lambda) to denote anonymous function abstraction. In this context, types are usually objects of a syntactic nature that are assigned to lambda terms; the exact nature of a type depends on the calculus considered (see kinds below). From a certain point of view, typed lambda calculi can be seen as refinements of the untyped lambda calculus, but from another point of view, they can also be considered the more fundamental theory and ''untyped lambda calculus'' a special case with only one type. Typed lambda calculi are foundational programming languages and are the base of typed functional programming languages such as ML and Haskell and, more indirectly, typed imperative programming languages. Typed lambda calculi play an important role in the design of type systems for programming languages; here, typability usually captures desirable properties of the program (e.g., the program will not cause a memory acces ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Canonical Form

In mathematics and computer science, a canonical, normal, or standard form of a mathematical object is a standard way of presenting that object as a mathematical expression. Often, it is one which provides the simplest representation of an object and which allows it to be identified in a unique way. The distinction between "canonical" and "normal" forms varies from subfield to subfield. In most fields, a canonical form specifies a ''unique'' representation for every object, while a normal form simply specifies its form, without the requirement of uniqueness. The canonical form of a positive integer in decimal representation is a finite sequence of digits that does not begin with zero. More generally, for a class of objects on which an equivalence relation is defined, a canonical form consists in the choice of a specific object in each class. For example: *Jordan normal form is a canonical form for matrix similarity. *The row echelon form is a canonical form, when one considers ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |