Statistical Parametric Mapping

Statistical parametric mapping (SPM) is a statistical technique for examining differences in brain activity recorded during functional neuroimaging experiments. It was created by Karl Friston. The term may also refer to the software created by the Wellcome Department of Imaging Neuroscience at University College London to carry out such analyses. Approach. Unit of measurement: Functional neuroimaging is one type of 'brain scanning'. It involves the measurement of brain activity. The measurement technique depends on the imaging technology (e.g., fMRI and PET). The scanner produces a 'map' of the area that is represented as voxels. Each voxel represents the activity of a specific volume in three-dimensional space. The exact size of a voxel varies depending on the technology. fMRI voxels typically represent a volume of 27 mm³, that is, a cube with 3 mm sides. Experimental design: Researchers examine brain activity linked to a specific mental process or processes. One approach involves aski ...

Statistical

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An experim ...

Wavelet

A wavelet is a wave-like oscillation with an amplitude that begins at zero, increases or decreases, and then returns to zero one or more times. A wavelet is termed a "brief oscillation". A taxonomy of wavelets has been established, based on the number and direction of their pulses. Wavelets are imbued with specific properties that make them useful for signal processing. For example, a wavelet could be created to have a frequency of Middle C and a short duration of roughly one tenth of a second. If this wavelet were to be convolved with a signal created from the recording of a melody, then the resulting signal would be useful for determining when the Middle C note appeared in the song. Mathematically, a wavelet correlates with a signal if a portion of the signal is similar. Correlation is at the core of many practical wavelet applications. As a mathematical tool, wavelets can be used to extract information from many different kinds of data, including but not limited to au ...
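The Middle C example can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: the sample rate, the synthetic two-note "melody", the Gaussian envelope, and the helper names (`tone`, `wavelet`, `response`, `find_note_time`) are all invented for the sketch. It slides the wavelet along the signal and takes the magnitude of the correlation at each position; because the cosine part of this wavelet is symmetric and the sine part antisymmetric, that magnitude is the same one a convolution would give.

```python
import math

SAMPLE_RATE = 2000      # samples per second (assumed for this sketch)
MIDDLE_C_HZ = 261.63    # approximate frequency of Middle C
A4_HZ = 440.0           # a second note, so the signal has something to ignore


def tone(freq_hz, start_s, stop_s, total_s=1.0):
    """Sine tone that sounds only between start_s and stop_s."""
    n = round(total_s * SAMPLE_RATE)
    return [
        math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
        if start_s <= i / SAMPLE_RATE < stop_s else 0.0
        for i in range(n)
    ]


def wavelet(freq_hz, duration_s=0.1):
    """Gaussian-windowed cosine and sine pair, about duration_s long."""
    n = round(duration_s * SAMPLE_RATE)
    sigma = duration_s / 6          # envelope is near zero at both ends
    centre = (n - 1) / 2
    real, imag = [], []
    for i in range(n):
        t = (i - centre) / SAMPLE_RATE
        envelope = math.exp(-0.5 * (t / sigma) ** 2)
        real.append(envelope * math.cos(2 * math.pi * freq_hz * t))
        imag.append(envelope * math.sin(2 * math.pi * freq_hz * t))
    return real, imag


def response(signal, wav):
    """Magnitude of the sliding correlation between signal and wavelet."""
    real, imag = wav
    n = len(real)
    out = []
    for start in range(len(signal) - n + 1):
        window = signal[start:start + n]
        re = sum(w * s for w, s in zip(real, window))
        im = sum(w * s for w, s in zip(imag, window))
        out.append(math.hypot(re, im))
    return out


def find_note_time(signal, freq_hz, duration_s=0.1):
    """Time (s) at the centre of the window with the strongest response."""
    resp = response(signal, wavelet(freq_hz, duration_s))
    best = max(range(len(resp)), key=resp.__getitem__)
    return (best + round(duration_s * SAMPLE_RATE) / 2) / SAMPLE_RATE


# Synthetic melody: A4 from 0.1 s to 0.3 s, then Middle C from 0.5 s to 0.7 s.
melody = [a + c for a, c in zip(tone(A4_HZ, 0.1, 0.3),
                                tone(MIDDLE_C_HZ, 0.5, 0.7))]
middle_c_time = find_note_time(melody, MIDDLE_C_HZ)
```

Here `middle_c_time` falls inside the interval where Middle C sounds, while the A4 segment produces almost no response to the Middle C wavelet.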

Functional Integration (neurobiology)

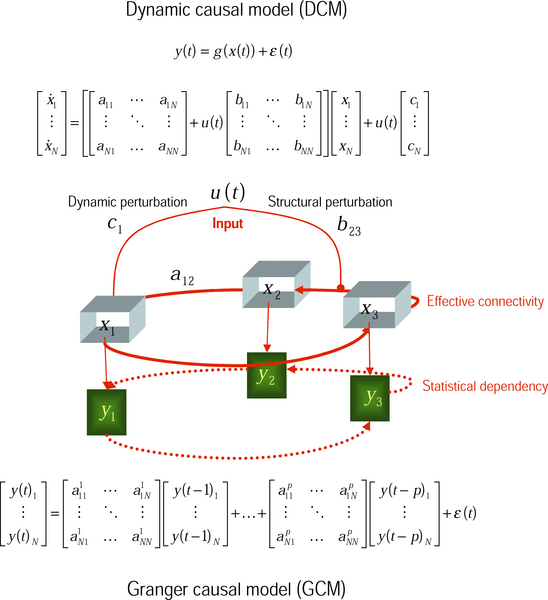

Functional integration is the study of how brain regions work together to process information and effect responses. Though functional integration frequently relies on anatomic knowledge of the connections between brain areas, the emphasis is on how large clusters of neurons (numbering in the thousands or millions) fire together under various stimuli. The large datasets required for such a whole-scale picture of brain function have motivated the development of several novel and general methods for the statistical analysis of interdependence, such as dynamic causal modelling and statistical linear parametric mapping. These datasets are typically gathered in human subjects by non-invasive methods such as EEG/MEG, fMRI, or PET. The results can be of clinical value by helping to identify the regions responsible for psychiatric disorders, as well as to assess how different activities or lifestyles affect the functioning of the brain. Imaging techniques: A study's choice of imagin ...

Cognitive Neuroscience

Cognitive neuroscience is the scientific field that is concerned with the study of the biological processes and aspects that underlie cognition, with a specific focus on the neural connections in the brain which are involved in mental processes. It addresses the questions of how cognitive activities are affected or controlled by neural circuits in the brain. Cognitive neuroscience is a branch of both neuroscience and psychology, overlapping with disciplines such as behavioral neuroscience, cognitive psychology, physiological psychology and affective neuroscience (Gazzaniga 2002, p. xv). Cognitive neuroscience relies upon theories in cognitive science coupled with evidence from neurobiology and computational modeling. Parts of the brain play an important role in this field. Neurons play the most vital role, since the main point is to establish an understanding of cognition from a neural perspective, along with the different lobes of the cerebral cortex. Methods employed in c ...

Free Software

Free software or libre software is computer software distributed under terms that allow users to run the software for any purpose as well as to study, change, and distribute it and any adapted versions. Free software is a matter of liberty, not price; all users are legally free to do what they want with their copies of free software (including profiting from them) regardless of how much is paid to obtain the program (Selling Free Software, gnu.org). Computer programs are deemed "free" if they give end-users (not just the developer) ultimate control over the software and, subsequently, over their devices. The right to study and modify a computer program entails that ...

MATLAB

MATLAB (an abbreviation of "MATrix LABoratory") is a proprietary multi-paradigm programming language and numeric computing environment developed by MathWorks. MATLAB allows matrix manipulations, plotting of functions and data, implementation of algorithms, creation of user interfaces, and interfacing with programs written in other languages. Although MATLAB is intended primarily for numeric computing, an optional toolbox uses the MuPAD symbolic engine, allowing access to symbolic computing abilities. An additional package, Simulink, adds graphical multi-domain simulation and model-based design for dynamic and embedded systems. As of 2020, MATLAB has more than 4 million users worldwide. They come from various backgrounds of engineering, science, and economics. History. Origins: MATLAB was invented by mathematician and computer programmer Cleve Moler. The idea for MATLAB was based on his 1960s PhD thesis. Moler became a math professor at the University of New Mexico and starte ...

Functional Magnetic Resonance Imaging

Functional magnetic resonance imaging or functional MRI (fMRI) measures brain activity by detecting changes associated with blood flow. This technique relies on the fact that cerebral blood flow and neuronal activation are coupled. When an area of the brain is in use, blood flow to that region also increases. The primary form of fMRI uses the blood-oxygen-level dependent (BOLD) contrast, discovered by Seiji Ogawa in 1990. This is a type of specialized brain and body scan used to map neural activity in the brain or spinal cord of humans or other animals by imaging the change in blood flow (hemodynamic response) related to energy use by brain cells. Since the early 1990s, fMRI has come to dominate brain mapping research because it does not involve the use of injections, surgery, the ingestion of substances, or exposure to ionizing radiation. This measure is frequently corrupted by noise from various sources; hence, statistical procedures are used to extract the underlying si ...

Multiple Comparisons

In statistics, the multiple comparisons, multiplicity or multiple testing problem occurs when one considers a set of statistical inferences simultaneously or infers a subset of parameters selected based on the observed values. The more inferences are made, the more likely erroneous inferences become. Several statistical techniques have been developed to address that problem, typically by requiring a stricter significance threshold for individual comparisons, so as to compensate for the number of inferences being made. History: The problem of multiple comparisons received increased attention in the 1950s with the work of statisticians such as Tukey and Scheffé. Over the ensuing decades, many procedures were developed to address the problem. In 1996, the first international conference on multiple comparison procedures took place in Israel. Definition: Multiple comparisons arise when a statistical analysis involves multiple simultaneous statistical tests, each of which has a potent ...
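A short numerical sketch shows why a stricter per-test threshold is needed. It assumes the tests are independent, which the excerpt does not state, and uses the Bonferroni correction as one well-known example of a stricter threshold; the function names are chosen for illustration only.

```python
def familywise_error_rate(alpha, n_tests):
    """Chance of at least one false positive across n independent tests,
    each run at significance level alpha, when every null is true."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(alpha, n_tests):
    """Per-test level that keeps the familywise rate at or below alpha."""
    return alpha / n_tests


uncorrected = familywise_error_rate(0.05, 20)
corrected = familywise_error_rate(bonferroni_threshold(0.05, 20), 20)
```

With 20 tests at the 0.05 level, `uncorrected` is roughly 0.64, so an erroneous inference is more likely than not, whereas `corrected` stays just under 0.05.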

Random Field

In physics and mathematics, a random field is a random function over an arbitrary domain (usually a multi-dimensional space such as $\mathbb{R}^n$). That is, it is a function $f(x)$ that takes on a random value at each point $x \in \mathbb{R}^n$ (or some other domain). It is also sometimes thought of as a synonym for a stochastic process with some restriction on its index set. That is, by modern definitions, a random field is a generalization of a stochastic process where the underlying parameter need no longer be real or integer valued "time" but can instead take values that are multidimensional vectors or points on some manifold. Formal definition: Given a probability space $(\Omega, \mathcal{F}, P)$, an X-valued random field is a collection of X-valued random variables indexed by elements in a topological space T. That is, a random field F is a collection $\{F_t : t \in T\}$ where each $F_t$ is an X-valued random variable. Examples: In its discrete version, a random field is a list of rando ...
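As a rough illustration of the definition, the sketch below draws one realisation of a discrete random field on a two-dimensional grid: the index set T is the set of grid points and each F_t is a Gaussian random variable. The grid size, the Gaussian distribution, and the 3x3 averaging step used to introduce spatial correlation are assumptions made for the example, not part of the definition.

```python
import random


def white_noise_field(rows, cols, seed=0):
    """One realisation: an independent Gaussian value at each grid point."""
    rng = random.Random(seed)
    return {(r, c): rng.gauss(0.0, 1.0)
            for r in range(rows) for c in range(cols)}


def smooth(field, rows, cols):
    """Average each point with its in-grid neighbours (3x3 box), which
    makes nearby values correlated."""
    out = {}
    for (r, c) in field:
        neighbours = [
            field[(r + dr, c + dc)]
            for dr in (-1, 0, 1) for dc in (-1, 0, 1)
            if 0 <= r + dr < rows and 0 <= c + dc < cols
        ]
        out[(r, c)] = sum(neighbours) / len(neighbours)
    return out


def variance(field):
    """Population variance of the values in one realisation."""
    values = list(field.values())
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


raw = white_noise_field(20, 20)
smoothed = smooth(raw, 20, 20)
```

The smoothed field has lower variance than the raw one because each value is now an average of neighbouring draws.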

Resel

In image analysis, a resel (from resolution element) represents the actual spatial resolution in an image or a volumetric dataset. The number of resels in the image is less than or equal to the number of pixels or voxels in the image. In an actual image the resels can vary across the image, and indeed the local resolution can be expressed as "resels per pixel" (or "resels per voxel"). In functional neuroimaging analysis, an estimate of the number of resels together with random field theory is used in statistical inference. Keith Worsley has proposed an estimate for the number of resels/roughness. The word "resel" is related to the words "pixel", "texel", and "voxel", and Waldo R. Tobler is probably among the first to use the word. See also: Kell factor. Bibliography: Keith J. Worsley, 'An unbiased estimator for the roughness of a multivariate Gaussian random field', Technical report, July 2000; doi:10.1111/j.1538-4632.1985.tb0 ...

Type I Error

In statistical hypothesis testing, a type I error is the mistaken rejection of an actually true null hypothesis (also known as a "false positive" finding or conclusion; example: "an innocent person is convicted"), while a type II error is the failure to reject a null hypothesis that is actually false (also known as a "false negative" finding or conclusion; example: "a guilty person is not convicted"). Much of statistical theory revolves around the minimization of one or both of these errors, though the complete elimination of either is a statistical impossibility if the outcome is not determined by a known, observable causal process. By selecting a low threshold (cut-off) value and modifying the alpha (α) level, the quality of the hypothesis test can be increased. The knowledge of type I errors and type II errors is widely used in medical science, biometrics and computer science. Intuitively, type I errors can be thought of as errors of commission, i.e. the researcher unluck ...
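The link between the alpha level and the type I error rate can be checked with a small simulation. This is a sketch under assumptions chosen for the example: data are drawn from a standard normal distribution so that the null hypothesis (mean zero) is true, a two-sided z-test with known standard deviation is used, and the sample sizes, seed, and function names are arbitrary.

```python
import math
import random


def two_sided_p_value(sample, sigma=1.0):
    """z-test p-value for H0: mean = 0, with known standard deviation."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / math.sqrt(n))
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(alpha=0.05, n_experiments=2000,
                        n_per_sample=30, seed=0):
    """Fraction of experiments that reject a null hypothesis that is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_experiments):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n_per_sample)]
        if two_sided_p_value(sample) < alpha:
            rejections += 1
    return rejections / n_experiments


rate = false_positive_rate()
```

Every rejection here is a type I error by construction, so `rate` should land close to the chosen alpha of 0.05; lowering alpha lowers it further, at the cost of more type II errors when a real effect exists.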

Convolution

In mathematics (in particular, functional analysis), convolution is a mathematical operation on two functions (f and g) that produces a third function (f*g) that expresses how the shape of one is modified by the other. The term convolution refers to both the result function and to the process of computing it. It is defined as the integral of the product of the two functions after one is reflected about the y-axis and shifted. The choice of which function is reflected and shifted before the integral does not change the integral result (see commutativity). The integral is evaluated for all values of shift, producing the convolution function. Some features of convolution are similar to cross-correlation: for real-valued functions, of a continuous or discrete variable, convolution (f*g) differs from cross-correlation (f \star g) only in that either f or g is reflected about the y-axis in convolution; thus it is a cross-c ...
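For finite sequences the integral becomes a sum, and both properties mentioned above can be verified directly. The sketch below is a plain discrete analogue written for illustration; the function names and the sample sequences are assumptions, and the cross-correlation is defined here as the sum over m of f[m]·g[m + lag] for real-valued sequences.

```python
def convolve(f, g):
    """Full discrete convolution: out[n] = sum over i of f[i] * g[n - i]."""
    out = [0.0] * (len(f) + len(g) - 1)
    for i, fi in enumerate(f):
        for j, gj in enumerate(g):
            out[i + j] += fi * gj
    return out


def cross_correlate(f, g):
    """Full cross-correlation: sum over m of f[m] * g[m + lag],
    stored for lags from -(len(f) - 1) up to len(g) - 1."""
    out = [0.0] * (len(f) + len(g) - 1)
    for m, fm in enumerate(f):
        for k, gk in enumerate(g):
            lag = k - m
            out[lag + len(f) - 1] += fm * gk
    return out


f = [1.0, 2.0, 3.0]
g = [0.0, 1.0, 0.5]

conv_fg = convolve(f, g)
conv_gf = convolve(g, f)                 # same values: commutativity
xcorr_reflected = cross_correlate(f[::-1], g)   # reflect f, then correlate
```

Swapping the arguments leaves the result unchanged, and cross-correlating with one sequence reversed reproduces the convolution, which is the discrete counterpart of the reflection about the y-axis.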