
Statistical Machine Translation

Statistical machine translation (SMT) is a machine translation paradigm where translations are generated on the basis of statistical models whose parameters are derived from the analysis of bilingual text corpora. The statistical approach contrasts with the rule-based approaches to machine translation as well as with example-based machine translation, and has more recently been superseded by neural machine translation in many applications (see this article's final section). The first ideas of statistical machine translation were introduced by Warren Weaver in 1949, including the idea of applying Claude Shannon's information theory. Statistical machine translation was re-introduced in the late 1980s and early 1990s by researchers at IBM's Thomas J. Watson Research Center and has contributed to the significant resurgence in interest in machine translation in recent years. Before the introduction of neural machine translation, it was by far the most widely studied machine translati ...

Machine Translation

Machine translation, sometimes referred to by the abbreviation MT (not to be confused with computer-aided translation, machine-aided human translation or interactive translation), is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another. On a basic level, MT performs mechanical substitution of words in one language for words in another, but that alone rarely produces a good translation because recognition of whole phrases and their closest counterparts in the target language is needed. Not all words in one language have equivalent words in another language, and many words have more than one meaning. Solving this problem with corpus statistical and neural techniques is a rapidly growing field that is leading to better translations, handling differences in linguistic typology, translation of idioms, and the isolation of anomalies. Current machine translation software often allows for customizat ...

Word

A word is a basic element of language that carries an objective or practical meaning, can be used on its own, and is uninterruptible. Despite the fact that language speakers often have an intuitive grasp of what a word is, there is no consensus among linguists on its definition and numerous attempts to find specific criteria of the concept remain controversial. Different standards have been proposed, depending on the theoretical background and descriptive context; these do not converge on a single definition. Some specific definitions of the term "word" are employed to convey its different meanings at different levels of description, for example on a phonological, grammatical or orthographic basis. Others suggest that the concept is simply a convention used in everyday situations. The concept of "word" is distinguished from that of a morpheme, which is the smallest unit of language that has a ...

Data-oriented Parsing

Data-oriented parsing (DOP, also data-oriented processing) is a probabilistic model in computational linguistics. DOP was conceived by Remko Scha in 1990 with the aim of developing a performance-oriented grammar framework. Unlike other probabilistic models, DOP takes into account all subtrees contained in a treebank rather than being restricted to, for example, 2-level subtrees (like PCFGs), thus allowing for more context-sensitive information. Several variants of DOP have been developed. The initial version developed by Rens Bod in 1992 was based on tree-substitution grammar (R. Bod, "A computational model of language performance: Data oriented parsing", in: COLING 1992 Volume 3: The 15th International Conference on Computational Linguistics, https://www.aclweb.org/anthology/C92-3126.pdf), while more recently, DOP has been combined with lexical-functional grammar (LFG). The resulting DOP-LFG finds an application in machine translation. Other work on learning and parameter estimation f ...

Stochastic Parsing

Statistical parsing is a group of parsing methods within natural language processing. The methods have in common that they associate grammar rules with a probability. Grammar rules are traditionally viewed in computational linguistics as defining the valid sentences in a language. Within this mindset, the idea of associating each rule with a probability then provides the relative frequency of any given grammar rule and, by deduction, the probability of a complete parse for a sentence. (The probability associated with a grammar rule may be induced, but the application of that grammar rule within a parse tree and the computation of the probability of the parse tree based on its component rules is a form of deduction.) Using this concept, statistical parsers make use of a procedure to search over a space of all candidate parses, and the computation of each candidate's probability, to derive the most probable parse of a sentence. The Viterbi algorithm is one popular method of search ...
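The scoring step described above, where the probability of a complete parse follows from the probabilities of its component rules, can be sketched as follows. This is a minimal illustration under stated assumptions: the toy grammar `RULE_PROB`, its probabilities, and the function name `tree_probability` are all hypothetical, and the search over candidate parses (for example with the Viterbi algorithm) is not shown.

```python
# Hypothetical toy grammar: (left-hand side, right-hand side) -> probability.
# Rules sharing a left-hand side sum to 1, as in a probabilistic CFG.
RULE_PROB = {
    ("S", ("NP", "VP")): 1.0,
    ("NP", ("she",)): 0.4,
    ("NP", ("fish",)): 0.6,
    ("VP", ("V", "NP")): 1.0,
    ("V", ("eats",)): 1.0,
}


def tree_probability(tree):
    """Return the product of the probabilities of all rules used in the tree.

    A tree is a tuple (label, child, ...) where each child is either
    another tree or a word given as a plain string.
    """
    label, *children = tree
    # The rule's right-hand side lists child labels (or the word itself).
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    p = RULE_PROB[(label, rhs)]
    for child in children:
        if not isinstance(child, str):
            p *= tree_probability(child)
    return p


tree = ("S", ("NP", "she"), ("VP", ("V", "eats"), ("NP", "fish")))
print(tree_probability(tree))  # 1.0 * 0.4 * 1.0 * 1.0 * 0.6, about 0.24
```

A statistical parser would compute this score for each candidate parse of a sentence and keep the highest-scoring one.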

Parse Tree

A parse tree or parsing tree or derivation tree or concrete syntax tree is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term *parse tree* itself is used primarily in computational linguistics; in theoretical syntax, the term *syntax tree* is more common. Concrete syntax trees reflect the syntax of the input language, making them distinct from the abstract syntax trees used in computer programming. Unlike Reed-Kellogg sentence diagrams used for teaching grammar, parse trees do not use distinct symbol shapes for different types of constituents. Parse trees are usually constructed based on either the constituency relation of constituency grammars (phrase structure grammars) or the dependency relation of dependency grammars. Parse trees may be generated for sentences in natural languages (see natural language processing), as well as during processing of computer languages, such as programming languages. ...

Syntax (linguistics)

In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals.

Etymology: The word *syntax* comes from an Ancient Greek word meaning "coordination", which consists of *syn*, "together", and *táxis*, "ordering".

Topics: The field of syntax contains a number of topics that a syntactic theory is often designed to handle. The relation between the topics is treated differently in different theories, and some of them may not be considered to be distinct but instead to be derived from one another (e.g. word order can be seen as the result of movement rules derived from grammatical relations). Seq ...

IBM Alignment Models

IBM alignment models are a sequence of increasingly complex models used in statistical machine translation to train a translation model and an alignment model, starting with lexical translation probabilities and moving to reordering and word duplication. They underpinned the majority of statistical machine translation systems for almost twenty years starting in the early 1990s, until neural machine translation began to dominate. These models offer principled probabilistic formulation and (mostly) tractable inference. The original work on statistical machine translation at IBM proposed five models, and a model 6 was proposed later. The sequence of the six models can be summarized as:

* Model 1: lexical translation
* Model 2: additional absolute alignment model
* Model 3: extra fertility model
* Model 4: added relative alignment model
* Model 5: fixed deficiency problem
* Model 6: Model 4 combined with an HMM alignment model in a log-linear way

Mathematical setup: The IBM alignm ...
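To make the first step of this sequence concrete, the lexical translation probabilities of Model 1 are commonly estimated with expectation–maximization over sentence pairs. The following is a minimal sketch under stated assumptions, not a reference implementation: the function name `train_ibm_model1`, the tuple-keyed table `t`, and the three-sentence toy corpus are hypothetical, and the usual NULL source token is omitted for brevity.

```python
from collections import defaultdict


def train_ibm_model1(pairs, iterations=10):
    """Estimate t[(tgt_word, src_word)] = P(tgt_word | src_word) with EM.

    pairs: list of (source_tokens, target_tokens) sentence pairs.
    """
    tgt_vocab = {w for _, tgt in pairs for w in tgt}
    # Start from a uniform distribution over target words.
    t = defaultdict(lambda: 1.0 / len(tgt_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected (tgt_word, src_word) link counts
        total = defaultdict(float)  # expected link counts per src_word
        # E step: spread each target word over the source words of its pair,
        # in proportion to the current translation probabilities.
        for src, tgt in pairs:
            for f in tgt:
                norm = sum(t[(f, e)] for e in src)
                for e in src:
                    delta = t[(f, e)] / norm
                    count[(f, e)] += delta
                    total[e] += delta
        # M step: renormalise the expected counts per source word.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t


# Hypothetical toy corpus (German source, English target).
corpus = [
    (["das", "Haus"], ["the", "house"]),
    (["das", "Buch"], ["the", "book"]),
    (["ein", "Buch"], ["a", "book"]),
]
table = train_ibm_model1(corpus, iterations=10)
for tgt_word in ("the", "house", "book"):
    print(tgt_word, round(table[(tgt_word, "das")], 3))
```

On this corpus the probability mass for "das" shifts towards "the" with each iteration, because "the" is the only target word that co-occurs with "das" in both of its sentence pairs.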

Expectation–maximization Algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method to find (local) maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the *E* step. These parameter-estimates are then used to determine the distribution of the latent variables in the next E step.

History: The EM algorithm was explained and given its name in a classic 1977 paper by Arthur Dempster, Nan Laird, and Donald Rubin. They pointed out that the method had been "proposed many times in special circumstances" by earlier authors. One of the earliest is the gene-counting method for estimating allele ...
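The alternation between the E and M steps can be written compactly in symbols. The notation here is an assumption made for illustration, not taken from the text above: $X$ denotes the observed data, $Z$ the latent variables, $\theta$ the parameters, and $\theta^{(t)}$ the estimate after iteration $t$.

```latex
\begin{align}
Q(\theta \mid \theta^{(t)})
  &= \mathbb{E}_{Z \sim p(\cdot \mid X, \theta^{(t)})}
     \bigl[\log p(X, Z \mid \theta)\bigr]
  && \text{(E step)} \\
\theta^{(t+1)}
  &= \arg\max_{\theta} \; Q(\theta \mid \theta^{(t)})
  && \text{(M step)}
\end{align}
```

Each iteration cannot decrease the observed-data likelihood $p(X \mid \theta)$, but the procedure may stop at a local rather than a global maximum, which is why the estimates are described as (local) maxima.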

Syntactic Categories

A syntactic category is a syntactic unit that theories of syntax assume. Word classes, largely corresponding to traditional parts of speech (e.g. noun, verb, preposition, etc.), are syntactic categories. In phrase structure grammars, the *phrasal categories* (e.g. noun phrase, verb phrase, prepositional phrase, etc.) are also syntactic categories. Dependency grammars, however, do not acknowledge phrasal categories (at least not in the traditional sense). Word classes considered as syntactic categories may be called *lexical categories*, as distinct from phrasal categories. The terminology is somewhat inconsistent between the theoretical models of different linguists. However, many grammars also draw a distinction between *lexical categories* (which tend to consist of content words, or phrases headed by them) and *functional categories* (which tend to consist of function words or abstract functional elements, or phrases headed by them). The term *lexical category* therefore ...

Phraseme

A phraseme, also called a set phrase, idiomatic phrase, multi-word expression (in computational linguistics), or idiom, is a multi-word or multi-morphemic utterance whose components include at least one that is selectionally constrained or restricted by linguistic convention such that it is not freely chosen. In the most extreme cases, there are expressions such as *X kicks the bucket* ≈ ‘person X dies of natural causes, the speaker being flippant about X’s demise’ where the unit is selected as a whole to express a meaning that bears little or no relation to the meanings of its parts. All of the words in this expression are chosen restrictedly, as part of a chunk. At the other extreme, there are collocations such as *stark naked*, *hearty laugh*, or *infinite patience* where one of the words is chosen freely (*naked*, *laugh*, and *patience*, respectively) based on the meaning the speaker wishes to express while the choice of the other (intensifying) word (*s ...

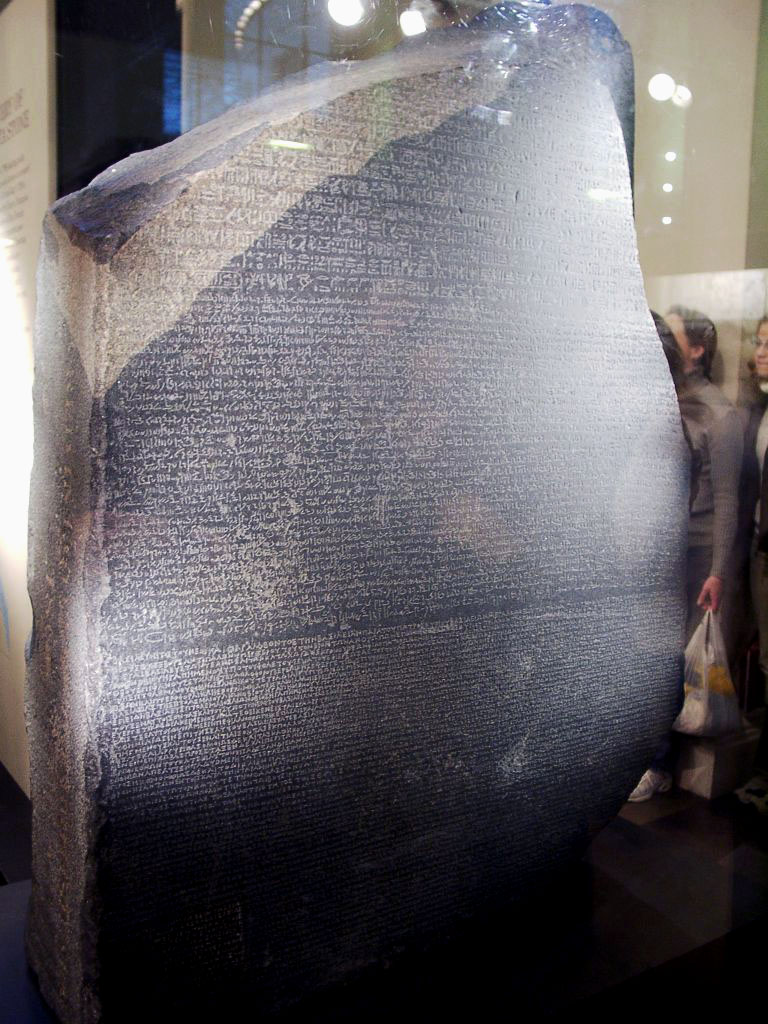

Parallel Corpora

A parallel text is a text placed alongside its translation or translations. Parallel text alignment is the identification of the corresponding sentences in both halves of the parallel text. The Loeb Classical Library and the Clay Sanskrit Library are two examples of dual-language series of texts. Reference Bibles may contain the original languages and a translation, or several translations by themselves, for ease of comparison and study; Origen's Hexapla (Greek for "sixfold") placed six versions of the Old Testament side by side. A famous example is the Rosetta Stone, whose discovery allowed the Ancient Egyptian language to begin being deciphered. Large collections of parallel texts are called parallel corpora (see text corpus). Alignments of parallel corpora at sentence level are a prerequisite for many areas of linguistic research. During translation, sentences can be split, merged, deleted, inserted or reordered by the translator. This makes alignment a non-trivial task. P ...
