
Star Schema

In computing, the star schema is the simplest style of data mart schema and is the approach most widely used to develop data warehouses and dimensional data marts. The star schema consists of one or more fact tables referencing any number of dimension tables. The star schema is an important special case of the snowflake schema and is more effective for handling simpler queries. The star schema gets its name from the physical model's resemblance to a star shape, with a fact table at its center and the dimension tables surrounding it representing the star's points.

The star schema separates business process data into facts, which hold the measurable, quantitative data about a business, and dimensions, which are descriptive attributes related to fact data. Examples of fact data include sales price, sale quantity, and time, distance, speed and weight measurements. Related dimension attribute examples include product models, product colors, product sizes, geographic ...
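As an illustration of the fact/dimension split described above, here is a minimal sketch using Python's built-in sqlite3 module. The table names (fact_sales, dim_date, dim_product, dim_store), columns, and data are hypothetical. It shows one fact table holding measures plus a foreign key per dimension, and a typical "star join" that groups by dimension attributes while aggregating fact measures.

```python
import sqlite3

# Tiny in-memory star schema; all names and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_date    (date_id INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, model TEXT, color TEXT);
CREATE TABLE dim_store   (store_id INTEGER PRIMARY KEY, city TEXT);

-- The fact table holds the measures plus one foreign key per dimension.
CREATE TABLE fact_sales (
    date_id    INTEGER REFERENCES dim_date(date_id),
    product_id INTEGER REFERENCES dim_product(product_id),
    store_id   INTEGER REFERENCES dim_store(store_id),
    quantity   INTEGER,
    price      REAL
);
""")
conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)",
                 [(1, 2024, 1), (2, 2024, 2)])
conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)",
                 [(10, "A100", "red"), (11, "B200", "blue")])
conn.executemany("INSERT INTO dim_store VALUES (?, ?)",
                 [(100, "Lyon"), (101, "Oslo")])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)", [
    (1, 10, 100, 3, 9.5),
    (1, 11, 101, 1, 20.0),
    (2, 10, 101, 2, 9.5),
])

# Star join: group by dimension attributes, aggregate fact measures.
revenue_by_city_and_color = conn.execute("""
SELECT s.city, p.color, SUM(f.quantity * f.price) AS revenue
FROM fact_sales f
JOIN dim_product p ON p.product_id = f.product_id
JOIN dim_store   s ON s.store_id   = f.store_id
GROUP BY s.city, p.color
ORDER BY s.city, p.color
""").fetchall()
print(revenue_by_city_and_color)
```

Each dimension is a single denormalized table, so any dimension attribute is at most one join away from the fact table.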

Online Analytical Processing

In computing, online analytical processing (OLAP) is an approach to answering multi-dimensional analytical (MDA) queries swiftly. OLAP is part of the broader category of business intelligence, which also encompasses relational databases, report writing and data mining. Typical applications of OLAP include business reporting for sales, marketing, management reporting, business process management (BPM), budgeting and forecasting, financial reporting and similar areas, with new applications emerging, such as agriculture. The term OLAP was created as a slight modification of the traditional database term online transaction processing (OLTP). OLAP tools enable users to analyze multidimensional data interactively from multiple perspectives. OLAP consists of three basic analytical operations: consolidation (roll-up), drill-down, and slicing and dicing [O'Brien, J. A., & Marakas, G. M. (2009). Management Information Systems (9th ed.). Boston, MA: McGraw-Hill/Irwin]. Consolidat ...
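The three basic operations named above can be sketched on a handful of fact records in plain Python. This is only an illustration of what each operation means, assuming hypothetical sales records with year, quarter, region, and product dimensions and a single sales measure; it is not how any particular OLAP server implements them.

```python
from collections import defaultdict

# Hypothetical sales facts: four dimensions and one measure per record.
facts = [
    {"year": 2023, "quarter": "Q1", "region": "EU", "product": "laptop", "sales": 100},
    {"year": 2023, "quarter": "Q2", "region": "EU", "product": "phone",  "sales": 80},
    {"year": 2023, "quarter": "Q1", "region": "US", "product": "laptop", "sales": 150},
    {"year": 2024, "quarter": "Q1", "region": "US", "product": "phone",  "sales": 120},
]

def aggregate(records, dims):
    """Sum the 'sales' measure grouped by the given dimension names."""
    totals = defaultdict(int)
    for r in records:
        totals[tuple(r[d] for d in dims)] += r["sales"]
    return dict(totals)

# Consolidation (roll-up): aggregate to a coarser level (year only).
rollup = aggregate(facts, ["year"])

# Drill-down: move to a finer level (year and quarter).
drilldown = aggregate(facts, ["year", "quarter"])

# Slice: fix one dimension to a single value.
eu_slice = [r for r in facts if r["region"] == "EU"]

# Dice: restrict several dimensions to subsets of their values.
dice = [r for r in facts
        if r["year"] in {2023} and r["product"] in {"laptop", "tablet"}]
```

Roll-up and drill-down change the grouping granularity, while slicing and dicing only filter the records before any aggregation.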

Activity Schema

Activity may refer to:
* Action (philosophy), in general
* Human activity: human behavior; in sociology, behavior may refer to all basic human actions; economics may study human economic activities and, along with cybernetics and psychology, may study their modulation
* Recreation, or activities of leisure
* The Aristotelian concept of energeia, Latinized as actus
* Activity (UML), a major task in Unified Modeling Language
* Activity, the rate of catalytic activity, such as enzyme activity (enzyme assay), in physical chemistry and enzymology
* Thermodynamic activity, the effective concentration of a solute for the purposes of mass action
* Activity (project management)
* Activity, the number of radioactive decays per second
* Activity (software engineering)
* Activity (soil mechanics)
* , an aircraft carrier of the Royal Navy
* "Activity", a song by Way Out West from Intensify
* Cultural activities, activities related to culture

See also
* Activity theory, a learnin ...

Fact Constellation

Fact constellation is a schema design used in online analytical processing: a collection of multiple fact tables sharing dimension tables, viewed as a collection of stars. It can be seen as an extension of the star schema and is also known as the galaxy schema. A fact constellation schema has multiple fact tables and some common dimension tables. It is a widely used schema and is more complex than the star schema and the snowflake schema. A fact constellation schema can be created by splitting an original star schema into several star schemas.

See also
* Online analytical processing
* Star schema
* Snowflake schema
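To make the "multiple fact tables sharing dimension tables" idea concrete, here is a minimal sqlite3 sketch with two hypothetical fact tables (fact_sales and fact_shipments) that reference the same product and date dimensions. The query aggregates each fact table separately and then combines the results through the shared product dimension; the names and data are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables shared by both stars.
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE dim_date    (date_id INTEGER PRIMARY KEY, month TEXT);

-- Two fact tables that reference the same dimensions.
CREATE TABLE fact_sales (
    date_id INTEGER REFERENCES dim_date(date_id),
    product_id INTEGER REFERENCES dim_product(product_id),
    units_sold INTEGER);
CREATE TABLE fact_shipments (
    date_id INTEGER REFERENCES dim_date(date_id),
    product_id INTEGER REFERENCES dim_product(product_id),
    units_shipped INTEGER);

INSERT INTO dim_product VALUES (1, 'widget'), (2, 'gadget');
INSERT INTO dim_date    VALUES (1, '2024-01'), (2, '2024-02');
INSERT INTO fact_sales     VALUES (1, 1, 5), (2, 1, 7), (1, 2, 3);
INSERT INTO fact_shipments VALUES (1, 1, 6), (2, 2, 4);
""")

# Aggregate each fact table on its own, then combine the results
# through the shared product dimension.
rows = conn.execute("""
WITH s AS (SELECT product_id, SUM(units_sold) AS sold
           FROM fact_sales GROUP BY product_id),
     h AS (SELECT product_id, SUM(units_shipped) AS shipped
           FROM fact_shipments GROUP BY product_id)
SELECT p.name, COALESCE(s.sold, 0), COALESCE(h.shipped, 0)
FROM dim_product p
LEFT JOIN s ON s.product_id = p.product_id
LEFT JOIN h ON h.product_id = p.product_id
ORDER BY p.name
""").fetchall()
```

Aggregating each fact table before joining is deliberate. Joining fact_sales directly to fact_shipments on product_id would pair every sales row with every shipment row for the same product and inflate the sums (here, widget's 6 shipped units would be counted twice).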

Snowflake Schema

In computing, a snowflake schema is a logical arrangement of tables in a multidimensional database such that the entity relationship diagram resembles a snowflake shape. The snowflake schema is represented by centralized fact tables which are connected to multiple dimensions. "Snowflaking" is a method of normalizing the dimension tables in a star schema. When it is completely normalized along all the dimension tables, the resultant structure resembles a snowflake with the fact table in the middle. The principle behind snowflaking is normalization of the dimension tables by removing low-cardinality attributes and forming separate tables. The snowflake schema is similar to the star schema. However, in the snowflake schema, dimensions are normalized into multiple related tables, whereas the star schema's dimensions are denormalized, with each dimension represented by a single table. A complex snowflake shape emerges when the dimensions of a snowflake schema are elaborate, having ...
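A small sqlite3 sketch of snowflaking, assuming a hypothetical product dimension whose low-cardinality category attribute has been moved into its own dim_category table. The point to notice is that grouping by category now needs one more join than it would in a star schema, where category_name would sit directly in dim_product.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Snowflaked product dimension: 'category' lives in its own table.
CREATE TABLE dim_category (category_id INTEGER PRIMARY KEY, category_name TEXT);
CREATE TABLE dim_product  (product_id INTEGER PRIMARY KEY, product_name TEXT,
                           category_id INTEGER REFERENCES dim_category(category_id));
CREATE TABLE fact_sales   (product_id INTEGER REFERENCES dim_product(product_id),
                           amount REAL);

INSERT INTO dim_category VALUES (1, 'hardware'), (2, 'software');
INSERT INTO dim_product  VALUES (10, 'keyboard', 1), (11, 'mouse', 1), (12, 'editor', 2);
INSERT INTO fact_sales   VALUES (10, 30.0), (11, 15.0), (12, 50.0), (10, 30.0);
""")

# Fact -> product -> category: two joins to reach the category name.
by_category = conn.execute("""
SELECT c.category_name, SUM(f.amount)
FROM fact_sales f
JOIN dim_product  p ON p.product_id  = f.product_id
JOIN dim_category c ON c.category_id = p.category_id
GROUP BY c.category_name
ORDER BY c.category_name
""").fetchall()
```

The trade-off is visible in the schema: each category name is stored once instead of on every product row, at the cost of the extra join at query time.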

Reverse Star Schema

The reverse star schema is a schema optimized for fast retrieval of large quantities of descriptive data. The design was derived from a warehouse star schema, and its adaptation for descriptive data required that certain key characteristics of the classic star schema be "reversed".

The relation of the central table to those in dimension tables is one-to-many, or in some cases many-to-many, rather than many-to-one; the primary keys of the central table are the foreign keys in dimension tables, and the main tables are, in general, smaller than the dimension tables. Main table columns are typically the source of query constraints, as opposed to dimension tables in the classical star schema. By starting queries with the smaller table, many results are filtered out early in the querying process, thereby streamlining the entire search path. To add further flexibility, more than one main table is allowed, with main and submain tables having a one-to-many relation. Each main t ...
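The reversed key direction can be sketched in sqlite3 as follows. The tables main_item and item_description and their contents are hypothetical. The small main table carries the query constraints, and each descriptive row points back to it, so the main-to-description relation is one-to-many. The CTE expresses "filter the main table first"; SQLite's query planner still chooses the actual execution order.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Small central (main) table whose columns carry the query constraints.
CREATE TABLE main_item (item_id INTEGER PRIMARY KEY, kind TEXT, active INTEGER);

-- Larger descriptive table: the main table's primary key is the foreign
-- key here, so one main row maps to many descriptive rows.
CREATE TABLE item_description (
    item_id INTEGER REFERENCES main_item(item_id),
    lang TEXT,
    body TEXT);

INSERT INTO main_item VALUES (1, 'book', 1), (2, 'book', 0), (3, 'film', 1);
INSERT INTO item_description VALUES
  (1, 'en', 'A novel'), (1, 'fr', 'Un roman'),
  (2, 'en', 'Out of print'), (3, 'en', 'A documentary');
""")

# Constrain the small main table first, then fetch descriptive rows.
rows = conn.execute("""
WITH selected AS (
    SELECT item_id FROM main_item WHERE kind = 'book' AND active = 1
)
SELECT d.item_id, d.lang, d.body
FROM selected s
JOIN item_description d ON d.item_id = s.item_id
ORDER BY d.item_id, d.lang
""").fetchall()
```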

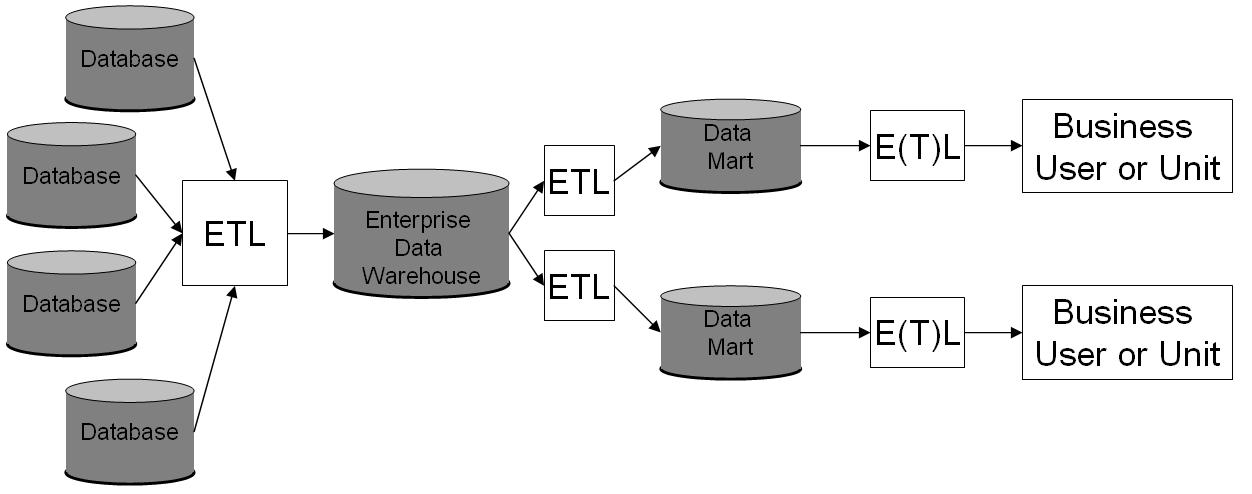

Data Warehouse

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in one single place, where it is used to create analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from operational systems (such as marketing or sales). The data may pass through an operational data store and may require additional data cleansing operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system.

The typical extract, transform, load (ETL)-based data warehouse uses ...
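The ETL approach mentioned above can be sketched in a few lines of Python. The source rows, the cleansing rules (trim and title-case city names, drop unparsable amounts, blank cities, and duplicate order IDs), and the sales table are all hypothetical; an in-memory sqlite3 database stands in for the warehouse.

```python
import sqlite3

# Hypothetical rows extracted from an operational sales system.
source_rows = [
    {"order_id": "1", "city": " lyon ", "amount": "19.90"},
    {"order_id": "2", "city": "OSLO",   "amount": "5.00"},
    {"order_id": "2", "city": "OSLO",   "amount": "5.00"},   # duplicate
    {"order_id": "3", "city": "",       "amount": "n/a"},    # unusable
]

def extract():
    """Extract: in practice this would query a source system or read files."""
    return list(source_rows)

def transform(rows):
    """Transform: cleanse values, convert types, and drop duplicates."""
    seen, clean = set(), []
    for r in rows:
        try:
            amount = float(r["amount"])
        except ValueError:
            continue                      # reject rows with a bad measure
        city = r["city"].strip().title()
        if not city or r["order_id"] in seen:
            continue                      # reject blanks and duplicates
        seen.add(r["order_id"])
        clean.append((int(r["order_id"]), city, amount))
    return clean

def load(conn, rows):
    """Load: write the cleansed rows into a warehouse table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales "
                 "(order_id INTEGER PRIMARY KEY, city TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract()))
loaded = warehouse.execute("SELECT * FROM sales ORDER BY order_id").fetchall()
```

In an ELT variant, the raw rows would be loaded first and the cleansing step would then run inside the warehouse itself, typically as SQL.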

Data Integrity

Data integrity is the maintenance of, and the assurance of, data accuracy and consistency over its entire life cycle, and is a critical aspect of the design, implementation, and usage of any system that stores, processes, or retrieves data. The term is broad in scope and may have widely different meanings depending on the specific context, even under the same general umbrella of computing. It is at times used as a proxy term for data quality, while data validation is a prerequisite for data integrity. Data integrity is the opposite of data corruption. The overall intent of any data integrity technique is the same: ensure data is recorded exactly as intended (such as a database correctly rejecting mutually exclusive possibilities) and, upon later retrieval, ensure the data is the same as when it was originally recorded. In short, data integrity aims to prevent unintentional changes to information. Data integrity is not to be confus ...
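Both halves of that intent can be illustrated with the Python standard library. The orders table and its shipped/cancelled rule are hypothetical. A CHECK constraint makes the database reject a record with two mutually exclusive states, and a SHA-256 digest recorded at write time lets a reader detect whether the data has changed since.

```python
import hashlib
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical rule: an order cannot be both shipped and cancelled.
conn.execute("""
CREATE TABLE orders (
    id        INTEGER PRIMARY KEY,
    shipped   INTEGER NOT NULL CHECK (shipped IN (0, 1)),
    cancelled INTEGER NOT NULL CHECK (cancelled IN (0, 1)),
    CHECK (NOT (shipped = 1 AND cancelled = 1))
)
""")
conn.execute("INSERT INTO orders VALUES (1, 1, 0)")       # accepted
try:
    conn.execute("INSERT INTO orders VALUES (2, 1, 1)")   # contradictory
    rejected = False
except sqlite3.IntegrityError:
    rejected = True                                        # constraint fired

# Record a digest when the data is written...
payload = b"order 1: shipped"
stored_digest = hashlib.sha256(payload).hexdigest()

def verify(data: bytes, expected: str) -> bool:
    """...and on retrieval, check the data still hashes to that digest."""
    return hashlib.sha256(data).hexdigest() == expected
```

A checksum like this only detects changes; it cannot say which change happened or restore the original value.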


OLAP Cube

An OLAP cube is a multi-dimensional array of data. Online analytical processing (OLAP) is a computer-based technique of analyzing data to look for insights. The term "cube" here refers to a multi-dimensional dataset, which is also sometimes called a hypercube if the number of dimensions is greater than three.

A cube can be considered a multi-dimensional generalization of a two- or three-dimensional spreadsheet. For example, a company might wish to summarize financial data by product, by time period, and by city to compare actual and budget expenses. Product, time, city and scenario (actual and budget) are the data's dimensions. "Cube" is a shorthand for "multidimensional dataset", given that data can have an arbitrary number of dimensions. The term hypercube is sometimes used, especially for data with more than three dimensions. A cube is not a "cube" in the strict mathematical sense, as the sides are not necessarily all equal. But this term is used widel ...
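The product/time/city/scenario example above can be sketched as a dense four-dimensional array in plain Python. The member labels, expense values, and the flat row-major layout with strides are assumptions made for illustration; real OLAP engines use their own (often sparse or compressed) storage. Each dimension is one axis, each member is an index on that axis, and comparing actual with budget means reading two cells that differ only on the scenario axis.

```python
# Dimension members (hypothetical); each dimension is one axis of the cube.
dims = {
    "product":  ["laptop", "phone"],
    "period":   ["2024-Q1", "2024-Q2"],
    "city":     ["Paris", "Rome"],
    "scenario": ["actual", "budget"],
}
axes = list(dims)

# Dense 4-D array stored flat in row-major order; strides map a
# coordinate on each axis to an offset in the flat list.
shape = [len(dims[a]) for a in axes]
strides = [1] * len(shape)
for i in range(len(shape) - 2, -1, -1):
    strides[i] = strides[i + 1] * shape[i + 1]
cells = [0.0] * (strides[0] * shape[0])

def offset(**coord):
    """Translate member labels (one per axis) into a flat-array position."""
    return sum(dims[a].index(coord[a]) * s for a, s in zip(axes, strides))

def set_cell(value, **coord):
    cells[offset(**coord)] = value

def get_cell(**coord):
    return cells[offset(**coord)]

# Populate a few expense cells; unset cells stay 0.0.
set_cell(120.0, product="laptop", period="2024-Q1", city="Paris", scenario="actual")
set_cell(100.0, product="laptop", period="2024-Q1", city="Paris", scenario="budget")
set_cell(40.0,  product="phone",  period="2024-Q1", city="Rome",  scenario="actual")
set_cell(50.0,  product="phone",  period="2024-Q1", city="Rome",  scenario="budget")

def variance_by_product(period):
    """Actual minus budget per product for one period, summed over cities."""
    return {
        p: sum(get_cell(product=p, period=period, city=c, scenario="actual")
               - get_cell(product=p, period=period, city=c, scenario="budget")
               for c in dims["city"])
        for p in dims["product"]
    }
```

For the data above, `variance_by_product("2024-Q1")` gives 20.0 for laptop (over budget in Paris) and -10.0 for phone (under budget in Rome).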

Database Normalization

Database normalization (or normalisation) is the process of structuring a relational database in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity. It was first proposed by British computer scientist Edgar F. Codd as part of his relational model. Normalization entails organizing the columns (attributes) and tables (relations) of a database to ensure that their dependencies are properly enforced by database integrity constraints. It is accomplished by applying some formal rules either by a process of synthesis (creating a new database design) or decomposition (improving an existing database design).

A basic objective of the first normal form defined by Codd in 1970 was to permit data to be queried and manipulated using a "universal data sub-language" grounded in first-order logic. An example of such a language is SQL, though it is one that Codd regarded as seriou ...
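Decomposition can be illustrated with a minimal sketch on hypothetical order data. Here customer_city depends on customer_id rather than on the key order_id, a transitive dependency (order_id → customer_id → customer_city) of the kind third normal form removes. The sketch splits the flat rows into two relations and checks that joining them back reproduces the original rows, so the decomposition is lossless.

```python
# Hypothetical flat table: customer_city is repeated on every order row.
orders_flat = [
    {"order_id": 1, "customer_id": "C1", "customer_city": "Leeds", "total": 40},
    {"order_id": 2, "customer_id": "C1", "customer_city": "Leeds", "total": 15},
    {"order_id": 3, "customer_id": "C2", "customer_city": "York",  "total": 22},
]

def decompose(rows):
    """Split into orders(order_id, customer_id, total) and customers(customer_id -> city)."""
    orders = [{k: r[k] for k in ("order_id", "customer_id", "total")} for r in rows]
    customers = {}
    for r in rows:
        city = customers.setdefault(r["customer_id"], r["customer_city"])
        # The flat layout allows contradictory copies (an update anomaly);
        # refuse to decompose if that has happened.
        if city != r["customer_city"]:
            raise ValueError(f"conflicting city for {r['customer_id']}")
    return orders, customers

def rejoin(orders, customers):
    """Natural join on customer_id, used to check the split is lossless."""
    return [{**o, "customer_city": customers[o["customer_id"]]} for o in orders]

orders, customers = decompose(orders_flat)
```

After the split, each customer's city is stored exactly once, so changing it cannot leave stale copies on other order rows.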