
Spinlock

In software engineering, a spinlock is a lock that causes a thread trying to acquire it to simply wait in a loop ("spin") while repeatedly checking whether the lock is available. Since the thread remains active but is not performing a useful task, the use of such a lock is a kind of busy waiting. Once acquired, spinlocks will usually be held until they are explicitly released, although in some implementations they may be automatically released if the thread being waited on (the one that holds the lock) blocks or "goes to sleep". Because they avoid overhead from operating system process rescheduling or context switching, spinlocks are efficient if threads are likely to be blocked for only short periods. For this reason, operating-system kernels often use spinlocks. However, spinlocks become wasteful if held for longer durations, as they may prevent other threads from running and require rescheduling. The longer a thread holds a lock, the greater the risk that the thread will be ...
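As a rough illustration of the acquire loop described above, the following Python sketch spins on a non-blocking try-acquire rather than sleeping. The names `SpinLock` and `run_counter` are hypothetical, and using `threading.Lock` purely as an atomic test-and-set flag is an assumption of this sketch; real spinlocks are normally built on hardware atomic instructions.

```python
import threading


class SpinLock:
    """Toy spinlock: retries a non-blocking acquire in a loop instead of sleeping."""

    def __init__(self):
        # Assumption: threading.Lock serves only as an atomic test-and-set flag.
        self._flag = threading.Lock()

    def acquire(self):
        # Busy-wait ("spin") until the flag is obtained.
        while not self._flag.acquire(blocking=False):
            pass

    def release(self):
        self._flag.release()


def run_counter(num_threads=4, increments=1000):
    """Increment a shared counter from several threads under the spinlock."""
    lock = SpinLock()
    total = 0

    def worker():
        nonlocal total
        for _ in range(increments):
            lock.acquire()
            try:
                total += 1  # short critical section, the case spinlocks suit best
            finally:
                lock.release()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total
```

Calling `run_counter(4, 1000)` should return the full count of 4000, since every increment happens while the lock is held. The critical section is deliberately tiny; holding the lock longer would leave the other threads spinning without doing useful work.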

Busy Waiting

In computer science and software engineering, busy-waiting, busy-looping or spinning is a technique in which a process repeatedly checks to see if a condition is true, such as whether keyboard input or a lock is available. Spinning can also be used to generate an arbitrary time delay, a technique that was necessary on systems that lacked a method of waiting a specific length of time. Processor speeds vary greatly from computer to computer, especially as some processors are designed to dynamically adjust speed based on current workload. Consequently, spinning as a time-delay technique can produce inconsistent or even unpredictable results on different systems unless code is included to determine the time a processor takes to execute a "do nothing" loop, or the looping code explicitly checks a real-time clock. In most cases spinning is considered an anti-pattern and should be avoided, as processor time that could be used to execute a different task is instead wasted on useless act ...
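The clock-checking variant of a spin delay mentioned above might look like the following Python sketch. The function name `spin_delay` is hypothetical, and `time.perf_counter` stands in for the real-time clock; the returned iteration count is included only to show how much work the loop wastes.

```python
import time


def spin_delay(seconds):
    """Busy-wait for roughly `seconds` by polling a clock instead of counting loops."""
    deadline = time.perf_counter() + seconds
    spins = 0
    while time.perf_counter() < deadline:
        spins += 1  # no useful work is done here; the CPU simply stays busy
    return spins
```

Because the loop compares against a clock, the delay length does not depend on processor speed, although the number of wasted iterations does. Where a sleeping wait such as `time.sleep` is available, it is generally preferable because it frees the processor for other tasks.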

Software Engineering

Software engineering is a branch of both computer science and engineering focused on designing, developing, testing, and maintaining software applications. It involves applying engineering principles and computer programming expertise to develop software systems that meet user needs. The terms "programmer" and "coder" overlap "software engineer", but they imply only the construction aspect of a typical software engineer workload. A software engineer applies a software development process, which involves defining, implementing, testing, managing, and maintaining software systems, as well as developing the software development process itself. History: Beginning in the 1960s, software engineering was recognized as a separate field of engineering. The development of software engineering was seen as a struggle. Problems included software that was over ...

80386

The Intel 386, originally released as the 80386 and later renamed i386, is the third-generation x86 architecture microprocessor from Intel. It was the first 32-bit processor in the line, making it a significant evolution in the x86 architecture. Pre-production samples of the 386 were released to select developers in 1985, while mass production commenced in 1986. The 386 was the central processing unit (CPU) of many workstations and high-end personal computers of the time. The 386 began to fall out of public use starting with the release of the i486 processor in 1989, while in embedded systems the 386 remained in widespread use until Intel finally discontinued it in 2007. Compared to its predecessor, the Intel 80286 ("286"), the 80386 added a three-stage instruction pipeline, which brings the total up to a six-stage instruction pipeline, extended the architecture from 16 bits to 32 bits, and added an on-chip memory management unit. This paging translation unit made it much easier ...

Glibc

The GNU C Library, commonly known as glibc, is the GNU Project implementation of the C standard library. It provides a wrapper around the system calls of the Linux kernel and other kernels for application use. Despite its name, it now also directly supports C++ (and, indirectly, other programming languages). It was started in the 1980s by the Free Software Foundation (FSF) for the GNU operating system. glibc is free software released under the GNU Lesser General Public License. The GNU C Library project provides the core libraries for the GNU system, as well as many systems that use Linux as the kernel. These libraries provide critical APIs including ISO C11, POSIX.1-2008, BSD, OS-specific APIs and more. These APIs include such foundational facilities as open, read, write, malloc, printf, getaddrin ...

Transactional Memory

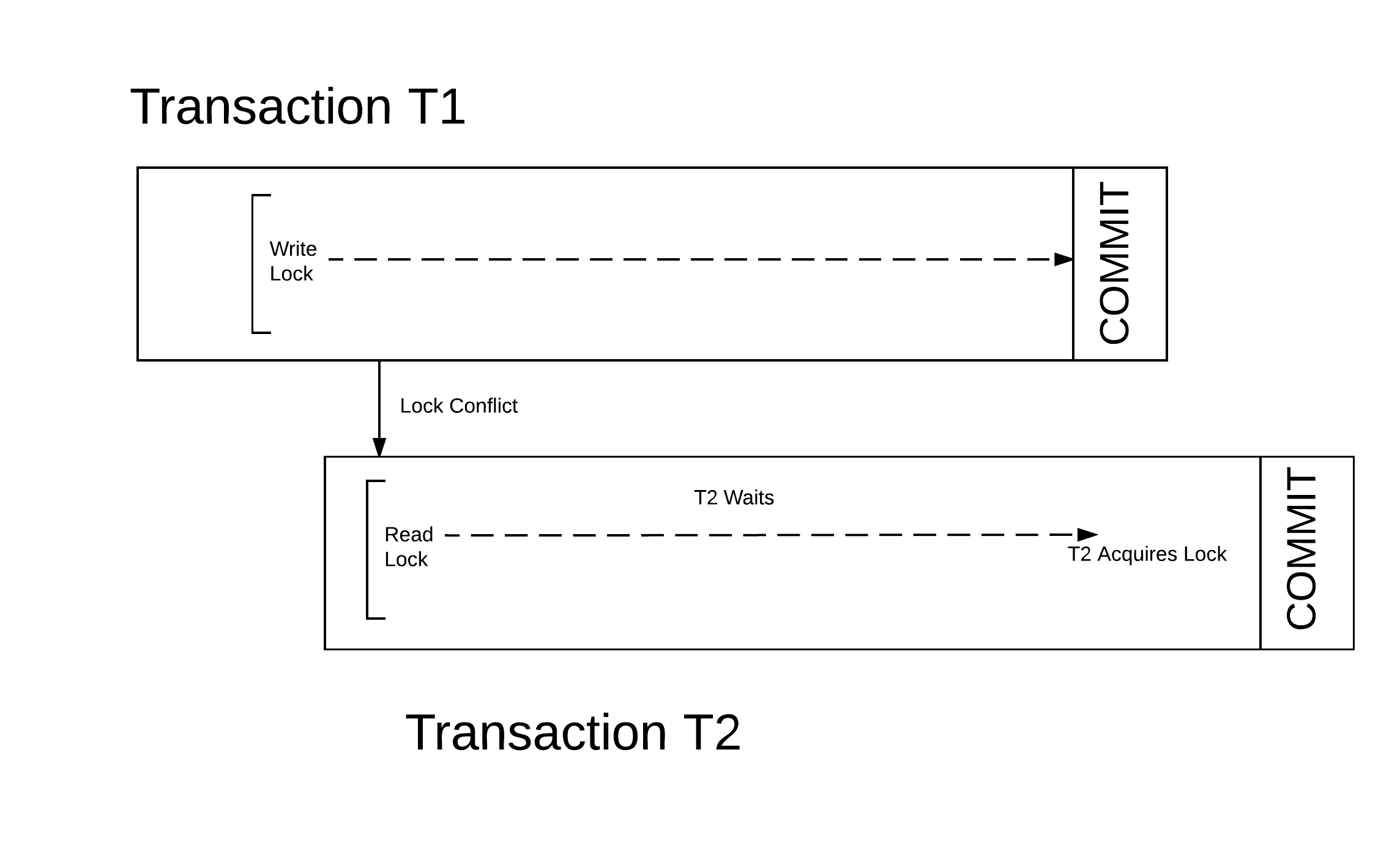

In computer science and engineering, transactional memory attempts to simplify concurrent programming by allowing a group of load and store instructions to execute in an atomic way. It is a concurrency control mechanism analogous to database transactions for controlling access to shared memory in concurrent computing. Transactional memory systems provide high-level abstraction as an alternative to low-level thread synchronization. This abstraction allows for coordination between concurrent reads and writes of shared data in parallel systems. Motivation: In concurrent programming, synchronization is required when parallel threads attempt to access a shared resource. Low-level thread synchronization constructs such as locks are pessimistic and prohibit threads that are outside a critical section from running the code protected by the critical section. The process of applying and releasing locks often ...

Transactional Synchronization Extensions

Transactional Synchronization Extensions (TSX), also called Transactional Synchronization Extensions New Instructions (TSX-NI), is an extension to the x86 instruction set architecture (ISA) that adds hardware transactional memory support, speeding up execution of multi-threaded software through lock elision. According to different benchmarks, TSX/TSX-NI can provide around 40% faster application execution in specific workloads, and 4–5 times more database transactions per second (TPS). TSX/TSX-NI was documented by Intel in February 2012, and debuted in June 2013 on selected Intel microprocessors based on the Haswell microarchitecture. Haswell processors below 45xx as well as R-series and K-series (with unlocked multiplier) SKUs do not support TSX/TSX-NI. In August 2014, Intel announced a bug in the TSX/TSX-NI implementation on current steppings of Haswell, Haswell-E, Haswell-EP and early Broadwell CPUs, which resulted in disabling the TSX/TSX-NI feature on affected CPUs ...

MESI

The MESI protocol is an invalidate-based cache coherence protocol, and is one of the most common protocols that support write-back caches. It is also known as the Illinois protocol due to its development at the University of Illinois at Urbana-Champaign. Write-back caches can save considerable bandwidth generally wasted on a write-through cache. There is always a dirty state present in write-back caches that indicates that the data in the cache is different from that in the main memory. The Illinois Protocol requires a cache-to-cache transfer on a miss if the block resides in another cache. This protocol reduces the number of main memory transactions with respect to the MSI protocol. This marks a significant improvement in performance. States: The letters in the acronym MESI represent four exclusive states that a cache line can be marked with (encoded using two additional bits). Modified (M): The cache line is present only in the current cache, and is "dirty" - it has been m ...
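The four states can be pictured as a small state machine for a single cache line, seen from one cache. The Python sketch below is a simplified textbook-style model, not a description of any particular processor: the names `State` and `next_state`, the four event strings, and the `others_have_copy` parameter are all assumptions made for illustration, and bus messages and write-backs are not modeled.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"


def next_state(state, event, others_have_copy=False):
    """Return the new state of one cache line in one cache after `event`.

    Events (hypothetical names): "local_read", "local_write",
    "remote_read", "remote_write".
    """
    if event == "local_write":
        # Writing makes this the only valid, dirty copy.
        return State.MODIFIED
    if event == "remote_write":
        # Another cache wrote the line, so this copy is invalidated.
        return State.INVALID
    if event == "local_read":
        if state is State.INVALID:
            # A miss: the line is shared if another cache also holds it.
            return State.SHARED if others_have_copy else State.EXCLUSIVE
        return state  # a read hit leaves the state unchanged
    if event == "remote_read":
        if state is State.INVALID:
            return State.INVALID
        # A valid copy read by another cache becomes shared.
        return State.SHARED
    raise ValueError(f"unknown event: {event}")
```

For example, `next_state(State.INVALID, "local_read")` yields `State.EXCLUSIVE`, and a subsequent `"local_write"` moves the line to `State.MODIFIED` without involving other caches in this model.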

Bus (computing)

In computer architecture, a bus (historically also called a data highway or databus) is a communication system that transfers data between components inside a computer or between computers. It encompasses both hardware (e.g., wires, optical fiber) and software, including communication protocols. At its core, a bus is a shared physical pathway, typically composed of wires, traces on a circuit board, or busbars, that allows multiple devices to communicate. To prevent conflicts and ensure orderly data exchange, buses rely on a communication protocol to manage which device can transmit data at a given time. Buses are categorized based on their role, such as system buses (also known as internal buses, internal data buses, or memory buses) connecting the CPU and memory. Expansion buses, also called peripheral buses, extend the system to connect additional devices, including peripherals. Examples of widely ...

IA-64

IA-64 (Intel Itanium architecture) is the instruction set architecture (ISA) of the discontinued Itanium family of 64-bit Intel microprocessors. The basic ISA specification originated at Hewlett-Packard (HP), and was subsequently implemented by Intel in collaboration with HP. The first Itanium processor, codenamed Merced, was released in 2001. The Itanium architecture is based on explicit instruction-level parallelism, in which the compiler decides which instructions to execute in parallel. This contrasts with superscalar architectures, which depend on the processor to manage instruction dependencies at runtime. In all Itanium models, up to and including Tukwila, cores execute up to six instructions per cycle. In 2008, Itanium was the fourth-most deployed microprocessor architecture for enterprise-class systems, behind x86-64, Power ISA, and SPARC. In 2019, Intel announced the discontinuation of the last of the CPUs supporting the IA-64 architecture. Microsoft Win ...

Symmetric Multiprocessing

Symmetric multiprocessing or shared-memory multiprocessing (SMP) involves a multiprocessor computer hardware and software architecture where two or more identical processors are connected to a single, shared main memory, have full access to all input and output devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes. Most multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors. Professor John D. Kubiatowicz considers SMP systems to traditionally contain processors without caches. Culler and Pal-Singh in their 1998 book "Parallel Computer Architecture: A Hardware/Software Approach" mention: "The term SMP is widely used but causes a bit of confusion. ... The more precise description of what is intended by SMP is a shared memory multiprocessor where the cost of accessing a memory location ...

I486

The Intel 486, officially named i486 and also known as 80486, is a microprocessor introduced in 1989. It is a higher-performance follow-up to the Intel 386. It represents the fourth generation of binary compatible CPUs following the 8086 of 1978, the Intel 80286 of 1982, and 1985's i386. It was the first tightly pipelined x86 design as well as the first x86 chip to include more than one million transistors. It offered a large on-chip cache and an integrated floating-point unit. When it was announced, the initial performance was published as between 15 and 20 VAX MIPS, between 37,000 and 49,000 dhrystones per second, and between 6.1 and 8.2 double-precision megawhetstones per second for both the 25 and 33 MHz versions. A typical 50 MHz i486 executes 41 million instructions per second (Dhrystone MIPS) and has a SPEC integer rating of 27.9. Chen, ...

Pentium (brand)

Pentium is a series of x86 architecture-compatible microprocessors produced by Intel from 1993 to 2023. The original Pentium was Intel's fifth generation processor, succeeding the i486; Pentium was Intel's flagship processor line for over a decade until the introduction of the Intel Core line in 2006. Pentium-branded processors released from 2009 onwards were considered entry-level products positioned above the low-end Atom and Celeron series, but below the faster Core lineup and workstation/server Xeon series. The later Pentiums, which have little more than their name in common with earlier Pentiums, were based on both the architecture used in Atom and that of Core processors. In the case of Atom architectures, Pentiums were the highest performance implementations of the architecture. Pentium processors with Core architectures prior to 2017 were distinguished from the faster, higher-end i-series processors by lower clock rates and disabling some features, such as hyper ...