|

Semantic Triple

A semantic triple, or RDF triple or simply triple, is the atomic data entity in the Resource Description Framework (RDF) data model. As its name indicates, a triple is a sequence of three entities that codifies a statement about semantic data in the form of subject–predicate–object expressions (e.g., "Bob is 35", or "Bob knows John"). Subject, predicate and object This format enables knowledge to be represented in a machine-readable way. Particularly, every part of an RDF triple is individually addressable via unique URIs—for example, the statement "Bob knows John" might be represented in RDF as: http://example.name#BobSmith12 http://xmlns.com/foaf/spec/#term_knows http://example.name#JohnDoe34. Given this precise representation, semantic data can be unambiguously queried and reasoned about. The components of a triple, such as the statement "The sky has the color blue", consist of a subject ("the sky"), a predicate ("has the color"), and an object ("blue"). This is ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Resource Description Framework

The Resource Description Framework (RDF) is a method to describe and exchange graph data. It was originally designed as a data model for metadata by the World Wide Web Consortium (W3C). It provides a variety of syntax notations and formats, of which the most widely used is Turtle ( Terse RDF Triple Language). RDF is a directed graph composed of triple statements. An RDF graph statement is represented by: (1) a node for the subject, (2) an arc from subject to object, representing a predicate, and (3) a node for the object. Each of these parts can be identified by a Uniform Resource Identifier (URI). An object can also be a literal value. This simple, flexible data model has a lot of expressive power to represent complex situations, relationships, and other things of interest, while also being appropriately abstract. RDF was adopted as a W3C recommendation in 1999. The RDF 1.0 specification was published in 2004, and the RDF 1.1 specification in 2014. SPARQL is a standard query ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

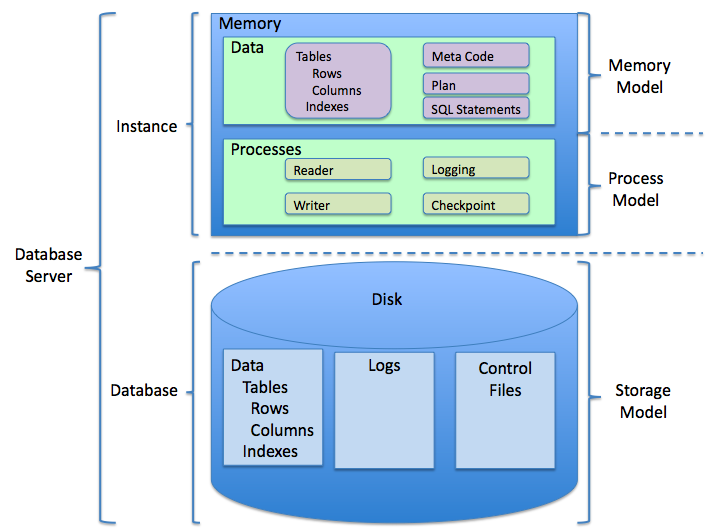

Relational Database

A relational database (RDB) is a database based on the relational model of data, as proposed by E. F. Codd in 1970. A Relational Database Management System (RDBMS) is a type of database management system that stores data in a structured format using rows and columns. Many relational database systems are equipped with the option of using SQL (Structured Query Language) for querying and updating the database. History The concept of relational database was defined by E. F. Codd at IBM in 1970. Codd introduced the term ''relational'' in his research paper "A Relational Model of Data for Large Shared Data Banks". In this paper and later papers, he defined what he meant by ''relation''. One well-known definition of what constitutes a relational database system is composed of Codd's 12 rules. However, no commercial implementations of the relational model conform to all of Codd's rules, so the term has gradually come to describe a broader class of database systems, which at a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

World Wide Web Consortium

The World Wide Web Consortium (W3C) is the main international standards organization for the World Wide Web. Founded in 1994 by Tim Berners-Lee, the consortium is made up of member organizations that maintain full-time staff working together in the development of standards for the World Wide Web. W3C has 350 members. The organization has been led by CEO Seth Dobbs since October 2023. W3C also engages in education and outreach, develops software and serves as an open forum for discussion about the Web. History The World Wide Web Consortium (W3C) was founded in 1994 by Tim Berners-Lee after he left the European Organization for Nuclear Research (CERN) in October 1994. It was founded at the Massachusetts Institute of Technology (MIT) Laboratory for Computer Science with support from the European Commission, and the Defense Advanced Research Projects Agency, which had pioneered the ARPANET, the most direct predecessor to the modern Internet. It was located in Technology Square (Ca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Link Relation

A link relation is a descriptive attribute attached to a hyperlink in order to define the type of the link, or the relationship between the source and destination resources. The attribute can be used by automated systems, or can be presented to a user in a different way. In HTML these are designated with the attribute on , , or elements. Example uses include the standard way of referencing CSS, , which indicates that the external resource linked to with the attribute is a stylesheet, so a web browser will generally fetch this file to render the page. Another example is for the popular favicon icon. Link relations are used in some microformats (e.g. for tagging), in XHTML Friends Network (XFN), and in the Atom standard, in XLink, as well as in HTML. Standardized link relations are one of the foundations of HATEOAS as they allow the user agent to understand the meaning of the available state transitions in a Representational State Transfer system. The Internet Engineerin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Graph Database

A graph database (GDB) is a database that uses graph structures for semantic queries with nodes, edges, and properties to represent and store data. A key concept of the system is the graph (or edge or relationship). The graph relates the data items in the store to a collection of nodes and edges, the edges representing the relationships between the nodes. The relationships allow data in the store to be linked together directly and, in many cases, retrieved with one operation. Graph databases hold the relationships between data as a priority. Querying relationships is fast because they are perpetually stored in the database. Relationships can be intuitively visualized using graph databases, making them useful for heavily inter-connected data. Graph databases are commonly referred to as a NoSQL database. Graph databases are similar to 1970s network model databases in that both represent general graphs, but network-model databases operate at a lower level of abstraction and lac ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

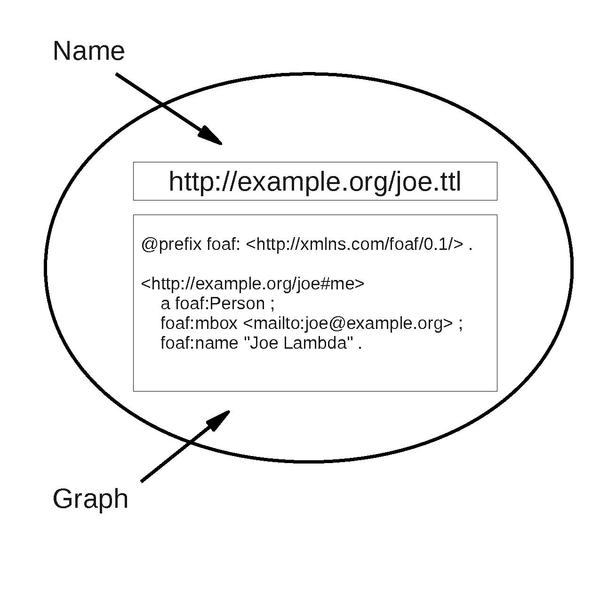

Named Graph

Named graphs are a key concept of Semantic Web architecture in which a set of Resource Description Framework statements (a graph) are identified using a URI, allowing descriptions to be made of that set of statements such as context, provenance information or other such metadata. Named graphs are a simple extension of the RDF data model through which graphs can be created but the model lacks an effective means of distinguishing between them once published on the Web at large. Named graphs and HTTP One conceptualization of the Web is as a graph of document nodes identified with URIs and connected by hyperlink arcs which are expressed within the HTML documents. By doing an HTTP GET on a URI (usually via a Web browser), a somehow-related document may be retrieved. This "follow your nose" approach also applies to RDF documents on the Web in the form of Linked Data, where typically an RDF syntax is used to express data as a series of statements, and URIs within the RDF point to ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Commonsense Reasoning

In artificial intelligence (AI), commonsense reasoning is a human-like ability to make presumptions about the type and essence of ordinary situations humans encounter every day. These assumptions include judgments about the nature of physical objects, taxonomic properties, and peoples' intentions. A device that exhibits commonsense reasoning might be capable of drawing conclusions that are similar to humans' folk psychology (humans' innate ability to reason about people's behavior and intentions) and naive physics (humans' natural understanding of the physical world). Definitions and characterizations Some definitions and characterizations of common sense from different authors include: * "Commonsense knowledge includes the basic facts about events (including actions) and their effects, facts about knowledge and how it is obtained, facts about beliefs and desires. It also includes the basic facts about material objects and their properties." * "Commonsense knowledge differs from ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

What-if Analysis

Sensitivity analysis is the study of how the uncertainty in the output of a mathematical model or system (numerical or otherwise) can be divided and allocated to different sources of uncertainty in its inputs. This involves estimating sensitivity indices that quantify the influence of an input or group of inputs on the output. A related practice is uncertainty analysis, which has a greater focus on uncertainty quantification and propagation of uncertainty; ideally, uncertainty and sensitivity analysis should be run in tandem. Motivation A mathematical model (for example in biology, climate change, economics, renewable energy, agronomy...) can be highly complex, and as a result, its relationships between inputs and outputs may be faultily understood. In such cases, the model can be viewed as a black box, i.e. the output is an "opaque" function of its inputs. Quite often, some or all of the model inputs are subject to sources of uncertainty, including errors of measurement, err ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Predicate (mathematical Logic)

In logic, a predicate is a symbol that represents a property or a relation. For instance, in the first-order formula P(a), the symbol P is a predicate that applies to the individual constant a. Similarly, in the formula R(a,b), the symbol R is a predicate that applies to the individual constants a and b. According to Gottlob Frege, the meaning of a predicate is exactly a function from the domain of objects to the truth values "true" and "false". In the semantics of logic, predicates are interpreted as relations. For instance, in a standard semantics for first-order logic, the formula R(a,b) would be true on an interpretation if the entities denoted by a and b stand in the relation denoted by R. Since predicates are non-logical symbols, they can denote different relations depending on the interpretation given to them. While first-order logic only includes predicates that apply to individual objects, other logics may allow predicates that apply to collections of objects defined b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Database Scalability

In computing, a database is an organized collection of data or a type of data store based on the use of a database management system (DBMS), the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an application associated with the database. Before digital storage and retrieval of data have become widespread, index cards were used for data storage in a wide range of applications and environments: in the home to record and store recipes, shopping lists, contact information and other organizational data; in business to record presentation notes, project research and notes, and contact information; in schools as flash card ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Knowledge Base

In computer science, a knowledge base (KB) is a set of sentences, each sentence given in a knowledge representation language, with interfaces to tell new sentences and to ask questions about what is known, where either of these interfaces might use inference. It is a technology used to store complex structured data used by a computer system. The initial use of the term was in connection with expert systems, which were the first knowledge-based systems. Original usage of the term The original use of the term knowledge base was to describe one of the two sub-systems of an expert system. A knowledge-based system consists of a knowledge-base representing facts about the world and ways of reasoning about those facts to deduce new facts or highlight inconsistencies. Properties The term "knowledge-base" was coined to distinguish this form of knowledge store from the more common and widely used term ''database''. During the 1970s, virtually all large management information sy ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Software Engineering

Software engineering is a branch of both computer science and engineering focused on designing, developing, testing, and maintaining Application software, software applications. It involves applying engineering design process, engineering principles and computer programming expertise to develop software systems that meet user needs. The terms ''programmer'' and ''coder'' overlap ''software engineer'', but they imply only the construction aspect of a typical software engineer workload. A software engineer applies a software development process, which involves defining, Implementation, implementing, Software testing, testing, Project management, managing, and Software maintenance, maintaining software systems, as well as developing the software development process itself. History Beginning in the 1960s, software engineering was recognized as a separate field of engineering. The development of software engineering was seen as a struggle. Problems included software that was over ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |