|

Semantic Compression

In natural language processing, semantic compression is a process of compacting a lexicon used to build a textual document (or a set of documents) by reducing language heterogeneity, while maintaining text semantics. As a result, the same ideas can be represented using a smaller set of words. In most applications, semantic compression is a lossy compression, that is, increased prolixity does not compensate for the lexical compression, and an original document cannot be reconstructed in a reverse process. By generalization Semantic compression is basically achieved in two steps, using frequency dictionaries and semantic network: # determining cumulated term frequencies to identify target lexicon, # replacing less frequent terms with their hypernyms (generalization) from target lexicon. Step 1 requires assembling word frequencies and information on semantic relationships, specifically hyponymy. Moving upwards in word hierarchy, a cumulative concept frequency is calculating by a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation. History Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Information Retrieval

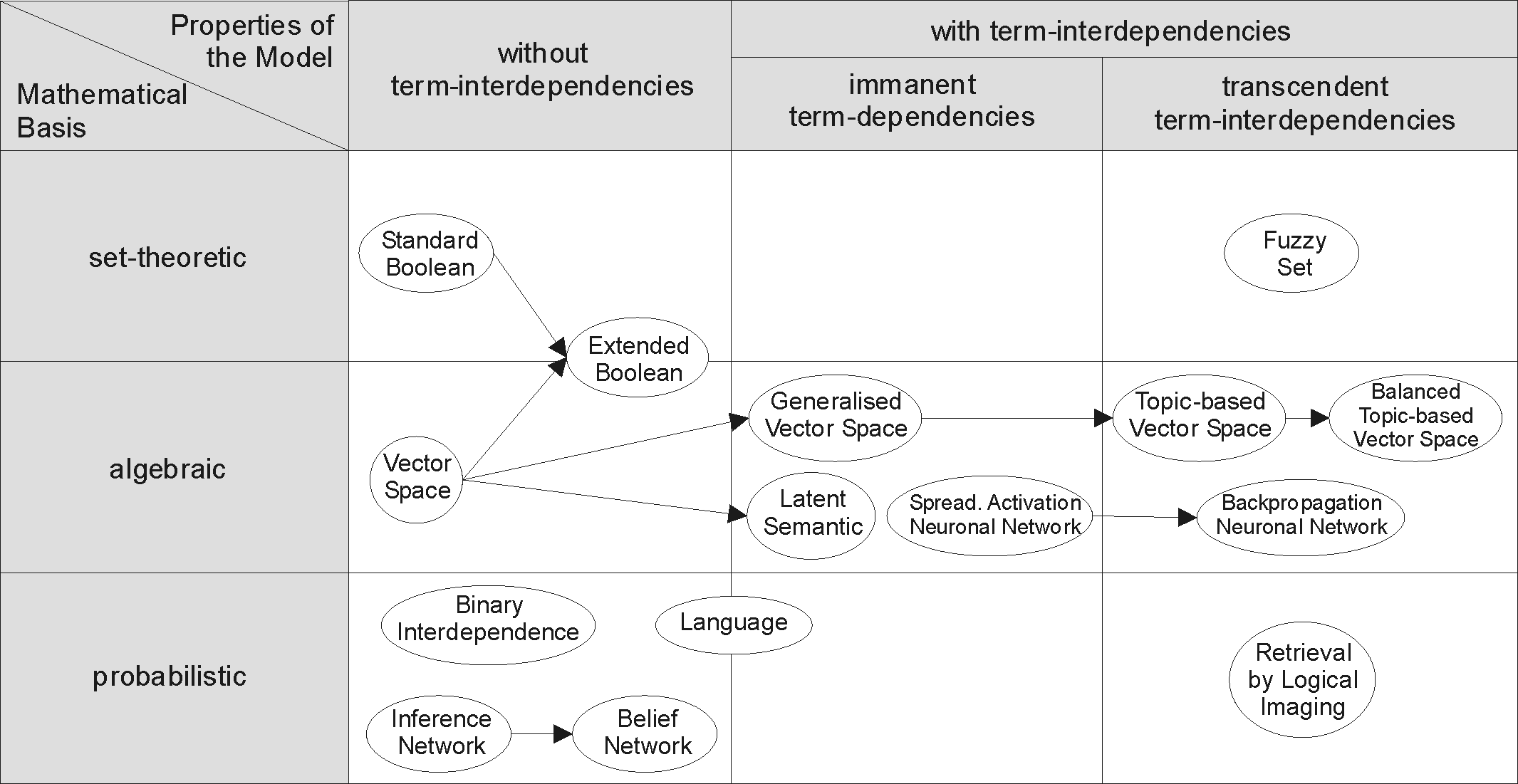

Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; stores and manages those documents. Web search engines are the most visible IR applications. Overview An information retrieval process begins when a user or searcher enters a query into the system. Queries are formal statements of information needs, for example search strings in web search engines. In inf ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation. History Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Information Retrieval Techniques

Information is an abstract concept that refers to that which has the power to inform. At the most fundamental level information pertains to the interpretation of that which may be sensed. Any natural process that is not completely random, and any observable pattern in any medium can be said to convey some amount of information. Whereas digital signals and other data use discrete signs to convey information, other phenomena and artifacts such as analog signals, poems, pictures, music or other sounds, and currents convey information in a more continuous form. Information is not knowledge itself, but the meaning that may be derived from a representation through interpretation. Information is often processed iteratively: Data available at one step are processed into information to be interpreted and processed at the next step. For example, in written text each symbol or letter conveys information relevant to the word it is part of, each word conveys information relevan ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Text Simplification

Text simplification is an operation used in natural language processing to change, enhance, classify, or otherwise process an existing body of human-readable text so its grammar and structure is greatly simplified while the underlying meaning and information remain the same. Text simplification is an important area of research because of communication needs in an increasingly complex and interconnected world more dominated by science, technology, and new media. But natural human languages pose huge problems because they ordinarily contain large vocabularies and complex constructions that machines, no matter how fast and well-programmed, cannot easily process. However, researchers have discovered that, to reduce linguistic diversity, they can use methods of semantic compression to limit and simplify a set of words used in given texts. Example Text simplification is illustrated with an example used by Siddharthan (2006). The first sentence contains two relative clauses and one con ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Quantities Of Information

Quantity or amount is a property that can exist as a multitude or magnitude, which illustrate discontinuity and continuity. Quantities can be compared in terms of "more", "less", or "equal", or by assigning a numerical value multiple of a unit of measurement. Mass, time, distance, heat, and angle are among the familiar examples of quantitative properties. Quantity is among the basic classes of things along with quality, substance, change, and relation. Some quantities are such by their inner nature (as number), while others function as states (properties, dimensions, attributes) of things such as heavy and light, long and short, broad and narrow, small and great, or much and little. Under the name of multitude comes what is discontinuous and discrete and divisible ultimately into indivisibles, such as: ''army, fleet, flock, government, company, party, people, mess (military), chorus, crowd'', and ''number''; all which are cases of collective nouns. Under the name of magnitud ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lexical Substitution

Lexical substitution is the task of identifying a substitute for a word in the context of a clause. For instance, given the following text: "After the ''match'', replace any remaining fluid deficit to prevent chronic dehydration throughout the tournament", a substitute of ''game'' might be given. Lexical substitution is strictly related to word sense disambiguation (WSD), in that both aim to determine the meaning of a word. However, while WSD consists of automatically assigning the appropriate sense from a fixed sense inventory, lexical substitution does not impose any constraint on which substitute to choose as the best representative for the word in context. By not prescribing the inventory, lexical substitution overcomes the issue of the granularity of sense distinctions and provides a level playing field for automatic systems that automatically acquire word senses (a task referred to as Word Sense Induction). Evaluation In order to evaluate automatic systems on lexical subs ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Information Theory

Information theory is the scientific study of the quantification (science), quantification, computer data storage, storage, and telecommunication, communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley, in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering (field), information engineering, and electrical engineering. A key measure in information theory is information entropy, entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a dice, die (with six equally likely outcomes). Some other important measures in information theory are mutual informat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Controlled Natural Language

Controlled natural languages (CNLs) are subsets of natural languages that are obtained by restricting the grammar and vocabulary in order to reduce or eliminate ambiguity and complexity. Traditionally, controlled languages fall into two major types: those that improve readability for human readers (e.g. non-native speakers), and those that enable reliable automatic semantic analysis of the language. The first type of languages (often called "simplified" or "technical" languages), for example ASD Simplified Technical English, Caterpillar Technical English, IBM's Easy English, are used in the industry to increase the quality of technical documentation, and possibly simplify the semi-automatic translation of the documentation. These languages restrict the writer by general rules such as "Keep sentences short", "Avoid the use of pronouns", "Only use dictionary-approved words", and "Use only the active voice". The second type of languages have a formal syntax and semantics, and can ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computation problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition, by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). One of the roles of computationa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Semantics

Semantics (from grc, σημαντικός ''sēmantikós'', "significant") is the study of reference, meaning, or truth. The term can be used to refer to subfields of several distinct disciplines, including philosophy Philosophy (from , ) is the systematized study of general and fundamental questions, such as those about existence, reason, knowledge, values, mind, and language. Such questions are often posed as problems to be studied or resolved. Some ..., linguistics and computer science. History In English, the study of meaning in language has been known by many names that involve the Ancient Greek word (''sema'', "sign, mark, token"). In 1690, a Greek rendering of the term ''semiotics'', the interpretation of signs and symbols, finds an early allusion in John Locke's ''An Essay Concerning Human Understanding'': The third Branch may be called [''simeiotikí'', "semiotics"], or the Doctrine of Signs, the most usual whereof being words, it is aptly enough ter ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Curse Of Dimensionality

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. The expression was coined by Richard E. Bellman when considering problems in dynamic programming. Dimensionally cursed phenomena occur in domains such as numerical analysis, sampling, combinatorics, machine learning, data mining and databases. The common theme of these problems is that when the dimensionality increases, the volume of the space increases so fast that the available data become sparse. In order to obtain a reliable result, the amount of data needed often grows exponentially with the dimensionality. Also, organizing and searching data often relies on detecting areas where objects form groups with similar properties; in high dimensional data, however, all objects appear to be sparse and dissimilar in many ways, which prevents co ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |