
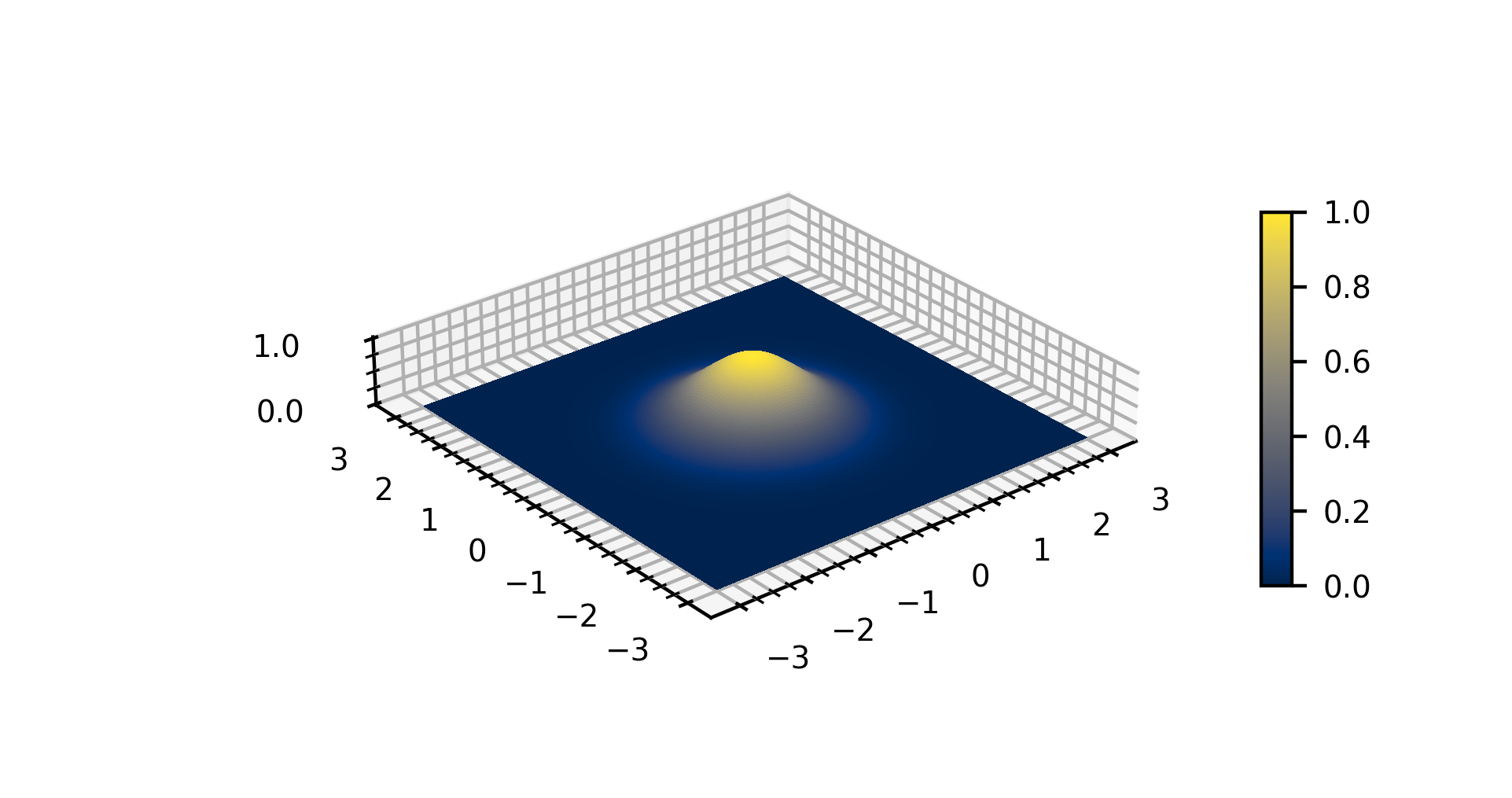

Radial Basis Function Kernel

In machine learning, the radial basis function kernel, or RBF kernel, is a popular kernel function used in various kernelized learning algorithms. In particular, it is commonly used in support vector machine classification. The RBF kernel on two samples $\mathbf{x} \in \mathbb{R}^k$ and $\mathbf{x'}$, represented as feature vectors in some *input space*, is defined as follows (Jean-Philippe Vert, Koji Tsuda, and Bernhard Schölkopf (2004). "A primer on kernel methods". *Kernel Methods in Computational Biology*):

$$K(\mathbf{x}, \mathbf{x'}) = \exp\left(-\frac{\|\mathbf{x} - \mathbf{x'}\|^2}{2\sigma^2}\right)$$

Here $\|\mathbf{x} - \mathbf{x'}\|^2$ is the squared Euclidean distance between the two feature vectors, and $\sigma$ is a free parameter. An equivalent definition uses the parameter $\gamma = \tfrac{1}{2\sigma^2}$:

$$K(\mathbf{x}, \mathbf{x'}) = \exp\left(-\gamma \|\mathbf{x} - \mathbf{x'}\|^2\right)$$

Since the value of the RBF kernel decreases with distance and ranges between zero (in the limit) and one (when $\mathbf{x} = \mathbf{x'}$), it has a ready interpretation as a similarity measure. The ...
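As a quick check of the two definitions above, here is a minimal plain-Python sketch (the helper names `squared_distance`, `rbf_kernel_sigma` and `rbf_kernel_gamma` and the sample vectors are illustrative, not from any library). It computes the kernel in both the $\sigma$ form and the $\gamma$ form and confirms they agree when $\gamma = 1/(2\sigma^2)$, and that identical inputs give the maximum similarity of 1.

```python
import math

def squared_distance(x, y):
    """Squared Euclidean distance ||x - y||^2 between two equal-length vectors."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum((a - b) ** 2 for a, b in zip(x, y))

def rbf_kernel_sigma(x, y, sigma):
    """RBF kernel in the sigma form: exp(-||x - y||^2 / (2 sigma^2))."""
    return math.exp(-squared_distance(x, y) / (2.0 * sigma ** 2))

def rbf_kernel_gamma(x, y, gamma):
    """RBF kernel in the gamma form: exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * squared_distance(x, y))

x, y = [1.0, 2.0, 3.0], [2.0, 0.0, 3.0]
sigma = 1.5
gamma = 1.0 / (2.0 * sigma ** 2)  # the equivalence gamma = 1 / (2 sigma^2)
k1 = rbf_kernel_sigma(x, y, sigma)
k2 = rbf_kernel_gamma(x, y, gamma)
print(k1, k2)                       # the two forms agree up to rounding
print(rbf_kernel_sigma(x, x, sigma))  # identical inputs -> 1.0
```

Because the value only depends on the distance, it always lies in $(0, 1]$, which is what makes it usable directly as a similarity score.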

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is considered part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or infeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", *IEEE Transactions on Vehicular Technology*, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making predicti ...

Nyström Method

In numerical analysis, the Nyström method, or quadrature method, seeks the numerical solution of an integral equation by replacing the integral with a representative weighted sum. The continuous problem is broken into $n$ discrete intervals; quadrature (numerical integration) determines the weights and locations of representative points for the integral. The problem becomes a system of $n$ linear equations in $n$ unknowns, and the underlying function is implicitly represented by an interpolation using the chosen quadrature rule. This discrete problem may be ill-conditioned, depending on the original problem and the chosen quadrature rule. Since solving the linear equations requires $O(n^3)$ operations, high-order quadrature rules perform better: low-order rules require a large $n$ for a given accuracy. Gaussian quadrature is normally a good choice for smooth, non-singular problems.

Discretization of the integral: Standard quadrature method ...
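The procedure above can be sketched in plain Python, assuming a Fredholm integral equation of the second kind, $u(x) = f(x) + \lambda \int_a^b K(x, y)\,u(y)\,dy$, discretized with an $n$-point Gauss-Legendre rule. The equation form, the test problem and all function names (`legendre`, `gauss_legendre`, `solve`, `nystrom`) are illustrative assumptions for this sketch, not a reference implementation. The quadrature turns the integral into a weighted sum, the $n \times n$ system is solved by Gaussian elimination (the $O(n^3)$ step), and the same rule is reused to interpolate $u$ at arbitrary points.

```python
import math

def legendre(n, x):
    """Return (P_n(x), P_n'(x)) via the three-term recurrence (n >= 1, |x| < 1)."""
    p_prev, p = 1.0, x
    for k in range(2, n + 1):
        p_prev, p = p, ((2 * k - 1) * x * p - (k - 1) * p_prev) / k
    dp = n * (x * p - p_prev) / (x * x - 1.0)
    return p, dp

def gauss_legendre(n, a, b):
    """Nodes and weights of the n-point Gauss-Legendre rule mapped to [a, b]."""
    nodes, weights = [], []
    for i in range(1, n + 1):
        x = math.cos(math.pi * (i - 0.25) / (n + 0.5))  # standard initial guess
        for _ in range(100):  # Newton iteration on P_n(x) = 0
            p, dp = legendre(n, x)
            step = p / dp
            x -= step
            if abs(step) < 1e-15:
                break
        _, dp = legendre(n, x)
        w = 2.0 / ((1.0 - x * x) * dp * dp)
        nodes.append(0.5 * (b - a) * x + 0.5 * (a + b))
        weights.append(0.5 * (b - a) * w)
    return nodes, weights

def solve(A, rhs):
    """Gaussian elimination with partial pivoting: the O(n^3) step."""
    n = len(rhs)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = M[r][n] - sum(M[r][c] * sol[c] for c in range(r + 1, n))
        sol[r] = s / M[r][r]
    return sol

def nystrom(kernel, f, lam, a, b, n):
    """Solve u(x) = f(x) + lam * int_a^b kernel(x, y) u(y) dy; return u as a callable."""
    ys, ws = gauss_legendre(n, a, b)
    # (I - lam * K W) u = f at the quadrature nodes: n equations in n unknowns.
    A = [[(1.0 if i == j else 0.0) - lam * ws[j] * kernel(ys[i], ys[j])
          for j in range(n)] for i in range(n)]
    u_nodes = solve(A, [f(y) for y in ys])

    def u(x):
        # Nystrom interpolation: reuse the quadrature rule at an arbitrary x.
        return f(x) + lam * sum(w * kernel(x, y) * uy
                                for w, y, uy in zip(ws, ys, u_nodes))
    return u

# Test problem with known solution u(x) = x: kernel x*y on [0, 1], f(x) = 2x/3.
u = nystrom(lambda x, y: x * y, lambda x: 2.0 * x / 3.0, 1.0, 0.0, 1.0, n=4)
print(u(0.5))  # close to 0.5
```

The test problem is chosen so that the integrand is a low-degree polynomial, which a 4-point Gauss rule integrates exactly; for general smooth kernels the error instead decreases rapidly as $n$ grows.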

Obst Kernel Network

Obst is a German-language surname meaning "fruit". It may refer to:

* Alan Obst (born 1987), Australian football player
* Andreas Obst (born 1996), German basketball player
* Andrew Obst (born 1964), Australian football player
* Chris Obst (born 1979), Australian football player
* David Obst (born 1946), American literary agent
* Erich Obst (1886–1981), German geographer
* Henry Obst (1906–1975), American football player
* Herbert Obst (born 1936), Canadian fencer
* Lynda Obst (born 1950), American film producer
* Michael Obst (born 1944), German rower
* Michael Obst (composer) (born 1955), German composer
* Peter Obst (born 1936), Australian football player
* Sam Obst (born 1980), Australian rugby league player
* Seweryn Obst (1847–1917), Polish painter, illustrator and ethnographer
* Trevor Obst (21 June 1940 – 1 December 2015) was an Australian rules footballer who played with Port Adelaide in the South Australian National Football League (SANFL) during the 1 ...

Radial Basis Function Network

In the field of mathematical modeling, a radial basis function network is an artificial neural network that uses radial basis functions as activation functions. The output of the network is a linear combination of radial basis functions of the inputs and neuron parameters. Radial basis function networks have many uses, including function approximation, time series prediction, classification, and system control. They were first formulated in a 1988 paper by Broomhead and Lowe, both researchers at the Royal Signals and Radar Establishment.

Network architecture: radial basis function (RBF) networks typically have three layers: an input layer, a hidden layer with a non-linear RBF activation function, and a linear output layer. The input can be modeled as a vector of real numbers $\mathbf{x} \in \mathbb{R}^n$. The output of the network is then a scalar function of the input vector, $\varphi : \mathbb{R}^n \to \mathbb{R}$, and is given by

$$\varphi(\mathbf{x}) = \sum_{i=1}^{N} a_i \, \rho\left(\|\mathbf{x} - \mathbf{c}_i\|\right)$$

...
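The three-layer forward pass described above can be sketched as follows, reading $N$ as the number of hidden units, $\mathbf{c}_i$ as their centers and $a_i$ as the output weights. The Gaussian choice $\rho(r) = e^{-\beta r^2}$, the parameter `beta`, and the function names are assumptions made for illustration; training the centers and weights is not shown.

```python
import math

def gaussian_rbf(r, beta):
    """Assumed choice of rho: the Gaussian rho(r) = exp(-beta * r^2)."""
    return math.exp(-beta * r * r)

def rbf_network(x, centers, weights, beta=1.0):
    """Forward pass phi(x) = sum_i a_i * rho(||x - c_i||).

    Input layer: the vector x. Hidden layer: one radial unit per center c_i.
    Output layer: a linear combination of the hidden activations with weights a_i.
    """
    hidden = [gaussian_rbf(math.dist(x, c), beta) for c in centers]
    return sum(a * h for a, h in zip(weights, hidden))

centers = [(0.0, 0.0), (1.0, 1.0)]
weights = [2.0, -1.0]
print(rbf_network((0.0, 0.0), centers, weights))  # about 2 - exp(-2)
```

Only the output layer is linear in its parameters, so once the centers are fixed, fitting the weights $a_i$ reduces to a linear least-squares problem.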

Radial Basis Function

A radial basis function (RBF) is a real-valued function $\varphi$ whose value depends only on the distance between the input and some fixed point, either the origin, so that $\varphi(\mathbf{x}) = \hat\varphi(\|\mathbf{x}\|)$, or some other fixed point $\mathbf{c}$, called a *center*, so that $\varphi(\mathbf{x}) = \hat\varphi(\|\mathbf{x} - \mathbf{c}\|)$. Any function $\varphi$ that satisfies the property $\varphi(\mathbf{x}) = \hat\varphi(\|\mathbf{x}\|)$ is a radial function. The distance is usually the Euclidean distance, although other metrics are sometimes used. Radial basis functions are often used as a collection $\{\varphi_k\}_k$ that forms a basis for some function space of interest, hence the name. Sums of radial basis functions are typically used to approximate given functions. This approximation process can also be interpreted as a simple kind of neural network; this was the context in which they were originally applied to machine learning, in work by David Broomhead and David Lowe in 1988, which st ...
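To illustrate approximating a function by a sum of radial basis functions, here is a minimal interpolation sketch. It assumes a Gaussian $\hat\varphi(r) = e^{-(\varepsilon r)^2}$ with a hypothetical shape parameter `eps`, places one center on each data point, and solves for weights $w_k$ so that $s(\mathbf{x}) = \sum_k w_k \hat\varphi(\|\mathbf{x} - \mathbf{c}_k\|)$ matches the data there. The target $\sin x$ on $[0, 3]$ is an arbitrary example.

```python
import math

def gaussian(r, eps=1.0):
    """Gaussian radial profile phi_hat(r) = exp(-(eps * r)^2)."""
    return math.exp(-(eps * r) ** 2)

def solve(A, rhs):
    """Solve A w = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w

def rbf_interpolant(centers, values, eps=1.0):
    """Return s(x) = sum_k w_k * phi_hat(||x - c_k||) that matches values at the centers."""
    A = [[gaussian(math.dist(ci, cj), eps) for cj in centers] for ci in centers]
    w = solve(A, values)
    return lambda x: sum(wk * gaussian(math.dist(x, ck), eps)
                         for wk, ck in zip(w, centers))

centers = [(0.5 * k,) for k in range(7)]  # 1-D points 0, 0.5, ..., 3
values = [math.sin(c[0]) for c in centers]
s = rbf_interpolant(centers, values)
print(s((1.25,)), math.sin(1.25))  # interpolant vs. true value between nodes
```

Because each basis function depends only on $\|\mathbf{x} - \mathbf{c}_k\|$, the same code works unchanged for points in any dimension; only the tuples in `centers` change.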

Polynomial Kernel

In machine learning, the polynomial kernel is a kernel function commonly used with support vector machines (SVMs) and other kernelized models. It represents the similarity of vectors (training samples) in a feature space over polynomials of the original variables, allowing the learning of non-linear models. Intuitively, the polynomial kernel looks not only at the given features of input samples to determine their similarity, but also at combinations of these. In the context of regression analysis, such combinations are known as interaction features. The (implicit) feature space of a polynomial kernel is equivalent to that of polynomial regression, but without the combinatorial blowup in the number of parameters to be learned. When the input features are binary-valued (booleans), the features correspond to logical conjunctions of input features. Yoav Goldberg and Michael Elhadad (2008). splitSVM: Fast, Space-Efficient, non-Heuristic, Polynomial Kernel Computation for NLP Applicatio ...
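The claim that the kernel works in the feature space of interaction terms without building it can be checked numerically. This sketch assumes the common form $K(\mathbf{x}, \mathbf{y}) = (\mathbf{x}^\top \mathbf{y} + c)^d$ with $d = 2$ and $c \ge 0$; the explicit feature map `degree2_features` is written out by hand for comparison and is illustrative only.

```python
import math
from itertools import combinations

def poly_kernel(x, y, c=1.0, d=2):
    """Polynomial kernel K(x, y) = (x . y + c)^d."""
    return (sum(a * b for a, b in zip(x, y)) + c) ** d

def degree2_features(x, c=1.0):
    """Explicit feature map for d = 2 (requires c >= 0): squares, pairwise
    interaction terms, scaled linear terms, and a constant."""
    squares = [xi * xi for xi in x]
    interactions = [math.sqrt(2.0) * x[i] * x[j]
                    for i, j in combinations(range(len(x)), 2)]
    linear = [math.sqrt(2.0 * c) * xi for xi in x]
    return squares + interactions + linear + [c]

x, y = [1.0, 2.0, -1.0], [0.5, -1.0, 3.0]
explicit = sum(a * b for a, b in zip(degree2_features(x), degree2_features(y)))
print(poly_kernel(x, y), explicit)  # equal up to rounding
```

The kernel costs one dot product of length $n$, while the explicit map already has $O(n^2)$ entries at degree 2 and grows combinatorially with $d$, which is the blowup the kernel avoids.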

Kernel (statistics)

The term kernel is used in statistical analysis to refer to a window function. The term "kernel" has several distinct meanings in different branches of statistics.

Bayesian statistics: In statistics, especially in Bayesian statistics, the kernel of a probability density function (pdf) or probability mass function (pmf) is the form of the pdf or pmf in which any factors that are not functions of any of the variables in the domain are omitted. Note that such factors may well be functions of the parameters of the pdf or pmf. These factors form part of the normalization factor of the probability distribution and are unnecessary in many situations. For example, in pseudo-random number sampling, most sampling algorithms ignore the normalization factor. In addition, in Bayesian analysis of conjugate prior distributions, the normalization factors are generally ignored during the calculations, and only the kernel is considered. At the end, the form of the kernel is examined, and if i ...
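To show why sampling can ignore the normalization factor, here is a sketch using random-walk Metropolis sampling, one specific algorithm chosen for illustration. It targets a normal distribution through its kernel $\exp\left(-(x - \mu)^2 / (2\sigma^2)\right)$ only, leaving out $1/(\sigma\sqrt{2\pi})$. The function names, step size, seed and sample count are assumptions for this sketch.

```python
import math
import random

def normal_kernel(x, mu=2.0, sigma=1.0):
    """Kernel of the normal pdf: exp(-(x - mu)^2 / (2 sigma^2)).
    The factor 1 / (sigma * sqrt(2 pi)) is deliberately omitted."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))

def metropolis(kernel, n_samples, x0=0.0, step=1.0, seed=0):
    """Random-walk Metropolis sampler. Only the ratio kernel(new) / kernel(old)
    is used, so any normalization constant would cancel."""
    rng = random.Random(seed)
    x, samples = x0, []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)
        # Accept with probability min(1, ratio).
        if rng.random() < kernel(proposal) / kernel(x):
            x = proposal
        samples.append(x)
    return samples

samples = metropolis(normal_kernel, 50_000)
mean = sum(samples) / len(samples)
print(mean)  # should be close to mu = 2.0
```

Multiplying `normal_kernel` by any positive constant leaves every acceptance ratio, and therefore the sampled chain, unchanged.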

Gaussian Function

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form $f(x) = \exp(-x^2)$ and with parametric extension $f(x) = a \exp\left(-\frac{(x - b)^2}{2c^2}\right)$ for arbitrary real constants $a$, $b$ and non-zero $c$. It is named after the mathematician Carl Friedrich Gauss. The graph of a Gaussian is a characteristic symmetric "bell curve" shape. The parameter $a$ is the height of the curve's peak, $b$ is the position of the center of the peak, and $c$ (the standard deviation, sometimes called the Gaussian RMS width) controls the width of the "bell". Gaussian functions are often used to represent the probability density function of a normally distributed random variable with expected value $\mu = b$ and variance $\sigma^2 = c^2$. In this case, the Gaussian is of the form

$$g(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{1}{2}\frac{(x - \mu)^2}{\sigma^2}\right).$$

Gaussian functions are widely used in statistics to describe normal distributions, in signal processing to define Gaussian filters, in image processing where two-dimen ...
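The parameter roles above can be checked numerically. In this sketch (function names and sample parameters are illustrative), `gaussian` implements $a \exp\left(-(x - b)^2/(2c^2)\right)$, `normal_pdf` is the special case $a = 1/(\sigma\sqrt{2\pi})$, $b = \mu$, $c = \sigma$, and a composite trapezoidal rule confirms that this choice of $a$ makes the area under the curve equal to 1.

```python
import math

def gaussian(x, a=1.0, b=0.0, c=1.0):
    """Gaussian f(x) = a * exp(-(x - b)^2 / (2 c^2)); c must be non-zero."""
    return a * math.exp(-((x - b) ** 2) / (2.0 * c ** 2))

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Normal density: the Gaussian with a = 1 / (sigma sqrt(2 pi)), b = mu, c = sigma."""
    return gaussian(x, 1.0 / (sigma * math.sqrt(2.0 * math.pi)), mu, sigma)

def trapezoid(f, lo, hi, n=10_000):
    """Composite trapezoidal rule with n subintervals."""
    h = (hi - lo) / n
    return h * (0.5 * f(lo) + sum(f(lo + k * h) for k in range(1, n)) + 0.5 * f(hi))

mu, sigma = 1.0, 0.5
print(gaussian(3.0, a=2.0, b=3.0, c=0.7))  # peak height a at x = b -> 2.0
print(trapezoid(lambda x: normal_pdf(x, mu, sigma), mu - 10 * sigma, mu + 10 * sigma))
```

Integrating over $\mu \pm 10\sigma$ is enough here because the tails beyond that range contribute far less than floating-point rounding.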

Gramian Matrix

In linear algebra, the Gram matrix (or Gramian matrix, Gramian) of a set of vectors $v_1, \dots, v_n$ in an inner product space is the Hermitian matrix of inner products, whose entries are given by the inner product $G_{ij} = \langle v_i, v_j \rangle$ (p. 441, Theorem 7.2.10). If the vectors $v_1, \dots, v_n$ are the columns of a matrix $X$, then the Gram matrix is $X^* X$ in the general case where the vector coordinates are complex numbers, which simplifies to $X^\top X$ when the vector coordinates are real numbers. An important application is testing linear independence: a set of vectors is linearly independent if and only if the Gram determinant (the determinant of the Gram matrix) is non-zero. It is named after Jørgen Pedersen Gram.

Examples: For finite-dimensional real vectors in $\mathbb{R}^n$ with the usual Euclidean dot product, the Gram matrix is $G = V^\top V$, where $V$ is a matrix whose columns are the vectors $v_k$ and $V^\top$ is its transpose, whose rows are the vectors $v_k$ ...
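The independence test can be sketched for real vectors with the Euclidean dot product, that is, the $X^\top X$ case. The helper names and example vectors are illustrative. The Gram matrix is always square ($n \times n$ for $n$ vectors), so the test also works when there are fewer vectors than dimensions, as with two vectors in $\mathbb{R}^3$ here.

```python
def gram_matrix(vectors):
    """G[i][j] = <v_i, v_j> using the real Euclidean dot product."""
    return [[sum(a * b for a, b in zip(vi, vj)) for vj in vectors] for vi in vectors]

def determinant(M):
    """Determinant by Gaussian elimination with partial pivoting."""
    A = [row[:] for row in M]
    n, det = len(A), 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        if A[piv][col] == 0.0:
            return 0.0
        if piv != col:
            A[col], A[piv] = A[piv], A[col]
            det = -det  # a row swap flips the sign
        det *= A[col][col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
    return det

independent = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0]]
dependent = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]]  # second vector = 2 * first
print(determinant(gram_matrix(independent)))  # non-zero
print(determinant(gram_matrix(dependent)))    # zero
```

With real-world floating-point data, the Gram determinant of nearly dependent vectors is small rather than exactly zero, so in practice it is compared against a tolerance.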

Eigendecomposition

In linear algebra, eigendecomposition is the factorization of a matrix into a canonical form, whereby the matrix is represented in terms of its eigenvalues and eigenvectors. Only diagonalizable matrices can be factorized in this way. When the matrix being factorized is a normal or real symmetric matrix, the decomposition is called "spectral decomposition", derived from the spectral theorem.

Fundamental theory of matrix eigenvectors and eigenvalues: A (nonzero) vector $\mathbf{v}$ of dimension $N$ is an eigenvector of a square $N \times N$ matrix $\mathbf{A}$ if it satisfies a linear equation of the form

$$\mathbf{A}\mathbf{v} = \lambda \mathbf{v}$$

for some scalar $\lambda$. Then $\lambda$ is called the eigenvalue corresponding to $\mathbf{v}$. Geometrically speaking, the eigenvectors of $\mathbf{A}$ are the vectors that $\mathbf{A}$ merely elongates or shrinks, and the amount that they elongate or shrink by is the eigenvalue. The above equation is called the eigenvalue equation or the eigenvalue problem. This yields an equation for the eigenvalues:

$$p(\lambda) = \det(\mathbf{A} - \lambda \mathbf{I}) = 0$$

...
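For the smallest interesting case, here is a sketch for a real symmetric $2 \times 2$ matrix $\begin{pmatrix} a & b \\ b & d \end{pmatrix}$. It takes the eigenvalues as the roots of $p(\lambda) = \lambda^2 - (a + d)\lambda + (ad - b^2)$, builds unit eigenvectors, and rebuilds $\mathbf{A}$ from the spectral decomposition $\mathbf{A} = \sum_i \lambda_i \mathbf{v}_i \mathbf{v}_i^\top$. The function names and the example matrix are illustrative; larger matrices need a proper numerical eigensolver.

```python
import math

def eig_sym_2x2(a, b, d):
    """Eigenvalues and unit eigenvectors of the real symmetric matrix [[a, b], [b, d]].
    The eigenvalues are the roots of p(lambda) = det(A - lambda I)
    = lambda^2 - (a + d) lambda + (a d - b^2)."""
    mean = 0.5 * (a + d)
    radius = math.hypot(0.5 * (a - d), b)
    lams = [mean + radius, mean - radius]
    if b == 0.0:
        # Already diagonal: the standard basis vectors are eigenvectors.
        vecs = [(1.0, 0.0), (0.0, 1.0)] if a >= d else [(0.0, 1.0), (1.0, 0.0)]
        return lams, vecs
    vecs = []
    for lam in lams:
        v = (lam - d, b)  # satisfies (A - lam I) v = 0 because p(lam) = 0
        norm = math.hypot(*v)
        vecs.append((v[0] / norm, v[1] / norm))
    return lams, vecs

def reconstruct(lams, vecs):
    """Spectral decomposition: A = sum_i lambda_i v_i v_i^T."""
    return [[sum(lam * v[r] * v[c] for lam, v in zip(lams, vecs)) for c in range(2)]
            for r in range(2)]

lams, vecs = eig_sym_2x2(2.0, 1.0, 2.0)
print(lams)                     # [3.0, 1.0]
print(reconstruct(lams, vecs))  # approximately [[2.0, 1.0], [1.0, 2.0]]
```

Geometrically, $\mathbf{A}$ stretches the direction $(1, 1)$ by a factor of 3 and leaves $(-1, 1)$ unchanged in length, matching the "elongates or shrinks" description.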

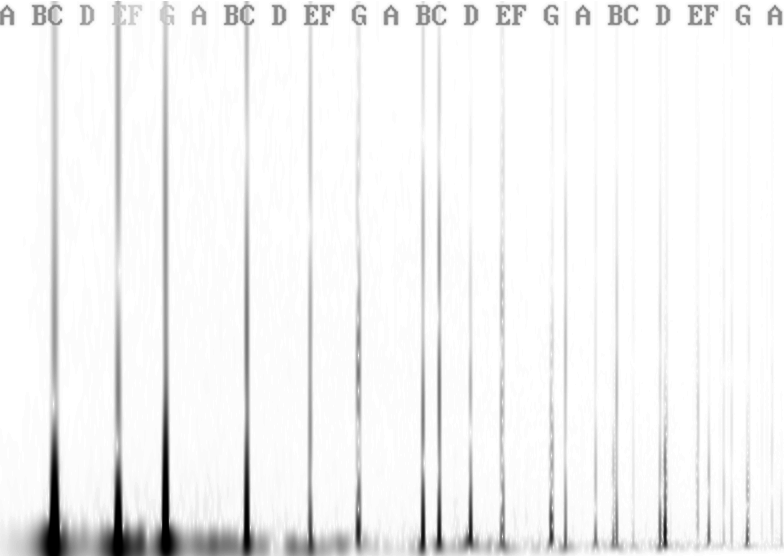

Fourier Transformation

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly, functions of time or space are transformed, which will output a function depending on temporal frequency or spatial frequency respectively. That process is also called *analysis*. An example application would be decomposing the waveform of a musical chord into terms of the intensity of its constituent pitches. The term *Fourier transform* refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid wit ...
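The "musical chord" example can be imitated with the discrete Fourier transform (DFT), the finite, sampled analogue of the continuous transform described above, swapped in here because it can be computed directly. The synthetic "chord" of two sinusoids (3 and 5 cycles per window, amplitudes 1.0 and 0.5) and the naive $O(N^2)$ `dft` helper are illustrative assumptions. The magnitudes of the complex outputs recover each component's amplitude.

```python
import cmath
import math

def dft(signal):
    """Naive O(N^2) discrete Fourier transform: X_k = sum_n x_n e^{-2 pi i k n / N}."""
    n = len(signal)
    return [sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
            for k in range(n)]

N = 64
# A toy "chord": two sinusoids at 3 and 5 cycles per window, amplitudes 1.0 and 0.5.
chord = [math.sin(2 * math.pi * 3 * t / N) + 0.5 * math.sin(2 * math.pi * 5 * t / N)
         for t in range(N)]
spectrum = dft(chord)
# For a real signal, the amplitude of the sinusoid at bin k (0 < k < N/2) is 2|X_k|/N.
amplitudes = [2 * abs(X) / N for X in spectrum[: N // 2]]
print([round(a, 6) for a in amplitudes[:8]])  # peaks at bins 3 and 5
```

Both tones complete a whole number of cycles in the window, so each falls exactly on one frequency bin; a real recording would spread energy into neighboring bins (spectral leakage).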
