Robots.txt

robots.txt is the filename used for implementing the Robots Exclusion Protocol, a standard used by websites to indicate to visiting web crawlers and other web robots which portions of the website they are allowed to visit. The standard, developed in 1994, relies on voluntary compliance. Malicious bots can use the file as a directory of which pages to visit, though standards bodies discourage countering this with security through obscurity. Some archival sites ignore robots.txt. The standard was used in the 1990s to mitigate server overload. In the 2020s, websites began denying bots that collect information for generative artificial intelligence. The "robots.txt" file can be used in conjunction with sitemaps, a robot inclusion standard for websites.

The standard was proposed by Martijn Koster, while working for Nexor, in February 1994 on the www-talk mailing list, the main communication channel for WWW-related activities at the time. Charles Stross clai ...
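As an illustration of how a compliant crawler consults the file, here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt contents, the bot names AnyBot and ExampleBot, and the example.com URLs are hypothetical. Parsing from a string avoids a network fetch; a real crawler would first download /robots.txt from the site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every bot is kept out of /private/,
# and the bot named ExampleBot is excluded from the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: ExampleBot
Disallow: /
"""

def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt contents from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)

# can_fetch(user_agent, url) applies the group that matches the agent,
# falling back to the "*" group.
print(parser.can_fetch("AnyBot", "https://example.com/index.html"))      # True
print(parser.can_fetch("AnyBot", "https://example.com/private/a.html"))  # False
print(parser.can_fetch("ExampleBot", "https://example.com/index.html"))  # False
```

Nothing in the file enforces these answers; the crawler simply chooses to honor them, which is the voluntary compliance the standard relies on. Python's parser may resolve overlapping Allow and Disallow lines differently from the longest-match rule of RFC 9309, so the example avoids overlapping rules.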

Sitemaps

Sitemaps is a protocol in XML format meant for a webmaster to inform search engines about URLs on a website that are available for web crawling. It allows webmasters to include additional information about each URL: when it was last updated, how often it changes, and how important it is in relation to other URLs of the site. This allows search engines to crawl the site more efficiently and to find URLs that may be isolated from the rest of the site's content. The Sitemaps protocol is a URL inclusion protocol and complements robots.txt, a URL exclusion protocol.

Google first introduced Sitemaps 0.84 in June 2005 so web developers could publish lists of links from across their sites. Google, Yahoo! and Microsoft announced joint support for the Sitemaps protocol in November 2006. The schema version was changed to "Sitemap 0.90", but no other changes were made. In April 2007, Ask.com and IBM announced support for Sitemaps. Also, Google, Yahoo! and MSN announced auto-discove ...
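A minimal sketch of generating a sitemap document with Python's xml.etree.ElementTree follows. It assumes the sitemaps.org 0.9 namespace and uses hypothetical example.com URLs; only loc is required for each URL, while lastmod, changefreq and priority carry the optional per-URL information described above.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Return sitemap XML for entries: dicts with a required 'loc' key and
    optional 'lastmod' (W3C date), 'changefreq' (e.g. 'weekly') and
    'priority' (0.0 to 1.0, relative to the site's other URLs)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # Emit only the optional fields that were supplied.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, tag).text = str(entry[tag])
    return ET.tostring(urlset, encoding="unicode")

xml_text = build_sitemap([
    {"loc": "https://example.com/", "lastmod": "2024-01-15",
     "changefreq": "weekly", "priority": 1.0},
    {"loc": "https://example.com/about", "priority": 0.5},
])
print(xml_text)
```

When writing the result to a file, ElementTree's write method with xml_declaration=True and encoding="utf-8" adds the XML declaration that sitemap files usually begin with.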

Web Crawler

A Web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing (web spidering). Web search engines and some other websites use Web crawling or spidering software to update their web content or indices of other sites' web content. Web crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently. Crawlers consume resources on visited systems and often visit sites unprompted. Issues of schedule, load, and "politeness" come into play when large collections of pages are accessed. Mechanisms exist for public sites not wishing to be crawled to make this known to the crawling agent. For example, including a robots.txt file can request bots to index only parts of a website, or nothing at all. The number of In ...
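The crawl loop described above can be sketched as a breadth-first traversal that checks robots.txt before visiting each page. This is a simplified illustration, not how any particular search engine works: the in-memory LINKS graph stands in for downloading pages and extracting their links, and the user-agent name ExampleCrawler is hypothetical.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical in-memory "web": page URL -> outgoing links.
# A real crawler would download each page and extract its links.
LINKS = {
    "https://example.com/": ["https://example.com/a", "https://example.com/private/x"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/"],
    "https://example.com/b": [],
    "https://example.com/private/x": ["https://example.com/secret"],
}

def crawl(seed, robots, user_agent="ExampleCrawler", max_pages=100):
    """Breadth-first crawl from seed, skipping URLs that robots.txt disallows."""
    frontier = deque([seed])  # URLs waiting to be visited, in discovery order
    seen = {seed}             # URLs already queued, so none is queued twice
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        if not robots.can_fetch(user_agent, url):
            continue  # politely skip excluded pages (their links stay undiscovered)
        visited.append(url)  # here a real crawler would fetch and index the page
        for link in LINKS.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited

robots = RobotFileParser()
robots.parse(["User-agent: *", "Disallow: /private/"])
print(crawl("https://example.com/", robots))
# ['https://example.com/', 'https://example.com/a', 'https://example.com/b']
```

A production crawler would also space out requests to the same host to limit the load it places on visited systems; that scheduling is omitted here.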

Search Engine

A search engine is a software system that provides hyperlinks to web pages, and other relevant information on the Web, in response to a user's query. The user enters a query in a web browser or a mobile app, and the search results are typically presented as a list of hyperlinks accompanied by textual summaries and images. Users also have the option of limiting a search to specific types of results, such as images, videos, or news. For a search provider, its engine is part of a distributed computing system that can encompass many data centers throughout the world. The speed and accuracy of an engine's response to a query are based on a complex system of indexing that is continuously updated by automated web crawlers. This can include data mining the files and databases stored on web servers, although some content (the deep web) is not accessible to crawlers. There have been ma ...
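Search engine indexing is commonly built around an inverted index, which maps each term to the documents that contain it, so a query only touches the documents listed under its terms. The sketch below is a toy version under simplifying assumptions (lowercased alphanumeric tokens, no ranking, and AND semantics for multi-word queries); the sample documents are made up.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs):
    """Build an inverted index: term -> set of ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index

def search(index, query):
    """Return ids of documents that contain every query term (AND query)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())  # intersect posting sets
    return result

# Hypothetical pages, as a crawler might have downloaded them.
docs = {
    1: "Web crawlers download pages",
    2: "Search engines index the downloaded pages",
    3: "Crawlers should respect robots.txt",
}
index = build_index(docs)
print(sorted(search(index, "pages")))           # [1, 2]
print(sorted(search(index, "crawlers pages")))  # [1]
print(sorted(search(index, "robots.txt")))      # [3]
```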

Internet Bot

An Internet bot, web robot, robot, or simply bot, is a software application that runs automated tasks (scripts) on the Internet, usually with the intent to imitate human activity, such as messaging, on a large scale. An Internet bot plays the client role in a client–server model, whereas the server role is usually played by web servers. Internet bots can perform simple, repetitive tasks much faster than a person could. The most extensive use of bots is for web crawling, in which an automated script fetches, analyzes and files information from web servers. More than half of all web traffic is generated by bots. Efforts by web servers to restrict bots vary. Some servers have a robots.txt file that contains the rules governing bot behavior on that server. Any bot that does not follow the rules could, in theory, be denied access to or removed from the affected website. If the posted text file has no associated program/software/app, then adhering to the rules i ...

Martijn Koster

Martijn Koster (born c. 1970) is a Dutch software engineer noted for his pioneering work on Internet searching. While working at Nexor, Koster created ALIWEB, the Internet's first search engine, which was announced in November 1993 and presented in May 1994 at the First International Conference on the World Wide Web.

Port (computer networking)

In computer networking, a port is a communication endpoint. At the software level within an operating system, a port is a logical construct that identifies a specific process or a type of network service. A port is uniquely identified by a number, the port number, associated with the combination of a transport protocol and the network IP address. Port numbers are 16-bit unsigned integers. The most common transport protocols that use port numbers are the Transmission Control Protocol (TCP) and the User Datagram Protocol (UDP). The port completes the destination and origination addresses of a message within a host to point to an operating system process. Specific port numbers are reserved to identify specific services so that an arriving packet can be easily forwarded to a running application. For this purpose, port numbers lower than 1024 identify the historically most commonly used services and are called the well-known port numbers. Higher-numbered ports are available for g ...
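The port-number rules above can be expressed as a small classifier. The 16-bit bound and the well-known range below 1024 come from the text; the split of higher numbers into registered (1024–49151) and dynamic/private (49152–65535) ranges follows IANA's conventions and is added here for illustration.

```python
def classify_port(port: int) -> str:
    """Classify a TCP or UDP port number by IANA range."""
    if not 0 <= port <= 0xFFFF:  # port numbers are 16-bit unsigned integers
        raise ValueError(f"{port} is outside the 16-bit port range")
    if port < 1024:
        return "well-known"       # 0-1023: well-known (system) services
    if port < 49152:
        return "registered"       # 1024-49151: registered (user) ports
    return "dynamic/private"      # 49152-65535: dynamic, often ephemeral, ports

for p in (80, 443, 8080, 50000):
    print(p, classify_port(p))
```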

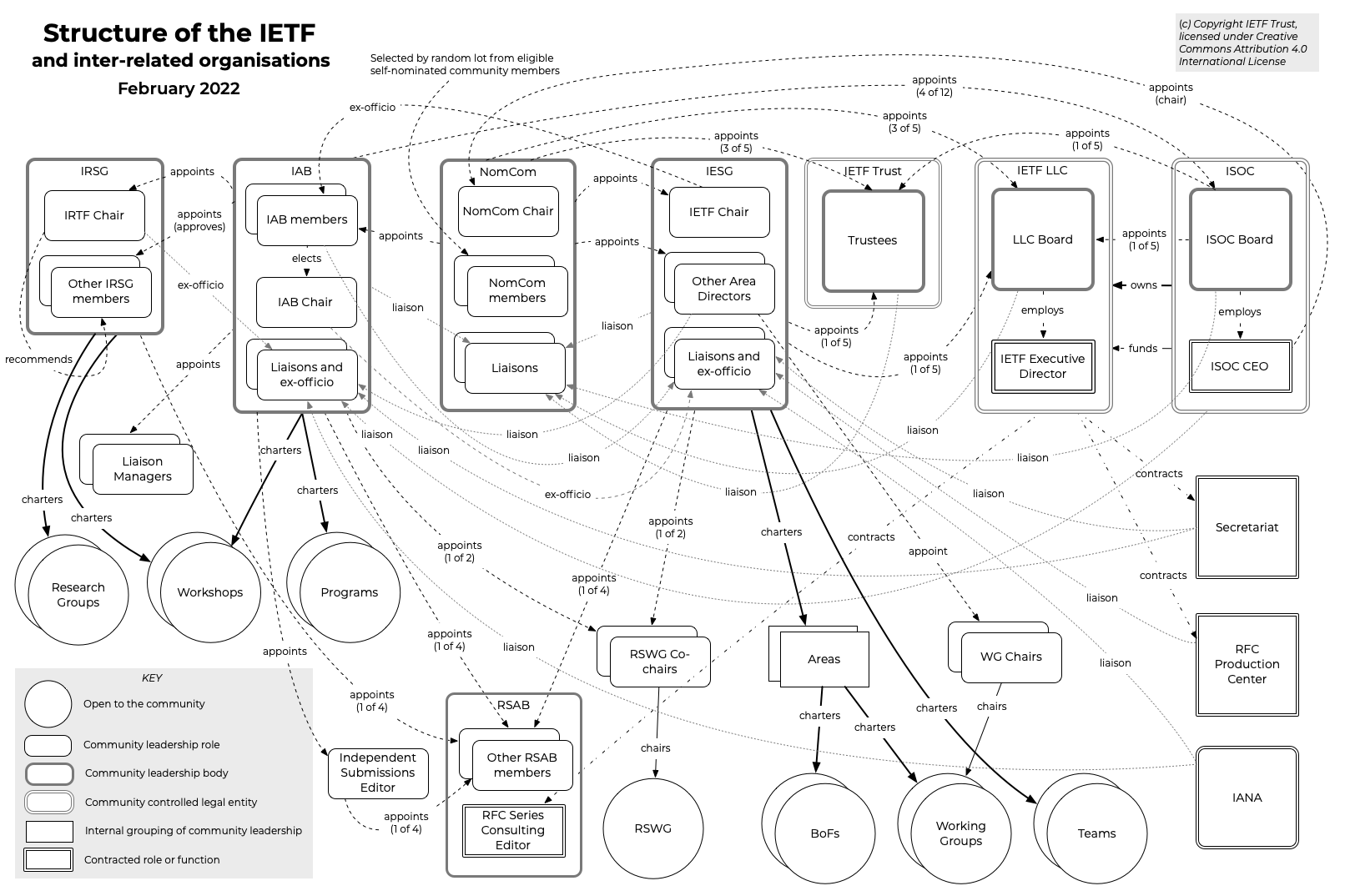

Internet Engineering Task Force

The Internet Engineering Task Force (IETF) is a standards organization for the Internet and is responsible for the technical standards that make up the Internet protocol suite (TCP/IP). It has no formal membership roster or requirements, and all its participants are volunteers. Participants' work is usually funded by their employers or other sponsors. The IETF was initially supported by the federal government of the United States but since 1993 has operated under the auspices of the Internet Society, a non-profit organization with local chapters around the world.

There is no membership in the IETF. Anyone can participate by signing up to a working group mailing list or registering for an IETF meeting. The IETF operates in a bottom-up task creation mode, largely driven by working groups. Each working group normally has two appointed co-chairs (occasionally three); a charter that describes its focus; and what it is expected to produce, and when. It is open ...

Same Origin Policy

Same may refer to:
* Sameness or identity
* Idem, Latin term for "the same" used in citations

Places:
* Same (Homer), an island mentioned by Homer in the Odyssey
* Same (polis), an ancient city
* Same, Timor-Leste, the capital of the Manufahi district
* Samé, Mali
* Same, Tanzania
* Same District, Tanzania

Other uses:
* SAME Deutz-Fahr, an Italian manufacturer of tractors, combine harvesters and engines
* SAME (tractors), a brand of SAME Deutz-Fahr
* S-adenosyl methionine or SAMe, an amino acid
* Society of American Military Engineers
* Specific Area Message Encoding, a coding system within the Emergency Alert System in the United States
* Governor Francisco Gabrielli International Airport, Argentina, ICAO code "SAME"
* "Same", a song by Snow Patrol from Final Straw
* "Same", a song by Oneohtrix Point Never from Age Of
* The Same, a punk band
* Syndrome of apparent mineralocorticoid excess, an autosomal recessive disorder causing hypertension and hypok ...

Subdomain

In the Domain Name System (DNS) hierarchy, a subdomain is a domain that is a part of another (main) domain. For example, if a domain offered an online store as part of its website, it might place the store on a subdomain.

The Domain Name System (DNS) has a tree structure or hierarchy, in which each node on the tree is a domain name. A subdomain is a domain that is part of a larger domain. Each label may contain from 0 to 63 octets (RFC 1034, Domain Names - Concepts and Facilities, P. Mockapetris, Nov 1987). The full domain name may not exceed a total length of 253 ASCII characters in its textual representation (RFC 1035, Domain Names - Implementation and Specification, P. Mockapetris, Nov 1987). Subdomains are defined by editing the DNS zone file pertaining to the parent domain. However, there is an ongoing debate over the use of the term "subdomain" when referring to names that map to address (A) records and various other types of zone records which may map t ...
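The length limits quoted above, and the tree relationship between a domain and its subdomains, can be sketched in Python. This is a simplified check that assumes ASCII names and ignores which characters a label may contain. The only zero-length label is the root, written as a trailing dot, so the sketch drops it and requires 1 to 63 octets for every other label. The name store.example.com is hypothetical.

```python
def _labels(name: str) -> list:
    """Split a domain name into labels, dropping the empty root label
    that a trailing dot denotes in a fully qualified name."""
    if name.endswith("."):
        name = name[:-1]
    return name.split(".")

def is_valid_domain_name(name: str) -> bool:
    """Check ASCII-only text, total length <= 253, and labels of 1-63 octets."""
    labels = _labels(name)
    text = ".".join(labels)
    if not text.isascii() or len(text) > 253:
        return False
    return all(1 <= len(label) <= 63 for label in labels)

def is_subdomain(name: str, parent: str) -> bool:
    """True if name sits below parent in the DNS tree (case-insensitive)."""
    child = _labels(name.lower())
    base = _labels(parent.lower())
    # Compare whole labels from the right, so "badexample.com" is not
    # treated as lying under "example.com".
    return len(child) > len(base) and child[-len(base):] == base

print(is_valid_domain_name("store.example.com"))         # True
print(is_valid_domain_name("a" * 64 + ".com"))           # False: label too long
print(is_subdomain("store.example.com", "example.com"))  # True
print(is_subdomain("badexample.com", "example.com"))     # False
```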

List Of URI Schemes

This article lists common URI schemes. A Uniform Resource Identifier helps identify a resource without ambiguity. Many URI schemes are registered with the IANA; however, there exist many unofficial URI schemes as well. Mobile deep links are one example of a class of unofficial URI schemes that allow for linking directly to a specific location in a mobile app.

URI schemes registered with the IANA, both provisional and fully approved, are listed in its registry for Uniform Resource Identifier (URI) Schemes. These include well-known ones like:
* – File URI scheme
* – File Transfer Protocol
* – Hypertext Transfer Protocol
* – Hypertext Transfer Protocol Secure
* – mailto for email addresses
* – for telephone numbers
* – Internet Message Access Protocol
* – Internet Relay Chat
* – Network News Transfer Protocol

as well as many lesser-known schemes like:
* – Application Configuration Access Protocol
* – Internet Content ...
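The scheme is the part of a URI before the first colon. A minimal way to extract it in Python is urllib.parse.urlsplit, which also lowercases the scheme; the sample URIs below are hypothetical.

```python
from urllib.parse import urlsplit

def uri_scheme(uri: str) -> str:
    """Return the URI's scheme in lowercase, or '' for a relative reference."""
    return urlsplit(uri).scheme

for uri in ("HTTPS://example.com/index.html",
            "mailto:someone@example.com",
            "file:///etc/hosts",
            "docs/readme.txt"):
    print(repr(uri_scheme(uri)))
# 'https'
# 'mailto'
# 'file'
# ''
```

urlsplit only extracts the scheme; it does not check whether the scheme is registered with the IANA, so an unofficial scheme is parsed the same way.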

Filename

A filename or file name is a name used to uniquely identify a computer file in a file system. Different file systems impose different restrictions on filename lengths. A filename may (depending on the file system) include:
* name – base name of the file
* extension – may indicate the format of the file (e.g. .txt for plain text, .pdf for Portable Document Format, .dat for unspecified binary data, etc.)

The components required to identify a file by utilities and applications vary across operating systems, as do the syntax and format for a valid filename. The characters allowed in filenames depend on the file system. The letters A–Z and digits 0–9 are allowed by most file systems; many file systems support additional characters, such as the letters a–z, special characters, and other printable characters such as accented letters, symbols in non-Roman alphabets, and symbols in non-alphabetic scripts. Some file systems allow even ...
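Splitting a filename into the base name and extension described above can be sketched with Python's pathlib. The example paths are hypothetical. PurePosixPath and PureWindowsPath apply each platform's separator rules without touching the file system, which illustrates how valid filename syntax varies across operating systems.

```python
from pathlib import PurePosixPath, PureWindowsPath

def split_filename(path: str):
    """Return (base name, extension) of the last component of a POSIX path."""
    p = PurePosixPath(path)
    return p.stem, p.suffix  # suffix is only the final extension

print(split_filename("/home/user/report.pdf"))  # ('report', '.pdf')
print(split_filename("archive.tar.gz"))         # ('archive.tar', '.gz')
print(split_filename("README"))                 # ('README', '')

# Separator rules differ by operating system: a backslash separates
# directories on Windows but is an ordinary character in a POSIX filename.
print(PureWindowsPath(r"C:\Docs\notes.txt").name)  # notes.txt
print(PurePosixPath(r"C:\Docs\notes.txt").name)    # C:\Docs\notes.txt
```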

EBay V

eBay Inc. (often stylized as ebay) is an American multinational e-commerce company based in San Jose, California, that allows users to buy or view items via retail sales through online marketplaces and websites in 190 markets worldwide. Sales occur either via online auctions or "buy it now" instant sales, and the company charges commissions to sellers upon sales. eBay was founded by Pierre Omidyar in September 1995. It has 132 million yearly active buyers worldwide and handled $73 billion in transactions in 2023, 48% of which were in the United States. In 2023, the company had a take rate (revenue as a percentage of volume) of 13.81%. The company is listed on the Nasdaq Global Select Market and is a component of the S&P 500 and formerly the Nasdaq-100. eBay can be used by individuals, companies and governments to purchase and sell almost any legal, non-controversial item. eBay's auctions use a Vickrey auction (sealed-bid) proxy bid system. Buyers and sellers may rate ...
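The proxy bidding mentioned above can be sketched as a second-price rule: each bidder submits a maximum, and the highest maximum wins at one increment above the runner-up's maximum, never exceeding the winner's own maximum. This is a simplified model, not eBay's actual implementation. The flat increment, the tie-break in favor of the earlier bid, and charging the starting price when there is only one bidder are all assumptions.

```python
from decimal import Decimal

def proxy_auction(max_bids, increment=Decimal("1.00"), start=Decimal("0.00")):
    """Resolve a simplified second-price proxy auction.

    max_bids: list of (bidder, maximum bid) in the order the bids were placed.
    Returns (winner, price), or None when nobody bid.
    """
    if not max_bids:
        return None
    # Highest maximum first; on a tie, the earlier bid (lower index) wins.
    ranked = sorted(enumerate(max_bids), key=lambda item: (-item[1][1], item[0]))
    winner, top = ranked[0][1]
    if len(ranked) == 1:
        return winner, start  # assumption: a lone bidder pays the starting price
    runner_up = ranked[1][1][1]
    # One increment over the runner-up's maximum, capped at the winner's maximum.
    return winner, min(top, runner_up + increment)

print(proxy_auction([("alice", Decimal("50.00")), ("bob", Decimal("40.00"))]))
# ('alice', Decimal('41.00'))
print(proxy_auction([("alice", Decimal("50.00")), ("bob", Decimal("50.00"))]))
# ('alice', Decimal('50.00'))
```

As in a Vickrey auction, the price depends on the second-highest bid rather than the winner's own, so bidders have little reason to submit less than their true maximum. Decimal is used to avoid floating-point rounding in currency amounts.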