
Recommender System

A recommender system (RecSys), or recommendation system (sometimes with "system" replaced by terms such as "platform", "engine", or "algorithm", and sometimes simply called "the algorithm" or "algorithm"), is a subclass of information filtering system that suggests the items most pertinent to a particular user. Recommender systems are particularly useful when an individual must choose from a potentially overwhelming number of items that a service may offer. Modern recommender systems, such as those used on large social media sites, make extensive use of AI, machine learning, and related techniques to learn each user's behavior and preferences and to categorize content so that each user's feed is tailored individually. Typically, the suggestions support decision-making processes such as what product to purchase, what music to listen to, or what online news to read. Recommender systems are used in a variety of areas, with commonly recognised ex ...

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of mathematically rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use conditionals to divert code execution through various routes (referred to as automated decision-making) and to deduce valid inferences (referred to as automated reasoning). In contrast, a heuristic is an approach to solving problems that have no well-defined correct or optimal result (David A. Grossman, Ophir Frieder, Information Retrieval: Algorithms and Heuristics, 2nd edition, 2004). For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics, because there is no truly "correct" recommendation. As an e ...

Pandora Radio

Pandora is a subscription-based music streaming service based in Oakland, California, in the United States, and owned by the broadcasting corporation Sirius XM. The service focuses on recommendations based on the "Music Genome Project", a means of classifying individual songs by musical traits such as genre and shared instrumentation. The service originally launched in the consumer market as an internet radio service that generates personalized channels based on these traits as well as on specific tracks the user has liked; this service is available as an advertising-supported tier and as a subscription-based version. In 2017, the service launched Pandora Premium, an on-demand version of the service more in line with contemporary competitors. The company was founded in 2000 as Savage Beast Technologies and was initially conceived as a business-to-business company licensing the Music Genome Project to retailers as a recommendation p ...
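
Describing each song by a fixed set of musical traits lends itself to content-based filtering: songs whose trait profiles resemble a seed song's profile are recommended. The sketch below is a minimal illustration under stated assumptions, not Pandora's actual method, which the text does not describe. The trait names, the scores, and the helper names (`TRAITS`, `SONGS`, `cosine`, `similar_songs`) are all hypothetical, and cosine similarity is simply one common choice of comparison.

```python
import math

# Hypothetical trait list; every song vector stores scores in this order.
# Real Music Genome Project data is proprietary, so these values are invented.
TRAITS = ["acoustic_guitar", "electric_guitar", "female_vocals", "syncopation"]

SONGS = {
    "song_a": [1.0, 0.0, 1.0, 0.2],
    "song_b": [0.9, 0.1, 1.0, 0.3],
    "song_c": [0.0, 1.0, 0.0, 0.9],
}


def cosine(u, v):
    """Cosine similarity of two equal-length trait vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similar_songs(seed, songs, k=2):
    """Return up to k other songs, ranked by trait similarity to the seed song."""
    seed_vec = songs[seed]
    scored = [(title, cosine(seed_vec, vec)) for title, vec in songs.items() if title != seed]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [title for title, _ in scored[:k]]


print(similar_songs("song_a", SONGS))  # ['song_b', 'song_c']
```

Because only item descriptions are compared, this approach can recommend a brand-new song as soon as its traits are scored, without waiting for other listeners to rate it.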

Gediminas Adomavicius

Gediminas (c. 1275 – December 1341) was Grand Duke of Lithuania from 1315 or 1316 until his death in 1341. He is considered the founder of Lithuania's capital, Vilnius (see: Iron Wolf legend). During his reign, he brought under his rule lands from the Baltic Sea to the Black Sea. The Gediminid dynasty, which he founded and which is named after him, came to rule over Poland, Hungary and Bohemia. Gediminas was born in about 1275. Because written sources of the era are scarce, Gediminas' ancestry, early life, and assumption of the title of Grand Duke in ca. 1316 are obscure and continue to be the subject of scholarly debate. Various theories have claimed that Gediminas was his predecessor Grand Duke Vytenis' son, brother, cousin, or hostler. For several centuries, only two versions of his origins circulated. Chronicles written long after Gediminas' death by the Teutonic Knights, a long-standing enemy of Lithuania, claimed that Gediminas was a hostl ...

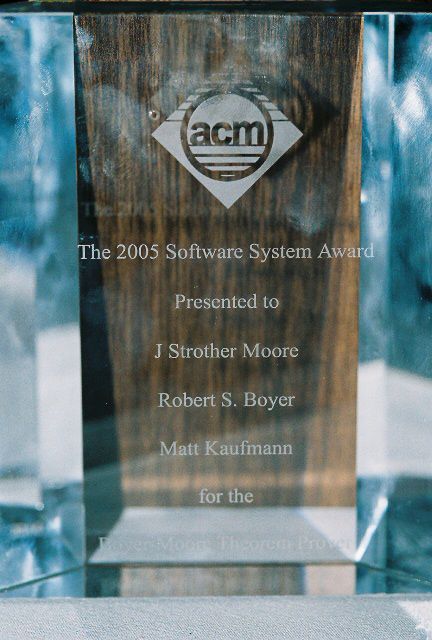

ACM Software System Award

The ACM Software System Award is an annual award that honors people or an organization "for developing a software system that has had a lasting influence, reflected in contributions to concepts, in commercial acceptance, or both". It has been awarded by the Association for Computing Machinery (ACM) since 1983 and carries a cash prize, currently $35,000, sponsored by IBM.

Paul Resnick

Paul Resnick is the Michael D. Cohen Collegiate Professor of Information at the School of Information at the University of Michigan. He was born in New York and attended the University of Michigan for his undergraduate studies. He received a Ph.D. in Computer Science from the Massachusetts Institute of Technology (MIT) in 1992. After graduating from MIT, Resnick worked at AT&T Labs and AT&T Bell Labs and was an assistant professor at the MIT Sloan School of Management. He became an assistant professor at the University of Michigan in 1997 and subsequently became associate professor, professor, and then associate dean. Resnick was elected to the CHI Academy in 2017. He received the 2010 ACM Software Systems ...

Pattie Maes

Pattie Maes (born 1961) is a Belgian scientist. She is a professor in MIT's program in Media Arts and Sciences. She founded and directed the MIT Media Lab's Fluid Interfaces Group; previously, she founded and ran the Software Agents Group. She served for several years as both the head and associate head of the Media Lab's academic program. Before joining the Media Lab, Maes was a visiting professor and a research scientist at the MIT Artificial Intelligence Lab. She holds a bachelor's degree in computer science and a PhD in AI from the Vrije Universiteit Brussel in Belgium, and she did post-graduate work at MIT under Rodney Brooks and Marvin Minsky. Maes launched the Software Agents Group at the Lab in 1991. One of the group's main projects was Helpful Online Music Recommendations (HOMR), renamed "Ringo" in 1994. Users rated a random sampling of music artists on a scale from 1 to 7, which created a user profile. The system would look for similar u ...
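
The Ringo workflow (rate artists on a 1 to 7 scale, build a profile, look for users with similar profiles) is a form of user-based collaborative filtering. The sketch below is a minimal illustration, not Ringo's actual algorithm. The ratings are invented. The similarity measure (one over one plus the mean squared rating difference on co-rated artists) and the similarity-weighted average used for prediction are assumptions chosen for simplicity. `RATINGS`, `similarity`, `predict`, and `recommend` are hypothetical names.

```python
# Hypothetical profiles on a 1-7 scale: user -> {artist: rating}.
RATINGS = {
    "alice": {"Beatles": 7, "Abba": 2, "Miles Davis": 6},
    "bob": {"Beatles": 6, "Abba": 1, "Miles Davis": 7, "Coltrane": 7},
    "carol": {"Beatles": 1, "Abba": 7, "Coltrane": 2},
}


def similarity(a, b):
    """Assumed measure: 1 / (1 + mean squared difference) over co-rated artists.

    Returns a value in (0, 1], or 0.0 when the two profiles share no artists.
    """
    common = set(a) & set(b)
    if not common:
        return 0.0
    msd = sum((a[x] - b[x]) ** 2 for x in common) / len(common)
    return 1.0 / (1.0 + msd)


def predict(user, artist, ratings):
    """Similarity-weighted average of other users' ratings for `artist` (None if nobody rated it)."""
    num = den = 0.0
    for other, theirs in ratings.items():
        if other == user or artist not in theirs:
            continue
        weight = similarity(ratings[user], theirs)
        num += weight * theirs[artist]
        den += weight
    return num / den if den else None


def recommend(user, ratings, k=1):
    """Rank artists the user has not rated by predicted rating, highest first."""
    seen = set(ratings[user])
    candidates = {artist for profile in ratings.values() for artist in profile} - seen
    scored = [(artist, predict(user, artist, ratings)) for artist in candidates]
    scored = [(artist, score) for artist, score in scored if score is not None]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [artist for artist, _ in scored[:k]]


print(recommend("alice", RATINGS))  # ['Coltrane']
```

Alice's ratings closely match Bob's and sharply disagree with Carol's, so Bob's 7 for Coltrane dominates the weighted average and the predicted rating comes out near 6.7.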

SICS

RISE SICS (previously the Swedish Institute of Computer Science) is a leading research institute for applied information and communication technology in Sweden, founded in 1985. It explores the digitalization of products, services and businesses. In January 2005, SICS had about 88 employees, of whom 77 were researchers, 30 with PhD degrees. Later, SICS had about 200 employees, of whom 160 were researchers, 83 with PhD degrees. The institute is headquartered in the Kista district of Stockholm, with its main office in the Electrum building. Several well-known software packages have been developed at SICS:
* Contiki, an operating system for small-memory embedded devices
* Delegent, an authorization server
* Distributed Interactive Virtual Environment, or DIVE for short
* lwIP, a TCP/IP stack for embedded systems
* Oz-Mozart, a multi-platform programming system
* Nemesis, a concept exokernel operating system
* Protothreads, light-weight stackless threads
* Quintus Prolog and SIC ...

Jussi Karlgren

Jussi Karlgren is a Swedish computational linguist, a research scientist at Spotify, and a co-founder of the text analytics company Gavagai AB. He holds a PhD in computational linguistics from Stockholm University and the title of docent (adjunct professor) of language technology at Helsinki University. Karlgren is known for having pioneered the application of computational linguistics to stylometry, for having first formulated the notion of a recommender system, and for his continued work in bringing non-topical features of text to the attention of the information access research field. His research focuses on questions relating to information access, genre and stylistics, distributional pragmatics, and evaluation ...

Elaine Rich

Elaine Alice Rich is an American computer scientist, known for her textbooks on artificial intelligence and automata theory and for her research on user modeling. She retired as a distinguished senior lecturer at the University of Texas at Austin. Rich is the daughter of applied mathematician Robert Peter Rich. She majored in linguistics and applied mathematics at Brown University, graduating magna cum laude in 1972. She completed her Ph.D. at Carnegie Mellon University in 1979; her doctoral dissertation, "Building and Exploiting User Models", was supervised by George G. Robertson. She joined the University of Texas at Austin as an assistant professor in 1979, but in 1985 moved to the Microelectronics and Computer Technology Corporation (MCC) as a researcher in the Human Interface Laboratory and the Knowledge-Based Natural Language Project. At MCC she became director of the Artificial Intelligence Laboratory in 1988. She left MCC in 1993. In 1998 she r ...

Gonzalez V

Gonzalez or González may refer to:
People
* González (surname)
Places
* González, Cesar, Colombia
* González Municipality, Tamaulipas, Mexico
* Gonzalez, Florida, United States
* González Island, Antarctica
* González Anchorage, Antarctica
* Juan González, Adjuntas, Puerto Rico
* Pedro González, Panama
Other
* Ernesto Gonzalez, cartoon character in "Bordertown" (American TV series)
* Gonzalez (band), a British band, and their 1974 album
* Gonzalez (organ builders), French firm of organ builders
* González Byass, a Spanish winery
* USS Gonzalez, a U.S. Navy destroyer
See also
* Gonçalves, Portuguese equivalent of Gonzalez
* Gonsales, Portuguese variation of Gonzalez
* Gonsalves, English language variation of Gonçalves
* Gonzales (other) ...

Search Algorithm

In computer science, a search algorithm is an algorithm designed to solve a search problem. Search algorithms retrieve information stored within a particular data structure, or calculated in the search space of a problem domain, with either discrete or continuous values. Although search engines use search algorithms, they belong to the study of information retrieval, not algorithmics. The appropriate search algorithm often depends on the data structure being searched, and may also incorporate prior knowledge about the data. Search algorithms can be made faster or more efficient by specially constructed database structures, such as search trees, hash maps, and database indexes. Based on their mechanism of searching, search algorithms can be classified into three types: linear, binary, and hashing. Linear search algorithms check every record for the one associated with a target key i ...
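
The three mechanisms named above can be compared side by side. This is an illustrative Python sketch with hypothetical function names (`linear_search`, `binary_search`, `build_hash_index`); it assumes the records are plain comparable keys, that binary search receives them already sorted, and it uses a Python `dict` as the hash table.

```python
def linear_search(records, key):
    """Check every record in turn; O(n) comparisons in the worst case. Returns index or -1."""
    for i, record in enumerate(records):
        if record == key:
            return i
    return -1


def binary_search(sorted_records, key):
    """Requires sorted input; halves the candidate range each step, O(log n). Returns index or -1."""
    lo, hi = 0, len(sorted_records) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_records[mid] == key:
            return mid
        if sorted_records[mid] < key:
            lo = mid + 1  # key can only be in the upper half
        else:
            hi = mid - 1  # key can only be in the lower half
    return -1


def build_hash_index(records):
    """Map each key to its position in one O(n) pass (a later duplicate overwrites an earlier one).

    Subsequent lookups through the returned dict take constant time on average.
    """
    return {record: i for i, record in enumerate(records)}


keys = [3, 8, 15, 23, 42]
print(linear_search(keys, 23), binary_search(keys, 23), build_hash_index(keys).get(23, -1))  # 3 3 3
```

The trade-off is in the preparation: linear search needs none, binary search needs sorted data, and hashing pays an up-front indexing cost in exchange for fast repeated lookups.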

Special Interest Group On Information Retrieval

SIGIR is the Association for Computing Machinery's Special Interest Group on Information Retrieval. The group's scope is the theory and application of computers to the acquisition, organization, storage, retrieval and distribution of information, with an emphasis on non-numeric information ranging from natural language to highly structured databases. The annual international SIGIR conference, which began in 1978, is considered the most important in the field of information retrieval. SIGIR also sponsors the annual Joint Conference on Digital Libraries (JCDL) in association with SIGWEB, the Conference on Information and Knowledge Management (CIKM), and the International Conference on Web Search and Data Mining (WSDM) in association with SIGKDD, SIGMOD, and SIGWEB. The group gives several awards for contributions to the field of information retrieval. The most important award is the ...