
Proximal Gradient Method

Proximal gradient methods are a generalized form of projection used to solve non-differentiable convex optimization problems. Many interesting problems can be formulated as convex optimization problems of the form $\operatorname{min}\limits_{x \in \mathbb{R}^N} \sum_{i=1}^n f_i(x)$, where $f_i: \mathbb{R}^N \rightarrow \mathbb{R},\ i = 1, \dots, n$ are possibly non-differentiable convex functions. The lack of differentiability rules out conventional smooth optimization techniques like the steepest descent method and the conjugate gradient method, but proximal gradient methods can be used instead. Proximal gradient methods start with a splitting step, in which the functions $f_1, \dots, f_n$ are used individually so as to yield an easily implementable algorithm. They are called proximal because each non-differentiable function among $f_1, \dots, f_n$ is involved via its proximity operator. Iterative shrinkage thresholding algorithm, projected Landweber, projected gradient, alternating projections, alternating ...
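
As a rough illustration of the splitting idea, the following Python sketch alternates a gradient step on a smooth term with a proximity-operator step on a non-smooth term. The function names (`proximal_gradient`, `soft_threshold`, `project_box`), the fixed step size, and the toy objective are assumptions made for this example and do not come from the summary above.

```python
from typing import Callable, List

Vector = List[float]


def soft_threshold(v: Vector, t: float) -> Vector:
    """Proximity operator of t * ||x||_1 (componentwise shrinkage)."""
    return [(1.0 if vi > 0 else -1.0) * max(abs(vi) - t, 0.0) for vi in v]


def project_box(v: Vector, lo: float, hi: float) -> Vector:
    """Proximity operator of the indicator of [lo, hi]^N, i.e. a projection."""
    return [min(max(vi, lo), hi) for vi in v]


def proximal_gradient(
    grad_f: Callable[[Vector], Vector],
    prox_g: Callable[[Vector, float], Vector],
    x0: Vector,
    step: float,
    iters: int = 200,
) -> Vector:
    """Iterate x <- prox_g(x - step * grad_f(x), step)."""
    x = list(x0)
    for _ in range(iters):
        g = grad_f(x)
        forward = [xi - step * gi for xi, gi in zip(x, g)]  # gradient (forward) step
        x = prox_g(forward, step)  # proximal (backward) step
    return x


# Toy problem (assumed): smooth part f(x) = 0.5 * ||x - b||^2.
b = [3.0, -0.5, 0.6]
lam = 1.0


def grad_f(x: Vector) -> Vector:
    return [xi - bi for xi, bi in zip(x, b)]


# Non-smooth part g(x) = lam * ||x||_1 gives iterative shrinkage thresholding.
x_l1 = proximal_gradient(
    grad_f, lambda v, s: soft_threshold(v, lam * s), [0.0, 0.0, 0.0], step=0.5
)

# Non-smooth part g = indicator of the box [0, 1]^3 gives projected gradient.
x_box = proximal_gradient(
    grad_f, lambda v, s: project_box(v, 0.0, 1.0), [0.0, 0.0, 0.0], step=0.5
)
```

Swapping only the `prox_g` argument changes the method from shrinkage thresholding to projected gradient, which is one way to read the statement that proximal methods generalize projection.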

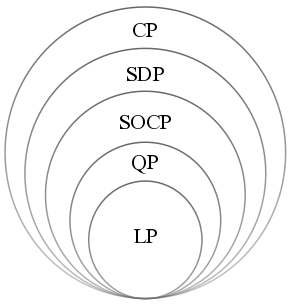

Convex Optimization

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

Definition (abstract form): A convex optimization problem is defined by two ingredients:

* The *objective function*, which is a real-valued convex function of $n$ variables, $f :\mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}$;
* The *feasible set*, which is a convex subset $C\subseteq \mathbb{R}^n$.

The goal of the problem is to find some $\mathbf{x}^\ast \in C$ attaining $\inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}$. In general, there are three options regarding the existence of a solution:

* If such a point $\mathbf{x}^\ast$ exists, it is referred to as an *optimal point* or *solution*; the set of all optimal points is called the *optimal set*; and the problem is called *solvable*.
* If f is unbou ...
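
To make the abstract form concrete, here is a minimal Python sketch that minimizes a convex function of one variable over a feasible set that is a closed interval, using ternary search. The helper name `minimize_convex_on_interval` and the two test objectives are assumptions chosen for illustration; ternary search is just one simple method that relies on convexity.

```python
from typing import Callable


def minimize_convex_on_interval(
    f: Callable[[float], float], lo: float, hi: float, iters: int = 200
) -> float:
    """Ternary search: shrink [lo, hi] around a minimizer of a convex f."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) <= f(m2):
            hi = m2  # by convexity, a minimizer lies in [lo, m2]
        else:
            lo = m1  # by convexity, a minimizer lies in [m1, hi]
    return 0.5 * (lo + hi)


# Minimizer in the interior of the feasible set C = [0, 1].
x_interior = minimize_convex_on_interval(lambda x: (x - 0.3) ** 2, 0.0, 1.0)

# The unconstrained minimizer x = 3 is infeasible, so the optimal point
# sits on the boundary of C.
x_boundary = minimize_convex_on_interval(lambda x: (x - 3.0) ** 2, 0.0, 1.0)
```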

Statistical Learning Theory

Statistical learning theory is a framework for machine learning drawing from the fields of statistics and functional analysis. Statistical learning theory deals with the statistical inference problem of finding a predictive function based on data. Statistical learning theory has led to successful applications in fields such as computer vision, speech recognition, and bioinformatics.

Introduction: The goals of learning are understanding and prediction. Learning falls into many categories, including supervised learning, unsupervised learning, online learning, and reinforcement learning. From the perspective of statistical learning theory, supervised learning is best understood. Supervised learning involves learning from a training set of data. Every point in the training set is an input–output pair, where the input maps to an output. The learning problem consists of inferring the function that maps between the input and the output, such that the learned function can be used to ...
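
The supervised setting described above can be sketched in a few lines of Python. This is only an illustrative example: the training set is made up, and least-squares fitting of a straight line is one assumed choice of learning rule among many.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Least-squares fit of y ~ slope * x + intercept on a training set."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical training set of input-output pairs generated by y = 2x + 1.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(train_x, train_y)


def predict(x: float) -> float:
    """Learned function, applicable to inputs outside the training set."""
    return slope * x + intercept
```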

MATLAB

MATLAB (an abbreviation of "MATrix LABoratory") is a proprietary multi-paradigm programming language and numeric computing environment developed by MathWorks. MATLAB allows matrix manipulations, plotting of functions and data, implementation of algorithms, creation of user interfaces, and interfacing with programs written in other languages. Although MATLAB is intended primarily for numeric computing, an optional toolbox uses the MuPAD symbolic engine, giving access to symbolic computing abilities. An additional package, Simulink, adds graphical multi-domain simulation and model-based design for dynamic and embedded systems. MATLAB has more than four million users worldwide. They come from various backgrounds of engineering, science, and economics. More than 5000 colleges and universities worldwide use MATLAB to support instruction and research.

History (origins): MATLAB was invented by mathematician and computer programmer Cleve Moler. The idea for MATLAB was base ...

Julia (Programming Language)

Julia is a high-level, general-purpose, dynamic programming language designed to be fast and productive, e.g. for data science, artificial intelligence, machine learning, modeling and simulation. It is most commonly used for numerical analysis and computational science. Distinctive aspects of Julia's design include a type system with parametric polymorphism, the use of multiple dispatch as a core programming paradigm, a default just-in-time (JIT) compiler (with support for ahead-of-time compilation), and an efficient (multi-threaded) garbage collection implementation. Notably, Julia does not support classes with encapsulated methods; instead it relies on structs with generic methods/functions not tied to them. By default, Julia is run similarly to scripting languages, using its runtime, and allows for read–eval–print loop, i ...

Frank–Wolfe Algorithm

The Frank–Wolfe algorithm is an iterative first-order optimization algorithm for constrained convex optimization. Also known as the conditional gradient method, reduced gradient algorithm and the convex combination algorithm, the method was originally proposed by Marguerite Frank and Philip Wolfe in 1956. In each iteration, the Frank–Wolfe algorithm considers a linear approximation of the objective function, and moves towards a minimizer of this linear function (taken over the same domain).

Problem statement: Suppose $\mathcal{D}$ is a compact convex set in a vector space and $f \colon \mathcal{D} \to \mathbb{R}$ is a convex, differentiable real-valued function. The Frank–Wolfe algorithm solves the optimization problem: minimize $f(\mathbf{x})$ subject to $\mathbf{x} \in \mathcal{D}$.

Algorithm:

* *Initialization:* Let $k \leftarrow 0$, and let $\mathbf{x}_0$ be any point in $\mathcal{D}$.
* Step 1. *Direction-finding subproblem:* Find $\mathbf{s}_k$ solving: Minimize \mathbf{s}^T \nabla f(\mathb ...
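
The following Python sketch shows one possible instance of the method on a simple domain. The choice of the probability simplex as the compact convex set, the step size 2 / (k + 2), the quadratic objective, and the name `frank_wolfe_simplex` are all assumptions made for this illustration; the truncated summary above does not specify them.

```python
from typing import Callable, List

Vector = List[float]


def frank_wolfe_simplex(
    grad_f: Callable[[Vector], Vector], x0: Vector, iters: int = 2000
) -> Vector:
    """Frank-Wolfe over the probability simplex with step size 2 / (k + 2)."""
    x = list(x0)
    for k in range(iters):
        g = grad_f(x)
        # Linear subproblem: over the simplex, a minimizer of <s, g> is the
        # vertex whose coordinate has the smallest gradient entry.
        i_min = min(range(len(g)), key=lambda i: g[i])
        gamma = 2.0 / (k + 2.0)
        # Move towards that vertex: x <- (1 - gamma) * x + gamma * e_{i_min}.
        x = [(1.0 - gamma) * xi for xi in x]
        x[i_min] += gamma
    return x


# Assumed toy objective: f(x) = ||x - c||^2 with c inside the simplex.
c = [0.2, 0.5, 0.3]


def grad_f(x: Vector) -> Vector:
    return [2.0 * (xi - ci) for xi, ci in zip(x, c)]


x_fw = frank_wolfe_simplex(grad_f, x0=[1.0, 0.0, 0.0])
```

Because every iterate is a convex combination of points in the domain, no projection step is needed to stay feasible.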

Proximal Gradient Methods For Learning

Proximal gradient (forward backward splitting) methods for learning is an area of research in optimization and statistical learning theory which studies algorithms for a general class of convex regularization problems where the regularization penalty may not be differentiable. One such example is $\ell_1$ regularization (also known as Lasso) of the form

$$\min_{w\in\mathbb{R}^d} \frac{1}{n}\sum_{i=1}^n (y_i- \langle w,x_i\rangle)^2+ \lambda \|w\|_1, \quad \text{where } x_i\in \mathbb{R}^d\text{ and } y_i\in\mathbb{R}.$$

Proximal gradient methods offer a general framework for solving regularization problems from statistical learning theory with penalties that are tailored to a specific problem application. Such customized penalties can help to induce certain structure in problem solutions, such as *sparsity* (in the case of lasso) or *group structure* (in the case of group lasso). Relevant backgro ...
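
A minimal sketch of how the Lasso objective above might be minimized by forward-backward splitting (often called ISTA) is given below. The function name `lasso_ista`, the step-size rule based on the Frobenius norm, and the tiny data set are assumptions for illustration, not a reference implementation.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def lasso_ista(X: Matrix, y: Vector, lam: float, iters: int = 500) -> Vector:
    """Minimize (1/n) * sum_i (y_i - <w, x_i>)^2 + lam * ||w||_1 by ISTA."""
    n, d = len(X), len(X[0])
    # Upper bound on the Lipschitz constant of the smooth part's gradient:
    # (2/n) * ||X||_F^2, which dominates (2/n) * lambda_max(X^T X).
    lipschitz = (2.0 / n) * sum(v * v for row in X for v in row)
    step = 1.0 / lipschitz
    w = [0.0] * d
    for _ in range(iters):
        residual = [
            sum(wj * xj for wj, xj in zip(w, row)) - yi for row, yi in zip(X, y)
        ]
        grad = [
            (2.0 / n) * sum(r * row[j] for r, row in zip(residual, X))
            for j in range(d)
        ]
        # Forward step: gradient descent on the squared loss.
        v = [wj - step * gj for wj, gj in zip(w, grad)]
        # Backward step: soft-thresholding with threshold step * lam.
        w = [(1.0 if vj > 0 else -1.0) * max(abs(vj) - step * lam, 0.0) for vj in v]
    return w


# Hypothetical data: two samples with orthonormal inputs, so each
# coordinate of the solution can be checked by hand.
X = [[1.0, 0.0], [0.0, 1.0]]
y = [3.0, 0.2]
w_hat = lasso_ista(X, y, lam=1.0)
```

In this toy problem the second coefficient is driven exactly to zero, which illustrates the sparsity that the $\ell_1$ penalty tends to induce.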

Alternating Projection

In mathematics, projections onto convex sets (POCS), sometimes known as the alternating projection method, is a method to find a point in the intersection of two closed convex sets. It is a very simple algorithm and has been rediscovered many times. The simplest case, when the sets are affine spaces, was analyzed by John von Neumann. The case when the sets are affine spaces is special, since the iterates not only converge to a point in the intersection (assuming the intersection is non-empty) but to the orthogonal projection of the point onto the intersection. For general closed convex sets, the limit point need not be the projection. Classical work on the case of two closed convex sets shows that the rate of convergence of the iterates is linear. There are now extensions that consider cases when there are more than two sets, or when the sets are not convex, or that give faster convergence rates. Analysis of POCS and related methods attempts to show that the algorithm converges (and ...
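
The method can be sketched for two concrete closed convex sets in the plane. The sets used here (the line x + y = 1.2 and the closed unit disc), the starting point, and the function names are assumptions picked so that the intersection is non-empty and the projections have simple closed forms.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def project_onto_line(p: Point, a: Point, b: float) -> Point:
    """Orthogonal projection onto the line {q : <a, q> = b}."""
    t = (a[0] * p[0] + a[1] * p[1] - b) / (a[0] ** 2 + a[1] ** 2)
    return (p[0] - t * a[0], p[1] - t * a[1])


def project_onto_disc(p: Point, radius: float) -> Point:
    """Projection onto the closed disc of the given radius centered at 0."""
    norm = math.hypot(p[0], p[1])
    if norm <= radius:
        return p
    return (p[0] * radius / norm, p[1] * radius / norm)


def alternating_projections(p: Point, iters: int = 500) -> Point:
    """Alternate between the two projections, starting from p."""
    for _ in range(iters):
        p = project_onto_line(p, (1.0, 1.0), 1.2)
        p = project_onto_disc(p, 1.0)
    return p


# The limit lies in both sets, but it need not be the projection of the
# starting point onto the intersection.
point = alternating_projections((3.0, 2.0))
```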

Iteration

Iteration is the repetition of a process in order to generate a (possibly unbounded) sequence of outcomes. Each repetition of the process is a single iteration, and the outcome of each iteration is then the starting point of the next iteration. In mathematics and computer science, iteration (along with the related technique of recursion) is a standard element of algorithms.

Mathematics: In mathematics, iteration may refer to the process of iterating a function, i.e. applying a function repeatedly, using the output from one iteration as the input to the next. Iteration of apparently simple functions can produce complex behaviors and difficult problems – for examples, see the Collatz conjecture and juggler sequences. Another use of iteration in mathematics is in iterative methods which are used to produce approximate numerical solutions to certain mathematical problems. Newton's method is an example of an iterative method. Manual calculation of a number's sq ...
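
As a small illustration of an iterative method, the sketch below applies Newton's method to the equation x^2 = a, which gives the update x <- (x + a / x) / 2. The function name `newton_sqrt`, the starting point, and the fixed iteration count are assumptions made for this example.

```python
def newton_sqrt(a: float, x0: float = 1.0, iters: int = 20) -> float:
    """Approximate sqrt(a) for a > 0 by iterating x <- (x + a / x) / 2."""
    x = x0
    for _ in range(iters):
        # The output of one iteration is the input of the next.
        x = 0.5 * (x + a / x)
    return x


root_two = newton_sqrt(2.0)
```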

Projection Operator

In linear algebra and functional analysis, a projection is a linear transformation $P$ from a vector space to itself (an endomorphism) such that $P\circ P=P$. That is, whenever $P$ is applied twice to any vector, it gives the same result as if it were applied once (i.e. $P$ is idempotent). It leaves its image unchanged. This definition of "projection" formalizes and generalizes the idea of graphical projection. One can also consider the effect of a projection on a geometrical object by examining the effect of the projection on points in the object.

Definitions: A projection on a vector space $V$ is a linear operator $P\colon V \to V$ such that $P^2 = P$. When $V$ has an inner product and is complete, i.e. when $V$ is a Hilbert space, the concept of orthogonality can be used. A projection $P$ on a Hilbert space $V$ is called an orthogonal projection if it satisfies $\langle P \mathbf x, \mathbf y \rangle = \langle \mathbf x, P \mathbf y \rangle$ for all $\mathbf x, \mathbf y \in V$. A projection on a Hi ...
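
The two defining properties can be checked numerically for a simple case. The sketch below builds the matrix of the orthogonal projection onto the line spanned by a single vector; the example vector and the helper names `projection_onto_span` and `matmul` are assumptions for illustration.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def projection_onto_span(a: Vector) -> Matrix:
    """Matrix P = a a^T / (a^T a) of the orthogonal projection onto span{a}."""
    aa = sum(ai * ai for ai in a)
    return [[ai * aj / aa for aj in a] for ai in a]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain matrix product of two square matrices of equal size."""
    n = len(A)
    return [
        [sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


P = projection_onto_span([1.0, 2.0, 2.0])
PP = matmul(P, P)

# Idempotence: applying P twice gives the same result as applying it once.
is_idempotent = all(
    abs(PP[i][j] - P[i][j]) < 1e-12 for i in range(3) for j in range(3)
)
# For a real matrix, symmetry is equivalent to <Px, y> = <x, Py> for all x, y.
is_symmetric = all(
    abs(P[i][j] - P[j][i]) < 1e-12 for i in range(3) for j in range(3)
)
```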

Bregman Method

The Bregman method is an iterative algorithm to solve certain convex optimization problems involving regularization. The original version is due to Lev M. Bregman, who published it in 1967. The algorithm is a row-action method accessing constraint functions one by one and the method is particularly suited for large optimization problems where constraints can be efficiently enumerated. The algorithm works particularly well for regularizers such as the $\ell_1$ norm, where it converges very quickly because of an error-cancellation effect.

Algorithm: In order to be able to use the Bregman method, one must frame the problem of interest as finding $\min_u J(u) + f(u)$, where $J$ is a regularizing function such as $\ell_1$. The *Bregman distance* is defined as $D^p(u, v) := J(u) - (J(v) + \langle p, u - v\rangle)$, where $p$ belongs to the subdifferential of $J$ at $v$ (denoted $\partial J(v)$). One performs the iteration $u_{k+1} := \arg\min_u(\alpha D(u, u_k) + f(u))$, with $\alpha$ a constant to ...
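
The Bregman distance itself is easy to evaluate once a subgradient is fixed. The sketch below does this for scalar arguments; the helper name `bregman_distance`, the restriction to one dimension, and the convention that `p` is a subgradient of `J` at the second argument `v` are assumptions made for this example.

```python
from typing import Callable


def bregman_distance(
    J: Callable[[float], float], p: float, u: float, v: float
) -> float:
    """D^p(u, v) = J(u) - (J(v) + p * (u - v)) for scalar u and v."""
    return J(u) - (J(v) + p * (u - v))


# J(u) = 0.5 * u^2 is differentiable, so the only subgradient at v is p = v,
# and the Bregman distance reduces to 0.5 * (u - v)^2.
d_quadratic = bregman_distance(lambda u: 0.5 * u * u, p=1.0, u=3.0, v=1.0)

# J(u) = |u| (the one-dimensional l1 norm) has subgradient p = 1 at v = 1.
d_abs = bregman_distance(abs, p=1.0, u=-2.0, v=1.0)
```

For the absolute value, the distance is zero whenever `u` and `v` have the same sign, so it is not a metric in the usual sense.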