|

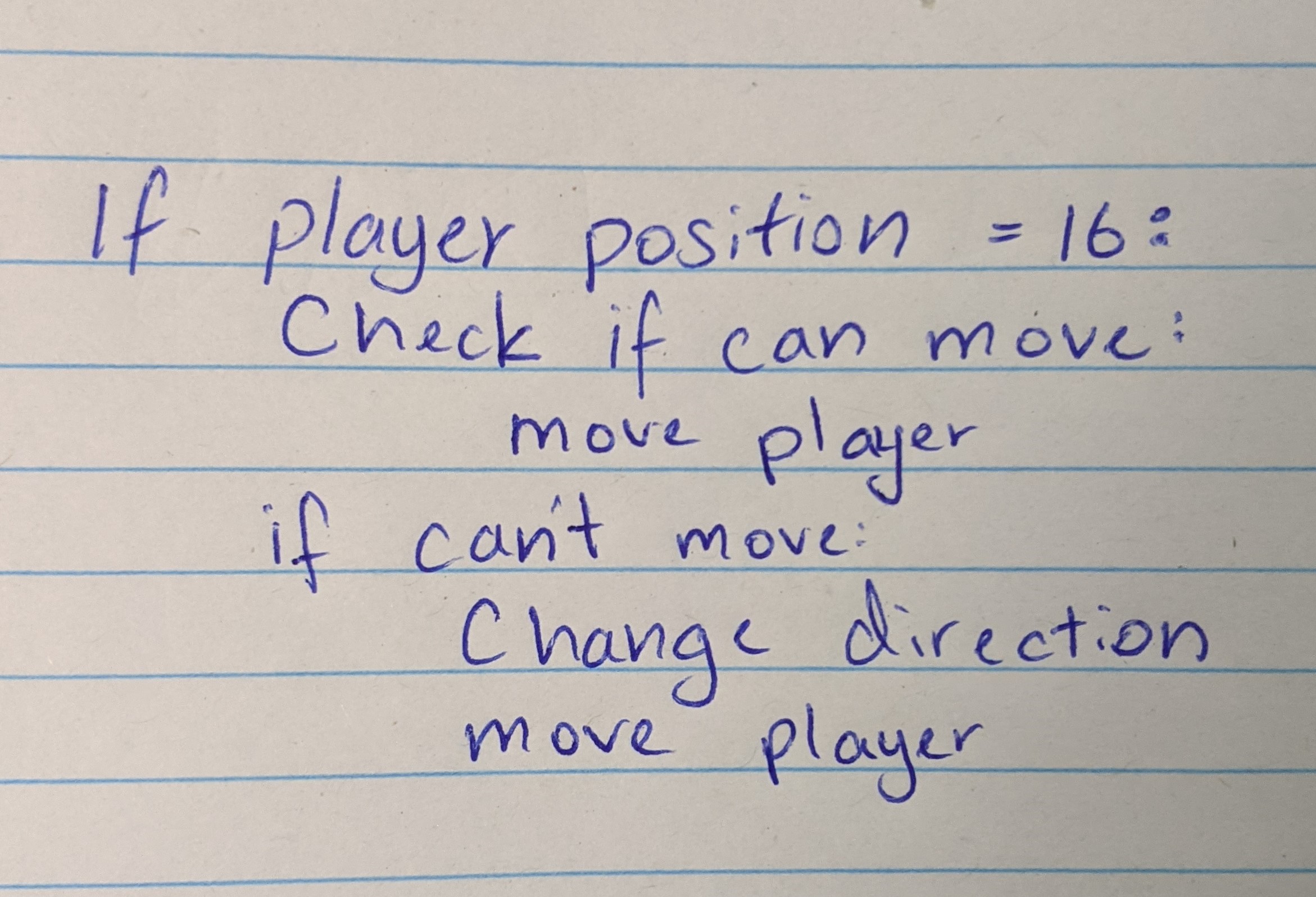

Pseudocode

In computer science, pseudocode is a description of the steps in an algorithm using a mix of conventions of programming languages (like assignment operator, conditional operator, loop) with informal, usually self-explanatory, notation of actions and conditions. Although pseudocode shares features with regular programming languages, it is intended for human reading rather than machine control. Pseudocode typically omits details that are essential for machine implementation of the algorithm, meaning that pseudocode can only be verified by hand. The programming language is augmented with natural language description details, where convenient, or with compact mathematical notation. The reasons for using pseudocode are that it is easier for people to understand than conventional programming language code and that it is an efficient and environment-independent description of the key principles of an algorithm. It is commonly used in textbooks and scientific publications to document ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Skeleton (computer Programming)

Skeleton programming is a style of computer programming based on simple high-level program structures and so called dummy code. Program skeletons resemble pseudocode, but allow parsing, compilation and testing of the code. Dummy code is inserted in a program skeleton to simulate processing and avoid compilation error messages. It may involve empty function declarations, or functions that return a correct result only for a simple test case where the expected response of the code is known. Skeleton programming facilitates a top-down design approach, where a partially functional system with complete high-level structures is designed and coded, and this system is then progressively expanded to fulfill the requirements of the project. Program skeletons are also sometimes used for high-level descriptions of algorithms. A program skeleton may also be utilized as a template that reflects syntax and structures commonly used in a wide class of problems. Skeleton programs are utiliz ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Algorithm

In mathematics and computer science, an algorithm () is a finite sequence of Rigour#Mathematics, mathematically rigorous instructions, typically used to solve a class of specific Computational problem, problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use Conditional (computer programming), conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a Heuristic (computer science), heuristic is an approach to solving problems without well-defined correct or optimal results.David A. Grossman, Ophir Frieder, ''Information Retrieval: Algorithms and Heuristics'', 2nd edition, 2004, For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Programming Languages

A programming language is a system of notation for writing computer programs. Programming languages are described in terms of their syntax (form) and semantics (meaning), usually defined by a formal language. Languages usually provide features such as a type system, variables, and mechanisms for error handling. An implementation of a programming language is required in order to execute programs, namely an interpreter or a compiler. An interpreter directly executes the source code, while a compiler produces an executable program. Computer architecture has strongly influenced the design of programming languages, with the most common type ( imperative languages—which implement operations in a specified order) developed to perform well on the popular von Neumann architecture. While early programming languages were closely tied to the hardware, over time they have developed more abstraction to hide implementation details for greater simplicity. Thousands of programming langua ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Syntax

In linguistics, syntax ( ) is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). Diverse approaches, such as generative grammar and functional grammar, offer unique perspectives on syntax, reflecting its complexity and centrality to understanding human language. Etymology The word ''syntax'' comes from the ancient Greek word , meaning an orderly or systematic arrangement, which consists of (''syn-'', "together" or "alike"), and (''táxis'', "arrangement"). In Hellenistic Greek, this also specifically developed a use referring to the grammatical order of words, with a slightly altered spelling: . The English term, which first appeared in 1548, is partly borrowed from Latin () and Greek, though the L ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Programmer

A programmer, computer programmer or coder is an author of computer source code someone with skill in computer programming. The professional titles Software development, ''software developer'' and Software engineering, ''software engineer'' are used for jobs that require a programmer. Identification Sometimes a programmer or job position is identified by the language used or target platform. For example, assembly language, assembly programmer, web developer. Job title The job titles that include programming tasks have differing connotations across the computer industry and to different individuals. The following are notable descriptions. A ''software developer'' primarily implements software based on specifications and fixes Software bug, bugs. Other duties may include code review, reviewing code changes and software testing, testing. To achieve the required skills for the job, they might obtain a computer science or associate degree, associate degree, attend a Cod ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Top-down And Bottom-up

Bottom-up and top-down are strategies of composition and decomposition in fields as diverse as information processing and ordering knowledge, software, humanistic and scientific theories (see systemics), and management and organization. In practice they can be seen as a style of thinking, teaching, or leadership. A top-down approach (also known as ''stepwise design'' and stepwise refinement and in some cases used as a synonym of ''decomposition'') is essentially the breaking down of a system to gain insight into its compositional subsystems in a reverse engineering fashion. In a top-down approach an overview of the system is formulated, specifying, but not detailing, any first-level subsystems. Each subsystem is then refined in yet greater detail, sometimes in many additional subsystem levels, until the entire specification is reduced to base elements. A top-down model is often specified with the assistance of black boxes, which makes it easier to manipulate. However, black b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Moving Picture Experts Group

The Moving Picture Experts Group (MPEG) is an alliance of working groups established jointly by ISO and IEC that sets standards for media coding, including compression coding of audio, video, graphics, and genomic data; and transmission and file formats for various applications.John Watkinson, ''The MPEG Handbook'', p. 1 Together with JPEG, MPEG is organized under ISO/IEC JTC 1/ SC 29 – ''Coding of audio, picture, multimedia and hypermedia information'' (ISO/IEC Joint Technical Committee 1, Subcommittee 29). MPEG formats are used in various multimedia systems. The most well known older MPEG media formats typically use MPEG-1, MPEG-2, and MPEG-4 AVC media coding and MPEG-2 systems transport streams and program streams. Newer systems typically use the MPEG base media file format and dynamic streaming (a.k.a. MPEG-DASH). History MPEG was established in 1988 by the initiative of Dr. Hiroshi Yasuda ( NTT) and Dr. Leonardo Chiariglione ( CSELT). Chiariglione was th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

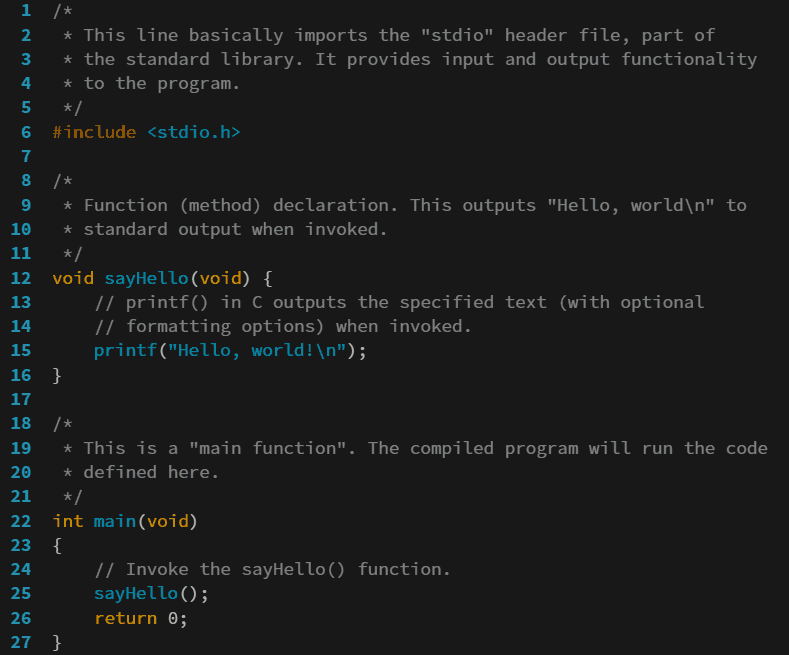

C (programming Language)

C (''pronounced'' '' – like the letter c'') is a general-purpose programming language. It was created in the 1970s by Dennis Ritchie and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted Central processing unit, CPUs. It has found lasting use in operating systems code (especially in Kernel (operating system), kernels), device drivers, and protocol stacks, but its use in application software has been decreasing. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B (programming language), B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming langu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans Theoretical computer science, theoretical disciplines (such as algorithms, theory of computation, and information theory) to Applied science, applied disciplines (including the design and implementation of Computer architecture, hardware and Software engineering, software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Judith Gersting

Judith Lee MacKenzie Gersting (born August 20, 1940) is an American mathematician, computer scientist, and textbook author. She is a professor emerita of computer science at Indiana University–Purdue University Indianapolis and at the University of Hawaiʻi at Hilo. Education and career Gersting graduated from Stetson University in 1962, and completed a Ph.D. in mathematics in 1969 at Arizona State University. Her dissertation, ''Some Results on t-Regressive Isols'', concerned recursive function theory and was supervised by Matt Hassett. After holding a faculty position in the department of mathematical sciences at Indiana University–Purdue University Indianapolis (IUPUI) for ten years, and becoming a full professor there, she spent a year at the University of Central Florida before returning to IUPUI in 1981 as professor of mathematics and acting chair of the department of computer and information science. She came to the University of Hawaiʻi at Hilo in 1990, and chaire ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Numerical Computation

Numerical analysis is the study of algorithms that use numerical approximation (as opposed to symbolic manipulations) for the problems of mathematical analysis (as distinguished from discrete mathematics). It is the study of numerical methods that attempt to find approximate solutions of problems rather than the exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences like economics, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics (predicting the motions of planets, stars and galaxies), numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Pascal (programming Language)

Pascal is an imperative and procedural programming language, designed by Niklaus Wirth as a small, efficient language intended to encourage good programming practices using structured programming and data structuring. It is named after French mathematician, philosopher and physicist Blaise Pascal. Pascal was developed on the pattern of the ALGOL 60 language. Wirth was involved in the process to improve the language as part of the ALGOL X efforts and proposed a version named ALGOL W. This was not accepted, and the ALGOL X process bogged down. In 1968, Wirth decided to abandon the ALGOL X process and further improve ALGOL W, releasing this as Pascal in 1970. On top of ALGOL's scalars and arrays, Pascal enables defining complex datatypes and building dynamic and recursive data structures such as lists, trees and graphs. Pascal has strong typing on all objects, which means that one type of data cannot be converted to or interpreted as another without explicit conversions ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |