
Penalty Function

In mathematical optimization, penalty methods are a class of algorithms for solving constrained optimization problems. A penalty method replaces a constrained optimization problem by a series of unconstrained problems whose solutions ideally converge to the solution of the original constrained problem. The unconstrained problems are formed by adding a term, called a penalty function, to the objective function; this term consists of a penalty parameter multiplied by a measure of violation of the constraints. The measure of violation is nonzero when the constraints are violated and is zero in the region where constraints are not violated. Description: Let us say we are solving the following constrained problem: \min_x f(\mathbf x) subject to c_i(\mathbf x) \le 0 ~\forall i \in I. This problem can be solved as a series of unconstrained minimization problems \min f_p (\mathbf x) := f (\mathbf x) + p ~ \sum_{i \in I} ~ g(c_i(\mathbf x)) where g(c_i(\mathbf x))=\max(0,c_ ...
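
The sequence of unconstrained problems described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation. The toy problem (minimize (x - 2)^2 subject to x - 1 <= 0), the quadratic penalty g(c) = max(0, c)^2 (assumed here because the source's definition of g is truncated), the schedule of penalty parameters, and all function names are assumptions made for the example. The inner solver is a plain golden-section search, which is adequate only because the penalized function is one-dimensional and convex.

```python
import math


def golden_section_minimize(func, lo, hi, tol=1e-10):
    """Minimize a unimodal 1-D function on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if func(c) < func(d):
            b = d  # the minimizer lies in [a, d]
        else:
            a = c  # the minimizer lies in [c, b]
    return 0.5 * (a + b)


def f(x):
    """Hypothetical objective: unconstrained minimum at x = 2."""
    return (x - 2.0) ** 2


def c(x):
    """Hypothetical constraint c(x) <= 0, i.e. x <= 1."""
    return x - 1.0


def penalized(x, p):
    """f_p(x) = f(x) + p * g(c(x)) with the assumed quadratic penalty g."""
    return f(x) + p * max(0.0, c(x)) ** 2


def penalty_method(p_values=(1.0, 10.0, 100.0, 1000.0)):
    """Solve one unconstrained problem per penalty parameter p."""
    iterates = []
    for p in p_values:
        x = golden_section_minimize(lambda t: penalized(t, p), -10.0, 10.0)
        iterates.append((p, x))
    return iterates


if __name__ == "__main__":
    for p, x in penalty_method():
        print(f"p = {p:8.1f}  x = {x:.6f}  violation = {max(0.0, c(x)):.6f}")
```

For this toy problem the minimizer of f_p works out analytically to (2 + p) / (1 + p), so the iterates approach the constrained solution x = 1 from the infeasible side as p grows. The same growth in p makes the unconstrained problems progressively harder to solve accurately.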

Mathematical Optimization

Mathematical optimization (alternatively spelled optimisation) or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. Optimization problems: Opti ...

Ill-conditioned

In numerical analysis, the condition number of a function measures how much the output value of the function can change for a small change in the input argument. This is used to measure how sensitive a function is to changes or errors in the input, and how much error in the output results from an error in the input. Very frequently, one is solving the inverse problem: given f(x) = y, one is solving for x, and thus the condition number of the (local) inverse must be used. The condition number is derived from the theory of propagation of uncertainty, and is formally defined as the value of the asymptotic worst-case relative change in output for a relative change in input. The "function" is the solution of a problem and the "arguments" are the data in the problem. The condition number is frequently applied to questions in linear algebra, in which case the derivative is straightforward but the error could be in many different directions, and is thus computed from the geometry of ...
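
As a small illustration of "relative change in output for a relative change in input", the sketch below estimates the relative condition number of a differentiable scalar function, |x f'(x) / f(x)|, using a central difference. The function name `relative_condition`, the step size, and the two test functions are choices made for this example only.

```python
import math


def relative_condition(func, x, rel_step=1e-6):
    """Estimate |x * f'(x) / f(x)| with a central finite difference."""
    h = abs(x) * rel_step
    derivative = (func(x + h) - func(x - h)) / (2.0 * h)
    return abs(x * derivative / func(x))


if __name__ == "__main__":
    # Square root is well conditioned: a relative input error is roughly halved.
    print(relative_condition(math.sqrt, 4.0))
    # Subtracting nearly equal numbers is ill conditioned: for f(x) = x - 1
    # the condition number is |x / (x - 1)|, which blows up as x approaches 1.
    print(relative_condition(lambda x: x - 1.0, 1.001))
```

A value near 1 or below means relative input errors are not amplified. A large value, as in the second call, means a small relative perturbation of the input can produce a much larger relative perturbation of the output.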

Interior Point Method

Interior-point methods (also referred to as barrier methods or IPMs) are algorithms for solving linear and non-linear convex optimization problems. IPMs combine two advantages of previously known algorithms:

* Theoretically, their run-time is polynomial, in contrast to the simplex method, which has exponential run-time in the worst case.
* Practically, they run as fast as the simplex method, in contrast to the ellipsoid method, which has polynomial run-time in theory but is very slow in practice.

In contrast to the simplex method, which traverses the boundary of the feasible region, and the ellipsoid method, which bounds the feasible region from outside, an IPM reaches a best solution by traversing the interior of the feasible region, hence the name. History: An interior point method was discovered by Soviet mathematician I. I. Dikin in 1967. The method was reinvented in the U.S. in the mid-1980s. In 1984, ...

Sequential Linear-quadratic Programming

Sequential linear-quadratic programming (SLQP) is an iterative method for nonlinear optimization problems where the objective function and constraints are twice continuously differentiable. Similarly to sequential quadratic programming (SQP), SLQP proceeds by solving a sequence of optimization subproblems. The difference between the two approaches is that:

* in SQP, each subproblem is a quadratic program, with a quadratic model of the objective subject to a linearization of the constraints;
* in SLQP, two subproblems are solved at each step: a linear program (LP) used to determine an active set, followed by an equality-constrained quadratic program (EQP) used to compute the total step.

This decomposition makes SLQP suitable for large-scale optimization problems for which efficient LP and EQP solvers are available, these problems being easier to scale than full-fledged quadratic programs. It may be considered related to, but distinct from, quasi-Newton methods. Algorithm basics: Con ...

Successive Linear Programming

Successive Linear Programming (SLP), also known as Sequential Linear Programming, is an optimization technique for approximately solving nonlinear optimization problems. It is related to, but distinct from, quasi-Newton methods. Starting at some estimate of the optimal solution, the method is based on solving a sequence of first-order approximations (i.e. linearizations) of the model. The linearizations are linear programming problems, which can be solved efficiently. As the linearizations need not be bounded, trust regions or similar techniques are needed to ensure convergence in theory. SLP has been used widely in the petrochemical industry since the 1970s. Since then, however, SLP methods have been superseded by sequential quadratic programming methods. While solving a QP subproblem takes more time than solving an LP one, the overall decrease in the number of iterations, due to improved convergence, results in significantly lower running times and fewer function evaluations. S ...

Sequential Quadratic Programming

Sequential quadratic programming (SQP) is an iterative method for constrained nonlinear optimization, also known as the Lagrange-Newton method. SQP methods are used on mathematical problems for which the objective function and the constraints are twice continuously differentiable, but not necessarily convex. SQP methods solve a sequence of optimization subproblems, each of which optimizes a quadratic model of the objective subject to a linearization of the constraints. If the problem is unconstrained, then the method reduces to Newton's method for finding a point where the gradient of the objective vanishes. If the problem has only equality constraints, then the method is equivalent to applying Newton's method to the first-order optimality conditions, or Karush–Kuhn–Tucker conditions, of the problem. Algorithm basics: Consider a nonlinear programming problem of the form: \begin{array}{rl} \min\limits_{x} & f(x) \\ \mbox{subject to} & h(x) \ge 0 \\ & g(x) = 0. \end{array} where x \in \mathbb{R}^n, f: \mathbb ...

Augmented Lagrangian Method

Augmented Lagrangian methods are a certain class of algorithms for solving constrained optimization problems. They have similarities to penalty methods in that they replace a constrained optimization problem by a series of unconstrained problems and add a penalty term to the objective, but the augmented Lagrangian method adds yet another term designed to mimic a Lagrange multiplier. The augmented Lagrangian is related to, but not identical with, the method of Lagrange multipliers. Viewed differently, the unconstrained objective is the Lagrangian of the constrained problem, with an additional penalty term (the augmentation). The method was originally known as the method of multipliers and was studied in the 1970s and 1980s as a potential alternative to penalty methods. It was first discussed by Magnus Hestenes and then by Michael Powell in 1969. The method was studied by R. Tyrrell Rockafellar in relation to Fenchel duality, particularly in relation to proximal-point methods ...
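
The interplay between the penalty term and the multiplier-like term can be sketched on a one-variable toy problem. Everything specific here is an assumption made for illustration: the problem (minimize x^2 subject to x - 1 = 0), the augmented objective x^2 + lam * (x - 1) + (mu / 2) * (x - 1)^2, the multiplier update lam <- lam + mu * (x - 1), and the fixed value of mu. The inner minimization is done in closed form, which is possible only because this toy objective is quadratic.

```python
def augmented_lagrangian_demo(mu=10.0, iterations=10):
    """Method-of-multipliers sketch for: minimize x**2 subject to x - 1 = 0."""
    lam = 0.0  # initial multiplier estimate (assumed starting value)
    history = []
    for _ in range(iterations):
        # Inner problem: minimize x**2 + lam*(x - 1) + (mu/2)*(x - 1)**2.
        # Setting the derivative 2x + lam + mu*(x - 1) to zero gives:
        x = (mu - lam) / (2.0 + mu)
        # Multiplier update driven by the remaining constraint violation.
        lam = lam + mu * (x - 1.0)
        history.append((x, lam))
    return history


if __name__ == "__main__":
    for k, (x, lam) in enumerate(augmented_lagrangian_demo(), start=1):
        print(f"iteration {k:2d}  x = {x:.8f}  lam = {lam:.8f}")
```

In this sketch x approaches 1 and lam approaches -2 (the multiplier satisfying 2x + lam = 0 at x = 1) while mu stays fixed, whereas a pure penalty approach would need the penalty parameter to grow without bound to reach the same accuracy.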

Barrier Method (mathematics)

In constrained optimization, a field of mathematics, a barrier function is a continuous function whose value increases to infinity as its argument approaches the boundary of the feasible region of an optimization problem. Such functions are used to replace inequality constraints by a penalizing term in the objective function that is easier to handle. A barrier function is also called an interior penalty function, as it is a penalty function that forces the solution to remain within the interior of the feasible region. The two most common types of barrier functions are inverse barrier functions and logarithmic barrier functions. Resumption of interest in logarithmic barrier functions was motivated by their connection with primal-dual interior point methods. Motivation: Consider the following constrained optimization problem: :minimize :subject to where is some constant. If one wishes to remove the inequality constraint, the problem can be reformulated as :minimize , :where ...
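
A logarithmic barrier can be illustrated on a one-variable toy problem. The problem (minimize (x - 2)^2 subject to x <= 1), the barrier term -mu * log(1 - x), the schedule of mu values, and the function names are all assumptions chosen for this sketch. The minimizer of each barrier problem is found by bisection on the derivative, which works here because the barrier objective is convex on the interior x < 1.

```python
import math


def barrier_objective(x, mu):
    """Hypothetical objective plus log barrier; defined only for x < 1."""
    return (x - 2.0) ** 2 - mu * math.log(1.0 - x)


def barrier_gradient(x, mu):
    """Derivative of barrier_objective with respect to x."""
    return 2.0 * (x - 2.0) + mu / (1.0 - x)


def minimize_barrier(mu, lo=-10.0, hi=1.0 - 1e-12, tol=1e-12):
    """Find the interior minimizer by bisection on the increasing derivative."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if barrier_gradient(mid, mu) > 0.0:
            hi = mid  # minimizer lies to the left of mid
        else:
            lo = mid  # minimizer lies to the right of mid
    return 0.5 * (lo + hi)


def barrier_path(mu_values=(1.0, 0.1, 0.01, 0.001)):
    """Minimizers of the barrier problem for a decreasing sequence of mu."""
    return [(mu, minimize_barrier(mu)) for mu in mu_values]


if __name__ == "__main__":
    for mu, x in barrier_path():
        print(f"mu = {mu:6.3f}  x = {x:.8f}  value = {barrier_objective(x, mu):.8f}")
```

For this toy problem the minimizer is (3 - sqrt(1 + 2 * mu)) / 2, so every iterate stays strictly inside the feasible region and approaches the constrained solution x = 1 from the interior as mu shrinks.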

Contact Mechanics

Contact mechanics is the study of the deformation of solids that touch each other at one or more points. A central distinction in contact mechanics is between stresses acting perpendicular to the contacting bodies' surfaces (known as normal stress) and frictional stresses acting tangentially between the surfaces (shear stress). Normal contact mechanics or frictionless contact mechanics focuses on normal stresses caused by applied normal forces and by the adhesion present on surfaces in close contact, even if they are clean and dry. Frictional contact mechanics emphasizes the effect of friction forces. Contact mechanics is part of mechanical engineering. The physical and mathematical formulation of the subject is built upon the mechanics of materials and continuum mechanics and focuses on computations involving elastic, viscoelastic, and p ...

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of mathematically rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a heuristic is an approach to solving problems without well-defined correct or optimal results (David A. Grossman, Ophir Frieder, Information Retrieval: Algorithms and Heuristics, 2nd edition, 2004). For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ...

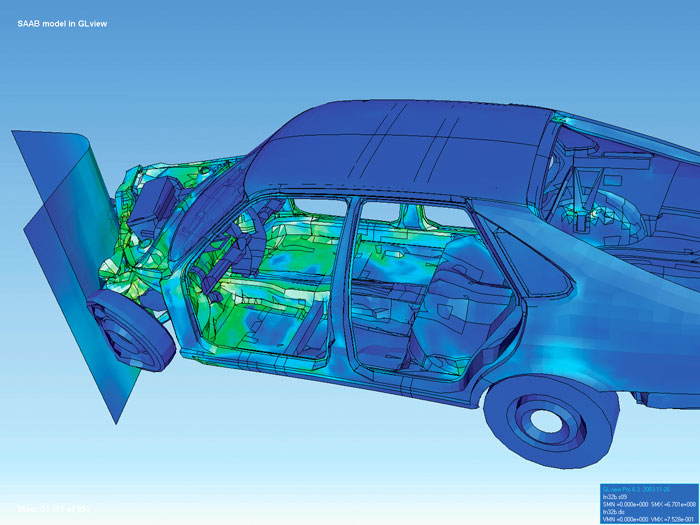

Finite Element Method

The finite element method (FEM) is a popular method for numerically solving differential equations arising in engineering and mathematical modeling. Typical problem areas of interest include the traditional fields of structural analysis, heat transfer, fluid flow, mass transport, and electromagnetic potential. Computers are usually used to perform the calculations required. With high-speed supercomputers, better solutions can be achieved and are often required to solve the largest and most complex problems. FEM is a general numerical method for solving partial differential equations in two- or three-space variables (i.e., some boundary value problems). There are also studies about using FEM to solve high-dimensional problems. To solve a problem, FEM subdivides a large system into smaller, simpler parts called finite elements. This is achieved by a particular space discretization in the space dimensions, which is implemented by the construction of a mesh of the object: the numer ...

Image Compression

Image compression is a type of data compression applied to digital images, to reduce their cost for storage or transmission. Algorithms may take advantage of visual perception and the statistical properties of image data to provide superior results compared with generic data compression methods which are used for other digital data. Lossy and lossless image compression: Image compression may be lossy or lossless. Lossless compression is preferred for archival purposes and often for medical imaging, technical drawings, clip art, or comics. Lossy compression methods, especially when used at low bit rates, introduce compression artifacts. Lossy methods are especially suitable for natural images such as photographs in applications where minor (sometimes imperceptible) loss of fidelity is acceptable to achieve a substantial reduction in bit rate. Lossy compression that produces negligible differences ...