
Ordinal Regression

In statistics, ordinal regression, also called ordinal classification, is a type of regression analysis used for predicting an ordinal variable, i.e. a variable whose value exists on an arbitrary scale where only the relative ordering between different values is significant. It can be considered an intermediate problem between regression and classification. Examples of ordinal regression are ordered logit and ordered probit. Ordinal regression turns up often in the social sciences, for example in the modeling of human levels of preference (on a scale from, say, 1–5 for "very poor" through "excellent"), as well as in information retrieval. In machine learning, ordinal regression may also be called ranking learning.

Linear models for ordinal regression: Ordinal regression can be performed using a generalized linear model (GLM) that fits both a coefficient vector and a set of thresholds to a dataset. Suppose one has a set of observations, represented by length- vectors throu ...
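
As a rough illustration of how a coefficient vector and a set of thresholds can work together, the sketch below assumes a simple threshold rule: the linear score is compared against increasing thresholds, and the predicted category is one plus the number of thresholds the score exceeds. The function name `predict_ordinal`, its parameters, and the example values are hypothetical and are not taken from the text above.

```python
from bisect import bisect_left


def predict_ordinal(weights, thresholds, features):
    """Return an ordinal category in 1..len(thresholds) + 1.

    Assumes `thresholds` is sorted in increasing order. The category is
    one plus the number of thresholds strictly below the linear score.
    """
    score = sum(w * x for w, x in zip(weights, features))
    return bisect_left(thresholds, score) + 1


# Hypothetical fitted parameters: four thresholds give five ordered categories.
example_weights = [0.8, 0.5]
example_thresholds = [-1.0, 0.0, 1.0, 2.0]

low = predict_ordinal(example_weights, example_thresholds, [-2.0, 0.0])
middle = predict_ordinal(example_weights, example_thresholds, [0.0, 0.0])
high = predict_ordinal(example_weights, example_thresholds, [3.0, 1.0])
```

Only the ordering of the categories matters here: moving a threshold changes where one category ends and the next begins, without assigning any meaning to the distance between category labels.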

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Proportional Hazards Model

Proportional hazards models are a class of survival models in statistics. Survival models relate the time that passes before some event occurs to one or more covariates that may be associated with that quantity of time. In a proportional hazards model, the unique effect of a unit increase in a covariate is multiplicative with respect to the hazard rate. The hazard rate at time t is the probability per short time dt that an event will occur between t and t + dt, given that up to time t no event has occurred yet. For example, taking a drug may halve one's hazard rate for a stroke occurring, or changing the material from which a manufactured component is constructed may double its hazard rate for failure. Other types of survival models, such as accelerated failure time models, do not exhibit proportional hazards. The accelerated failure time model describes a situation where the biological or mechanical life history of an event is accelerated (or decelerated). ...
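
The multiplicative effect of a covariate can be sketched numerically. The example below assumes the common exponential parameterization, in which the hazard is a baseline hazard multiplied by exp(coefficient × covariate); the function name `hazard`, the baseline value, and the coefficient are hypothetical choices made only to reproduce the "drug halves the hazard" example.

```python
import math


def hazard(baseline_hazard, coefficients, covariates):
    """Hazard under an assumed form h(t | x) = h0(t) * exp(beta . x)."""
    linear_predictor = sum(b * x for b, x in zip(coefficients, covariates))
    return baseline_hazard * math.exp(linear_predictor)


# Hypothetical coefficient chosen so that taking the drug halves the hazard.
beta_drug = math.log(0.5)

# The hazard ratio is the same whatever the baseline hazard happens to be.
ratio_low_baseline = hazard(0.02, [beta_drug], [1]) / hazard(0.02, [beta_drug], [0])
ratio_high_baseline = hazard(0.30, [beta_drug], [1]) / hazard(0.30, [beta_drug], [0])
```

Because the baseline hazard cancels in the ratio, the treated and untreated hazards stay proportional at every time point, which is what gives the model its name.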

Logistic Regression

In statistics, a logistic model (or logit model) is a statistical model that models the log-odds of an event as a linear combination of one or more independent variables. In regression analysis, logistic regression (or logit regression) estimates the parameters of a logistic model (the coefficients in the linear or non-linear combinations). In binary logistic regression there is a single binary dependent variable, coded by an indicator variable, where the two values are labeled "0" and "1", while the independent variables can each be a binary variable (two classes, coded by an indicator variable) or a continuous variable (any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the logistic function, hence the name. The unit of measurement for the ...
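
The conversion from log-odds to probability described above can be sketched in a few lines. The helper names `logistic` and `predict_probability`, and the coefficient values, are illustrative assumptions rather than part of any particular library.

```python
import math


def logistic(log_odds):
    """Map log-odds (any real number) to a probability strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-log_odds))


def predict_probability(intercept, coefficients, features):
    """Probability of the outcome labeled "1" for one observation."""
    log_odds = intercept + sum(c * x for c, x in zip(coefficients, features))
    return logistic(log_odds)


# Hypothetical fitted model with one continuous and one binary predictor.
probability = predict_probability(-1.0, [0.5, 2.0], [2.0, 0.0])
```

In this example the log-odds are -1.0 + 0.5 × 2.0 + 2.0 × 0.0 = 0, which corresponds to even odds and therefore a probability of one half.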

IJCAI

The International Joint Conference on Artificial Intelligence (IJCAI) is a conference in the field of artificial intelligence. The conference series has been organized by the nonprofit IJCAI Organization since 1969. It is jointly sponsored by the IJCAI Organization and the hosting national AI societies. It was held biennially in odd-numbered years from 1969 to 2015 and annually starting from 2016. More recently, IJCAI has been held jointly every four years with ECAI since 2018 and with PRICAI since 2020 to promote collaboration among AI researchers and practitioners. IJCAI covers a broad range of research areas in the field of AI. It is a large and highly selective conference, with only about 20% or less of the submitted papers accepted after peer review in the 5 years leading up to 2022.

Awards: Three research awards are given at each IJCAI conference.

* The IJCAI Computers and Thought Award is given to outstanding young scientists under the age of 35 in AI.
* The Donald E. Walker Distinguished Se ...

Log Loss

In information theory, the cross-entropy between two probability distributions p and q, over the same underlying set of events, measures the average number of bits needed to identify an event drawn from the set when the coding scheme used for the set is optimized for an estimated probability distribution q, rather than the true distribution p.

Definition: The cross-entropy of the distribution q relative to a distribution p over a given set is defined as $H(p, q) = -\operatorname{E}_p[\log q]$, where $\operatorname{E}_p[\cdot]$ is the expected value operator with respect to the distribution p. The definition may be formulated using the Kullback–Leibler divergence $D_{\mathrm{KL}}(p \parallel q)$, the divergence of p from q (also known as the relative entropy of p with respect to q): $H(p, q) = H(p) + D_{\mathrm{KL}}(p \parallel q)$, where H(p) is the entropy of p. For discrete probability distributions p and q with the same support $\mathcal{X}$, this means $H(p, q) = -\sum_{x \in \mathcal{X}} p(x) \log q(x)$. The situation for continuous distributions is ...
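
The relationship between cross-entropy, entropy, and the Kullback–Leibler divergence can be checked numerically for discrete distributions. The sketch below uses base-2 logarithms so that results are in bits; the function names and the two example distributions are hypothetical.

```python
import math


def entropy(p):
    """Entropy H(p) in bits of a discrete distribution given as a list."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    """Cross-entropy H(p, q) in bits; assumes q[i] > 0 wherever p[i] > 0."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    """Kullback-Leibler divergence of p from q, in bits."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


true_dist = [0.5, 0.25, 0.25]       # hypothetical true distribution p
estimated_dist = [0.25, 0.25, 0.5]  # hypothetical estimate q

h_pq = cross_entropy(true_dist, estimated_dist)
decomposed = entropy(true_dist) + kl_divergence(true_dist, estimated_dist)
```

Coding with the mismatched estimate costs more bits on average than coding with the true distribution, and the excess is exactly the divergence term.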

Hinge Loss

In machine learning, the hinge loss is a loss function used for training classifiers. The hinge loss is used for "maximum-margin" classification, most notably for support vector machines (SVMs). For an intended output $t = \pm 1$ and a classifier score $y$, the hinge loss of the prediction $y$ is defined as $\ell(y) = \max(0, 1 - t \cdot y)$. Note that y should be the "raw" output of the classifier's decision function, not the predicted class label. For instance, in linear SVMs, $y = \mathbf{w} \cdot \mathbf{x} + b$, where $(\mathbf{w}, b)$ are the parameters of the hyperplane and $\mathbf{x}$ is the input variable(s). When t and y have the same sign (meaning y predicts the right class) and $|y| \ge 1$, the hinge loss $\ell(y) = 0$. When they have opposite signs, $\ell(y)$ increases linearly with y, and similarly if $|y| < 1$, even if it has the same sign (correct prediction, but not by enough margin).

Extensions: While binary SVMs are commonly extended to ...
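
The three cases described above (correct with enough margin, correct with too little margin, and wrong) can be illustrated directly. In this sketch, t denotes the intended output (+1 or -1) and y the raw classifier score; the function names and the weight values are hypothetical.

```python
def hinge_loss(t, y):
    """Hinge loss max(0, 1 - t*y) for intended output t in {-1, +1} and raw score y."""
    return max(0.0, 1.0 - t * y)


def linear_score(w, x, b):
    """Raw decision value of a linear classifier: w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


confident_correct = hinge_loss(+1, 2.0)   # same sign and |y| >= 1
marginal_correct = hinge_loss(+1, 0.5)    # same sign but |y| < 1
wrong_side = hinge_loss(-1, 0.5)          # opposite signs

# Score from a hypothetical hyperplane, then its loss against target +1.
score = linear_score([2.0, -1.0], [1.0, 1.0], 0.5)
loss_for_score = hinge_loss(+1, score)
```

Note that the loss is computed from the raw score, not from the predicted label, so a correctly classified point can still incur a penalty if it lies inside the margin.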

Loss Function

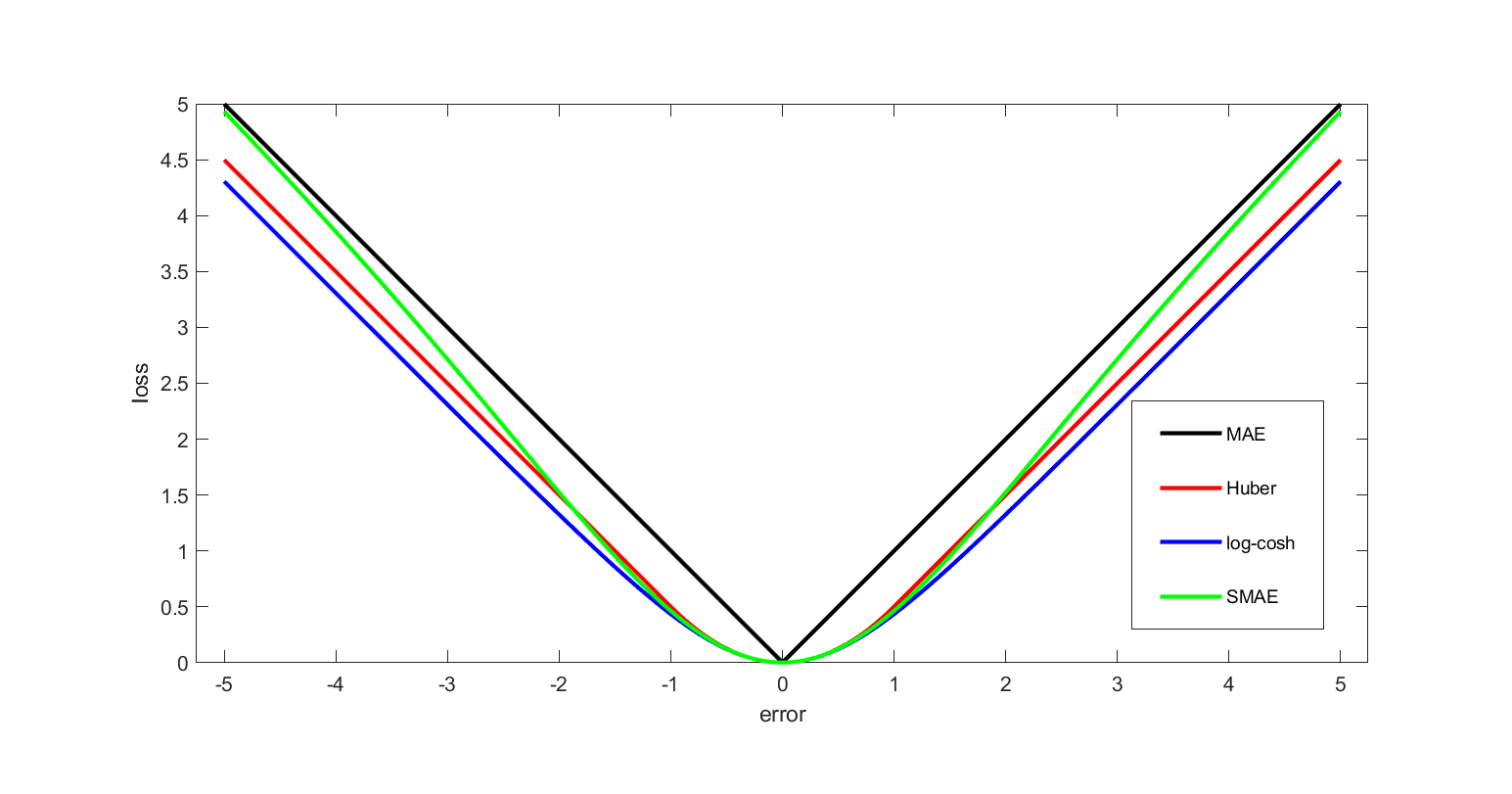

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economi ...

Support Vector Machine

In machine learning, support vector machines (SVMs, also support vector networks) are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories, SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). In addition to performing linear classification, SVMs can efficiently perform non-linear classification using the kernel trick, representing the data only through a set of pairwise similarity comparisons between the original data points using a kernel function, which transforms them into coordinates in a higher-dimensional feature space. Thus, SVMs use the kernel trick to implicitly map their inputs into high-dimensional feature spaces, where linear classification can be performed. Being max-margin models, SVMs are resilient to noisy data (e.g., misclassified examples). ...

Perceptron

In machine learning, the perceptron is an algorithm for supervised learning of binary classifiers. A binary classifier is a function that can decide whether or not an input, represented by a vector of numbers, belongs to some specific class. It is a type of linear classifier, i.e. a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector.

History: The artificial neuron network was invented in 1943 by Warren McCulloch and Walter Pitts in "A Logical Calculus of the Ideas Immanent in Nervous Activity". In 1957, Frank Rosenblatt was at the Cornell Aeronautical Laboratory. He simulated the perceptron on an IBM 704. Later, he obtained funding from the Information Systems Branch of the United States Office of Naval Research and the Rome Air Development Center, to build a custom- ...
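
A linear predictor of the kind described above, combining weights with a feature vector, can be sketched as follows. The decision convention (output 1 when the weighted sum plus bias is positive, 0 otherwise), the function name, and the hand-picked weights are assumptions for illustration; no training step is shown.

```python
def perceptron_predict(weights, bias, features):
    """Binary decision from a linear predictor: 1 if w . x + b > 0, else 0."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


# Hypothetical hand-picked parameters that make the classifier compute logical AND.
and_weights = [1.0, 1.0]
and_bias = -1.5

truth_table = {
    (a, b): perceptron_predict(and_weights, and_bias, [a, b])
    for a in (0, 1)
    for b in (0, 1)
}
```

Only the input (1, 1) pushes the weighted sum above the bias threshold, so the single linear boundary separates that point from the other three.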

Iverson Bracket

In mathematics, the Iverson bracket, named after Kenneth E. Iverson, is a notation that generalises the Kronecker delta, which is the Iverson bracket of the statement $x = y$. It maps any statement to a function of the free variables in that statement. This function is defined to take the value 1 for the values of the variables for which the statement is true, and the value 0 otherwise. It is generally denoted by putting the statement inside square brackets: $[P] = \begin{cases} 1 & \text{if } P \text{ is true;} \\ 0 & \text{otherwise.} \end{cases}$ In other words, the Iverson bracket of a statement is the indicator function of the set of values for which the statement is true. The Iverson bracket allows using capital-sigma notation without restriction on the summation index. That is, for any property P(k) of the integer k, one can rewrite the restricted sum $\sum_{k : P(k)} f(k)$ in the unrestricted form $\sum_k f(k) \cdot [P(k)]$. With this convention, f(k) does not need to be defined for the values of k for which the Iverson bracket eq ...
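
The rewriting of a restricted sum as an unrestricted one can be tried out on a small range of integers. In this sketch the helper name `iverson` and the choice of property ("k is even") and summand (k squared) are hypothetical.

```python
def iverson(statement):
    """Iverson bracket: 1 if the statement is true, 0 otherwise."""
    return 1 if statement else 0


def kronecker_delta(i, j):
    """Kronecker delta written as the Iverson bracket of an equality."""
    return iverson(i == j)


# Restricted sum: only even k between 1 and 10 contribute.
restricted = sum(k * k for k in range(1, 11) if k % 2 == 0)

# Unrestricted form: every k contributes, weighted by the bracket.
unrestricted = sum(k * k * iverson(k % 2 == 0) for k in range(1, 11))
```

Both sums add the squares of 2, 4, 6, 8, and 10; the bracket simply multiplies the remaining terms by zero instead of excluding them from the index set.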

Log-likelihood

A likelihood function (often simply called the likelihood) measures how well a statistical model explains observed data by calculating the probability of seeing that data under different parameter values of the model. It is constructed from the joint probability distribution of the random variable that (presumably) generated the observations. When evaluated on the actual data points, it becomes a function solely of the model parameters. In maximum likelihood estimation, the argument that maximizes the likelihood function serves as a point estimate for the unknown parameter, while the Fisher information (often approximated by the likelihood's Hessian matrix at the maximum) gives an indication of the estimate's precision. In contrast, in Bayesian statistics, the estimate of interest is the converse of the likelihood, the so-called posterior probability of the parameter given the observed data, which is calculated via Bayes' rule.

Definition: The likelihood function, paramet ...
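
Maximum likelihood estimation can be illustrated with a deliberately simple model. The sketch below assumes independent 0/1 outcomes with a single unknown success probability and searches a coarse grid for the value that maximizes the log-likelihood; the data, the grid, and the function name are hypothetical.

```python
import math


def bernoulli_log_likelihood(p, outcomes):
    """Log-likelihood of independent 0/1 outcomes for success probability 0 < p < 1."""
    return sum(math.log(p) if x == 1 else math.log(1.0 - p) for x in outcomes)


# Hypothetical observations: 7 successes in 10 trials.
outcomes = [1, 1, 0, 1, 1, 1, 0, 1, 0, 1]

# Evaluate the log-likelihood on a grid of candidate parameter values.
candidates = [i / 100 for i in range(1, 100)]
best_p = max(candidates, key=lambda p: bernoulli_log_likelihood(p, outcomes))
```

With the data held fixed, the log-likelihood is a function of the parameter alone, and for this model its maximizer coincides with the observed proportion of successes.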

Cumulative Distribution Function

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable X, or just distribution function of X, evaluated at x, is the probability that X will take a value less than or equal to x. Every probability distribution supported on the real numbers, discrete or "mixed" as well as continuous, is uniquely identified by a right-continuous monotone increasing function (a càdlàg function) $F \colon \mathbb{R} \rightarrow [0,1]$ satisfying $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to \infty} F(x) = 1$. In the case of a scalar continuous distribution, it gives the area under the probability density function from negative infinity to x. Cumulative distribution functions are also used to specify the distribution of multivariate random variables.

Definition: The cumulative distribution function of a real-valued random variable X is the function given by $F_X(x) = \operatorname{P}(X \leq x)$, where the right-hand side represents the probability ...
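
For a discrete distribution, the CDF is a step function, which makes its defining properties easy to inspect. The sketch below assumes a fair six-sided die as the random variable; the function name `die_cdf` is hypothetical, and exact fractions are used to avoid rounding.

```python
import math
from fractions import Fraction


def die_cdf(x):
    """CDF of a fair six-sided die: probability that the roll is <= x."""
    if x < 1:
        return Fraction(0)
    # Count the faces 1..6 that are less than or equal to x.
    return Fraction(min(math.floor(x), 6), 6)


below_support = die_cdf(0.5)
at_three = die_cdf(3)
between_faces = die_cdf(3.7)
above_support = die_cdf(100)
```

The function is 0 below the smallest face, 1 above the largest, never decreases, and stays flat between faces, jumping only at the values the die can actually take.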