
Neuro-symbolic AI

Neuro-symbolic AI integrates neural and symbolic AI architectures to address the complementary strengths and weaknesses of each, with the aim of building robust AI capable of reasoning, learning, and cognitive modeling. As argued by Valiant and many others, the effective construction of rich computational cognitive models demands the combination of sound symbolic reasoning and efficient machine learning models. Gary Marcus argues: "We cannot construct rich cognitive models in an adequate, automated way without the triumvirate of hybrid architecture, rich prior knowledge, and sophisticated techniques for reasoning." Further: "To build a robust, knowledge-driven approach to AI we must have the machinery of symbol-manipulation in our toolkit. Too much of useful knowledge is abstract to make do without tools that represent and manipulate abstraction, and to date, the only machinery that we know of that can manipulate such abstract knowledge reliably is the apparatus of symbol-manipulation." ...
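As a toy illustration of combining a learned component with symbolic reasoning, the sketch below pairs stand-in outputs of a neural digit classifier with a symbolic arithmetic constraint, loosely in the spirit of the "digit addition" tasks used to demonstrate neuro-symbolic systems. The digit distributions are hard-coded placeholders (an assumption: a real system would obtain them from a trained network), and `prob_sum` is a hypothetical helper, not part of any library.

```python
from itertools import product

# Hypothetical outputs of a neural digit classifier: P(digit = d) for two images.
# In a real neuro-symbolic system these would come from a trained network.
image_a = {0: 0.1, 1: 0.8, 2: 0.1}
image_b = {1: 0.3, 2: 0.7}

def prob_sum(dist_a, dist_b, target):
    """Probability that the two recognised digits add up to `target`.

    The symbolic part is the rule a + b == target; the neural part is the
    pair of distributions. The two digits are assumed independent.
    """
    total = 0.0
    for (a, pa), (b, pb) in product(dist_a.items(), dist_b.items()):
        if a + b == target:  # symbolic constraint filters the joint outcomes
            total += pa * pb
    return total

print(round(prob_sum(image_a, image_b, 3), 4))  # 0.59
```

The rule itself never changes; only the uncertain perceptual inputs do, which is one way the "hybrid architecture" idea can be read.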

Connectionism

Connectionism refers both to an approach in cognitive science that seeks to explain mental phenomena using artificial neural networks (ANNs) and to a wide range of techniques and algorithms that use ANNs in artificial intelligence to build more intelligent machines. Connectionism presents a cognitive theory based on simultaneously occurring, distributed signal activity via connections that can be represented numerically, where learning occurs by modifying connection strengths based on experience. Advantages of the connectionist approach include its applicability to a broad array of functions, its structural approximation to biological neurons, its low requirements for innate structure, and its capacity for graceful degradation. Disadvantages include the difficulty of deciphering how ANNs process information and of accounting for the compositionality of mental representations, and a resulting difficulty in explaining phenomena at a higher level. The success of deep ...
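The idea that "learning occurs by modifying connection strengths based on experience" can be seen in its simplest form in a single perceptron. The sketch below uses the classic perceptron update rule on the logical AND function; the learning rate of 1 and the epoch count are illustrative choices, not taken from the text.

```python
# Minimal sketch: a single artificial neuron (perceptron) whose "knowledge"
# lives entirely in its connection weights, adjusted from experience.

def predict(weights, bias, inputs):
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0

def train(samples, epochs=10, lr=1):
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

# Logical AND serves as the training "experience".
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

Integer weights keep the arithmetic exact here; practical networks use real-valued weights, many layers, and gradient-based updates.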

GPT-3

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text. Given an initial text as a prompt, it produces text that continues the prompt. The architecture is a standard transformer network (with a few engineering tweaks) of unprecedented size, with a 2048-token context window and 175 billion parameters (requiring 800 GB of storage). The training method is "generative pretraining", meaning that the model is trained to predict the next token. The model demonstrated strong few-shot learning on many text-based tasks. It is the third-generation language prediction model in the GPT-n series (and the successor to GPT-2) created by OpenAI, a San Francisco-based artificial intelligence research laboratory. GPT-3, which was introduced in May 2020 and was in beta testing as of July 2020, is part of a trend in natural language processing (NLP) systems toward pre-trained language representations. The quality of the t ...
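The autoregressive loop described above (predict the next token, append it, repeat) can be sketched without a transformer at all. In the example below a bigram count table is swapped in as a stand-in for the network, purely to show the next-token objective and greedy decoding; `train_bigram`, `generate`, and the tiny corpus are hypothetical, and the `context_size` window only mimics GPT-3's 2048-token limit.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """'Pretraining' stand-in: count which token follows which."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_tokens, context_size=2048):
    """Autoregressive greedy decoding: each new token is fed back as input."""
    out = list(prompt)
    for _ in range(max_new_tokens):
        # A transformer would attend over this whole window;
        # the bigram stand-in only looks at its last token.
        context = out[-context_size:]
        candidates = counts.get(context[-1])
        if not candidates:
            break  # no known continuation for this token
        out.append(candidates.most_common(1)[0][0])
    return out

corpus = "the cat sat on the mat . the cat ran .".split()
model = train_bigram(corpus)
print(" ".join(generate(model, ["the"], 4)))  # the cat sat on the
```

Ties in `Counter.most_common` are broken by first occurrence, so "sat" wins over "ran" after "cat".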

Symbolic AI

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems (in particular, expert systems), symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems. Symbolic AI was the dominant paradigm of AI research from the mid-1950s until the mid-1990s. Researchers in the 1960s and the 1970s were convinced that symbolic approaches would eventually succeed in creating ...
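Production rules, one of the tools listed above, can be illustrated with a minimal forward-chaining interpreter: rules fire whenever all their conditions are known facts, until nothing new can be derived. The rule base and fact names below are hypothetical examples, and this is a sketch rather than the design of any particular expert-system shell.

```python
# Hypothetical production rules: (set of conditions, conclusion).
RULES = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
    ({"is_bird", "can_fly"}, "can_migrate"),
]

def forward_chain(facts, rules):
    """Fire rules whose conditions hold until no new fact is derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts

initial = {"has_feathers", "lays_eggs", "cannot_fly", "swims"}
derived = forward_chain(initial, RULES)
print(sorted(derived - initial))  # ['is_bird', 'is_penguin']
```

Every derived fact can be traced back to the human-readable rule that produced it, which is the kind of transparency the symbolic paradigm emphasizes.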

ProbLog

ProbLog is a probabilistic logic programming language that extends Prolog with probabilities. It minimally extends Prolog by adding the notion of a probabilistic fact, which combines the idea of logical atoms and random variables. Similarly to Prolog, ProbLog can query an atom. While Prolog returns the truth value of the queried atom, ProbLog returns the probability of it being true.

Semantics: A probabilistic fact is a pair $(p, a)$ with $a$ an atom and $p \in [0, 1]$ the probability of $a$ being true. A rule is defined by an atom $h$, called the head, and a finite set of $n$ literals $\{b_1, b_2, \ldots, b_n\}$, called the body. ProbLog programs consist of a set of probabilistic facts $\mathcal{F}$ and a set of rules $\mathcal{R}$. Using the distribution semantics, a probability distribution is defined over the two-valued well-founded models of the atoms in the program. The probability of a model is defined as $P(M) = \prod_{l \in M} P(l)$, where the product runs over all the literals in the model $M$. For a query atom $q$ the distr ...
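The distribution semantics can be made concrete by brute force: enumerate every truth assignment to the probabilistic facts, weight each possible world by the product of $p$ for true facts and $1 - p$ for false ones, and sum the weights of the worlds whose model contains the query. The sketch below assumes definite rules only (no negation), so the well-founded model reduces to the least model; the burglary/earthquake program is a hypothetical example. Enumeration is exponential in the number of probabilistic facts, and real ProbLog implementations avoid it (for example via knowledge compilation).

```python
from itertools import product

# Hypothetical program, in ProbLog syntax:
#   0.6::burglary.  0.2::earthquake.
#   alarm :- burglary.
#   alarm :- earthquake.
PROB_FACTS = {"burglary": 0.6, "earthquake": 0.2}
RULES = [("alarm", ["burglary"]), ("alarm", ["earthquake"])]  # (head, body)

def least_model(true_facts, rules):
    """Least model of definite rules, computed by forward chaining."""
    model = set(true_facts)
    changed = True
    while changed:
        changed = False
        for head, body in rules:
            if head not in model and all(b in model for b in body):
                model.add(head)
                changed = True
    return model

def query(atom, prob_facts, rules):
    """Sum the probabilities of all possible worlds whose model contains `atom`."""
    names = list(prob_facts)
    total = 0.0
    for choice in product([True, False], repeat=len(names)):
        # P(world) = product of p for true facts and (1 - p) for false ones.
        weight = 1.0
        for name, is_true in zip(names, choice):
            p = prob_facts[name]
            weight *= p if is_true else 1 - p
        chosen = {n for n, t in zip(names, choice) if t}
        if atom in least_model(chosen, rules):
            total += weight
    return total

print(round(query("alarm", PROB_FACTS, RULES), 4))  # 0.68
```

The result matches the closed form $1 - (1 - 0.6)(1 - 0.2) = 0.68$ for two independent causes.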

Knowledge Bases

A knowledge base (KB) is a technology used to store complex structured and unstructured information used by a computer system. The initial use of the term was in connection with expert systems, which were the first knowledge-based systems.

Original usage of the term: The original use of the term knowledge base was to describe one of the two sub-systems of an expert system. A knowledge-based system consists of a knowledge base representing facts about the world and ways of reasoning about those facts to deduce new facts or highlight inconsistencies.

Properties: The term "knowledge base" was coined to distinguish this form of knowledge store from the more common and widely used term "database". During the 1970s, virtually all large management information systems stored their data in some type of hierarchical or relational database. At this point in the history of information technology, the distinction between a database and a knowledge base was clear and unambiguous. A databas ...
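The two roles of reasoning named above, deducing new facts and highlighting inconsistencies, can be sketched over a tiny triple store. The facts, the `subclass_of`/`instance_of` relation names, and the disjointness constraint are hypothetical; the deduction step simply follows subclass links to a fixpoint.

```python
# Hypothetical facts stored as (subject, relation, object) triples.
FACTS = {
    ("penguin", "subclass_of", "bird"),
    ("bird", "subclass_of", "animal"),
    ("tux", "instance_of", "penguin"),
    ("tux", "instance_of", "plant"),  # deliberately conflicting entry
}
# Hypothetical integrity constraint: nothing is both an animal and a plant.
DISJOINT = [("animal", "plant")]

def infer(facts):
    """Deduce new instance_of facts by following subclass_of links to a fixpoint."""
    kb = set(facts)
    while True:
        new = {
            (x, "instance_of", sup)
            for (x, r1, c) in kb if r1 == "instance_of"
            for (sub, r2, sup) in kb if r2 == "subclass_of" and sub == c
        }
        if new <= kb:
            return kb
        kb |= new

def inconsistencies(kb, disjoint):
    """Report entities that belong to two classes declared disjoint."""
    members = {}
    for x, rel, c in kb:
        if rel == "instance_of":
            members.setdefault(c, set()).add(x)
    return sorted(
        (x, a, b)
        for a, b in disjoint
        for x in members.get(a, set()) & members.get(b, set())
    )

kb = infer(FACTS)
print(("tux", "instance_of", "animal") in kb)  # True
print(inconsistencies(kb, DISJOINT))  # [('tux', 'animal', 'plant')]
```

Note that the conflict only becomes visible after deduction: "tux is an animal" is never stored directly.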

Cognitive Models

A cognitive model is an approximation of one or more cognitive processes in humans or other animals for the purposes of comprehension and prediction. There are many types of cognitive models, ranging from box-and-arrow diagrams to sets of equations to software programs that interact with the same tools humans use to complete tasks (e.g., a computer mouse and keyboard).

Relationship to cognitive architectures: Cognitive models can be developed within or without a cognitive architecture, though the two are not always easily distinguishable. In contrast to cognitive architectures, cognitive models tend to focus on a single cognitive phenomenon or process (e.g., list learning), on how two or more processes interact (e.g., visual search and decision making), or on making behavioral predictions for a specific task or tool (e.g., how instituting a new software package will affect productivity). Cognitive architectures tend to be focused on the structural properties of the m ...

Moshe Vardi

Moshe Ya'akov Vardi (Hebrew: משה יעקב ורדי) is an Israeli mathematician and computer scientist. He is a Professor of Computer Science at Rice University, United States, where he is University Professor, the Karen Ostrum George Professor in Computational Engineering, Distinguished Service Professor, and director of the Ken Kennedy Institute for Information Technology. His interests focus on applications of logic to computer science, including database theory, finite mode ...

Born in Israel. Fields: logic, computation. Workplaces: Rice University, IBM Research, Stanford University. Doctoral thesis: The Implication Problem for Data Dependencies in the Relational Model (1981), advised by Catriel Beeri. Doctoral students: Kristin Yvonne Rozier.

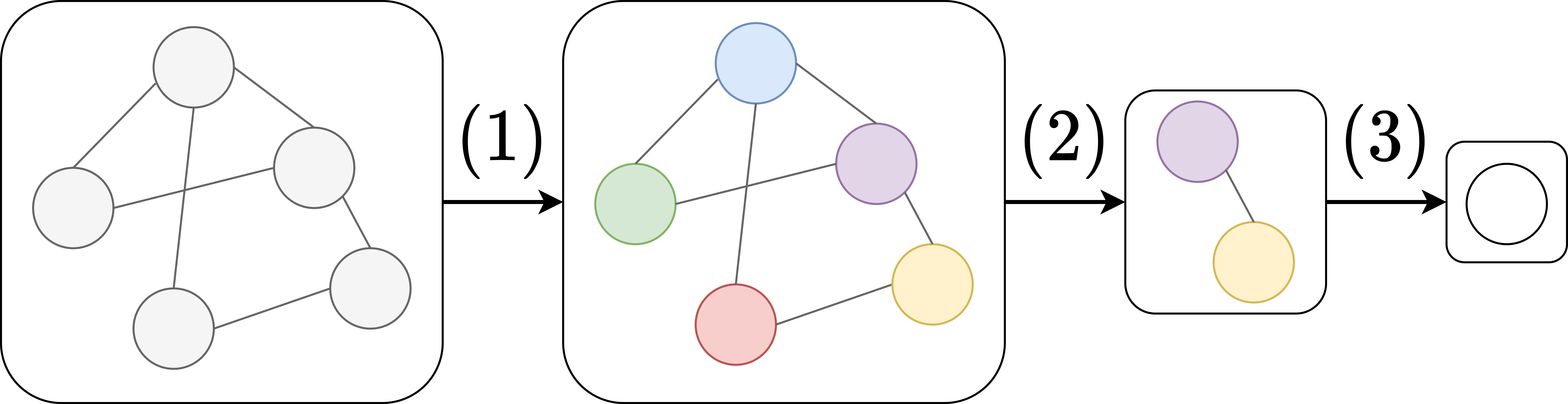

Graph Neural Network

A graph neural network (GNN) belongs to a class of artificial neural networks for processing data that can be represented as graphs. In the more general subject of "geometric deep learning", certain existing neural network architectures can be interpreted as GNNs operating on suitably defined graphs. A convolutional neural network layer, in the context of computer vision, can be seen as a GNN applied to graphs whose nodes are pixels and in which only adjacent pixels are connected by edges. A transformer layer, in natural language processing, can be seen as a GNN applied to complete graphs whose nodes are words or tokens in a passage of natural language text. The key design element of GNNs is the use of "pairwise message passing", such that graph nodes iteratively update their representations by exchanging information with their neighbors. Since their inception, several different GNN architectures have been ...
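One round of the pairwise message passing described above can be sketched in plain Python: each node averages its neighbours' feature vectors, mixes the result with its own features, and applies a ReLU. The scalar weights `w_self` and `w_neigh` are fixed, hypothetical values standing in for the weight matrices a real GNN would learn, and mean aggregation is only one common choice (sum, max, and attention-based aggregators are also used).

```python
def message_passing_layer(features, edges, w_self=1.0, w_neigh=0.5):
    """One round of message passing on an undirected graph.

    Each node's new representation mixes its own features with the mean of
    its neighbours' features, followed by a ReLU nonlinearity.
    """
    neighbours = {v: [] for v in features}
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)
    updated = {}
    for v, h in features.items():
        msgs = [features[u] for u in neighbours[v]]
        if msgs:
            agg = [sum(col) / len(msgs) for col in zip(*msgs)]
        else:
            agg = [0.0] * len(h)  # isolated node receives no messages
        updated[v] = [max(0.0, w_self * a + w_neigh * b) for a, b in zip(h, agg)]
    return updated

# A path graph a - b - c with 2-dimensional node features.
feats = {"a": [1.0, 0.0], "b": [0.0, 2.0], "c": [4.0, -2.0]}
print(message_passing_layer(feats, [("a", "b"), ("b", "c")]))
# {'a': [1.0, 1.0], 'b': [1.25, 1.5], 'c': [4.0, 0.0]}
```

Stacking k such layers lets information from nodes up to k hops away influence each representation.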

Sepp Hochreiter

Josef "Sepp" Hochreiter (born 14 February 1967) is a German computer scientist. Since 2018 he has led the Institute for Machine Learning at the Johannes Kepler University of Linz after having led the Institute of Bioinformatics from 2006 to 2018. In 2017 he became the head of the Linz Institute of Technology (LIT) AI Lab. Hochreiter is also a founding director of the Institute of Advanced Research in Artificial Intelligence (IARAI). Previously, he was at the Technical University of Berlin, at the University of Colorado at Boulder, and at the Technical University of Munich. He is a chair of the Critical Assessment of Massive Data Analysis (CAMDA) conference. Hochreiter has made contributions in the fields of machine learning, deep learning and bioinformatics, most notably the development of the long short-term memory (LSTM) neural network architecture, but also in meta-learning, reinforcement learning and biclustering with application to bioinformatics data. Scientific career Lo ...

And–or Tree

An and–or tree is a graphical representation of the reduction of problems (or goals) to conjunctions and disjunctions of subproblems (or subgoals).

Example: The and–or tree for the problem P represents the search space for solving P using the goal-reduction methods:
  P if Q and R
  P if S
  Q if T
  Q if U

Definitions: Given an initial problem P0 and a set of problem-solving methods of the form:
  P if P1 and … and Pn
the associated and–or tree is a set of labelled nodes such that:
1. The root of the tree is a node labelled by P0.
2. For every node N labelled by a problem or sub-problem P and for every method of the form P if P1 and … and Pn, there exists a set of children nodes N1, …, Nn of the node N, such that each node Ni is labelled by Pi. The nodes are conjoined by an arc, to distinguish them from children of N that might be associated with other methods.

A node N, labelled by a problem P, is a success node ...
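The goal-reduction methods in the example can be encoded directly: each problem maps to a list of alternative methods (an OR choice), and each method lists the subproblems that must all be solved (an AND). Since the definition of a success node is cut off above, the sketch assumes the usual reading: a node succeeds if its problem is directly solved, or if all children of at least one of its methods succeed. The set of directly solved problems passed in is hypothetical.

```python
# Goal-reduction methods from the example: problem -> alternative methods (OR),
# each method being a list of subproblems that must all succeed (AND).
METHODS = {
    "P": [["Q", "R"], ["S"]],
    "Q": [["T"], ["U"]],
}

def solvable(problem, solved, methods, visiting=frozenset()):
    """True if `problem` labels a success node of the and-or tree."""
    if problem in solved:
        return True
    if problem in visiting:  # guard against cyclic goal reduction
        return False
    visiting = visiting | {problem}
    return any(
        all(solvable(sub, solved, methods, visiting) for sub in method)
        for method in methods.get(problem, [])
    )

print(solvable("P", {"U", "R"}, METHODS))  # True: P if Q and R, Q if U
print(solvable("P", {"T"}, METHODS))       # False: R and S are both unsolved
```

This explores the tree depth-first; a problem with no methods and not directly solved is simply a failure leaf.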

Macsyma

Macsyma ("Project MAC's SYmbolic MAnipulator") is one of the oldest general-purpose computer algebra systems still in wide use. It was originally developed from 1968 to 1982 at MIT's Project MAC. In 1982, Macsyma was licensed to Symbolics and became a commercial product. In 1992, Symbolics Macsyma was spun off to Macsyma, Inc., which continued to develop Macsyma until 1999. That version is still available for Microsoft's Windows XP operating system. The 1982 version of MIT Macsyma remained available to academics and US government agencies, and it is distributed by the US Department of Energy (DOE). That version, DOE Macsyma, was maintained by Bill Schelter. Under the name Maxima, it was released under the GPL in 1999 and remains under active maintenance.

Development: The project was initiated in July 1968 by Carl Engelman, William A. Martin (front end, expression display, polynomial arithmetic) and Joel Moses (simplifier, indefinite integration: heuristic/Risch). Martin ...