
Ngram

In the fields of computational linguistics and probability, an ''n''-gram (sometimes also called a Q-gram) is a contiguous sequence of ''n'' items from a given sample of text or speech. The items can be phonemes, syllables, letters, words or base pairs, depending on the application. ''n''-grams are typically collected from a text or speech corpus. When the items are words, ''n''-grams may also be called ''shingles''. Using Latin numerical prefixes, an ''n''-gram of size 1 is referred to as a "unigram"; size 2 is a "bigram" (or, less commonly, a "digram"); size 3 is a "trigram". English cardinal numbers are sometimes used, e.g., "four-gram", "five-gram", and so on. In computational biology, a polymer or oligomer of a known size is called a ''k''-mer instead of an ''n''-gram, with specific names using Greek numerical prefixes such as "monomer", "dimer", "trimer", "tetramer", "pentamer", etc., or English cardinal numbers, "one-mer", "two-mer", "three-mer", etc. Applications ...
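The definition above (a contiguous sequence of ''n'' items) can be illustrated with a sliding window. This is a minimal sketch, not a reference implementation; the helper name `ngrams` and the sample inputs are assumptions chosen for illustration.

```python
from collections import Counter


def ngrams(items, n):
    """Return every contiguous run of n items as a tuple (hypothetical helper)."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A sequence of length L has L - n + 1 windows of size n (none if n > L).
    return [tuple(items[i:i + n]) for i in range(len(items) - n + 1)]


# Word-level bigrams ("shingles" when the items are words).
words = "to be or not to be".split()
bigrams = ngrams(words, 2)
bigram_counts = Counter(bigrams)

# Letter-level trigrams; on a DNA string these are 3-mers.
letter_trigrams = ngrams("GATTACA", 3)

print(bigram_counts.most_common(1))
print(letter_trigrams)
```

The same function covers words, letters, or base pairs because it only assumes the input is an indexable sequence.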

Greek Numerical Prefixes

Numeral or number prefixes are prefixes derived from numerals or occasionally other numbers. In English and many other languages, they are used to coin numerous series of words. For example:

* unicycle, bicycle, tricycle (1-cycle, 2-cycle, 3-cycle)
* dyad, triad (2 parts, 3 parts)
* biped, quadruped (2 legs, 4 legs)
* September, October, November, December (month 7, month 8, month 9, month 10)
* decimal, hexadecimal (base-10, base-16)
* septuagenarian, octogenarian (70-79 years old, 80-89 years old)
* centipede, millipede (around 100 legs, around 1000 legs)

In many European languages there are two principal systems, taken from Latin and Greek, each with several subsystems; in addition, Sanskrit occupies a marginal position. There is also an international set of metric prefixes, which are used in the metric system and which for the most part are either distorted from the forms below or not based on actual number words. Table of number prefixes in English In the following pr ...

Base Pair

A base pair (bp) is a fundamental unit of double-stranded nucleic acids consisting of two nucleobases bound to each other by hydrogen bonds. Base pairs form the building blocks of the DNA double helix and contribute to the folded structure of both DNA and RNA. Dictated by specific hydrogen bonding patterns, "Watson–Crick" (or "Watson–Crick–Franklin") base pairs (guanine–cytosine and adenine–thymine) allow the DNA helix to maintain a regular helical structure that is subtly dependent on its nucleotide sequence. The complementary nature of this base-paired structure provides a redundant copy of the genetic information encoded within each strand of DNA. The regular structure and data redundancy provided by the DNA double helix make DNA well suited to the storage of genetic information, while base-pairing between DNA and incoming nucleotides provides the mechanism through which DNA polymerase replicates DNA and RNA polymerase transcribes DNA in ...
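Because guanine pairs with cytosine and adenine with thymine, one strand determines the other, which is the redundancy described above. The following is a minimal sketch of that pairing rule on plain strings; the names `COMPLEMENT`, `complement`, and `reverse_complement` are illustrative assumptions, and the code ignores ambiguity codes and RNA.

```python
# Watson-Crick pairing for DNA: A<->T and G<->C.
COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}


def complement(strand):
    """Return the base-by-base complement of a DNA string."""
    return "".join(COMPLEMENT[base] for base in strand)


def reverse_complement(strand):
    """Return the complement read in the opposite direction,
    which is how the partner strand is conventionally written."""
    return complement(strand)[::-1]


strand = "GATTACA"
print(complement(strand))          # CTAATGT
print(reverse_complement(strand))  # TGTAATC
```

Applying `complement` twice returns the original string, which is a quick way to see that each strand carries a full copy of the information.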

DNA Sequencing

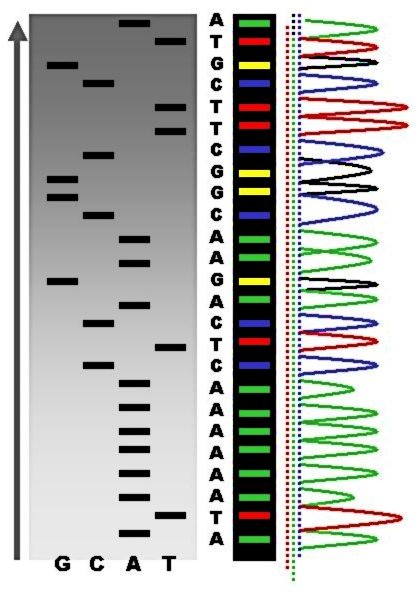

DNA sequencing is the process of determining the nucleic acid sequence – the order of nucleotides in DNA. It includes any method or technology that is used to determine the order of the four bases: adenine, guanine, cytosine, and thymine. The advent of rapid DNA sequencing methods has greatly accelerated biological and medical research and discovery. Knowledge of DNA sequences has become indispensable for basic biological research, DNA Genographic Projects and in numerous applied fields such as medical diagnosis, biotechnology, forensic biology, virology and biological systematics. Comparing healthy and mutated DNA sequences can diagnose different diseases including various cancers, characterize antibody repertoire, and can be used to guide patient treatment. Having a quick way to sequence DNA allows for faster and more individualized medical care to be administered, and for more organisms to be identified and cataloged. The rapid speed of sequencing attained with modern D ...

Amino Acid

Amino acids are organic compounds that contain both amino and carboxylic acid functional groups. Although hundreds of amino acids exist in nature, by far the most important are the alpha-amino acids, which comprise proteins. Only 22 alpha-amino acids appear in the genetic code. Amino acids can be classified according to the locations of the core structural functional groups, as alpha-, beta-, gamma- or delta-amino acids; other categories relate to polarity, ionization, and side chain group type (aliphatic, acyclic, aromatic, containing hydroxyl or sulfur, etc.). In the form of proteins, amino acid ''residues'' form the second-largest component (water being the largest) of human muscles and other tissues. Beyond their role as residues in proteins, amino acids participate in a number of processes such as neurotransmitter transport and biosynthesis. It is thought that they played a key role in enabling life ...

Protein Sequencing

Protein sequencing is the practical process of determining the amino acid sequence of all or part of a protein or peptide. This may serve to identify the protein or characterize its post-translational modifications. Typically, partial sequencing of a protein provides sufficient information (one or more sequence tags) to identify it with reference to databases of protein sequences derived from the conceptual translation of genes. The two major direct methods of protein sequencing are mass spectrometry and Edman degradation using a protein sequenator (sequencer). Mass spectrometry methods are now the most widely used for protein sequencing and identification but Edman degradation remains a valuable tool for characterizing a protein's ''N''-terminus. Determining amino acid composition It is often desirable to know the unordered amino acid composition of a protein prior to attempting to find the ordered sequence, as this knowledge can be used to facilitate the discovery of errors i ...
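The difference between an unordered composition and an ordered sequence can be shown with a simple count. This sketch assumes one-letter amino acid codes and uses two made-up peptide strings; `composition` is a hypothetical helper, not part of any sequencing toolkit.

```python
from collections import Counter


def composition(peptide):
    """Count how often each residue (one-letter code) occurs, ignoring order."""
    return Counter(peptide)


# Two invented peptides with the same residues in a different order.
peptide_a = "MKTAYIAKQR"
peptide_b = "RQKAIYATKM"

same_composition = composition(peptide_a) == composition(peptide_b)
same_sequence = peptide_a == peptide_b

print(composition(peptide_a))
print(same_composition, same_sequence)
```

A candidate sequence whose composition disagrees with the measured one must contain an error, which is why the unordered count is a useful check.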

Markov Model

In probability theory, a Markov model is a stochastic model used to model pseudo-randomly changing systems. It is assumed that future states depend only on the current state, not on the events that occurred before it (that is, it assumes the Markov property). Generally, this assumption enables reasoning and computation with the model that would otherwise be intractable. For this reason, in the fields of predictive modelling and probabilistic forecasting, it is desirable for a given model to exhibit the Markov property. Introduction There are four common Markov models used in different situations, depending on whether every sequential state is observable or not, and whether the system is to be adjusted on the basis of observations made: Markov chain The simplest Markov model is the Markov chain. It models the state of a system with a random variable that changes through time. In this context, the Markov property suggests that the ...
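The Markov property described above means the next state can be sampled from the current state alone. The sketch below simulates a two-state Markov chain; the state names and transition probabilities are invented for illustration, and `step` and `simulate` are hypothetical helper names.

```python
import random

# Assumed transition probabilities: each row lists P(next | current) and sums to 1.
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}


def step(state, rng):
    """Sample the next state using only the current state (Markov property)."""
    options = TRANSITIONS[state]
    return rng.choices(list(options), weights=list(options.values()))[0]


def simulate(start, n_steps, seed=0):
    """Return the start state followed by n_steps sampled states."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1], rng))
    return path


print(simulate("sunny", 10))
```

Note that `step` never looks at earlier entries of `path`; that restriction is what keeps the model cheap to reason about.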

Space–time Tradeoff

A space–time trade-off or time–memory trade-off in computer science is a case where an algorithm or program trades increased space usage for decreased time. Here, ''space'' refers to the data storage consumed in performing a given task (RAM, HDD, etc.), and ''time'' refers to the time consumed in performing a given task (computation time or response time). The utility of a given space–time tradeoff is affected by related fixed and variable costs (of, e.g., CPU speed, storage space), and is subject to diminishing returns. History Biological usage of time–memory tradeoffs can be seen in the earlier stages of animal behavior. Using stored knowledge or encoding stimuli reactions as "instincts" in the DNA avoids the need for "calculation" in time-critical situations. More specific to computers, look-up tables have been implemented since the very earliest operating systems. In 1980 Martin Hellman first proposed using a time–memory tradeoff for cryptanalysis. Types of ...
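A look-up table is one common way to spend memory in order to save computation. As a hedged illustration, the sketch below counts set bits in a non-negative integer two ways: bit by bit, and byte by byte using a precomputed 256-entry table. The function and table names are assumptions made for this example.

```python
def popcount_slow(x):
    """Count set bits one bit at a time: no extra memory, one loop pass per bit."""
    count = 0
    while x:
        count += x & 1
        x >>= 1
    return count


# Space spent up front: the bit count of every possible byte value.
POPCOUNT_TABLE = [popcount_slow(b) for b in range(256)]


def popcount_fast(x):
    """Count set bits one byte at a time using the precomputed table."""
    count = 0
    while x:
        count += POPCOUNT_TABLE[x & 0xFF]
        x >>= 8
    return count


print(popcount_slow(0xFFFF), popcount_fast(0xFFFF))
```

A larger table (for example 65,536 entries for 16-bit chunks) would cut the number of loop passes further, at the cost of more memory, which is where diminishing returns come in.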

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, which provides error detection and correction, or line coding, the means for mapping data onto a signal. ...
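The lossless case described above can be demonstrated as an encode/decode round trip. This sketch uses Python's standard `zlib` module on deliberately repetitive input; the sample data is an assumption chosen so that the statistical redundancy is obvious.

```python
import zlib

# Highly redundant input: the same three bytes repeated 1000 times.
original = b"abc" * 1000

compressed = zlib.compress(original)    # encoder
restored = zlib.decompress(compressed)  # decoder

print(len(original), len(compressed))
print(restored == original)  # lossless: the round trip is exact
```

Input with little redundancy, such as random bytes, would compress far less or not at all, since there is nothing for the encoder to eliminate.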

Sequence Analysis

In bioinformatics, sequence analysis is the process of subjecting a DNA, RNA or peptide sequence to any of a wide range of analytical methods to understand its features, function, structure, or evolution. Methodologies used include sequence alignment, searches against biological databases, and others. Since the development of methods of high-throughput production of gene and protein sequences, the rate of addition of new sequences to the databases increased very rapidly. Such a collection of sequences does not, by itself, increase the scientist's understanding of the biology of organisms. However, comparing these new sequences to those with known functions is a key way of understanding the biology of an organism from which the new sequence comes. Thus, sequence analysis can be used to assign function to genes and proteins by the study of the similarities between the compared sequences. Nowadays, there are many tools and techniques that provide the sequence comparisons (sequence al ...

Computational Biology

Computational biology refers to the use of data analysis, mathematical modeling and computational simulations to understand biological systems and relationships. An intersection of computer science, biology, and big data, the field also has foundations in applied mathematics, chemistry, and genetics. It differs from biological computing, a subfield of computer engineering which uses bioengineering to build computers. History Bioinformatics, the analysis of informatics processes in biological systems, began in the early 1970s. At this time, research in artificial intelligence was using network models of the human brain in order to generate new algorithms. This use of biological data pushed biological researchers to use computers to evaluate and compare large data sets in their own field. By 1982, researchers shared information via punch cards. The amount of data grew exponentially by the end of the 1980s, requiring new computational methods for quickly interpreting ...

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation. History Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, t ...
