
Natural Language Understanding

Natural language understanding (NLU) or natural language interpretation (NLI) is a subset of natural language processing in artificial intelligence that deals with machine reading comprehension. NLU has been considered an AI-hard problem. There is considerable commercial interest in the field because of its application to automated reasoning, machine translation, question answering, news-gathering, text categorization, voice activation, archiving, and large-scale content analysis.

History

The program STUDENT, written in 1964 by Daniel Bobrow for his PhD dissertation at MIT, is one of the earliest known attempts at NLU by a computer. Eight years after John McCarthy coined the term artificial intelligence, Bobrow's dissertation (titled *Natural Language Input for a Computer Problem Solving System*) showed how a computer could understand simple natural language input to solve algebra word problems. A year later, in 1965, Joseph Weizenbaum at MIT wrote ELIZA, an interactiv ...

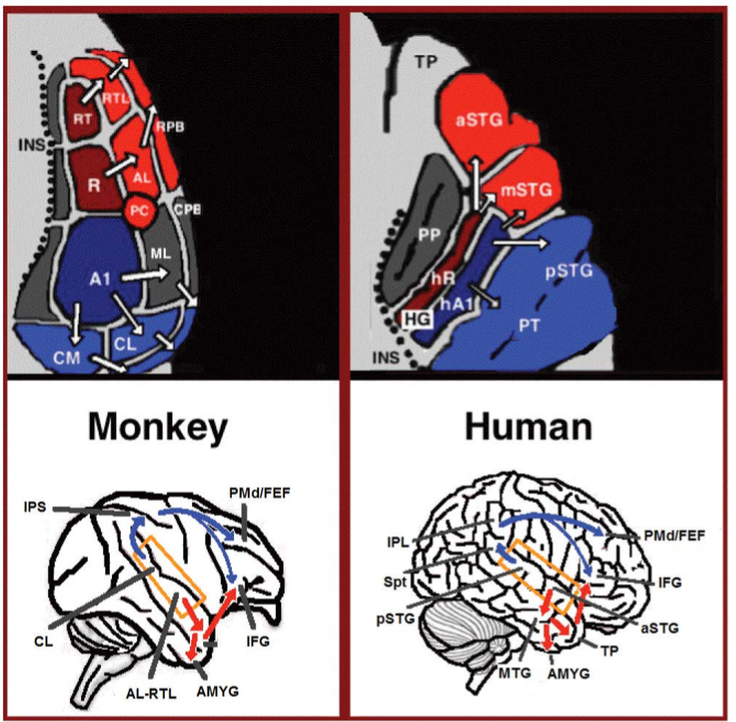

Language Processing In The Brain

In psycholinguistics, language processing refers to the way humans use words to communicate ideas and feelings, and how such communications are processed and understood. Language processing is considered to be a uniquely human ability that is not produced with the same grammatical understanding or systematicity even in humans' closest primate relatives. Throughout the 20th century the dominant model for language processing in the brain was the Geschwind–Lichtheim–Wernicke model, which is based primarily on the analysis of brain-damaged patients. However, due to improvements in intra-cortical electrophysiological recordings of monkey and human brains, as well as non-invasive techniques such as fMRI, PET, MEG and EEG, an auditory pathway consisting of two parts has been revealed and a two-streams model has been developed. In accordance with this model, there are two pathways that connect the auditory cortex to the frontal lobe, each pathway accounting for different lin ...

John McCarthy (Computer Scientist)

John McCarthy (September 4, 1927 – October 24, 2011) was an American computer scientist and cognitive scientist. He was one of the founders of the discipline of artificial intelligence. He co-authored the document that coined the term "artificial intelligence" (AI), developed the Lisp programming language family, significantly influenced the design of the language ALGOL, popularized time-sharing, and invented garbage collection. McCarthy spent most of his career at Stanford University. He received many accolades and honors, such as the 1971 Turing Award for his contributions to the topic of AI, the United States National Medal of Science, and the Kyoto Prize.

Early life and education

John McCarthy was born in Boston, Massachusetts, on September 4, 1927, to an Irish immigrant father and a Lithuanian Jewish immigrant mother, John Patrick and Ida (Glatt) McCarthy. The family was obliged to relocat ...

William Aaron Woods

William Aaron Woods (born June 17, 1942), generally known as Bill Woods, is a researcher in natural language processing, continuous speech understanding, knowledge representation, and knowledge-based search technology. He is currently a software engineer at Google.

Education

Woods received a bachelor's degree from Ohio Wesleyan University (1964) and a master's (1965) and Ph.D. (1968) in applied mathematics from Harvard University, where he then served as an assistant professor and later as a Gordon McKay Professor of the Practice of Computer Science.

Research

Woods built one of the first natural language question answering systems (LUNAR) to answer questions about the Apollo 11 Moon rocks for the NASA Manned Spacecraft Center while he was at Bolt Beranek and Newman (BBN) in Cambridge, Massachusetts. At BBN, he was a principal scientist and manager of the Artificial Intelligence Department in the 1970s and early 1980s. He was the principal investigator for BBN's early work in n ...

Janet Kolodner

Janet Lynne Kolodner is an American cognitive scientist and learning scientist. She is a Professor of the Practice at the Lynch School of Education at Boston College and co-lead of the MA Program in Learning Engineering. She is also Regents' Professor Emerita in the School of Interactive Computing, College of Computing at the Georgia Institute of Technology. She was Founding Editor in Chief of *The Journal of the Learning Sciences* and served in that role for 19 years. She was Founding Executive Officer of the International Society of the Learning Sciences (ISLS). From August 2010 through July 2014, she was a program officer at the National Science Foundation and headed the Cyberlearning and Future Learning Technologies program (originally called Cyberlearning: Transforming Education). Since leaving NSF, she has been working toward a set of projects that will integrate learning technologies coherently to support disciplinary and everyday learning, support project-based p ...

Wendy Lehnert

Wendy Grace Lehnert is an American computer scientist specializing in natural language processing and known for her pioneering use of machine learning in natural language processing. She is a professor emerita at the University of Massachusetts Amherst.

Education and career

Lehnert earned a bachelor's degree in mathematics from Portland State University in 1972, and a master's degree from Yeshiva University in 1974. She became a student of Roger Schank at Yale University, completing her Ph.D. there in 1977 with a dissertation on *The Process of Question Answering*, and was hired by Yale as an assistant professor. She moved to the University of Massachusetts Amherst in 1982. At Amherst, her doctoral students have included Claire Cardie and Ellen Riloff. She retired in 2011.

Books

Lehnert has written both scholarly and popular books on computing, including:

* *The Process of Question Answering: A Computer Simulation of Cognition* (L. Erlbaum Associates, 1978)
* Light on the Web ...

Robert Wilensky

Robert Wilensky (26 March 1951 – 15 March 2013) was an American computer scientist and emeritus professor at the UC Berkeley School of Information, whose main research focus was artificial intelligence.

Academic career

In 1971, Wilensky received his bachelor's degree in mathematics from Yale University, and in 1978 a Ph.D. in computer science from the same institution. After finishing his thesis, "Understanding Goal-Based Stories", Wilensky joined the faculty of the EECS Department at UC Berkeley. In 1986, he was the doctoral advisor of Peter Norvig, who later co-authored the standard textbook of the field, *Artificial Intelligence: A Modern Approach*. From 1993 to 1997, Wilensky was chair of the Berkeley Computer Science Division. During this time, he also served as director of the Berkeley Cognitive Science Program, director of the Berkeley Artificial Intelligence Research Project, and board member of the International Computer Science Institute. In 1997, ...

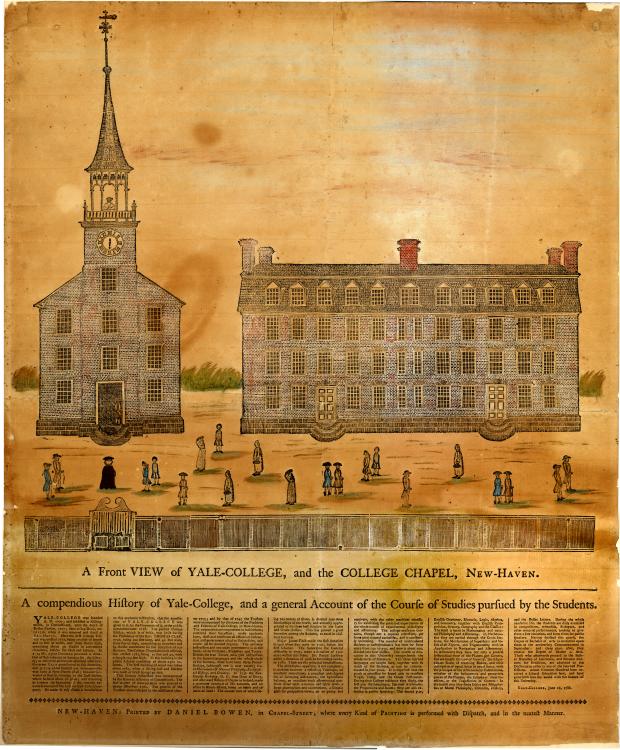

Yale University

Yale University is a private Ivy League research university in New Haven, Connecticut, United States. Founded in 1701, Yale is the third-oldest institution of higher education in the United States, and one of the nine colonial colleges chartered before the American Revolution. Yale was established as the Collegiate School in 1701 by Congregationalist clergy of the Connecticut Colony. Originally restricted to instructing ministers in theology and sacred languages, the school's curriculum expanded, incorporating humanities and sciences by the time of the American Revolution. In the 19th century, the college expanded into graduate and professional instruction, awarding the first PhD in the United States in 1861 and organizing as a university in 1887. Yale's faculty and student populations grew rapidly after 1890 due to the expansion of the physical campus and its scientif ...

Sydney Lamb

Sydney MacDonald Lamb (born May 4, 1929 in Denver, Colorado) is an American linguist. He is the Arnold Professor Emeritus of Linguistics and Cognitive Science at Rice University. His scientific contributions have been wide-ranging, including those to historical linguistics, computational linguistics, and the theory of linguistic structure. Lamb is best known for his development of Relational Network Theory (RNT; formerly known as Stratificational Grammar), starting in the early 1960s. The key insight of RNT is that linguistic systems such as phonology, morphology, syntax, and semantics are best described as networks of relationships rather than computational operations upon symbols (which is the view taken in many frameworks of formal linguistics, such as Chomskyan Generative Grammar). Lamb developed a set of graphical formalisms, known as "abstract" and "narrow" relational network notation, for the analysis of linguistic networks based on the system network notation created ...

Conceptual Dependency Theory

Conceptual dependency theory is a model of natural language understanding used in artificial intelligence systems. Roger Schank at Stanford University introduced the model in 1969, in the early days of artificial intelligence. This model was extensively used by Schank's students at Yale University such as Robert Wilensky, Wendy Lehnert, and Janet Kolodner.

Schank developed the model to represent knowledge for natural language input into computers. Partly influenced by the work of Sydney Lamb, he aimed to make the meaning independent of the words used in the input, so that two sentences identical in meaning would have a single representation. The system was also intended to draw logical inferences.

The model uses the following basic representational tokens (Thomas W. Simon and Robert J. Scholes, *Language, Mind, and Brain*, 1982, p. 105):

* *real world objects*, each with some *attributes*
* *real world actions*, each with attributes
* *times*
* *locations*

A set ...
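
To illustrate the goal that two sentences with the same meaning share one representation, here is a minimal Python sketch. It assumes a toy parser that recognizes only two sentence patterns. The names `Obj`, `Action`, `parse` and the action label `TRANSFER-POSSESSION` are hypothetical and are not Schank's notation. The fields loosely mirror the four token types (objects with attributes, actions with attributes, times, locations).

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical names throughout: Obj, Action, parse and the action label
# "TRANSFER-POSSESSION" are illustrative, not Schank's own notation.

@dataclass(frozen=True)
class Obj:
    """A real-world object, with optional attributes."""
    name: str
    attributes: frozenset = frozenset()

@dataclass(frozen=True)
class Action:
    """A real-world action; its attributes say who did what to whom."""
    kind: str
    actor: Obj
    obj: Obj
    recipient: Optional[Obj] = None
    time: Optional[str] = None      # the "times" token
    location: Optional[str] = None  # the "locations" token

ARTICLES = {"a", "an", "the"}

def parse(sentence: str) -> Action:
    """Map two surface patterns onto one meaning representation.

    Toy grammar: supports only "X gave Y a Z." and "Y received a Z from X."
    """
    words = [w for w in sentence.rstrip(".").split() if w.lower() not in ARTICLES]
    if len(words) == 4 and words[1] == "gave":
        giver, recipient, thing = words[0], words[2], words[3]
    elif len(words) == 5 and words[1] == "received" and words[3] == "from":
        recipient, thing, giver = words[0], words[2], words[4]
    else:
        raise ValueError(f"unsupported sentence: {sentence!r}")
    # The verb used ("gave" vs "received") is not stored, so both
    # wordings yield an identical structure.
    return Action(
        kind="TRANSFER-POSSESSION",
        actor=Obj(giver),
        obj=Obj(thing),
        recipient=Obj(recipient),
        time="past",
    )

if __name__ == "__main__":
    a = parse("Mary gave John a book.")
    b = parse("John received a book from Mary.")
    print(a == b)  # True: one representation for two paraphrases
```

Frozen dataclasses compare by field values, so equality of the two parses shows that the paraphrases collapse into a single structure. The sketch does not attempt the inference component the model was also meant to support.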

Stanford University

Leland Stanford Junior University, commonly referred to as Stanford University, is a private research university in Stanford, California, United States. It was founded in 1885 by railroad magnate Leland Stanford (the eighth governor of and then-incumbent United States senator representing California) and his wife, Jane, in memory of their only child, Leland Jr. The university admitted its first students in 1891, opening as a coeducational and non-denominational institution. It struggled financially after Leland died in 1893 and again after much of the campus was damaged by the 1906 San Francisco earthquake. Following World War II, university provost Frederick Terman inspired an entrepreneurial culture to build a self-sufficient local industry (later Silicon Valley). In 1951, Stanfor ...

Roger Schank

Roger Carl Schank (March 12, 1946 – January 29, 2023) was an American artificial intelligence theorist, cognitive psychologist, learning scientist, educational reformer, and entrepreneur. Beginning in the late 1960s, he pioneered conceptual dependency theory (within the context of natural language understanding) and case-based reasoning, both of which challenged cognitivist views of memory and reasoning. He began his career teaching at Yale University and Stanford University. In 1989, Schank was granted $30 million in a ten-year commitment to his research and development by Andersen Consulting, through which he founded the Institute for the Learning Sciences (ILS) at Northwestern University in Chicago.

Early life

Schank was born in Manhattan, New York, in 1946, and attended Stuyvesant High School.

Academic career

For his undergraduate degree, Schank studied mathematics at Carnegie Mellon University in Pittsburgh, Pennsylvania, and later was awarded a PhD in linguistics at the Univ ...

Lexicon

A lexicon (plural: lexicons, rarely lexica) is the vocabulary of a language or branch of knowledge (such as nautical or medical). In linguistics, a lexicon is a language's inventory of lexemes. The word *lexicon* derives from the Greek λεξικόν (lexikón), neuter of λεξικός (lexikós), meaning 'of or for words'.

Linguistic theories generally regard human languages as consisting of two parts: a lexicon, essentially a catalogue of a language's words (its wordstock); and a grammar, a system of rules that allow those words to be combined into meaningful sentences. The lexicon is also thought to include bound morphemes, which cannot stand alone as words (such as most affixes). In some analyses, compound words and certain classes of idiomatic expressions, collocations and other phrasemes are also considered to be part of the lexicon. Dictionaries list the lexicon of a given language in alphabetical order; usually, however, bound morphemes are not included.

Size and organization

Items ...
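
The division into a lexicon and a grammar, and the status of bound morphemes, can be made concrete with a small Python sketch. It rests on toy assumptions: a five-entry lexicon that includes one bound morpheme, the plural suffix `-s`, and a single sentence rule `S -> Det N V Det N`. The names `LEXICON`, `SENTENCE_RULE`, `category` and `is_sentence` are hypothetical. Real lexicons and grammars are far larger, and this rule ignores agreement.

```python
from typing import Dict, List, Tuple

# Hypothetical toy lexicon: word -> (category, is_bound_morpheme).
# The plural suffix "-s" is listed but flagged as bound, so it can
# never be used as a word by itself.
LEXICON: Dict[str, Tuple[str, bool]] = {
    "the": ("Det", False),
    "cat": ("N", False),
    "dog": ("N", False),
    "sees": ("V", False),
    "-s": ("PL", True),
}

# Hypothetical toy grammar: a single rule, S -> Det N V Det N.
SENTENCE_RULE: List[str] = ["Det", "N", "V", "Det", "N"]

def category(word: str) -> str:
    """Return the category of a free word, or of a noun stem plus a bound suffix."""
    entry = LEXICON.get(word)
    if entry is not None and not entry[1]:
        return entry[0]
    for affix, (_, bound) in LEXICON.items():
        suffix = affix.lstrip("-")
        if bound and word.endswith(suffix):
            stem = LEXICON.get(word[: -len(suffix)])
            if stem is not None and stem[0] == "N":
                return "N"  # e.g. "cats" = "cat" + "-s" is still a noun
    raise KeyError(f"{word!r} cannot be built from the lexicon")

def is_sentence(words: List[str]) -> bool:
    """True if every word comes from the lexicon and the sequence matches the rule."""
    try:
        return [category(w) for w in words] == SENTENCE_RULE
    except KeyError:
        return False

if __name__ == "__main__":
    print(is_sentence("the cat sees the dogs".split()))  # True
    print(is_sentence("the -s sees the dog".split()))    # False: bound morpheme alone
    print(is_sentence("cat the sees the dog".split()))   # False: violates the rule
```

The suffix is kept in the lexicon but rejected as a standalone word. This mirrors the point that bound morphemes belong to the lexicon yet cannot stand alone as words.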