
Named Entity

In information extraction, a named entity is a real-world object, such as a person, location, organization, or product, that can be denoted with a proper name. It can be abstract or have a physical existence. Examples of named entities include Barack Obama, New York City, and Volkswagen Golf; in principle, anything that can be named qualifies. Named entities can be viewed as entity instances (e.g., New York City is an instance of a city). Historically, the term "Named Entity" was coined during the MUC-6 evaluation campaign and covered ENAMEX (entity name expressions, e.g. persons, locations and organizations) and NUMEX (numerical expressions). A more formal definition can be derived from Saul Kripke's notion of the rigid designator. In the expression "Named Entity", the word "Named" restricts the possible set of entities to those for which one or more rigid designators stand for the referent. A designator is rigid when it designates the same thing in every possible w ...

Information Extraction

Information extraction (IE) is the task of automatically extracting structured information from unstructured and/or semi-structured machine-readable documents and other electronically represented sources. In most cases this activity concerns processing human language texts by means of natural language processing (NLP). Recent activities in multimedia document processing, such as automatic annotation and content extraction from images, audio, video and documents, can also be seen as information extraction. Due to the difficulty of the problem, current approaches to IE (as of 2010) focus on narrowly restricted domains. An example is the extraction from newswire reports of corporate mergers, such as denoted by the formal relation $\mathrm{MergerBetween}(company_1, company_2, date)$, from an online news sentence such as: "Yesterday, New York based Foo Inc. announced their acquisition of Bar Corp." A broad goal of IE is to allow computation to be done on the previously unstructured data. A more sp ...
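The merger example above can be sketched as a small pattern-based extractor. This is only an illustration of narrow-domain IE under strong assumptions: the `Merger` tuple, the `COMPANY` pattern (capitalized words followed by "Inc.", "Corp." or "Ltd.") and the fixed trigger phrase are all hypothetical choices made for this sketch, not part of any particular IE system.

```python
import re
from typing import NamedTuple, Optional


class Merger(NamedTuple):
    """Structured record for one merger relation (hypothetical schema)."""
    company_1: str
    company_2: str
    date: str


# Assumption: a company name is one or more capitalized words plus a legal suffix.
COMPANY = r"[A-Z][A-Za-z]*(?: [A-Z][A-Za-z]*)* (?:Inc|Corp|Ltd)\."

# Assumption: the sentence starts with a relative date word and uses this exact phrasing.
PATTERN = re.compile(
    rf"(?P<date>Yesterday|Today), .*?(?P<acquirer>{COMPANY}) "
    rf"announced (?:their|its) acquisition of (?P<target>{COMPANY})"
)


def extract_merger(sentence: str) -> Optional[Merger]:
    """Return a Merger record if the sentence matches the pattern, else None."""
    match = PATTERN.search(sentence)
    if match is None:
        return None
    return Merger(match["acquirer"], match["target"], match["date"])


sentence = "Yesterday, New York based Foo Inc. announced their acquisition of Bar Corp."
print(extract_merger(sentence))
```

A hand-written pattern like this only covers one phrasing, which is one reason IE systems tend to be restricted to narrow domains.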

Entity Linking

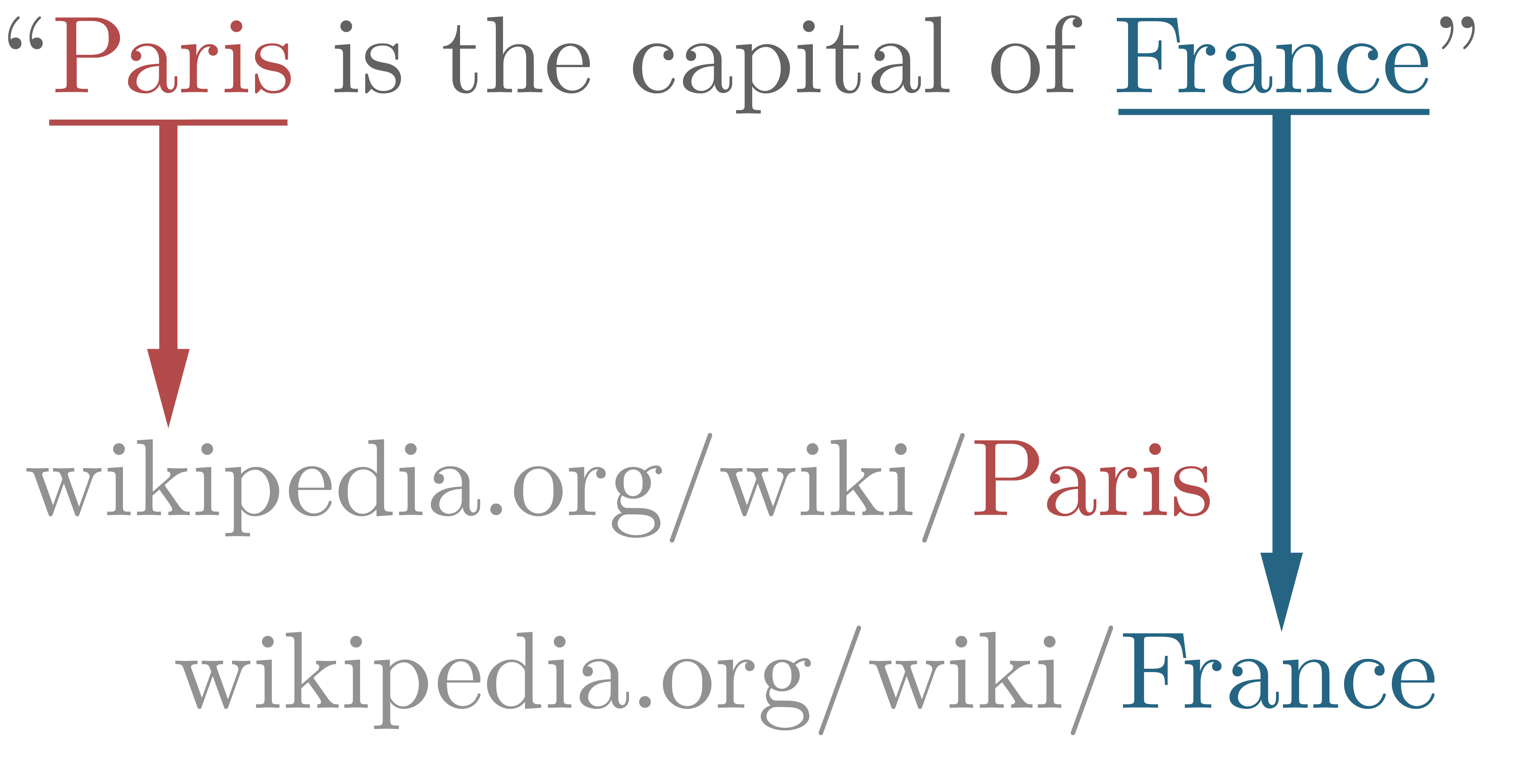

In natural language processing, entity linking, also referred to as named-entity linking (NEL), named-entity disambiguation (NED), named-entity recognition and disambiguation (NERD) or named-entity normalization (NEN), is the task of assigning a unique identity to entities (such as famous individuals, locations, or companies) mentioned in text. For example, given the sentence "Paris is the capital of France", the idea is to determine that "Paris" refers to the city of Paris and not to Paris Hilton or any other entity that could be referred to as "Paris". Entity linking differs from named-entity recognition (NER) in that NER identifies the occurrence of a named entity in text but does not identify which specific entity it is.

In entity linking, words of interest (names of persons, locations and companies) are mapped from an input text to corresponding unique entities in a target knowledge base. Words of inte ...
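The "Paris" example can be illustrated with a toy disambiguator that picks the candidate whose context words overlap most with the sentence. This is a minimal sketch, not how production linkers work: the knowledge base, the entity identifiers and the context word sets below are all invented for illustration.

```python
import re
from typing import Optional

# Hypothetical toy knowledge base: entity ID -> words that tend to appear near it.
KNOWLEDGE_BASE = {
    "Paris_(city)": {"capital", "france", "city", "seine"},
    "Paris_Hilton": {"hilton", "actress", "hotel", "celebrity"},
}

# Hypothetical candidate table: lowercased surface form -> possible entity IDs.
CANDIDATES = {"paris": ["Paris_(city)", "Paris_Hilton"]}


def link_entity(mention: str, sentence: str) -> Optional[str]:
    """Return the candidate entity sharing the most context words with the sentence."""
    tokens = set(re.findall(r"[a-z]+", sentence.lower()))
    candidates = CANDIDATES.get(mention.lower(), [])
    if not candidates:
        return None  # mention is unknown to the knowledge base
    # On a tie, max() keeps the first candidate in the list.
    return max(candidates, key=lambda entity: len(KNOWLEDGE_BASE[entity] & tokens))


print(link_entity("Paris", "Paris is the capital of France"))
print(link_entity("Paris", "Paris Hilton attended the hotel opening"))
```

Note that the mention ("Paris") is given as input here; finding the mention in the first place is the NER step, which this sketch does not perform.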

Natural Language Toolkit

The Natural Language Toolkit, or more commonly NLTK, is a suite of libraries and programs for symbolic and statistical natural language processing (NLP) for English, written in the Python programming language. It was developed by Steven Bird and Edward Loper in the Department of Computer and Information Science at the University of Pennsylvania. NLTK includes graphical demonstrations and sample data. It is accompanied by a book that explains the underlying concepts behind the language processing tasks supported by the toolkit, plus a cookbook. NLTK is intended to support research and teaching in NLP or closely related areas, including empirical linguistics, cognitive science, artificial intelligence, information retrieval, and machine learning. NLTK has been used successfully as a teaching tool, as an individual study tool, and as a platform for prototyping and building research systems. It is used in courses at 32 universities in the US and in 25 countries. NLTK suppor ...

General Architecture For Text Engineering

General Architecture for Text Engineering, or GATE, is a Java suite of tools originally developed at the University of Sheffield beginning in 1995 and now used worldwide by a wide community of scientists, companies, teachers and students for many natural language processing tasks, including information extraction in many languages. As of May 28, 2011, 881 people were on the gate-users mailing list at SourceForge.net, and 111,932 downloads from SourceForge had been recorded since the project moved to SourceForge in 2005. The paper "GATE: A framework and graphical development environment for robust NLP tools and applications" has received over 2000 citations since publication (according to Google Scholar). Books covering the use of GATE, in addition to the GATE User Guide, include "Building Search Applications: Lucene, LingPipe, and Gate" by Manu Konchady and "Introduction to Linguistic Annotation and Text Analytics" by Graham Wilcock. GATE community and research has been involved in seve ...

spaCy

spaCy is an open-source software library for advanced natural language processing, written in the programming languages Python and Cython. The library is published under the MIT license and its main developers are Matthew Honnibal and Ines Montani, the founders of the software company Explosion. Unlike NLTK, which is widely used for teaching and research, spaCy focuses on providing software for production usage. spaCy also supports deep learning workflows that allow connecting statistical models trained by popular machine learning libraries like TensorFlow, PyTorch or MXNet through its own machine learning library Thinc. Using Thinc as its backend, spaCy features convolutional neural network models for part-of-speech tagging, dependency parsing, text categorization and named entity recognition (NER). Prebuilt statistical neural network models to perform these tasks are available for 23 languages, including English, Portuguese, Spanish, Russian and Chinese, and there is al ...

Apache OpenNLP

The Apache OpenNLP library is a machine-learning-based toolkit for the processing of natural language text. It supports the most common NLP tasks, such as language detection, tokenization, sentence segmentation, part-of-speech tagging, named entity extraction, chunking, parsing and coreference resolution. These tasks are usually required to build more advanced text processing services. See also: Unstructured Information Management Architecture (UIMA), General Architecture for Text Engineering (GATE), cTAKES.

Truecasing

Truecasing, also called capitalization recovery, capitalization correction, or case restoration, is the problem in natural language processing (NLP) of determining the proper capitalization of words where such information is unavailable. This commonly comes up due to the standard practice (in English and many other languages) of automatically capitalizing the first word of a sentence. It can also arise in badly cased or noncased text (for example, all-lowercase or all-uppercase text messages). Truecasing is unnecessary in languages whose scripts do not have a distinction between uppercase and lowercase letters. This includes all languages not written in the Latin, Greek, Cyrillic or Armenian alphabets, such as Japanese, Chinese, Thai, Hebrew, Arabic, Hindi, and Georgian.

Techniques:

* Neural network models that operate at the word level or the character level have been trained to recover capitalization with greater than 90% accuracy.
* Sentence segmentation can be used to determ ...
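To make the task concrete, here is a minimal frequency-based baseline, which is much simpler than the neural models mentioned above and is not taken from the source. It assumes whitespace-tokenized text without punctuation, learns the most frequent casing of each word from a small cased corpus (skipping sentence-initial words, whose capital letter is positional), and then capitalizes the first word of the output. The function names and the training sentences are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable


def train_truecaser(sentences: Iterable[str]) -> Dict[str, str]:
    """Map each lowercased word to its most frequent cased form."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # Skip the first token: its capitalization says nothing about the word itself.
        for token in sentence.split()[1:]:
            counts[token.lower()][token] += 1
    return {lower: forms.most_common(1)[0][0] for lower, forms in counts.items()}


def truecase(text: str, model: Dict[str, str]) -> str:
    """Restore casing word by word, then capitalize the sentence-initial word."""
    tokens = [model.get(token.lower(), token.lower()) for token in text.split()]
    if tokens:
        tokens[0] = tokens[0][0].upper() + tokens[0][1:]
    return " ".join(tokens)


# Tiny illustrative training corpus (assumed to be correctly cased).
corpus = [
    "We flew to Paris with Alice",
    "Yesterday Alice visited Paris again",
]
model = train_truecaser(corpus)
print(truecase("ALICE FLEW TO PARIS", model))
```

Words never seen during training fall back to lowercase, so the quality of this baseline depends entirely on the coverage of the training corpus.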

Text Mining

Text mining, also referred to as text data mining and similar to text analytics, is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as statistical pattern learning. According to Hotho et al. (2005), we can distinguish between three different perspectives of text mining: information extraction, data mining, and a KDD (Knowledge Discovery in Databases) process. Text mining usually involves the process of structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluation and inte ...

Knowledge Extraction

Knowledge extraction is the creation of knowledge from structured (relational databases, XML) and unstructured (text, documents, images) sources. The resulting knowledge needs to be in a machine-readable and machine-interpretable format and must represent knowledge in a manner that facilitates inferencing. Although it is methodically similar to information extraction (NLP) and ETL (data warehouse), the main criterion is that the extraction result goes beyond the creation of structured information or the transformation into a relational schema. It requires either the reuse of existing formal knowledge (reusing identifiers or ontologies) or the generation of a schema based on the source data. The RDB2RDF W3C group is currently standardizing a language for extraction of Resource Description Framework (RDF) data from relational databases. Another popular example of knowledge extraction is the transformation of Wikipedia into structured data and also the mapping to existing knowledge ...
