
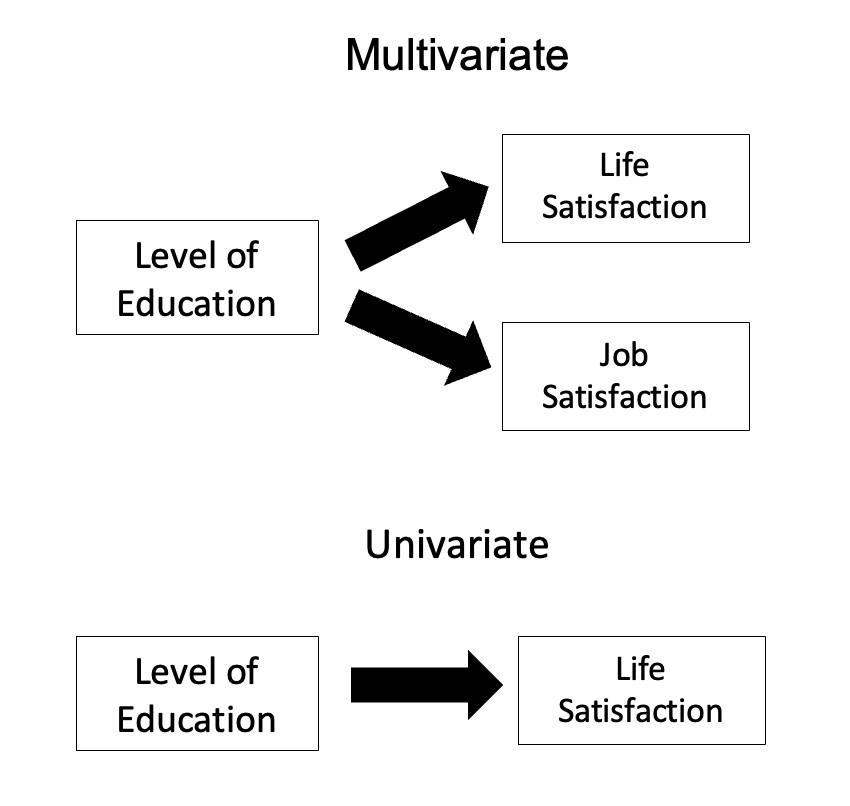

Multivariate Analysis Of Variance

In statistics, multivariate analysis of variance (MANOVA) is a procedure for comparing multivariate sample means. As a multivariate procedure, it is used when there are two or more dependent variables, and it is often followed by significance tests involving the individual dependent variables separately. For example, the dependent variables may be *k* life-satisfaction scores and *p* job-satisfaction scores, each measured at sequential time points. In this case there are *k* + *p* dependent variables, and MANOVA assumes that their linear combination follows a multivariate normal distribution, that the variance-covariance matrices are homogeneous, that the relationships among the variables are linear, that there is no multicollinearity, and that there are no outliers.

Relationship with ANOVA: MANOVA is a generalized form of univariate analysis of variance (ANOVA), although, unlike univariate ANOVA, it uses the covariance between outcome variables in testing the statistical significance of the mean differences. Where sums o ...
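To illustrate how MANOVA uses the covariance between outcome variables, here is a minimal NumPy sketch that computes Wilks' lambda for a one-way design, $\Lambda = \det(E)/\det(E + H)$, where $E$ is the within-group and $H$ the between-group sums-of-squares-and-cross-products (SSCP) matrix. Wilks' lambda is one of several MANOVA test statistics. The function name `wilks_lambda` and the list-of-arrays input format are illustrative assumptions, not any library's API, and the sketch returns only the statistic, not a p-value.

```python
import numpy as np


def wilks_lambda(groups: list[np.ndarray]) -> float:
    """Wilks' lambda for a one-way MANOVA (illustrative sketch).

    Each element of `groups` is an (n_i, p) array holding the p outcome
    variables for the observations in one group.
    """
    all_obs = np.vstack(groups)
    grand_mean = all_obs.mean(axis=0)
    p = all_obs.shape[1]
    E = np.zeros((p, p))  # within-group SSCP matrix ("error")
    H = np.zeros((p, p))  # between-group SSCP matrix ("hypothesis")
    for g in groups:
        group_mean = g.mean(axis=0)
        centered = g - group_mean
        E += centered.T @ centered
        diff = (group_mean - grand_mean).reshape(-1, 1)
        H += g.shape[0] * (diff @ diff.T)
    # The off-diagonal entries of E and H carry the covariance between outcomes,
    # which is what distinguishes MANOVA from p separate univariate ANOVAs.
    return float(np.linalg.det(E) / np.linalg.det(E + H))


rng = np.random.default_rng(0)
a = rng.normal(size=(30, 2))
b = rng.normal(size=(30, 2)) + np.array([1.0, 0.5])
print(wilks_lambda([a, b]))  # smaller values suggest more separated group means
```

Values near 1 mean the group mean vectors are similar relative to the within-group scatter; values near 0 mean the groups are well separated.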

Univariate vs. Multivariate

In mathematics, a univariate object is an expression, equation, function or polynomial involving only one variable. Objects involving more than one variable are multivariate. In some cases the distinction between the univariate and multivariate cases is fundamental; for example, the fundamental theorem of algebra and Euclid's algorithm for polynomials are fundamental properties of univariate polynomials that cannot be generalized to multivariate polynomials. In statistics, a univariate distribution characterizes one variable, although it can be applied in other ways as well. For example, univariate data are composed of a single scalar component. In time series analysis, the whole time series is the "variable": a univariate time series is the series of values over time of a single quantity. Correspondingly, a "multivariate time series" characterizes the changing values over time of several quantities. In some cases, the terminology is ambiguous, since the values within a univariat ...

Samuel Stanley Wilks

Samuel Stanley Wilks (June 17, 1906 – March 7, 1964) was an American mathematician and academic who played an important role in the development of mathematical statistics, especially in regard to practical applications.

Early life and education: Wilks was born in Little Elm, Texas, and raised on a farm. He studied Industrial Arts at the North Texas State Teachers College in Denton, Texas, obtaining his bachelor's degree in 1926. He received his master's degree in mathematics in 1928 from the University of Texas. He obtained his Ph.D. at the University of Iowa under Everett F. Lindquist; his thesis dealt with a problem of statistical measurement in education and was published in the *Journal of Educational Psychology*.

Career: Wilks became an instructor in mathematics at Princeton University in 1933; in 1938 he assumed the editorship of the journal *Annals of Mathematical Statistics* in place of Harry C. Carver. Wilks assembled an advisory board for the journal that ...

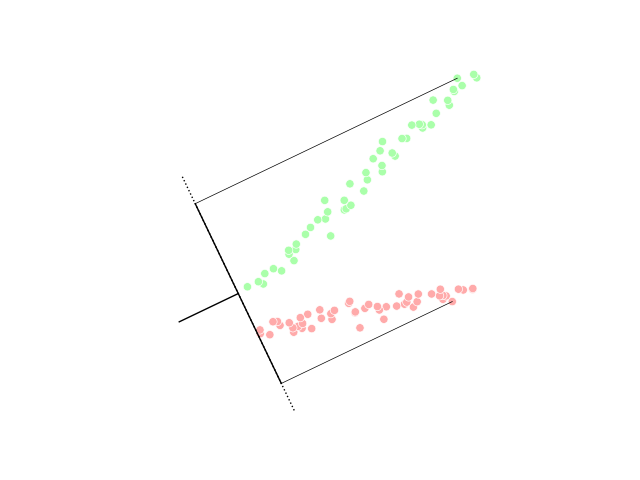

Canonical Correlation Analysis

In statistics, canonical-correlation analysis (CCA), also called canonical variates analysis, is a way of inferring information from cross-covariance matrices. If we have two vectors $X = (X_1, \dots, X_n)$ and $Y = (Y_1, \dots, Y_m)$ of random variables, and there are correlations among the variables, then canonical-correlation analysis will find linear combinations of $X$ and $Y$ which have maximum correlation with each other. T. R. Knapp notes that "virtually all of the commonly encountered parametric tests of significance can be treated as special cases of canonical-correlation analysis, which is the general procedure for investigating the relationships between two sets of variables." The method was first introduced by Harold Hotelling in 1936, although in the context of angles between flats the mathematical concept was published by Jordan in 1875.

Definition: Given two column vectors $X = (x_1, \dots, x_n)^T$ ...
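A minimal NumPy sketch of the computation, assuming paired observations stored row-wise: after centering, the canonical correlations are the singular values of the whitened cross-covariance matrix $\Sigma_{XX}^{-1/2}\Sigma_{XY}\Sigma_{YY}^{-1/2}$. This sketch whitens with Cholesky factors instead of symmetric square roots, which yields the same singular values. The function name is hypothetical, and the sketch assumes both sample covariance matrices are positive definite (more observations than variables and no exactly collinear columns).

```python
import numpy as np


def canonical_correlations(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """Canonical correlations between the columns of X (N, n) and Y (N, m).

    Rows are paired observations. Returns min(n, m) values in descending order.
    """
    N = X.shape[0]
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    Cxx = Xc.T @ Xc / (N - 1)
    Cyy = Yc.T @ Yc / (N - 1)
    Cxy = Xc.T @ Yc / (N - 1)
    # Cholesky factors: Cxx = Lx Lx^T, so Lx^{-1} whitens X (likewise for Y).
    Lx = np.linalg.cholesky(Cxx)
    Ly = np.linalg.cholesky(Cyy)
    A = np.linalg.solve(Lx, Cxy)    # Lx^{-1} Cxy
    M = np.linalg.solve(Ly, A.T).T  # Lx^{-1} Cxy Ly^{-T}
    # Singular values of the whitened cross-covariance are the canonical correlations.
    return np.linalg.svd(M, compute_uv=False)


rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
# The first column of Y is a noisy copy of X[:, 0]; the second is unrelated noise.
Y = np.column_stack([X[:, 0] + 0.5 * rng.normal(size=500), rng.normal(size=500)])
print(canonical_correlations(X, Y))  # first value large, second close to 0
```

For analysis work, an established implementation that also returns the canonical weights is preferable; this sketch only shows where the correlations come from.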

Discriminant Function Analysis

Linear discriminant analysis (LDA), normal discriminant analysis (NDA), or discriminant function analysis is a generalization of Fisher's linear discriminant, a method used in statistics and other fields, to find a linear combination of features that characterizes or separates two or more classes of objects or events. The resulting combination may be used as a linear classifier, or, more commonly, for dimensionality reduction before later classification. LDA is closely related to analysis of variance (ANOVA) and regression analysis, which also attempt to express one dependent variable as a linear combination of other features or measurements. However, ANOVA uses categorical independent variables and a continuous dependent variable, whereas discriminant analysis has continuous independent variables and a categorical dependent variable (*i.e.* the class label). Logistic regression and probit regression are more similar to LDA than ANOVA is, as they also explain a categorical ...
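For two classes, Fisher's linear discriminant picks the direction $w \propto S_W^{-1}(\mu_1 - \mu_0)$, where $S_W$ is the pooled within-class scatter matrix. The NumPy sketch below uses that direction as a linear classifier with a midpoint threshold; the function names are hypothetical, and the midpoint rule implicitly assumes equal class priors and a shared covariance.

```python
import numpy as np


def fit_fisher_lda(X0: np.ndarray, X1: np.ndarray) -> tuple[np.ndarray, float]:
    """Return a unit discriminant direction w and a decision threshold."""
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Pooled within-class scatter matrix S_W
    Sw = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    w = np.linalg.solve(Sw, m1 - m0)
    w /= np.linalg.norm(w)
    # Midpoint between the projected class means (equal-prior assumption)
    threshold = float(0.5 * (m0 + m1) @ w)
    return w, threshold


def predict(X: np.ndarray, w: np.ndarray, threshold: float) -> np.ndarray:
    """Label 1 if the projection onto w exceeds the threshold, else 0."""
    return (X @ w > threshold).astype(int)


rng = np.random.default_rng(0)
X0 = rng.normal(size=(200, 2))
X1 = rng.normal(size=(200, 2)) + np.array([6.0, 0.0])
w, t = fit_fisher_lda(X0, X1)
labels = predict(np.vstack([X0, X1]), w, t)
```

Projecting onto `w` alone (without thresholding) is the dimensionality-reduction use mentioned above.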

Outcome Variables

Outcome may refer to:

* Outcome (probability), the result of an experiment in probability theory
* Outcome (game theory), the result of players' decisions in game theory
* *The Outcome*, a 2005 Spanish film
* An outcome measure (or endpoint) in a clinical trial

See also

* Outcome-based education
* Outcomes theory

Outcomes theory provides the conceptual basis for thinking about, and working with, outcomes systems of any type. An outcomes system is any system that identifies, prioritizes, measures, attributes, or holds parties to account for outcomes of any ty ...

Hotelling's T-square

In statistics, particularly in hypothesis testing, Hotelling's $T$-squared distribution ($T^2$), proposed by Harold Hotelling, is a multivariate probability distribution that is closely related to the $F$-distribution and is most notable for arising as the distribution of a set of sample statistics that are natural generalizations of the statistics underlying Student's $t$-distribution. Hotelling's $t$-squared statistic ($t^2$) is a generalization of Student's $t$-statistic that is used in multivariate hypothesis testing.

Motivation: The distribution arises in multivariate statistics in undertaking tests of the differences between the (multivariate) means of different populations, where tests for univariate problems would make use of a $t$-test. The distribution is named for Harold Hotelling, who developed it as a generalization of Student's $t$-distribution.

Definition: If the vector $d$ is Gaussian multivariate-distributed with zero mean and ...
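For the comparison of two population means mentioned above, a common form of the statistic uses the pooled sample covariance $S_p$: $T^2 = \frac{n_1 n_2}{n_1 + n_2}(\bar{x} - \bar{y})^T S_p^{-1}(\bar{x} - \bar{y})$, and $\frac{n_1 + n_2 - p - 1}{p(n_1 + n_2 - 2)}\,T^2$ follows an $F(p,\, n_1 + n_2 - p - 1)$ distribution under the null hypothesis of equal means, assuming both samples are multivariate normal with a common covariance matrix. The NumPy sketch below computes both numbers; the function name is hypothetical, and converting the $F$ value to a p-value is left to a statistics library.

```python
import numpy as np


def hotelling_two_sample(X: np.ndarray, Y: np.ndarray) -> tuple[float, float, int, int]:
    """Two-sample Hotelling T^2 with pooled covariance (illustrative sketch).

    X is (n1, p) and Y is (n2, p). Returns (T^2, F, df1, df2).
    """
    n1, p = X.shape
    n2 = Y.shape[0]
    diff = X.mean(axis=0) - Y.mean(axis=0)
    # np.atleast_2d keeps the p == 1 case as a 1x1 matrix
    S1 = np.atleast_2d(np.cov(X, rowvar=False))
    S2 = np.atleast_2d(np.cov(Y, rowvar=False))
    Sp = ((n1 - 1) * S1 + (n2 - 1) * S2) / (n1 + n2 - 2)
    t2 = (n1 * n2) / (n1 + n2) * float(diff @ np.linalg.solve(Sp, diff))
    # Scale T^2 to an F statistic with (p, n1 + n2 - p - 1) degrees of freedom
    df1, df2 = p, n1 + n2 - p - 1
    f_stat = (n1 + n2 - p - 1) / (p * (n1 + n2 - 2)) * t2
    return t2, f_stat, df1, df2


rng = np.random.default_rng(1)
X = rng.normal(size=(30, 3))
Y = rng.normal(size=(25, 3)) + 0.8
print(hotelling_two_sample(X, Y))
```

With a single variable ($p = 1$), $T^2$ reduces to the square of the pooled two-sample $t$-statistic and the $F$ scaling factor is 1, which is one way to check the sketch.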

Approximation

An approximation is anything that is intentionally similar but not exactly equal to something else.

Etymology and usage: The word *approximation* is derived from Latin *approximatus*, from *proximus* meaning *very near* and the prefix *ad-* (*ad-* before *p* becomes *ap-* by assimilation) meaning *to*. Words like *approximate*, *approximately* and *approximation* are used especially in technical or scientific contexts. In everyday English, words such as *roughly* or *around* are used with a similar meaning. It is often found abbreviated as *approx.* The term can be applied to various properties (e.g., value, quantity, image, description) that are nearly, but not exactly correct; similar, but not exactly the same (e.g., the approximate time was 10 o'clock). Although approximation is most often applied to numbers, it is also frequently applied to such things as mathematical functio ...

Journal Of Multivariate Analysis

The *Journal of Multivariate Analysis* is a monthly peer-reviewed scientific journal that covers applications and research in the field of multivariate statistical analysis. The journal's scope includes theoretical results as well as applications of new theoretical methods in the field. Some of the research areas covered include copula modeling, functional data analysis, graphical modeling, high-dimensional data analysis, image analysis, multivariate extreme-value theory, sparse modeling, and spatial statistics. According to the *Journal Citation Reports*, the journal has a 2017 impact factor of 1.009.

See also: List of statistics journals

Null Hypothesis

In scientific research, the null hypothesis (often denoted $H_0$) is the claim that no difference or relationship exists between two sets of data or variables being analyzed. The null hypothesis is that any experimentally observed difference is due to chance alone, and an underlying causative relationship does not exist, hence the term "null". In addition to the null hypothesis, an alternative hypothesis is also developed, which claims that a relationship does exist between two variables.

Basic definitions: The *null hypothesis* and the *alternative hypothesis* are types of conjectures used in statistical tests, which are formal methods of reaching conclusions or making decisions on the basis of data. The hypotheses are conjectures about a statistical model of the population, which are based on a sample of the population. The tests are core elements of statistical inference, heavily used in the interpretation of scientific experimental data, to separate scientific claims fr ...

Roy's Greatest Root

Roy's is an upscale American restaurant that specializes in Hawaiian and Japanese fusion cuisine, with a focus on sushi, seafood and steak. The chain was founded by James Beard Foundation Award winner Roy Yamaguchi in 1988 in Honolulu, Hawaii. The concept was well received by critics from its inception and has grown to include 21 Roy's restaurants in the continental United States, six in Hawaii, one in Japan and one in Guam. Roy's is best known for its eclectic blend of Hawaiian, Japanese, and classic French cuisine created by founder Roy Yamaguchi, who was born in Tokyo, Japan, and spent his childhood visiting his grandparents, who owned a tavern in Wailuku, Maui. Yamaguchi then graduated from the Culinary Institute of America, where he received his formal culinary training, and he credits these factors with inspiring the culinary vision behind Roy's. Twenty mainland Roy's locations were owned and operated by Bloomin' Brands, Inc., until December 2014 ...

Harold Hotelling

Harold Hotelling (September 29, 1895 – December 26, 1973) was an American mathematical statistician and an influential economic theorist, known for Hotelling's law, Hotelling's lemma, and Hotelling's rule in economics, as well as Hotelling's T-squared distribution in statistics. He also developed and named the principal component analysis method widely used in finance, statistics and computer science. He was Associate Professor of Mathematics at Stanford University from 1927 until 1931, a member of the faculty of Columbia University from 1931 until 1946, and a Professor of Mathematical Statistics at the University of North Carolina at Chapel Hill from 1946 until his death. A street in Chapel Hill bears his name. In 1972, he received the North Carolina Award for contributions to science.

Statistics: Hotelling is known to statisticians because of Hotelling's T-squared distribution, which is a generalization of Student's t-distribution in the multivariate setting, and its use in ...