|

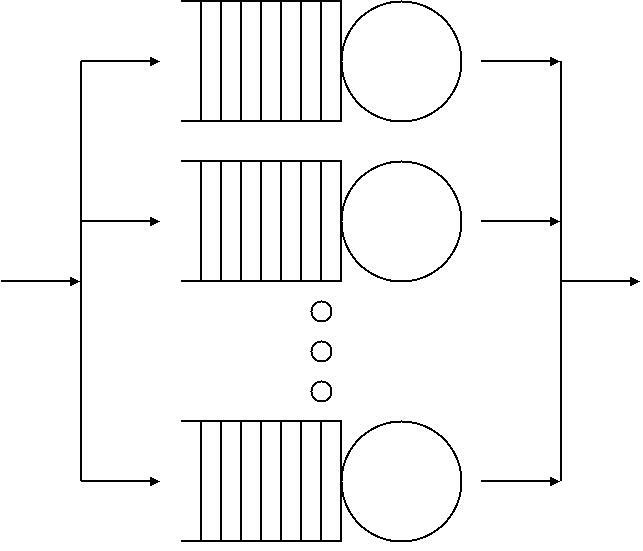

Multilevel Feedback Queue

In computer science, a multilevel feedback queue is a scheduling algorithm. Scheduling algorithms are designed to have some process running at all times to keep the central processing unit A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary Processor (computing), processor in a given computer. Its electronic circuitry executes Instruction (computing), instructions ... (CPU) busy. The multilevel feedback queue extends standard algorithms with the following design requirements: #Separate processes into multiple ''ready queues'' based on their need for the processor. #Give preference to processes with short CPU bursts. #Give preference to processes with high I/O bursts. (I/O bound processes will sleep in the ''wait queue'' to give other processes CPU time.) The multilevel feedback queue was first developed by Fernando J. Corbató (1962). For this accomplishment, the Association for Computing Machinery awarded ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans Theoretical computer science, theoretical disciplines (such as algorithms, theory of computation, and information theory) to Applied science, applied disciplines (including the design and implementation of Computer architecture, hardware and Software engineering, software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Starvation (computer Science)

In computer science, resource starvation is a problem encountered in concurrent computing where a process is perpetually denied necessary resources to process its work. Starvation may be caused by errors in a scheduling or mutual exclusion algorithm, but can also be caused by resource leaks, and can be intentionally caused via a denial-of-service attack such as a fork bomb. When starvation is impossible in a concurrent algorithm, the algorithm is called starvation-free, lockout-freed or said to have finite bypass. This property is an instance of liveness, and is one of the two requirements for any mutual exclusion algorithm; the other being correctness. The name "finite bypass" means that any process (concurrent part) of the algorithm is bypassed at most a finite number times before being allowed access to the shared resource. Scheduling Starvation is usually caused by an overly simplistic scheduling algorithm. For example, if a (poorly designed) multi-tasking system always sw ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Lottery Scheduling

Lottery scheduling is a probabilistic scheduling algorithm for processes in an operating system. Processes are each assigned some number of lottery tickets, and the scheduler draws a random ticket to select the next process. The distribution of tickets need not be uniform; granting a process more tickets provides it a relative higher chance of selection. This technique can be used to approximate other scheduling algorithms, such as Shortest job next and Fair-share scheduling. Lottery scheduling solves the problem of starvation. Giving each process at least one lottery ticket guarantees that it has non-zero probability of being selected at each scheduling operation. Implementation Implementations of lottery scheduling should take into consideration that there could be billions of tickets distributed among a large pool of threads. To have an array where each index Index (: indexes or indices) may refer to: Arts, entertainment, and media Fictional entities * Index (''A Certa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

First-come, First-served

Queueing theory is the mathematical study of Queue area, waiting lines, or wikt:queue, queues. A queueing model is constructed so that queue lengths and waiting time can be predicted. Queueing theory is generally considered a branch of operations research because the results are often used when making business decisions about the resources needed to provide a service. Queueing theory has its origins in research by Agner Krarup Erlang, who created models to describe the system of incoming calls at the Copenhagen Telephone Exchange Company. These ideas were seminal to the field of teletraffic engineering and have since seen applications in telecommunications, traffic engineering (transportation), traffic engineering, computing, project management, and particularly industrial engineering, where they are applied in the design of factories, shops, offices, and hospitals. Spelling The spelling "queueing" over "queuing" is typically encountered in the academic research field. In fa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Round-robin Scheduling

Round-robin (RR) is one of the algorithms employed by process and network schedulers in computing. Guowang Miao, Jens Zander, Ki Won Sung, and Ben Slimane, Fundamentals of Mobile Data Networks, Cambridge University Press, , 2016. As the term is generally used, time slices (also known as time quanta) are assigned to each process in equal portions and in circular order, handling all processes without priority (also known as cyclic executive). Round-robin scheduling is simple, easy to implement, and starvation-free. Round-robin scheduling can be applied to other scheduling problems, such as data packet scheduling in computer networks. It is an operating system concept. The name of the algorithm comes from the round-robin principle known from other fields, where each person takes an equal share of something in turn. Process scheduling To schedule processes fairly, a round-robin scheduler generally employs time-sharing, giving each job a time slot or ''quantum'' (its allowa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary Processor (computing), processor in a given computer. Its electronic circuitry executes Instruction (computing), instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs). The form, CPU design, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic operation, arithmetic and Bitwise operation, logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the #Fetch, fetching (from memory), #Decode, decoding and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

FIFO (computing And Electronics)

Representation of a FIFO queue In computing and in systems theory, first in, first out (the first in is the first out), acronymized as FIFO, is a method for organizing the manipulation of a data structure (often, specifically a data buffer) where the oldest (first) entry, or "head" of the queue, is processed first. Such processing is analogous to servicing people in a queue area on a first-come, first-served (FCFS) basis, i.e. in the same sequence in which they arrive at the queue's tail. FCFS is also the jargon term for the FIFO operating system scheduling algorithm, which gives every process central processing unit (CPU) time in the order in which it is demanded. FIFO's opposite is LIFO, last-in-first-out, where the youngest entry or "top of the stack" is processed first. A priority queue is neither FIFO or LIFO but may adopt similar behaviour temporarily or by default. Queueing theory encompasses these methods for processing data structures, as well as interactions be ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Aging (scheduling)

In computer science for Operating systems, aging (US English) or ageing is a Scheduling (computing), scheduling technique used to avoid Resource starvation, starvation. Scheduling (computing)#Fixed priority pre-emptive scheduling, Fixed priority scheduling is a scheduling discipline, in which Task (computing), tasks queued for utilizing a system resource are assigned a priority each. A task with a high priority is allowed to access a specific system resource before a task with a lower priority is allowed to do the same. A disadvantage of this approach is that tasks assigned with a lower priority may be starved when a large number of high priority tasks are queued. Aging is used to gradually increase the Scheduling (computing), priority of a task, based on its waiting time in the Multilevel feedback queue, ready queue. Problem In priority-based Scheduling (computing), scheduling algorithms, a major problem is indefinite block, or Starvation (computing), starvation. A process that is ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Preemption (computing)

In computing, preemption is the act performed by an external scheduler — without assistance or cooperation from the task — of temporarily interrupting an executing task, with the intention of resuming it at a later time. This preemptive scheduler usually runs in the most privileged protection ring, meaning that interruption and then resumption are considered highly secure actions. Such changes to the currently executing task of a processor are known as context switching. User mode and kernel mode In any given system design, some operations performed by the system may not be preemptable. This usually applies to kernel functions and service interrupts which, if not permitted to run to completion, would tend to produce race conditions resulting in deadlock. Barring the scheduler from preempting tasks while they are processing kernel functions simplifies the kernel design at the expense of system responsiveness. The distinction between user mode and kernel mode, w ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Scheduling (computing)

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows. The scheduling activity is carried out by a mechanism called a scheduler. Schedulers are often designed so as to keep all computer resources busy (as in load balancing), allow multiple users to share system resources effectively, or to achieve a target quality-of-service. Scheduling is fundamental to computation itself, and an intrinsic part of the execution model of a computer system; the concept of scheduling makes it possible to have computer multitasking with a single central processing unit (CPU). Goals A scheduler may aim at one or more goals, for example: * maximizing '' throughput'' (the total amount of work completed per time unit); * minimizing '' wait time'' (time from work becoming ready until the first point it begins execution); * minimizing '' latency'' o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Multilevel Queue

Multi-level queueing, used at least since the late 1950s/early 1960s, is a queue with a predefined number of levels. Items get assigned to a particular level at insert (using some predefined algorithm), and thus cannot be moved to another level (unlike in the multilevel feedback queue). Items get removed from the queue by removing all items from a level, and then moving to the next. If an item is added to a level above, the "fetching" restarts from there. Each level of the queue is free to use its own scheduling, thus adding greater flexibility than merely having multiple levels in a queue. Process Scheduling Multi-level queue scheduling algorithm is used in scenarios where the processes can be classified into groups based on property like process type, CPU time, IO access, memory size, etc. One general classification of the processes is foreground processes and background processes. In a multi-level queue scheduling algorithm, there will be 'n' number of queues, where 'n' is the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Turing Award

The ACM A. M. Turing Award is an annual prize given by the Association for Computing Machinery (ACM) for contributions of lasting and major technical importance to computer science. It is generally recognized as the highest distinction in the field of computer science and is often referred to as the "List of prizes known as the Nobel of a field or the highest honors of a field, Nobel Prize of Computing". , 79 people have been awarded the prize, with the most recent recipients being Andrew Barto and Richard S. Sutton, who won in 2024. The award is named after Alan Turing, also referred as "Father of Computer Science", who was a British mathematician and Reader (academic rank), reader in mathematics at the University of Manchester. Turing is often credited as being the founder of theoretical computer science and artificial intelligence, and a key contributor to the Allied cryptanalysis of the Enigma cipher during World War II. From 2007 to 2013, the award was accompanied by a prize ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |