Megabytes Per Second

In telecommunications, data-transfer rate is the average number of bits (bitrate), characters or symbols (baudrate), or data blocks per unit time passing through a communication link in a data-transmission system. Common data rate units are multiples of bits per second (bit/s) and bytes per second (B/s). For example, the data rates of modern residential high-speed Internet connections are commonly expressed in megabits per second (Mbit/s). Standards for unit symbols and prefixes. Unit symbol: the ISQ symbols for the bit and byte are ''bit'' and ''B'', respectively. In the context of data-rate units, one byte consists of 8 bits and is synonymous with the unit octet. The abbreviation bps is often used to mean bit/s, so that when a ''1 Mbps'' connection is advertised, it usually means that the maximum achievable bandwidth is 1 Mbit/s (one million bits per second), which is 0.125 MB/s (megabyte per second), or about 0.1192 MiB/s (mebibyte per second). The Institu ...
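The ''1 Mbps'' arithmetic above can be checked with a short sketch. The function name `mbit_per_s_to_byte_rates` is hypothetical and chosen only for illustration; the sketch assumes 8-bit bytes, decimal mega (10^6) for Mbit and MB, and binary mebi (2^20) for MiB, as stated in the text.

```python
def mbit_per_s_to_byte_rates(mbit_per_s: float) -> tuple[float, float]:
    """Convert a rate in Mbit/s to (MB/s, MiB/s), assuming 8-bit bytes."""
    bits_per_s = mbit_per_s * 1_000_000   # mega = 10^6
    bytes_per_s = bits_per_s / 8          # one byte = 8 bits
    mb_per_s = bytes_per_s / 1_000_000    # megabyte = 10^6 bytes
    mib_per_s = bytes_per_s / 2**20       # mebibyte = 2^20 bytes
    return mb_per_s, mib_per_s


mb, mib = mbit_per_s_to_byte_rates(1)
print(f"{mb:.3f} MB/s, {mib:.4f} MiB/s")  # prints "0.125 MB/s, 0.1192 MiB/s"
```

Keeping the bit-to-byte step separate from the prefix step makes it harder to confuse the factor of 8 with the decimal/binary prefix difference.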

Telecommunications

Telecommunication is the transmission of information by various types of technologies over wire, radio, optical, or other electromagnetic systems. It has its origin in the desire of humans for communication over a distance greater than that feasible with the human voice, but with a similar scale of expediency; thus, slow systems (such as postal mail) are excluded from the field. The transmission media in telecommunication have evolved through numerous stages of technology, from beacons and other visual signals (such as smoke signals, semaphore telegraphs, signal flags, and optical heliographs), to electrical cable and electromagnetic radiation, including light. Such transmission paths are often divided into communication channels, which afford the advantages of multiplexing multiple concurrent communication sessions. ''Telecommunication'' is often used in its plural form. Other examples of pre-modern long-distance communication included audio messages, such as coded drumb ...

Variations

Variation or Variations may refer to:

Science and mathematics
* Variation (astronomy), any perturbation of the mean motion or orbit of a planet or satellite, particularly of the moon
* Genetic variation, the difference in DNA among individuals or the differences between populations
** Human genetic variation, genetic differences in and among populations of humans
* Magnetic variation, difference between magnetic north and true north, measured as an angle
* ''p''-variation in mathematical analysis, a family of seminorms of functions
* Coefficient of variation in probability theory and statistics, a standardized measure of dispersion of a probability distribution or frequency distribution
* Total variation in mathematical analysis, a way of quantifying the change in a function over a subset of \mathbb{R}^n or a measure space
* Calculus of variations in mathematical analysis, a method of finding maxima and minima of functionals

Arts
* Variation (ballet) or pas seul, solo dance or ...

Terabit

The bit is the most basic unit of information in computing and digital communications. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either "1" or "0", but other representations such as ''true''/''false'', ''yes''/''no'', ''on''/''off'', or ''+''/''−'' are also commonly used. The relation between these values and the physical states of the underlying storage or device is a matter of convention, and different assignments may be used even within the same device or program. It may be physically implemented with a two-state device. The symbol for the binary digit is either "bit" per recommendation by the IEC 80000-13:2008 standard, or the lowercase character "b", as recommended by the IEEE 1541-2002 standard. A contiguous group of binary digits is commonly called a ''bit string'', a bit vector, or a single-dimensional (or multi-dimensional) ''bit array''. A group of eight bi ...

Gibibyte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer, and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol refer to an 8-bit byte as an octet. The bits in an octet are usually numbered from 0 to 7 or from 7 to 0, depending on the bit endianness. The first bit is number 0, making the eighth bit number 7. The size of the byte has historically been hardware-dependent, and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words ...

Gigabyte

The gigabyte is a multiple of the unit byte for digital information. The prefix ''giga'' means 10^9 in the International System of Units (SI). Therefore, one gigabyte is one billion bytes. The unit symbol for the gigabyte is GB. This definition is used in all contexts of science (especially data science), engineering, business, and many areas of computing, including storage capacities of hard drives, solid state drives, and tapes, as well as data transmission speeds. However, the term is also used in some fields of computer science and information technology to denote 1024^3 (2^30) bytes, particularly for sizes of RAM. Thus, prior to 1998, some usage of ''gigabyte'' has been ambiguous. To resolve this difficulty, IEC 80000-13 clarifies that a ''gigabyte'' (GB) is 10^9 bytes and specifies the term ''gibibyte'' (GiB) to denote 2^30 bytes. These differences are still readily seen, for example, when a 400 GB drive's capacity is displayed by Microsoft Windows as 372 G ...
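The 400 GB drive example follows directly from the two definitions. The sketch below is illustrative only; `gb_to_gib` is a hypothetical helper name, and the constants simply restate the SI and IEC definitions given above.

```python
GIGABYTE = 10**9   # SI definition: one billion bytes
GIBIBYTE = 2**30   # IEC binary definition: 1024^3 bytes


def gb_to_gib(gigabytes: float) -> float:
    """Express a capacity given in decimal gigabytes in binary gibibytes."""
    return gigabytes * GIGABYTE / GIBIBYTE


print(f"{gb_to_gib(400):.1f} GiB")  # prints "372.5 GiB"
```

Truncating the result to a whole number gives the 372 figure quoted for a 400 GB drive.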

Gibibit

The bit is the most basic unit of information in computing and digital communications. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either "1" or "0", but other representations such as ''true''/''false'', ''yes''/''no'', ''on''/''off'', or ''+''/''−'' are also commonly used. The relation between these values and the physical states of the underlying storage or device is a matter of convention, and different assignments may be used even within the same device or program. It may be physically implemented with a two-state device. The symbol for the binary digit is either "bit" per recommendation by the IEC 80000-13:2008 standard, or the lowercase character "b", as recommended by the IEEE 1541-2002 standard. A contiguous group of binary digits is commonly called a ''bit string'', a bit vector, or a single-dimensional (or multi-dimensional) ''bit array''. A group of eight bi ...

Gigabit

The bit is the most basic unit of information in computing and digital communications. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either "1" or "0", but other representations such as ''true''/''false'', ''yes''/''no'', ''on''/''off'', or ''+''/''−'' are also commonly used. The relation between these values and the physical states of the underlying storage or device is a matter of convention, and different assignments may be used even within the same device or program. It may be physically implemented with a two-state device. The symbol for the binary digit is either "bit" per recommendation by the IEC 80000-13:2008 standard, or the lowercase character "b", as recommended by the IEEE 1541-2002 standard. A contiguous group of binary digits is commonly called a ''bit string'', a bit vector, or a single-dimensional (or multi-dimensional) ''bit array''. A group of eight b ...

Mebibyte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer, and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol refer to an 8-bit byte as an octet. The bits in an octet are usually numbered from 0 to 7 or from 7 to 0, depending on the bit endianness. The first bit is number 0, making the eighth bit number 7. The size of the byte has historically been hardware-dependent, and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words ...

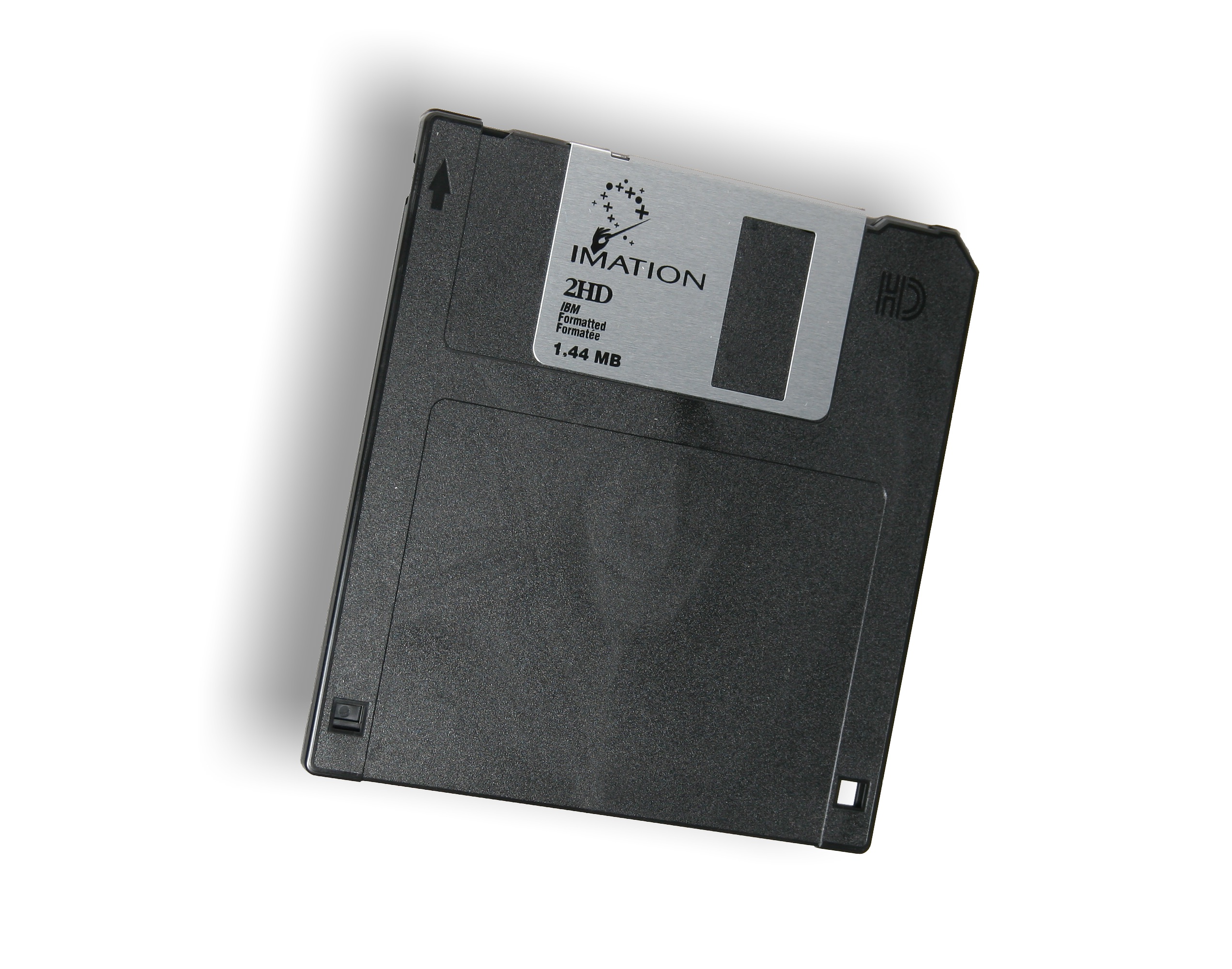

Megabyte

The megabyte is a multiple of the unit byte for digital information. Its recommended unit symbol is MB. The unit prefix ''mega'' is a multiplier of 1,000,000 (10^6) in the International System of Units (SI). Therefore, one megabyte is one million bytes of information. This definition has been incorporated into the International System of Quantities. In the computer and information technology fields, other definitions have been used that arose for historical reasons of convenience. A common usage has been to designate one megabyte as 1,048,576 bytes (2^20 B), a quantity that conveniently expresses the binary architecture of digital computer memory. The standards bodies have deprecated this usage of the megabyte in favor of a new set of binary prefixes, in which this quantity is designated by the unit mebibyte (MiB). Definitions: the unit megabyte is commonly used for 1000^2 (one million) bytes or 1024^2 bytes. The interpretation of using base 1024 originated as technical jargon for the byte SI prefix, mult ...

Mebibit

The bit is the most basic unit of information in computing and digital communications. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either "1" or "0", but other representations such as ''true''/''false'', ''yes''/''no'', ''on''/''off'', or ''+''/''−'' are also commonly used. The relation between these values and the physical states of the underlying storage or device is a matter of convention, and different assignments may be used even within the same device or program. It may be physically implemented with a two-state device. The symbol for the binary digit is either "bit" per recommendation by the IEC 80000-13:2008 standard, or the lowercase character "b", as recommended by the IEEE 1541-2002 standard. A contiguous group of binary digits is commonly called a ''bit string'', a bit vector, or a single-dimensional (or multi-dimensional) ''bit array''. A group of eight bi ...

Megabit

The megabit is a multiple of the unit bit for digital information. The prefix mega (symbol M) is defined in the International System of Units (SI) as a multiplier of 10^6 (1 million), and therefore 1 megabit = 10^6 bits = 1,000,000 bits = 1000 kilobits. The megabit has the unit symbol Mbit or Mb. The lowercase 'b' in Mb distinguishes it from MB (for megabyte). The megabit is closely related to the mebibit, a unit multiple derived from the binary prefix mebi (symbol Mi) of the same order of magnitude, which is equal to 2^20 bits = 1,048,576 bits, or approximately 5% larger than the megabit. Despite the definitions of these new prefixes for binary-based quantities of storage by international standards organizations, memory semiconductor chips are still marketed using the metric prefix names to designate binary multiples. Using the common byte size of eight bits and the standard decimal definition of megabit and kilobyte, 1 megabit is equal to 125 kilobytes (kB) or approximately 122 kibibytes (KiB). The megab ...
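The megabit figures above (125 kB, about 122 KiB, and a mebibit roughly 5% larger) can be reproduced with a few lines. This is a minimal sketch assuming 8-bit bytes; `megabits_to_kilobytes` and `mebibit_excess` are hypothetical names introduced for illustration.

```python
BITS_PER_BYTE = 8  # assumption: the common 8-bit byte


def megabits_to_kilobytes(megabits: float) -> tuple[float, float]:
    """Return (kB, KiB) for a quantity given in decimal megabits."""
    n_bytes = megabits * 10**6 / BITS_PER_BYTE
    return n_bytes / 1000, n_bytes / 1024  # kilobyte = 1000 B, kibibyte = 1024 B


kb, kib = megabits_to_kilobytes(1)
print(kb, round(kib, 2))  # prints "125.0 122.07"

# Relative size of a mebibit (2^20 bits) compared with a megabit (10^6 bits).
mebibit_excess = 2**20 / 10**6 - 1
print(f"{mebibit_excess:.2%}")  # prints "4.86%"
```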

Kilobyte

The kilobyte is a multiple of the unit byte for digital information. The International System of Units (SI) defines the prefix ''kilo'' as 1000 (10^3); per this definition, one kilobyte is 1000 bytes (International Standard IEC 80000-13, Quantities and Units – Part 13: Information science and technology, International Electrotechnical Commission, 2008). The internationally recommended unit symbol for the kilobyte is kB. In some areas of information technology, particularly in reference to solid-state memory capacity, ''kilobyte'' instead typically refers to 1024 (2^10) bytes. This arises from the prevalence of sizes that are powers of two in modern digital memory architectures, coupled with the accident that 2^10 differs from 10^3 by less than 2.5%. A kibibyte is defined by Clause 4 of IEC 80000-13 as 1024 bytes. Definitions and usage. Base 10 (1000 bytes): in the International System of Units (SI) the prefix ''kilo'' means 1000 (10^3); therefore, one kilobyte is 1000 bytes. The u ...
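The "less than 2.5%" gap between 2^10 and 10^3 is easy to verify, and the same calculation shows how the gap widens for larger prefixes. The sketch below is illustrative; `binary_excess` and the `PREFIXES` list are hypothetical names, not part of any standard.

```python
PREFIXES = ["kilo/kibi", "mega/mebi", "giga/gibi", "tera/tebi"]


def binary_excess(power: int) -> float:
    """Fraction by which 1024**power exceeds 1000**power."""
    return 1024**power / 1000**power - 1


# Print the relative gap between each binary prefix and its decimal counterpart.
for power, name in enumerate(PREFIXES, start=1):
    print(f"{name}: {binary_excess(power):.2%}")
```

For the kilo/kibi pair this gives 2.40%, consistent with the figure in the text; the mega/mebi pair gives 4.86%, which is the "approximately 5%" quoted for the mebibit.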