|

Multi-threaded

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems. In Modern Operating Systems, Tanenbaum shows that many distinct models of process organization are possible.TANENBAUM, Andrew S. Modern Operating Systems. 1992. Prentice-Hall International Editions, ISBN 0-13-595752-4. In many cases, a thread is a component of a process. The multiple threads of a given process may be executed concurrently (via multithreading capabilities), sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non- thread-local global variables at any given time. History Threads made an early appearance under the name of "task ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Subroutine

In computer programming, a function or subroutine is a sequence of program instructions that performs a specific task, packaged as a unit. This unit can then be used in programs wherever that particular task should be performed. Functions may be defined within programs, or separately in libraries that can be used by many programs. In different programming languages, a function may be called a routine, subprogram, subroutine, method, or procedure. Technically, these terms all have different definitions, and the nomenclature varies from language to language. The generic umbrella term ''callable unit'' is sometimes used. A function is often coded so that it can be started several times and from several places during one execution of the program, including from other functions, and then branch back ('' return'') to the next instruction after the ''call'', once the function's task is done. The idea of a subroutine was initially conceived by John Mauchly during his work on ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Program Counter

The program counter (PC), commonly called the instruction pointer (IP) in Intel x86 and Itanium microprocessors, and sometimes called the instruction address register (IAR), the instruction counter, or just part of the instruction sequencer, is a processor register that indicates where a computer is in its program sequence. Usually, the PC is incremented after fetching an instruction, and holds the memory address of ("points to") the next instruction that would be executed. Processors usually fetch instructions sequentially from memory, but ''control transfer'' instructions change the sequence by placing a new value in the PC. These include branches (sometimes called jumps), subroutine calls, and returns. A transfer that is conditional on the truth of some assertion lets the computer follow a different sequence under different conditions. A branch provides that the next instruction is fetched from elsewhere in memory. A subroutine call not only branches but saves the prec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Runtime System

In computer programming, a runtime system or runtime environment is a sub-system that exists both in the computer where a program is created, as well as in the computers where the program is intended to be run. The name comes from the compile time and runtime division from compiled languages, which similarly distinguishes the computer processes involved in the creation of a program (compilation) and its execution in the target machine (the run time). Most programming languages have some form of runtime system that provides an environment in which programs run. This environment may address a number of issues including the management of application memory, how the program accesses variables, mechanisms for passing parameters between procedures, interfacing with the operating system, and otherwise. The compiler makes assumptions depending on the specific runtime system to generate correct code. Typically the runtime system will have some responsibility for setting up and managing ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

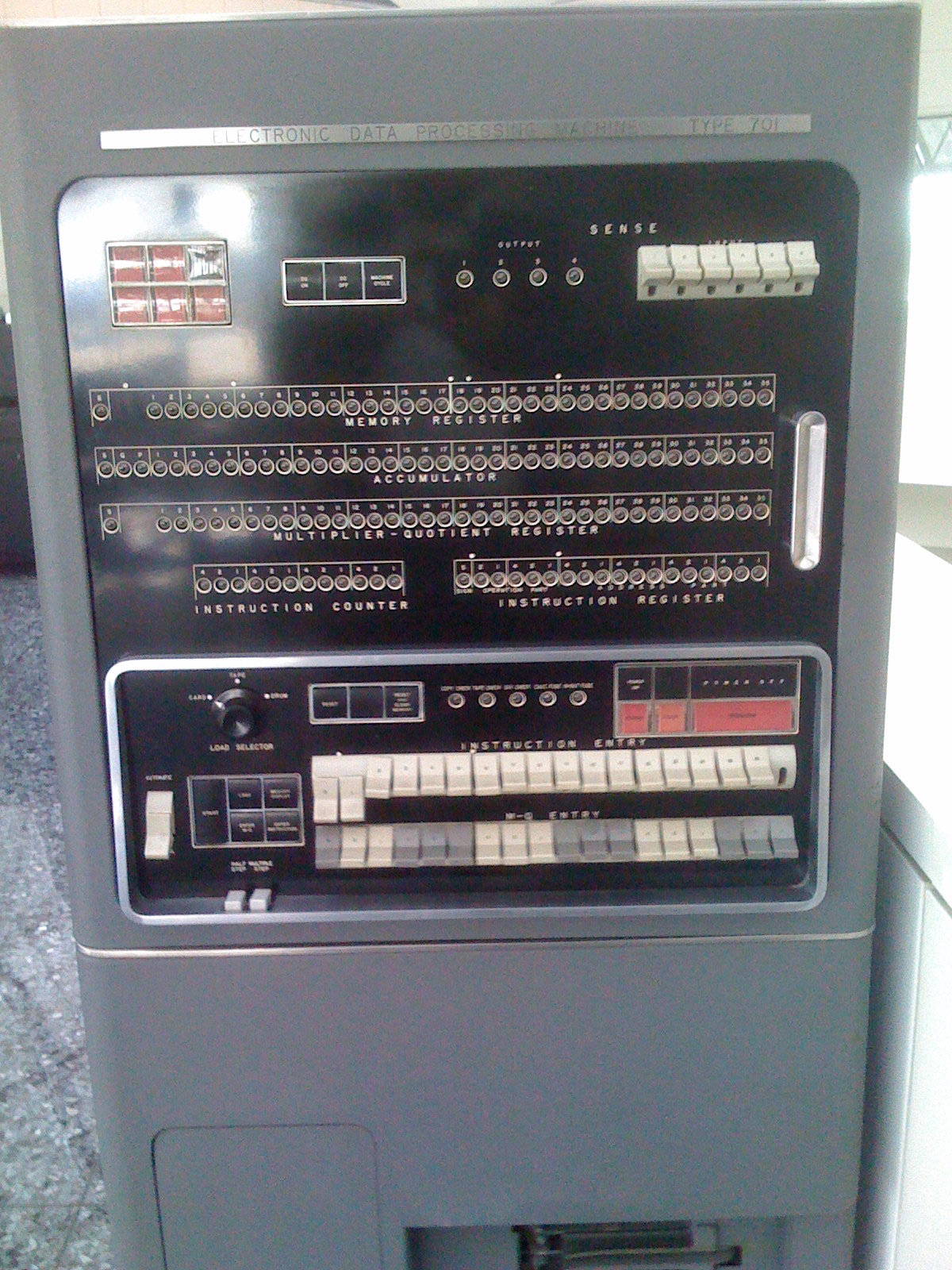

Processor Register

A processor register is a quickly accessible location available to a computer's processor. Registers usually consist of a small amount of fast storage, although some registers have specific hardware functions, and may be read-only or write-only. In computer architecture, registers are typically addressed by mechanisms other than main memory, but may in some cases be assigned a memory address e.g. DEC PDP-10, ICT 1900. Almost all computers, whether load/store architecture or not, load data from a larger memory into registers where it is used for arithmetic operations and is manipulated or tested by machine instructions. Manipulated data is then often stored back to main memory, either by the same instruction or by a subsequent one. Modern processors use either static or dynamic RAM as main memory, with the latter usually accessed via one or more cache levels. Processor registers are normally at the top of the memory hierarchy, and provide the fastest way to access data ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Call Stack

In computer science, a call stack is a stack data structure that stores information about the active subroutines of a computer program. This kind of stack is also known as an execution stack, program stack, control stack, run-time stack, or machine stack, and is often shortened to just "the stack". Although maintenance of the call stack is important for the proper functioning of most software, the details are normally hidden and automatic in high-level programming languages. Many computer instruction sets provide special instructions for manipulating stacks. A call stack is used for several related purposes, but the main reason for having one is to keep track of the point to which each active subroutine should return control when it finishes executing. An active subroutine is one that has been called, but is yet to complete execution, after which control should be handed back to the point of call. Such activations of subroutines may be nested to any level (recursive as a special ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scheduling (computing)

In computing, scheduling is the action of assigning ''resources'' to perform ''tasks''. The ''resources'' may be processors, network links or expansion cards. The ''tasks'' may be threads, processes or data flows. The scheduling activity is carried out by a process called scheduler. Schedulers are often designed so as to keep all computer resources busy (as in load balancing), allow multiple users to share system resources effectively, or to achieve a target quality-of-service. Scheduling is fundamental to computation itself, and an intrinsic part of the execution model of a computer system; the concept of scheduling makes it possible to have computer multitasking with a single central processing unit (CPU). Goals A scheduler may aim at one or more goals, for example: * maximizing ''throughput'' (the total amount of work completed per time unit); * minimizing ''wait time'' (time from work becoming ready until the first point it begins execution); * minimizing '' latency ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Translation Lookaside Buffer

A translation lookaside buffer (TLB) is a memory cache that stores the recent translations of virtual memory to physical memory. It is used to reduce the time taken to access a user memory location. It can be called an address-translation cache. It is a part of the chip's memory-management unit (MMU). A TLB may reside between the CPU and the CPU cache, between CPU cache and the main memory or between the different levels of the multi-level cache. The majority of desktop, laptop, and server processors include one or more TLBs in the memory-management hardware, and it is nearly always present in any processor that utilizes paged or segmented virtual memory. The TLB is sometimes implemented as content-addressable memory (CAM). The CAM search key is the virtual address, and the search result is a physical address. If the requested address is present in the TLB, the CAM search yields a match quickly and the retrieved physical address can be used to access memory. This is called ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Context Switching

In computing, a context switch is the process of storing the state of a process or thread, so that it can be restored and resume execution at a later point, and then restoring a different, previously saved, state. This allows multiple processes to share a single central processing unit (CPU), and is an essential feature of a multitasking operating system. The precise meaning of the phrase "context switch" varies. In a multitasking context, it refers to the process of storing the system state for one task, so that task can be paused and another task resumed. A context switch can also occur as the result of an interrupt, such as when a task needs to access disk storage, freeing up CPU time for other tasks. Some operating systems also require a context switch to move between user mode and kernel mode tasks. The process of context switching can have a negative impact on system performance. Cost Context switches are usually computationally intensive, and much of the design of opera ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Interprocess Communication

In computer science, inter-process communication or interprocess communication (IPC) refers specifically to the mechanisms an operating system provides to allow the processes to manage shared data. Typically, applications can use IPC, categorized as clients and servers, where the client requests data and the server responds to client requests. Many applications are both clients and servers, as commonly seen in distributed computing. IPC is very important to the design process for microkernels and nanokernels, which reduce the number of functionalities provided by the kernel. Those functionalities are then obtained by communicating with servers via IPC, leading to a large increase in communication when compared to a regular monolithic kernel. IPC interfaces generally encompass variable analytic framework structures. These processes ensure compatibility between the multi-vector protocols upon which IPC models rely. An IPC mechanism is either synchronous or asynchronous. Synch ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Process Isolation

Process isolation is a set of different hardware and software technologies designed to protect each process from other processes on the operating system. It does so by preventing process A from writing to process B. Process isolation can be implemented with virtual address space, where process A's address space is different from process B's address space – preventing A from writing onto B. Security is easier to enforce by disallowing inter-process memory access, in contrast with less secure architectures such as DOS in which any process can write to any memory in any other process. Limited inter-process communication In a system with process isolation, limited (controlled) interaction between processes may still be allowed over inter-process communication (IPC) channels such as shared memory, local sockets or Internet sockets. In this scheme, all of the process' memory is isolated from other processes except where the process is allowing input from collaborating processes. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Process Control Block

A process control block (PCB) is a data structure used by computer operating systems to store all the information about a process. It is also known as a process descriptor. When a process is created (initialized or installed), the operating system creates a corresponding process control block. This specifies the process state i.e. new, ready, running, waiting or terminated. Role The role of the PCBs is central in process management: they are accessed and/or modified by most utilities, particularly those involved with scheduling and resource management. Structure In multitasking operating systems, the PCB stores data needed for correct and efficient process management. Though the details of these structures are system-dependent, common elements fall in three main categories: * Process identification * Process state * Process control Status tables exist for each relevant entity, like describing memory, I/O devices, files and processes. Memory tables, for example, contain inform ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Handle (computing)

In computer programming, a handle is an abstract reference to a resource that is used when application software references blocks of memory or objects that are managed by another system like a database or an operating system. A resource handle can be an opaque identifier, in which case it is often an integer number (often an array index in an array or "table" that is used to manage that type of resource), or it can be a pointer that allows access to further information. Common resource handles include file descriptors, network sockets, database connections, process identifiers (PIDs), and job IDs. PIDs and job IDs are explicitly visible integers; while file descriptors and sockets (which are often implemented as a form of file descriptor) are represented as integers, they are typically considered opaque. In traditional implementations, file descriptors are indices into a (per-process) file descriptor table, thence a (system-wide) file table. Comparison to pointers Wh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |