
Molecular Dynamics

Molecular dynamics (MD) is a computer simulation method for analyzing the physical movements of atoms and molecules. The atoms and molecules are allowed to interact for a fixed period of time, giving a view of the dynamic "evolution" of the system. In the most common version, the trajectories of atoms and molecules are determined by numerically solving Newton's equations of motion for a system of interacting particles, where forces between the particles and their potential energies are often calculated using interatomic potentials or molecular mechanical force fields. The method is applied mostly in chemical physics, materials science, and biophysics. Because molecular systems typically consist of a vast number of particles, it is impossible to determine the properties of such complex systems analyt ...
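As an illustration of "numerically solving Newton's equations of motion", here is a minimal sketch using the velocity Verlet scheme, one common integrator choice (the excerpt does not name a specific one). The single particle on a harmonic spring, the function names, and all parameter values are assumptions made for the example; a real MD code would evaluate a force field over many interacting particles.

```python
def velocity_verlet(x, v, force, mass, dt, n_steps):
    """Integrate m * x'' = force(x) with the velocity Verlet scheme.

    Returns the list of (position, velocity) pairs, including the start.
    """
    trajectory = [(x, v)]
    f = force(x)
    for _ in range(n_steps):
        v_half = v + 0.5 * dt * f / mass   # half-step velocity update
        x = x + dt * v_half                # full-step position update
        f = force(x)                       # force at the new position
        v = v_half + 0.5 * dt * f / mass   # second half-step velocity update
        trajectory.append((x, v))
    return trajectory


def harmonic_force(x, k=1.0):
    """Hypothetical force law for the example: a spring with constant k."""
    return -k * x


def total_energy(x, v, mass=1.0, k=1.0):
    """Kinetic plus potential energy of the example oscillator."""
    return 0.5 * mass * v * v + 0.5 * k * x * x


trajectory = velocity_verlet(x=1.0, v=0.0, force=harmonic_force,
                             mass=1.0, dt=0.01, n_steps=1000)
energy_start = total_energy(*trajectory[0])
energy_end = total_energy(*trajectory[-1])
print("energy drift:", abs(energy_end - energy_start))
```

The energy drift printed at the end is a quick sanity check: for an isolated system the total energy should stay nearly constant, and a large drift usually means the time step `dt` is too big.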

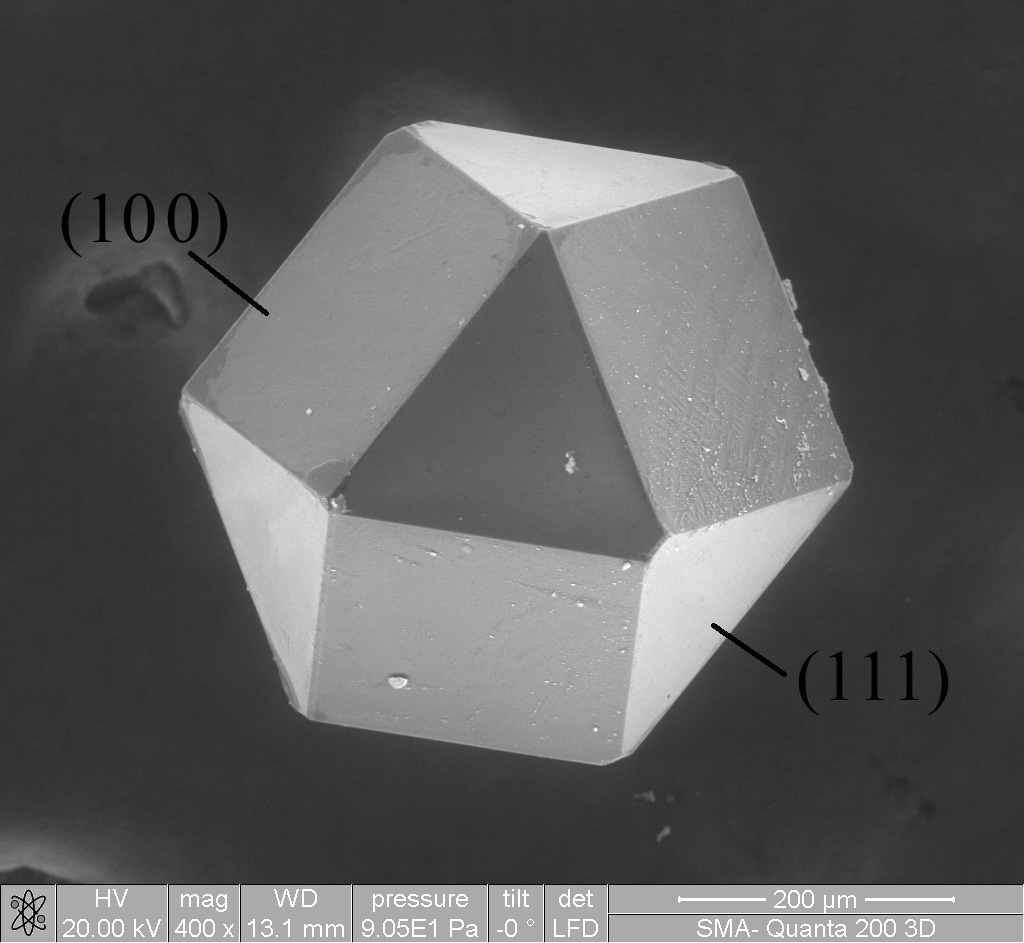

Materials Science

Materials science is an interdisciplinary field of researching and discovering materials. Materials engineering is an engineering field of finding uses for materials in other fields and industries. The intellectual origins of materials science stem from the Age of Enlightenment, when researchers began to use analytical thinking from chemistry, physics, and engineering to understand ancient, phenomenological observations in metallurgy and mineralogy. Materials science still incorporates elements of physics, chemistry, and engineering. As such, the field was long considered by academic institutions as a sub-field of these related fields. Beginning in the 1940s, materials science began to be more widely recognized as a specific and distinct field of science and engineering, and major technical universities around the world created dedicated schools for its study. Materials scientists emphasize understanding how the history of a material (*processing*) influences its struc ...

Los Alamos National Laboratory

Los Alamos National Laboratory (often shortened as Los Alamos and LANL) is one of the sixteen research and development laboratories of the United States Department of Energy (DOE), located a short distance northwest of Santa Fe, New Mexico, in the American southwest. Best known for its central role in helping develop the first atomic bomb, LANL is one of the world's largest and most advanced scientific institutions. Los Alamos was established in 1943 as Project Y, a top-secret site for designing nuclear weapons under the Manhattan Project during World War II. The site was variously called Los Alamos Laboratory and Los Alamos Scientific Laboratory. Chosen for its remote yet relatively accessible location, it served as the main hub for conducting and coordinating nuclear research, bringing together some of the world's most famous scientists, among them numerous Nobel ...

Buffon's Needle Problem

In probability theory, Buffon's needle problem is a question first posed in the 18th century by Georges-Louis Leclerc, Comte de Buffon:

> Suppose we have a floor made of parallel strips of wood, each the same width, and we drop a needle onto the floor. What is the probability that the needle will lie across a line between two strips?

Buffon's needle was the earliest problem in geometric probability to be solved; it can be solved using integral geometry. The solution for the sought probability $p$, in the case where the needle length $l$ is not greater than the width $t$ of the strips, is

$$p = \frac{2}{\pi} \cdot \frac{l}{t}.$$

This can be used to design a Monte Carlo method for approximating the number $\pi$, although that was not the original motivation for de Buffon's question. The seemingly unusual appearance of $\pi$ in this expression occurs because the underlying probability distribution function for the needle orientation is rotationally symmetric.

Solution. The problem in more mathematical terms is: ...
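The Monte Carlo idea mentioned above can be sketched in a few lines. The crossing probability for a needle no longer than the strip width is (2/π) · (needle length / strip width), so counting crossings and inverting that relation gives an estimate of π. The function name, default lengths, and seed are assumptions for the example. Note that the sketch samples the needle angle using `math.pi`, which is circular as a way of "discovering" π; it is meant only to show the estimator.

```python
import math
import random


def estimate_pi_buffon(n_drops, needle_length=1.0, strip_width=1.0, seed=0):
    """Estimate pi by simulating Buffon's needle (short-needle case only)."""
    if needle_length > strip_width:
        raise ValueError("this sketch assumes needle_length <= strip_width")
    rng = random.Random(seed)
    crossings = 0
    for _ in range(n_drops):
        # Distance from the needle's centre to the nearest line.
        centre = rng.uniform(0.0, strip_width / 2.0)
        # Acute angle between the needle and the lines.
        angle = rng.uniform(0.0, math.pi / 2.0)
        # The needle crosses a line if its half-projection reaches it.
        if centre <= (needle_length / 2.0) * math.sin(angle):
            crossings += 1
    if crossings == 0:
        raise RuntimeError("no crossings observed; use more drops")
    p_hat = crossings / n_drops
    # Invert p = (2 / pi) * (needle_length / strip_width).
    return 2.0 * needle_length / (strip_width * p_hat)


pi_estimate = estimate_pi_buffon(200_000)
print("estimated pi:", pi_estimate)
```

The estimate converges slowly: the error shrinks roughly with the inverse square root of the number of drops, which is typical of Monte Carlo methods.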

Monte Carlo Simulation

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. The name comes from the Monte Carlo Casino in Monaco, where the primary developer of the method, mathematician Stanisław Ulam, was inspired by his uncle's gambling habits. Monte Carlo methods are mainly used in three distinct problem classes: optimization, numerical integration, and generating draws from a probability distribution. They can also be used to model phenomena with significant uncertainty in inputs, such as calculating the risk of a nuclear power plant failure. Monte Carlo methods are often implemented using computer simulations, and they can provide approximate solutions to problems that are otherwise intractable or too complex to analyze mathematically. Monte Carlo methods are widely used in various ...
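Of the three problem classes listed above, numerical integration is the easiest to show in code. The following is a minimal sketch, assuming a plain uniform-sampling estimator; the function name, the integrand, and the sample count are illustrative choices, not something the excerpt specifies.

```python
import random


def mc_integrate(f, a, b, n_samples, seed=0):
    """Estimate the integral of f over [a, b] by averaging f at random points."""
    rng = random.Random(seed)
    total = sum(f(rng.uniform(a, b)) for _ in range(n_samples))
    # Mean value of f on [a, b], scaled by the interval length.
    return (b - a) * total / n_samples


# Example: the integral of x^2 over [0, 1], whose exact value is 1/3.
estimate = mc_integrate(lambda x: x * x, 0.0, 1.0, n_samples=100_000)
print("estimate:", estimate, "exact:", 1.0 / 3.0)
```

For a one-dimensional integral like this a deterministic quadrature rule would be more efficient; random sampling tends to pay off in high dimensions, where grid-based rules become impractical.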

Newtonian Mechanics

Newton's laws of motion are three physical laws that describe the relationship between the motion of an object and the forces acting on it. These laws, which provide the basis for Newtonian mechanics, can be paraphrased as follows:

1. A body remains at rest, or in motion at a constant speed in a straight line, unless it is acted upon by a force.
2. At any instant of time, the net force on a body is equal to the body's acceleration multiplied by its mass or, equivalently, the rate at which the body's momentum is changing with time.
3. If two bodies exert forces on each other, these forces have the same magnitude but opposite directions.

The three laws of motion were first stated by Isaac Newton in his *Philosophiæ Naturalis Principia Mathematica* (*Mathematical Principles of Natural Philosophy*), originally published in 1687. Newton used them to investigate and explain the motion of many physical objects and systems. In the time since Newton, new insights, especially around ...

Laplace

Pierre-Simon, Marquis de Laplace (23 March 1749 – 5 March 1827) was a French polymath, a scholar whose work has been instrumental in the fields of physics, astronomy, mathematics, engineering, statistics, and philosophy. He summarized and extended the work of his predecessors in his five-volume *Mécanique céleste* (*Celestial Mechanics*) (1799–1825). This work translated the geometric study of classical mechanics to one based on calculus, opening up a broader range of problems. Laplace also popularized and further confirmed Sir Isaac Newton's work. In statistics, the Bayesian interpretation of probability was developed mainly by Laplace. Laplace formulated Laplace's equation, and pioneered the Laplace transform which appears in many branches of mathematical physics, a field that he took a leading role in forming. The Laplacian differential operator, widely used in mathematic ...

Statistical Mechanics

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. Sometimes called statistical physics or statistical thermodynamics, its applications include many problems in a wide variety of fields such as biology, neuroscience, computer science, information theory and sociology. Its main purpose is to clarify the properties of matter in aggregate, in terms of physical laws governing atomic motion. Statistical mechanics arose out of the development of classical thermodynamics, a field for which it was successful in explaining macroscopic physical properties, such as temperature, pressure, and heat capacity, in terms of microscop ...

Microcanonical Ensemble

In statistical mechanics, the microcanonical ensemble is a statistical ensemble that represents the possible states of a mechanical system whose total energy is exactly specified. The system is assumed to be isolated in the sense that it cannot exchange energy or particles with its environment, so that (by conservation of energy) the energy of the system does not change with time. The primary macroscopic variables of the microcanonical ensemble are the total number of particles in the system (symbol: $N$), the system's volume (symbol: $V$), as well as the total energy in the system (symbol: $E$). Each of these is assumed to be constant in the ensemble. For this reason, the microcanonical ensemble is sometimes called the $NVE$ ensemble. In simple terms, the microcanonical ensemble is defined by assigning an equal probability to every microstate whose energy falls within a range centered at $E$. All other microstates are given a probability of zero. Since the probabilities must add up to 1, the ...

Thermodynamic

Thermodynamics is a branch of physics that deals with heat, work, and temperature, and their relation to energy, entropy, and the physical properties of matter and radiation. The behavior of these quantities is governed by the four laws of thermodynamics, which convey a quantitative description using measurable macroscopic physical quantities but may be explained in terms of microscopic constituents by statistical mechanics. Thermodynamics applies to various topics in science and engineering, especially physical chemistry, biochemistry, chemical engineering, and mechanical engineering, as well as other complex fields such as meteorology. Historically, thermodynamics developed out of a desire to increase the efficiency of early steam engines, particularly through the work of French physicist Sadi Carnot (1824) who believed that engine efficiency was the key that could help France win the Napoleonic Wars. Scots-Irish physicist Lord Kelvin was the first to formulate a concise d ...

Ergodic Hypothesis

In physics and thermodynamics, the ergodic hypothesis says that, over long periods of time, the time spent by a system in some region of the phase space of microstates with the same energy is proportional to the volume of this region, i.e., that all accessible microstates are equiprobable over a long period of time. Liouville's theorem states that, for a Hamiltonian system, the local density of microstates following a particle path through phase space is constant as viewed by an observer moving with the ensemble (i.e., the convective time derivative is zero). Thus, if the microstates are uniformly distributed in phase space initially, they will remain so at all times. But Liouville's theorem does *not* imply that the ergodic hypothesis holds for all Hamiltonian systems. The ergodic hypothesis is often assumed in the statistical analysis of computational physics. The analyst would assume that the average of a process parameter over time and the average over the statistica ...

Condition Number

In numerical analysis, the condition number of a function measures how much the output value of the function can change for a small change in the input argument. This is used to measure how sensitive a function is to changes or errors in the input, and how much error in the output results from an error in the input. Very frequently, one is solving the inverse problem: given f(x) = y, one is solving for *x*, and thus the condition number of the (local) inverse must be used. The condition number is derived from the theory of propagation of uncertainty, and is formally defined as the value of the asymptotic worst-case relative change in output for a relative change in input. The "function" is the solution of a problem and the "arguments" are the data in the problem. The condition number is frequently applied to questions in linear algebra, in which case the derivative is straightforward but the error could be in many different directions, and is thus computed from the geometry of t ...
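For a differentiable scalar function, the "relative change in output for a relative change in input" reduces to the quantity |x · f′(x) / f(x)|. The sketch below approximates it with a central finite difference; the function name, the step size `h`, and the two test functions are assumptions chosen for illustration, and the estimate is only meaningful where f(x) is nonzero.

```python
import math


def relative_condition_number(f, x, h=1e-6):
    """Approximate |x * f'(x) / f(x)| using a central finite difference."""
    derivative = (f(x + h) - f(x - h)) / (2.0 * h)
    return abs(x * derivative / f(x))


# Square root is well conditioned: a relative input error is roughly halved.
kappa_sqrt = relative_condition_number(math.sqrt, 2.0)

# Subtracting nearly equal numbers is ill conditioned: f(x) = x - 1 near x = 1
# turns a small relative input error into a much larger relative output error.
kappa_subtract = relative_condition_number(lambda x: x - 1.0, 1.001)

print("sqrt at 2:", kappa_sqrt)
print("x - 1 at 1.001:", kappa_subtract)
```

A condition number near 1 or below means input errors are not amplified, while a large value signals that the result may lose several digits of accuracy even if the computation itself is exact.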