
Least Angle Regression

In statistics, least-angle regression (LARS) is an algorithm for fitting linear regression models to high-dimensional data, developed by Bradley Efron, Trevor Hastie, Iain Johnstone and Robert Tibshirani. Suppose we expect a response variable to be determined by a linear combination of a subset of potential covariates. Then the LARS algorithm provides a means of producing an estimate of which variables to include, as well as their coefficients. Instead of giving a single vector result, the LARS solution consists of a curve denoting the solution for each value of the L1 norm of the parameter vector. The algorithm is similar to forward stepwise regression, but instead of including variables at each step, the estimated parameters are increased in a direction equiangular to each one's correlations with the residual.

Pros and cons. The advantages of the LARS method are:

1. It is computationally just as fast as forward selection.
2. It produces a full piecewise linear solution path, which is ...

High Dimensional Data

In statistical theory, the field of high-dimensional statistics studies data whose dimension is larger than typically considered in classical multivariate analysis. The area arose owing to the emergence of many modern data sets in which the dimension of the data vectors may be comparable to, or even larger than, the sample size, so that justification for the use of traditional techniques, often based on asymptotic arguments with the dimension held fixed as the sample size increased, was lacking.

Examples. Parameter estimation in linear models. The most basic statistical model for the relationship between a covariate vector $x \in \mathbb{R}^p$ and a response variable $y \in \mathbb{R}$ is the linear model

$$y = x^\top \beta + \epsilon,$$

where $\beta \in \mathbb{R}^p$ is an unknown parameter vector, and $\epsilon$ is random noise with mean zero and variance $\sigma^2$. Given independent responses $Y_1,\ldots,Y_n$, with corresponding covariates $x_1,\ldots,x_n$, from this model, we can form the response ...

Parametric Statistics

Parametric statistics is a branch of statistics which assumes that sample data comes from a population that can be adequately modeled by a probability distribution that has a fixed set of parameters. Conversely, a non-parametric model does not assume an explicit (finite-parametric) mathematical form for the distribution when modeling the data. However, it may make some assumptions about that distribution, such as continuity or symmetry. Most well-known statistical methods are parametric. Regarding nonparametric (and semiparametric) models, Sir David Cox has said, "These typically involve fewer assumptions of structure and distributional form but usually contain strong assumptions about independencies".

Example. The normal family of distributions all have the same general shape and are parameterized by mean and standard deviation. That means that if the mean and standard deviation are known and if the distribu ...
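As a minimal sketch of the parametric idea, the following uses Python's standard-library `statistics.NormalDist` to fit the two parameters of a normal model and then read a probability off the fitted model. The sample values are invented for illustration, and assuming the data are normally distributed is exactly the kind of modeling assumption a parametric method makes.

```python
from statistics import NormalDist

# Hypothetical measurements; the values are made up for illustration.
sample = [4.9, 5.1, 5.0, 5.3, 4.7, 5.2, 4.8, 5.0]

# Fit the two parameters of the normal family (mean and standard
# deviation) from the data. from_samples uses the sample standard deviation.
fitted = NormalDist.from_samples(sample)

# Once both parameters are fixed, any probability follows from the model.
prob_below = fitted.cdf(5.2)

print(f"mean={fitted.mean:.3f}, stdev={fitted.stdev:.3f}")
print(f"P(X < 5.2) = {prob_below:.3f}")
```

The whole distribution is summarized by two numbers here; a non-parametric approach would instead work from the data without fixing such a form.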

Estimation Theory

Estimation theory is a branch of statistics that deals with estimating the values of parameters based on measured empirical data that has a random component. The parameters describe an underlying physical setting in such a way that their value affects the distribution of the measured data. An estimator attempts to approximate the unknown parameters using the measurements. In estimation theory, two approaches are generally considered:

* The probabilistic approach (described in this article) assumes that the measured data is random with probability distribution dependent on the parameters of interest.
* The set-membership approach assumes that the measured data vector belongs to a set which depends on the parameter vector.

Examples. For example, it is desired to estimate the proportion of a population of voters who will vote for a particular candidate. That proportion is the parameter sought; the estimate is based on a small random sample of voters. Alternatively, it ...
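The voter example can be sketched in a few lines of Python. This is an illustrative simulation only: the "true" proportion `true_p`, the sample size, and the function name `estimate_proportion` are assumptions introduced here, and in a real poll the true proportion would of course be unknown.

```python
import random

def estimate_proportion(sample):
    """Return the fraction of True values: the sample-proportion estimator."""
    return sum(sample) / len(sample)

rng = random.Random(0)   # fixed seed so the run is reproducible
true_p = 0.4             # hypothetical true proportion (unknown in practice)

# Simulate polling 1,000 voters; True means "will vote for the candidate".
votes = [rng.random() < true_p for _ in range(1000)]

p_hat = estimate_proportion(votes)
print(f"estimated proportion: {p_hat:.3f}")
```

The estimate varies from sample to sample because the data have a random component, which is why the estimator itself is treated as a random quantity.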

Model Selection

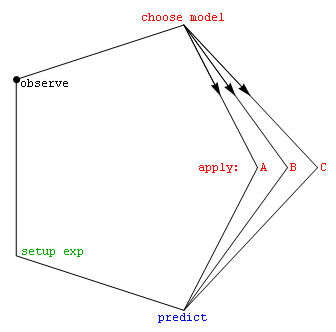

Model selection is the task of selecting a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). It has been stated that "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, it has been said that "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting a few representative models from a large set of computational models for the purpose of decision making or optimization under uncertainty.

Introduction. In its most basic forms, model selection is one ...

Regression Analysis

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given ...
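For a single predictor, the ordinary least squares line mentioned above has a closed form: the slope is the ratio of the covariance of x and y to the variance of x, and the intercept makes the line pass through the point of means. The sketch below implements that formula in plain Python; the function name `fit_line` and the data are illustrative assumptions.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-deviations and sum of squared deviations of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical data lying close to the line y = 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = fit_line(xs, ys)
print(f"intercept={intercept:.3f}, slope={slope:.3f}")
```

The function assumes the x values are not all identical; otherwise `sxx` is zero and no unique line exists.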

Lasso (statistics)

In statistics and machine learning, lasso (least absolute shrinkage and selection operator; also Lasso or LASSO) is a regression analysis method that performs both variable selection and regularization in order to enhance the prediction accuracy and interpretability of the resulting statistical model. It was originally introduced in geophysics, and later by Robert Tibshirani, who coined the term. Lasso was originally formulated for linear regression models. This simple case reveals a substantial amount about the estimator, including its relationship to ridge regression and best subset selection and the connections between lasso coefficient estimates and so-called soft thresholding. It also reveals that (like standard linear regression) the coefficient estimates do not need to be unique if covariates are collinear. Though originally defined for linear regression, lasso regularization is easily extended to other statistical models including generalized linear models, generali ...
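The connection to soft thresholding can be made concrete under one simplifying assumption: when the covariates are orthonormal and the objective is written as half the residual sum of squares plus `lam` times the L1 norm of the coefficients, each lasso coefficient is the corresponding least squares coefficient shrunk toward zero by `lam` and set to zero if it is smaller than `lam` in magnitude. The sketch below shows only that operator; the names `soft_threshold` and `lam` and the example coefficients are illustrative, and other scalings of the objective change the threshold by a constant factor.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; return 0.0 when |z| <= lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

# Hypothetical least squares coefficients for an orthonormal design.
ols_coefs = [3.0, -0.4, 1.2, -2.5]
lam = 1.0

# Apply the operator coordinate by coordinate.
lasso_coefs = [soft_threshold(b, lam) for b in ols_coefs]
print(lasso_coefs)
```

Coefficients whose magnitude falls below the threshold become exactly zero, which is how the method performs variable selection as well as shrinkage.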

High-dimensional Statistics

In statistical theory, the field of high-dimensional statistics studies data whose dimension is larger than typically considered in classical multivariate analysis. The area arose owing to the emergence of many modern data sets in which the dimension of the data vectors may be comparable to, or even larger than, the sample size, so that justification for the use of traditional techniques, often based on asymptotic arguments with the dimension held fixed as the sample size increased, was lacking.

Examples. Parameter estimation in linear models. The most basic statistical model for the relationship between a covariate vector $x \in \mathbb{R}^p$ and a response variable $y \in \mathbb{R}$ is the linear model

$$y = x^\top \beta + \epsilon,$$

where $\beta \in \mathbb{R}^p$ is an unknown parameter vector, and $\epsilon$ is random noise with mean zero and variance $\sigma^2$. Given independent responses $Y_1,\ldots,Y_n$, with corresponding covariates $x_1,\ldots,x_n$, from this model, we can form the response ...

SAS (software)

SAS (previously "Statistical Analysis System") is a statistical software suite developed by SAS Institute for data management, advanced analytics, multivariate analysis, business intelligence, criminal investigation, and predictive analytics. SAS was developed at North Carolina State University from 1966 until 1976, when SAS Institute was incorporated. SAS was further developed in the 1980s and 1990s with the addition of new statistical procedures, additional components and the introduction of JMP. A point-and-click interface was added in version 9 in 2004. A social media analytics product was added in 2010.

Technical overview and terminology. SAS is a software suite that can mine, alter, manage and retrieve data from a variety of sources and perform statistical analysis on it. SAS provides a graphical point-and-click user interface for non-technical users and more through the SAS language. SAS programs have DATA steps, w ...

Python (language)

Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation. Python is dynamically typed and garbage-collected. It supports multiple programming paradigms, including structured (particularly procedural), object-oriented and functional programming. It is often described as a "batteries included" language due to its comprehensive standard library. Guido van Rossum began working on Python in the late 1980s as a successor to the ABC programming language and first released it in 1991 as Python 0.9.0. Python 2.0 was released in 2000 and introduced new features such as list comprehensions, cycle-detecting garbage collection, reference counting, and Unicode sup ...

R (language)

R is a programming language for statistical computing and graphics supported by the R Core Team and the R Foundation for Statistical Computing. Created by statisticians Ross Ihaka and Robert Gentleman, R is used among data miners, bioinformaticians and statisticians for data analysis and developing statistical software. Users have created packages to augment the functions of the R language. According to user surveys and studies of scholarly literature databases, R is one of the most commonly used programming languages in data mining. R ranks 12th in the TIOBE index, a measure of programming language popularity, in which the language peaked in 8th place in August 2020. The official R software environment is an open-source free software environment within the GNU package, available under the GNU General Public License. It is written primarily in C, Fortran, and R itself (partially self-hosting). Precompiled executables are provided for various operating systems. R has ...

Multicollinearity

In statistics, multicollinearity (also collinearity) is a phenomenon in which one predictor variable in a multiple regression model can be linearly predicted from the others with a substantial degree of accuracy. In this situation, the coefficient estimates of the multiple regression may change erratically in response to small changes in the model or the data. Multicollinearity does not reduce the predictive power or reliability of the model as a whole, at least within the sample data set; it only affects calculations regarding individual predictors. That is, a multivariate regression model with collinear predictors can indicate how well the entire bundle of predictors predicts the outcome variable, but it may not give valid results about any individual predictor, or about which predictors are redundant with respect to others. Note that in statements of the assumptions underlying regression analyses such as ordinary least squares, the phrase "no multicollinearity" usually refer ...
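One common way to quantify how well a predictor can be linearly predicted from the others is the variance inflation factor (VIF), which is not mentioned in the excerpt above and is introduced here only as an illustration. With exactly two predictors, the VIF of either one reduces to 1 / (1 - r^2), where r is their Pearson correlation. The data and function names below are hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def vif_two_predictors(xs, ys):
    """VIF for a model with exactly two predictors: 1 / (1 - r^2)."""
    r = pearson(xs, ys)
    return 1.0 / (1.0 - r ** 2)

# Hypothetical predictors: x2 is almost exactly twice x1.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.1, 3.9, 6.2, 7.8, 10.1, 11.9]

r = pearson(x1, x2)
vif = vif_two_predictors(x1, x2)
print(f"correlation={r:.4f}, VIF={vif:.1f}")
```

A very large VIF signals that the individual coefficient estimates for these predictors would be unstable, even though the pair as a whole may still predict the outcome well. The function fails with a division by zero if the two predictors are perfectly collinear.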