Law Of The Iterated Logarithm

In probability theory, the law of the iterated logarithm describes the magnitude of the fluctuations of a random walk. The original statement of the law of the iterated logarithm is due to A. Ya. Khinchin (1924). Another statement was given by A. N. Kolmogorov in 1929.

Let $Y_1, Y_2, \ldots$ be independent, identically distributed random variables with mean zero and unit variance. Let $S_n = Y_1 + \dots + Y_n$. Then

$$\limsup_{n \to \infty} \frac{S_n}{\sqrt{2n \log\log n}} = 1 \quad \text{a.s.},$$

where “log” is the natural logarithm, “lim sup” denotes the limit superior, and “a.s.” stands for “almost surely”.

The law of the iterated logarithm operates “in between” the law of large numbers and the central limit theorem. There are two versions of the law of large numbers, the weak and the strong. Both state that the sums $S_n$, scaled by $n^{-1}$, converge to zero, in probability and almost surely respectively:

$$\frac{S_n}{n} \xrightarrow{p} 0, \qquad \frac{S_n}{n} \xrightarrow{\text{a.s.}} 0 \quad \text{as } n \to \infty.$$

...
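The law-of-large-numbers scaling and the iterated-logarithm scaling can be compared on a single simulated path. This minimal sketch assumes ±1 coin-flip steps, which have mean zero and unit variance. `lil_path_stats` and its `burn_in` parameter are hypothetical names used only for illustration. The lim sup concerns infinitely many $n$, so the maximum over a finite late window is at best a rough hint and does not check the theorem.

```python
import math
import random


def lil_path_stats(n_steps: int, burn_in: int = 1_000, seed: int = 0) -> tuple[float, float]:
    """Simulate one +/-1 random walk; return (S_N / N, max LIL ratio over burn_in <= n <= N).

    The LIL ratio is S_n / sqrt(2 n log log n). burn_in must be >= 3 so that
    log log n > 0. A finite-window maximum only hints at the lim sup.
    """
    if not 3 <= burn_in <= n_steps:
        raise ValueError("need 3 <= burn_in <= n_steps")
    rng = random.Random(seed)
    s = 0
    best = -math.inf
    for n in range(1, n_steps + 1):
        s += rng.choice((-1, 1))  # Y_n: mean 0, variance 1
        if n >= burn_in:
            best = max(best, s / math.sqrt(2 * n * math.log(math.log(n))))
    return s / n_steps, best


lln_value, lil_max = lil_path_stats(100_000, seed=1)
print(f"S_N / N = {lln_value:.5f}   max LIL ratio = {lil_max:.3f}")
```

$S_N / N$ should be close to zero. The LIL ratio is typically of order 1, because $\sqrt{2n\log\log n}$ grows only slightly faster than the $\sqrt{n}$ scale of the central limit theorem.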

Law Of Large Numbers

In probability theory, the law of large numbers (LLN) is a theorem that describes the result of performing the same experiment a large number of times. According to the law, the average of the results obtained from a large number of trials should be close to the expected value, and it tends to become closer to it as more trials are performed. The LLN is important because it guarantees stable long-term results for the averages of some random events. For example, while a casino may lose money in a single spin of the roulette wheel, its earnings will tend towards a predictable percentage over a large number of spins. Any winning streak by a player will eventually be overcome by the parameters of the game. Importantly, the law applies (as the name indicates) only when a large number of observations is considered. There is no principle that a small number of observations will coincide with the expected value or that a streak of one value will immediately be "balanced ...
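The roulette example can be made concrete with a short simulation. This sketch assumes a single-zero wheel (37 equally likely pockets, 18 of them red) and a repeated 1-unit bet on red that wins or loses one unit. These details are not in the excerpt. `roulette_average` is a hypothetical helper. From the player's side, the expected value per spin is $18/37 - 19/37 = -1/37$, so the casino earns about $1/37$ of each stake in the long run.

```python
import random


def roulette_average(n_spins: int, seed: int = 0) -> float:
    """Average net payoff of a 1-unit bet on red, repeated for n_spins spins.

    Assumes a single-zero wheel: 37 equally likely pockets, 18 of them red.
    A win pays +1 unit and a loss costs 1 unit, so the expected value is -1/37.
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(n_spins):
        pocket = rng.randrange(37)   # 0..36, each equally likely
        is_red = 1 <= pocket <= 18   # only the count (18 of 37) matters, not which pockets
        total += 1 if is_red else -1
    return total / n_spins


for n in (100, 10_000, 200_000):
    print(f"{n:>7} spins: average {roulette_average(n, seed=42):+.4f} (expected {-1 / 37:+.4f})")
```

Short runs can easily show a positive average, which is a winning streak. Only the long-run average is pinned near $-1/37$.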

Asymptotic Theory (Statistics)

In statistics, asymptotic theory, or large sample theory, is a framework for assessing properties of estimators and statistical tests. Within this framework, it is often assumed that the sample size $n$ may grow indefinitely. The properties of estimators and tests are then evaluated under the limit $n \to \infty$. In practice, a limit evaluation is considered to be approximately valid for large finite sample sizes too (Höpfner, R. (2014), Asymptotic Statistics, Walter de Gruyter, 286 pages).

Most statistical problems begin with a dataset of size $n$. Asymptotic theory proceeds by assuming that it is possible (in principle) to keep collecting additional data, so that the sample size grows without bound, i.e. $n \to \infty$. Under this assumption, many results can be obtained that are unavailable for samples of finite size. An example is the weak law of large numbers. The law states that for a sequence of independent and identically distributed (IID) random variables $X_1, X_2, \ldots$, if one value is drawn from each rand ...
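The weak law of large numbers says that, for every $\varepsilon > 0$, $P(|\bar X_n - \mu| > \varepsilon) \to 0$ as $n \to \infty$. This minimal Monte Carlo sketch estimates that probability for growing $n$. It assumes Uniform(0, 1) draws (so $\mu = 0.5$) and $\varepsilon = 0.05$. `deviation_probability` is a hypothetical helper. The estimates are noisy approximations that should shrink toward zero; they illustrate the limit but do not prove it.

```python
import random


def deviation_probability(n: int, eps: float = 0.05, trials: int = 2_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(|mean of n Uniform(0,1) draws - 0.5| > eps)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample_mean = sum(rng.random() for _ in range(n)) / n
        if abs(sample_mean - 0.5) > eps:
            hits += 1
    return hits / trials


for n in (10, 100, 1_000):
    print(n, deviation_probability(n))
```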

Wiener Process

In mathematics, the Wiener process is a real-valued continuous-time stochastic process named in honor of American mathematician Norbert Wiener for his investigations of the mathematical properties of one-dimensional Brownian motion. It is often also called Brownian motion due to its historical connection with the physical process of the same name originally observed by Scottish botanist Robert Brown. It is one of the best known Lévy processes (càdlàg stochastic processes with stationary independent increments) and occurs frequently in pure and applied mathematics, economics, quantitative finance, evolutionary biology, and physics. The Wiener process plays an important role in both pure and applied mathematics. In pure mathematics, the Wiener process gave rise to the study of continuous-time martingales. It is a key process in terms of which more complicated sto ...
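The stationary independent increments mentioned above suggest the standard way to approximate a Wiener path on a grid: start at $W(0) = 0$ and add independent $N(0, \Delta t)$ increments. This sketch is a discretization under that assumption and is not an exact construction. `wiener_path` is a hypothetical helper. As a sanity check it uses the quadratic variation, the sum of squared increments, which for Brownian motion on $[0, T]$ concentrates near $T$. The excerpt does not discuss this property.

```python
import math
import random


def wiener_path(n_steps: int, t_end: float = 1.0, seed: int = 0) -> list[float]:
    """Approximate W on [0, t_end] at n_steps + 1 equally spaced times.

    Uses independent Gaussian increments W(t + dt) - W(t) ~ N(0, dt) and W(0) = 0.
    """
    rng = random.Random(seed)
    dt = t_end / n_steps
    path = [0.0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path


w = wiener_path(10_000)
# Sum of squared increments (quadratic variation) should be close to t_end = 1.
qv = sum((b - a) ** 2 for a, b in zip(w, w[1:]))
print(f"W(1) = {w[-1]:.3f}, quadratic variation = {qv:.3f}")
```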

Iterated Logarithm

In computer science, the iterated logarithm of $n$, written $\log^* n$ (usually read "log star"), is the number of times the logarithm function must be iteratively applied before the result is less than or equal to 1. The simplest formal definition is the result of this recurrence relation:

$$\log^* n := \begin{cases} 0 & \text{if } n \le 1; \\ 1 + \log^*(\log n) & \text{if } n > 1 \end{cases}$$

On the positive real numbers, the continuous super-logarithm (inverse tetration) is essentially equivalent:

$$\log^* n = \lceil \operatorname{slog}_e(n) \rceil,$$

i.e. the base $b$ iterated logarithm is $\log^* n = y$ if $n$ lies within the interval ${}^{y-1}b < n \le {}^{y}b$, where ${}^{y}b$ denotes tetration. In computer science, $\lg^* n$ is often used to indicate the binary iterated logarithm, which iterates the binary logarithm (with base 2) instead of the natural logarithm (with base $e$). Mathematically, the iterated logarithm is well-defined for any base greater than $e^{1/e} \approx 1.444667$, not only for base 2 and base $e$. Analysis of ...
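The recurrence translates directly into a loop. This sketch follows the definition above, with the base defaulting to 2 for the binary iterated logarithm. `log_star` is a hypothetical name. For base 2 it deliberately uses `math.log2`, because `math.log(x, 2)` can be off by a rounding error on exact powers of two, and that error could add a spurious extra step.

```python
import math


def log_star(n: float, base: float = 2.0) -> int:
    """Iterated logarithm: how many times log_base is applied before the result is <= 1.

    Follows the recurrence log*(n) = 0 if n <= 1, else 1 + log*(log n).
    """
    if base <= math.exp(1 / math.e):
        raise ValueError("base must exceed e**(1/e) ~ 1.444667")

    def log(x: float) -> float:
        # math.log2 is exact on powers of two; math.log(x, 2) can drift by an ulp.
        return math.log2(x) if base == 2 else math.log(x, base)

    count = 0
    while n > 1:
        n = log(n)
        count += 1
    return count


print([log_star(x) for x in (1, 2, 4, 16, 65536, 2**65536)])  # [0, 1, 2, 3, 4, 5]
```

The last value shows why $\log^*$ is treated as effectively constant in algorithm analysis. Even $2^{65536}$, a number with nearly 20,000 decimal digits, has a binary iterated logarithm of only 5.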

Martingale (Probability Theory)

In probability theory, a martingale is a sequence of random variables (i.e., a stochastic process) for which, at a particular time, the conditional expectation of the next value in the sequence is equal to the present value, regardless of all prior values.

Originally, martingale referred to a class of betting strategies that was popular in 18th-century France. The simplest of these strategies was designed for a game in which the gambler wins their stake if a coin comes up heads and loses it if the coin comes up tails. The strategy had the gambler double their bet after every loss, so that the first win would recover all previous losses plus win a profit equal to the original stake. As the gambler's wealth and available time jointly approach infinity, their probability of eventually flipping heads approaches 1, which makes the martingale betting strategy seem like a sure thing. However, the exponential growth of the bets eventually bankrupts its users due to f ...
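The doubling strategy can be analyzed exactly for a gambler whose bankroll covers only a limited number of straight losses. This sketch makes several assumptions that are not in the text: a first stake of 1 unit, a bankroll of `2**k - 1` units, and the hypothetical helper `doubling_cycle`. It uses exact fractions. Each cycle almost always nets +1, but for a fair coin the rare total ruin exactly cancels that gain. The expected gain is therefore zero, which is what the martingale property of wealth in a fair game predicts.

```python
from fractions import Fraction


def doubling_cycle(k: int, p_win: Fraction = Fraction(1, 2)) -> tuple[Fraction, Fraction]:
    """Exact outcome of one doubling cycle with a bankroll of 2**k - 1 units and a 1-unit first stake.

    The gambler bets 1, 2, 4, ... and stops at the first win (net +1) or after
    k straight losses, when 1 + 2 + ... + 2**(k-1) = 2**k - 1 units are gone.
    Returns (probability of ruin, expected net gain) for the cycle.
    """
    ruin = (1 - p_win) ** k
    expected_gain = (1 - ruin) * 1 - ruin * (2**k - 1)
    return ruin, expected_gain


print(doubling_cycle(10))                    # (Fraction(1, 1024), Fraction(0, 1))
print(doubling_cycle(10, Fraction(18, 37)))  # assumed even-money roulette bet: negative gain
```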

Yongge Wang

Yongge Wang (born 1967) is a computer science professor at the University of North Carolina at Charlotte specializing in algorithmic complexity and cryptography. He is the inventor of the IEEE P1363 cryptographic standards SRP5 and WANG-KE and has contributed to the mathematical theory of algorithmic randomness. He co-authored a paper demonstrating that a recursively enumerable real number is an algorithmically random sequence if and only if it is Chaitin's constant for some encoding of programs. He also showed the separation of Schnorr randomness from recursive randomness, and invented a distance-based statistical testing technique to improve NIST SP800-22 randomness testing. In cryptographic research, he is known for the in ...

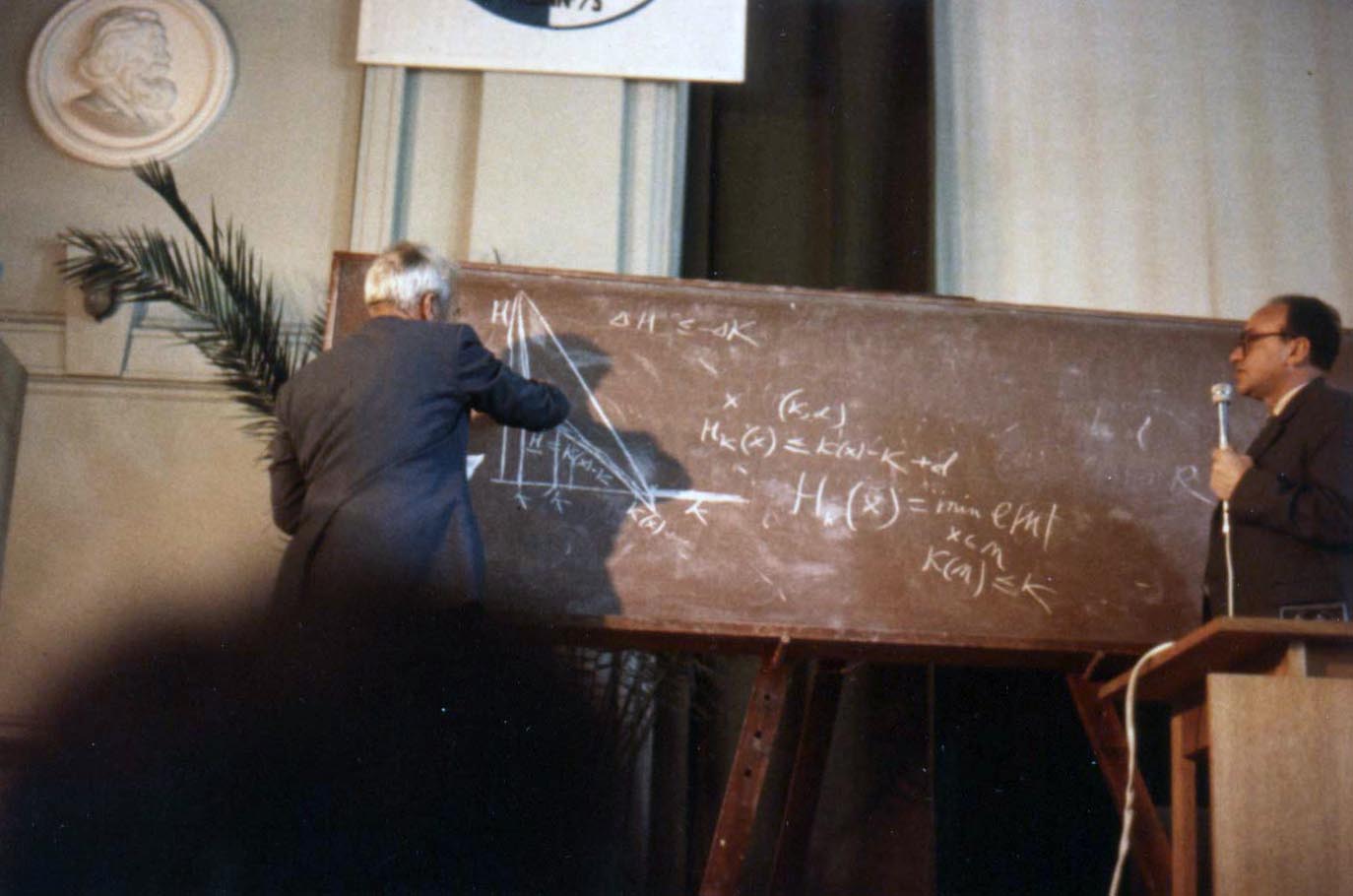

Kolmogorov

Andrey Nikolaevich Kolmogorov (Russian: Андре́й Никола́евич Колмого́ров, IPA: [ɐnˈdrʲej nʲɪkɐˈlajɪvʲɪtɕ kəlmɐˈɡorəf]; 25 April 1903 – 20 October 1987) was a Soviet mathematician who contributed to the mathematics of probability theory, topology, intuitionistic logic, turbulence, classical mechanics, algorithmic information theory and computational complexity.

Andrey Kolmogorov was born in Tambov, about 500 kilometers south-southeast of Moscow, in 1903. His unmarried mother, Maria Y. Kolmogorova, died giving birth to him. Andrey was raised by two of his aunts in Tunoshna (near Yaroslavl) at the estate of his grandfather, a well-to-do nobleman. Little is known about Andrey's father. He was supposedly named Nikolai Matveevich Kataev and had been an agronomist. Kataev had been exiled from St. Petersburg to the Yaroslavl province after his participation in the revolutionary movem ...

Khinchin

Aleksandr Yakovlevich Khinchin (Russian: Алекса́ндр Я́ковлевич Хи́нчин; French: Alexandre Khintchine; July 19, 1894 – November 18, 1959) was a Soviet mathematician and one of the most significant contributors to the Soviet school of probability theory.

He was born in the village of Kondrovo, Kaluga Governorate, Russian Empire. While studying at Moscow State University, he became one of the first followers of the famous Luzin school. Khinchin graduated from the university in 1916 and six years later became a full professor there, retaining that position until his death. Khinchin's early works focused on real analysis. Later he applied methods from the metric theory of functions to problems in probability theory and number theory. He became one of the founders of modern probability theory, discovering the law of the iterated logarithm in 1924, achieving important results in the field of limit theorems, giving a definition of a s ...

Kolmogorov's Zero–one Law

In probability theory, Kolmogorov's zero–one law, named in honor of Andrey Nikolaevich Kolmogorov, specifies that a certain type of event, namely a tail event of independent σ-algebras, will either almost surely happen or almost surely not happen; that is, the probability of such an event occurring is zero or one.

Tail events are defined in terms of countably infinite families of σ-algebras. For illustrative purposes, we present here the special case in which each σ-algebra is generated by a random variable $X_k$ for $k \in \mathbb{N}$. Let $\mathcal{F}$ be the σ-algebra generated jointly by all of the $X_k$. Then a tail event $F \in \mathcal{F}$ is an event that is probabilistically independent of each finite subset of these random variables. (Note: $F$ belonging to $\mathcal{F}$ implies that membership in $F$ is uniquely determined by the values of the $X_k$, but the latter condition is strictly weaker and does not suffice to prove the zero–one law.) For example, the event that ...

Convergence Of Random Variables

In probability theory, there exist several different notions of convergence of random variables. The convergence of sequences of random variables to some limit random variable is an important concept in probability theory and in its applications to statistics and stochastic processes. The same concepts are known in more general mathematics as stochastic convergence. They formalize the idea that a sequence of essentially random or unpredictable events can sometimes be expected to settle down into a behavior that is essentially unchanging when items far enough into the sequence are studied. The different possible notions of convergence relate to how such a behavior can be characterized. Two readily understood behaviors are that the sequence eventually takes a constant value, and that values in the sequence continue to change but can be described by an unchanging probability distribution.

"Stochastic convergence" formalizes the idea that a sequence of essentially rando ...
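The second behavior, values that keep changing while their distribution settles, can be observed numerically through the central limit theorem. This sketch is illustrative only. `clt_max_cdf_gap` and `standard_normal_cdf` are hypothetical helpers. The Uniform(-√3, √3) steps (mean 0, variance 1) are an assumption chosen so that the sums have a continuous distribution. The empirical CDF of $S_n/\sqrt{n}$ is compared with the standard normal CDF $\Phi$ at only five points. Individual sums still vary from trial to trial, but the gap to $\Phi$ should shrink as $n$ grows.

```python
import math
import random


def standard_normal_cdf(x: float) -> float:
    """Phi(x), written via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def clt_max_cdf_gap(n: int, trials: int = 4_000, seed: int = 0) -> float:
    """Largest gap, at a few test points, between the empirical CDF of S_n / sqrt(n) and Phi.

    Steps are Uniform(-sqrt(3), sqrt(3)), which have mean 0 and variance 1.
    """
    rng = random.Random(seed)
    a = math.sqrt(3.0)
    samples = [
        sum(rng.uniform(-a, a) for _ in range(n)) / math.sqrt(n)
        for _ in range(trials)
    ]
    gaps = []
    for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
        empirical = sum(s <= x for s in samples) / trials
        gaps.append(abs(empirical - standard_normal_cdf(x)))
    return max(gaps)


for n in (1, 5, 50):
    print(n, round(clt_max_cdf_gap(n), 3))
```

The remaining gap at large $n$ is mostly Monte Carlo noise from the finite number of trials. The gap will not reach exactly zero.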