
Information Distance

Information distance is the distance between two finite objects (represented as computer files), expressed as the number of bits in the shortest program that transforms one object into the other, or vice versa, on a universal computer. This is an extension of Kolmogorov complexity. The Kolmogorov complexity of a *single* finite object is the information in that object; the information distance between a *pair* of finite objects is the minimum information required to go from one object to the other or vice versa. Information distance was first defined and investigated on the basis of thermodynamic principles, and it subsequently achieved its final form. It is applied in the normalized compression distance and the normalized Google distance. Properties: Formally, the information distance ID(x,y) between x and y is defined by : ID(x,y) = \min \{ |p| : p(x) = y \ \&\ p(y) = x \}, with p a finite binary program for the fixed universal computer that takes the finite binary strings x, y as inputs. It is proven that ...
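
Information distance itself is defined through shortest programs and cannot be computed directly, but the normalized compression distance mentioned above replaces program length with the output size of a real compressor. The following is a minimal sketch, assuming zlib as the compressor and the usual formula NCD(x,y) = (C(xy) - min(C(x), C(y))) / max(C(x), C(y)); the helper names and sample inputs are illustrative choices, not part of the original text.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed data (a computable stand-in for program length)."""
    return len(zlib.compress(data, 9))


def ncd(x: bytes, y: bytes) -> float:
    """Normalized compression distance between x and y under zlib."""
    cx, cy, cxy = compressed_size(x), compressed_size(y), compressed_size(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


# Hypothetical sample inputs: a repetitive text and pseudo-random noise of equal length.
text = b"the quick brown fox jumps over the lazy dog. " * 40
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(len(text)))

print(ncd(text, text))   # small: a second copy adds little new information
print(ncd(text, noise))  # close to 1: the two inputs share almost nothing
```

The values depend on the compressor, so they should be read as rough approximations rather than exact distances.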

Computer

A computer is a machine that can be programmed to carry out sequences of arithmetic or logical operations (computation) automatically. Modern digital electronic computers can perform generic sets of operations known as programs. These programs enable computers to perform a wide range of tasks. A computer system is a nominally complete computer that includes the hardware, operating system (main software), and peripheral equipment needed and used for full operation. This term may also refer to a group of computers that are linked and function together, such as a computer network or computer cluster. A broad range of industrial and consumer products use computers as control systems. Simple special-purpose devices like microwave ovens and remote controls are included, as are factory devices like industrial robots and computer-aided design, as well as general-purpose devi ...

Metric Space

In mathematics, a metric space is a set together with a notion of *distance* between its elements, usually called points. The distance is measured by a function called a metric or distance function. Metric spaces are the most general setting for studying many of the concepts of mathematical analysis and geometry. The most familiar example of a metric space is 3-dimensional Euclidean space with its usual notion of distance. Other well-known examples are a sphere equipped with the angular distance and the hyperbolic plane. A metric may correspond to a metaphorical, rather than physical, notion of distance: for example, the set of 100-character Unicode strings can be equipped with the Hamming distance, which measures the number of characters that need to be changed to get from one string to another. Since they are very general, metric spaces are a tool used in many different branches of mathematics. Many types of mathematical objects have a natural notion of distance a ...
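
The Hamming distance example can be made concrete with a short sketch. The function below counts differing positions between two equal-length strings, and the loop checks the metric axioms (identity, symmetry, triangle inequality) exhaustively on all binary strings of length 3; the choice of that small test set is an assumption made only to keep the check fast.

```python
from itertools import product


def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance needs strings of equal length")
    return sum(ca != cb for ca, cb in zip(a, b))


# Check the metric axioms on every triple of binary strings of length 3.
points = ["".join(bits) for bits in product("01", repeat=3)]
for x, y, z in product(points, repeat=3):
    assert (hamming(x, y) == 0) == (x == y)                # identity of indiscernibles
    assert hamming(x, y) == hamming(y, x)                  # symmetry
    assert hamming(x, z) <= hamming(x, y) + hamming(y, z)  # triangle inequality

print(hamming("karolin", "kathrin"))  # 3
```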

Elena Deza

Elena Ivanovna Deza (Russian: Елена Ивановна Деза, née Panteleeva; born 23 August 1961) is a French and Russian mathematician known for her books on metric spaces and figurate numbers. Education and career: Deza was born on 23 August 1961 in Volgograd, and is a French and Russian citizen. She earned a diploma in mathematics in 1983, a candidate's degree (doctorate) in mathematics and physics in 1993, and a docent's certificate in number theory in 1995, all from Moscow State Pedagogical University. From 1983 to 1988, Deza was an assistant professor of mathematics at Moscow State Forest University. In 1988 she moved to Moscow State Pedagogical University; she became a lecturer there in 1993, a reader in 1994, and a ...

Slepian–Wolf Coding

In information theory and communication, Slepian–Wolf coding, also known as the Slepian–Wolf bound, is a result in distributed source coding discovered by David Slepian and Jack Wolf in 1973. It is a theoretical method for the lossless compression of two correlated sources. Problem setup: Distributed coding is the coding of two (as in this case) or more dependent sources with separate encoders and a joint decoder. Given two statistically dependent, independent and identically distributed finite-alphabet random sequences X^n and Y^n, the Slepian–Wolf theorem gives a theoretical bound for the lossless coding rate for distributed coding of the two sources. Theorem: The bounds for the lossless coding rates are as shown below: : R_X \geq H(X|Y), : R_Y \geq H(Y|X), : R_X + R_Y \geq H(X,Y). If both the encoder and the decoder of the two sources are independent, the lowest rates that can be achieved for lossless compression are H(X) and H(Y) for X and Y respectively, wher ...
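
As a rough numerical illustration of the Slepian–Wolf rate region, the sketch below computes the conditional entropies H(X|Y) and H(Y|X) and the joint entropy H(X,Y) for a small joint distribution, then tests whether a rate pair satisfies the three bounds R_X ≥ H(X|Y), R_Y ≥ H(Y|X), and R_X + R_Y ≥ H(X,Y). The joint distribution and all function names are hypothetical, chosen only for the example.

```python
from math import log2

# Hypothetical joint distribution p(x, y) of two correlated binary sources.
joint = {(0, 0): 0.45, (0, 1): 0.05, (1, 0): 0.05, (1, 1): 0.45}


def entropy(probs):
    """Shannon entropy in bits of a collection of probabilities."""
    return -sum(p * log2(p) for p in probs if p > 0)


def marginal(joint, axis):
    """Marginal distribution of the first (axis=0) or second (axis=1) source."""
    out = {}
    for pair, p in joint.items():
        out[pair[axis]] = out.get(pair[axis], 0.0) + p
    return out


h_xy = entropy(joint.values())
h_x = entropy(marginal(joint, 0).values())
h_y = entropy(marginal(joint, 1).values())
h_x_given_y = h_xy - h_y  # chain rule: H(X|Y) = H(X,Y) - H(Y)
h_y_given_x = h_xy - h_x  # chain rule: H(Y|X) = H(X,Y) - H(X)


def in_slepian_wolf_region(r_x, r_y):
    """True if the rate pair satisfies all three Slepian–Wolf bounds."""
    return r_x >= h_x_given_y and r_y >= h_y_given_x and r_x + r_y >= h_xy


print(h_x_given_y, h_y_given_x, h_xy)
print(in_slepian_wolf_region(1.0, 0.5))  # True: below H(X) + H(Y), yet admissible
print(in_slepian_wolf_region(0.5, 0.5))  # False: the sum rate is below H(X,Y)
```

For this distribution the pair (1.0, 0.5) is admissible even though its sum is below H(X) + H(Y) = 2 bits, which is the saving that joint decoding makes possible.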

Shannon Information Theory

Information theory is the scientific study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley, in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual information, channel capacity, error exponents, and relative entropy. Important sub-fields of information theory include source c ...
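
The coin-versus-die comparison can be checked directly with the Shannon entropy formula H = -Σ p log2 p. The snippet below is a small sketch; the function name `entropy` is an illustrative choice.

```python
from math import log2


def entropy(probs):
    """Shannon entropy in bits of a discrete probability distribution."""
    return -sum(p * log2(p) for p in probs if p > 0)


coin = [1 / 2] * 2  # fair coin: two equally likely outcomes
die = [1 / 6] * 6   # fair die: six equally likely outcomes

print(entropy(coin))  # 1.0 bit
print(entropy(die))   # about 2.585 bits, i.e. log2(6)
```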

Concatenation

In formal language theory and computer programming, string concatenation is the operation of joining character strings end-to-end. For example, the concatenation of "snow" and "ball" is "snowball". In certain formalisations of concatenation theory, also called string theory, string concatenation is a primitive notion. Syntax: In many programming languages, string concatenation is a binary infix operator. The + (plus) operator is often overloaded to denote concatenation for string arguments: "Hello, " + "World" has the value "Hello, World". In other languages there is a separate operator, particularly to specify implicit type conversion to string, as opposed to more complicated behavior for generic plus. Examples include . in Edinburgh IMP, Perl, and PHP, .. in Lua, and & in Ada, AppleScript, and Visual Basic. Other syntax exists, like || in PL/I and Oracle Database SQL. In a few languages, notably C, C++, and Python, there is string literal concatenation, m ...
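
In Python, both forms described above are available: the overloaded + operator and string literal concatenation, where adjacent literals are joined by the parser. A brief sketch, with variable names chosen only for illustration:

```python
# Concatenation with the overloaded + operator.
word = "snow" + "ball"
greeting = "Hello, " + "World"

# String literal concatenation: adjacent literals are joined at parse time.
literal = "Hello, " "World"

# Joining several pieces at once with str.join.
joined = "".join(["snow", "ball"])

print(word)                 # snowball
print(greeting)             # Hello, World
print(literal == greeting)  # True
print(joined == word)       # True
```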

Density

Density (volumetric mass density or specific mass) is a substance's mass per unit of volume. The symbol most often used for density is *ρ* (the lower case Greek letter rho), although the Latin letter *D* can also be used. Mathematically, density is defined as mass divided by volume: : \rho = \frac{m}{V} where *ρ* is the density, *m* is the mass, and *V* is the volume. In some cases (for instance, in the United States oil and gas industry), density is loosely defined as weight per unit volume, although this is scientifically inaccurate; this quantity is more specifically called specific weight. For a pure substance the density has the same numerical value as its mass concentration. Different materials usually have different densities, and density may be relevant to buoyancy, purity and packaging. Osmium and iridium are the densest known elements at standard conditions for temperature and pressure. To simplify comparisons of density across different syst ...
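
The definition ρ = m / V translates into a one-line computation. The sketch below uses made-up sample values (a 2.0 kg object occupying 0.5 m³) purely for illustration.

```python
def density(mass_kg: float, volume_m3: float) -> float:
    """Density in kg/m^3, computed as mass divided by volume."""
    if volume_m3 <= 0:
        raise ValueError("volume must be positive")
    return mass_kg / volume_m3


# Hypothetical sample: 2.0 kg of material occupying 0.5 cubic metres.
print(density(2.0, 0.5))  # 4.0
```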

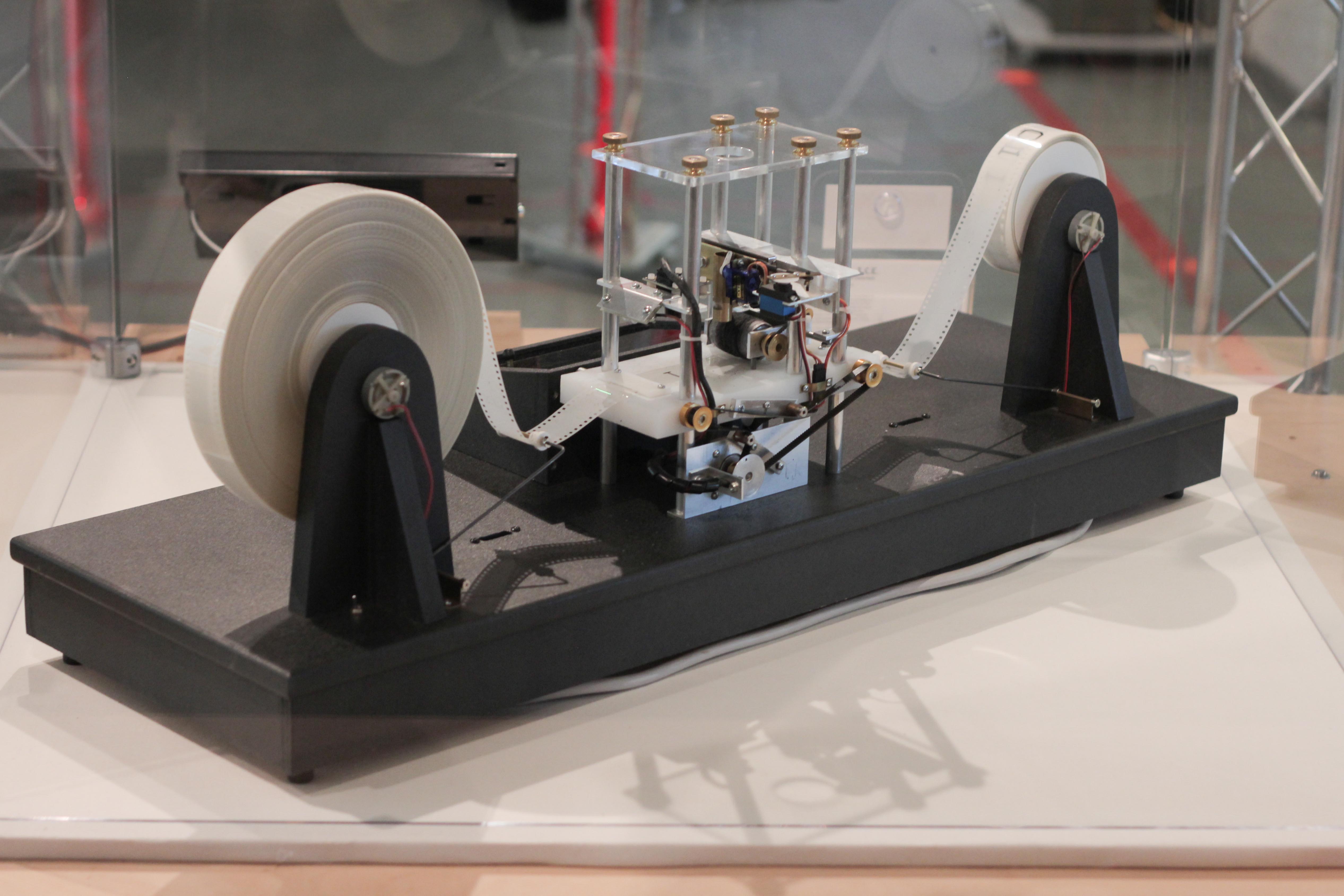

Universal Computer

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write and which direction to move is based on a finite table that specifies what to do for each comb ...
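
The read, write, move cycle described above can be sketched as a small simulator. The transition table below defines a hypothetical example machine that inverts every bit of its input and halts at the first blank cell; the state names, the blank symbol `_`, and the step limit are assumptions made for this illustration.

```python
from collections import defaultdict

BLANK = "_"

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# Hypothetical example machine that inverts every bit of its input.
INVERT = {
    ("scan", "0"): ("1", +1, "scan"),
    ("scan", "1"): ("0", +1, "scan"),
    ("scan", BLANK): (BLANK, 0, "halt"),
}


def run(table, tape_input, state="scan", max_steps=10_000):
    """Run the machine until it reaches the 'halt' state and return the tape contents."""
    # The tape is unbounded in both directions; unvisited cells read as blank.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached before halting")
        write, move, state = table[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    cells = [tape[i] for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(BLANK)


print(run(INVERT, "1011"))  # 0100
```

A dictionary keyed by (state, symbol) mirrors the finite table in the description, and the step limit is only a guard for tables that never halt.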

Distance

Distance is a numerical or occasionally qualitative measurement of how far apart objects or points are. In physics or everyday usage, distance may refer to a physical length or an estimation based on other criteria (e.g. "two counties over"). Since spatial cognition is a rich source of conceptual metaphors in human thought, the term is also frequently used metaphorically to mean a measurement of the amount of difference between two similar objects (such as statistical distance between probability distributions or edit distance between strings of text) or a degree of separation (as exemplified by distance between people in a social network). Most such notions of distance, both physical and metaphorical, are formalized in mathematics using the notion of a metric space. In the social sciences, distance can refer to a qualitative measurement of separation, such as social distance or psychological distance. Distances in physics and geometry: The distance between physi ...

Upper Semicomputable

In computability theory, a semicomputable function is a partial function f : \mathbb{Q} \rightarrow \mathbb{R} that can be approximated either from above or from below by a computable function. More precisely, a partial function f : \mathbb{Q} \rightarrow \mathbb{R} is upper semicomputable, meaning it can be approximated from above, if there exists a computable function \phi(x,k) : \mathbb{Q} \times \mathbb{N} \rightarrow \mathbb{Q}, where x is the desired parameter for f(x) and k is the level of approximation, such that: * \lim_{k \rightarrow \infty} \phi(x,k) = f(x) * \forall k \in \mathbb{N} : \phi(x,k+1) \leq \phi(x,k) Completely analogously, a partial function f : \mathbb{Q} \rightarrow \mathbb{R} is lower semicomputable if and only if -f(x) is upper semicomputable or, equivalently, if there exists a computable function \phi(x,k) such that: * \lim_{k \rightarrow \infty} \phi(x,k) = f(x) * \forall k \in \mathbb{N} : \phi(x,k+1) \geq \phi(x,k) If a partial function is both upper and lower semicomputable, it is called computable. See a ...
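
To make the two conditions concrete, the sketch below gives a computable φ(x,k) that approximates f(x) = √x from above for rational x ≥ 0, using Newton's iteration with exact rational arithmetic. The choice of √x is only an illustration (it is in fact fully computable, so it is upper semicomputable in particular); the starting value max(x, 1) is an assumption that guarantees every iterate stays at or above √x.

```python
from fractions import Fraction


def phi(x, k):
    """k-th rational approximation from above to sqrt(x), for rational x >= 0."""
    x = Fraction(x)
    if x < 0:
        raise ValueError("x must be non-negative")
    y = max(x, Fraction(1))  # starting value with y >= sqrt(x)
    for _ in range(k):
        # Newton step: stays >= sqrt(x) and never increases.
        y = (y + x / y) / 2
    return y


# The sequence phi(2, 0), phi(2, 1), ... is non-increasing and converges to sqrt(2).
for k in range(5):
    print(k, phi(2, k), float(phi(2, k)))
```

Each value is an exact fraction, so the monotonicity condition φ(x,k+1) ≤ φ(x,k) can be verified without rounding error.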