
Inequalities In Information Theory

Inequalities play a central role in information theory, and they arise in several different contexts.

Entropic inequalities

Consider a tuple X_1, X_2, \dots, X_n of n finitely (or at most countably) supported random variables on the same probability space. The collection has 2^n subsets, and a (joint) entropy can be computed for each of them. For example, when n = 2, we may consider the entropies H(X_1), H(X_2), and H(X_1, X_2). They satisfy the following inequalities (which together characterize the range of the marginal and joint entropies of two random variables):

* H(X_1) \ge 0
* H(X_2) \ge 0
* H(X_1) \le H(X_1, X_2)
* H(X_2) \le H(X_1, X_2)
* H(X_1, X_2) \le H(X_1) + H(X_2)

In fact, these can all be expressed as special cases of a single inequality involving the conditional mutual information, namely

I(A;B|C) \ge 0,

where A, B, and C each denote the joint distribution of some arbitrary (possibly empty) subset of our collection of r ...
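The two-variable case can be checked numerically. The following is a minimal Python sketch, assuming a small hypothetical joint probability table for two binary variables; it computes H(X_1), H(X_2) and H(X_1, X_2) in bits (any logarithm base would do) and tests the five inequalities with a small floating-point tolerance. The function names are illustrative only.

```python
from math import log2

def entropy(probs):
    """Shannon entropy, in bits, of an iterable of probabilities (zero entries are skipped)."""
    return -sum(p * log2(p) for p in probs if p > 0)

def two_variable_entropies(joint):
    """Given joint[(x1, x2)] = P(X1 = x1, X2 = x2), return (H(X1), H(X2), H(X1, X2))."""
    p1, p2 = {}, {}
    for (x1, x2), p in joint.items():
        p1[x1] = p1.get(x1, 0.0) + p   # marginal distribution of X1
        p2[x2] = p2.get(x2, 0.0) + p   # marginal distribution of X2
    return entropy(p1.values()), entropy(p2.values()), entropy(joint.values())

def check_inequalities(h1, h2, h12, tol=1e-12):
    """True if the five two-variable entropic inequalities hold (up to a tolerance)."""
    return (
        h1 >= -tol
        and h2 >= -tol
        and h1 <= h12 + tol
        and h2 <= h12 + tol
        and h12 <= h1 + h2 + tol
    )

# Hypothetical joint distribution of two correlated bits.
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
h1, h2, h12 = two_variable_entropies(joint)
print(h1, h2, h12)
print(check_inequalities(h1, h2, h12))
```

For independent variables the last inequality holds with equality; for the correlated table above, the gap H(X_1) + H(X_2) - H(X_1, X_2) is the mutual information I(X_1; X_2), i.e. the case of I(A;B|C) \ge 0 with C empty.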

Inequality (mathematics)

In mathematics, an inequality is a relation which makes a non-equal comparison between two numbers or other mathematical expressions. It is used most often to compare two numbers on the number line by their size. There are several different notations used to represent different kinds of inequalities:

* The notation a < b means that a is less than b.
* The notation a > b means that a is greater than b.

In either case, a is not equal to b. These relations are known as strict inequalities, meaning that a is strictly less than or strictly greater than b. Equality is excluded.

In contrast to strict inequalities, there are two types of inequality relations that are not strict:

* The notation a ≤ b or a ⩽ b means that a is less than or equal to b (or, equivalently, at most b, or not greater than b).
* The notation a ≥ b or a ⩾ b means that a is greater than or equal to b (or, equivalently, at least b, or not less than b).

The r ...

Rate Function

In mathematics, specifically in large deviations theory, a rate function is a function used to quantify the probabilities of rare events. It is required to have several properties which assist in the formulation of the large deviation principle. In some sense, the large deviation principle is an analogue of weak convergence of probability measures, but one which takes account of how well the rare events behave. A rate function is also called a Cramér function, after the Swedish probabilist Harald Cramér.

Definitions

Rate function

An extended real-valued function I : X \to [0, +\infty] defined on a Hausdorff topological space X is said to be a rate function if it is not identically +\infty and is lower semi-continuous, i.e. all the sub-level sets

\{ x \in X : I(x) \le c \}, \quad c \ge 0,

are closed in X. If, furthermore, they are compact, then I is said to be a good rate function. A family of probability measures (\mu_\delta)_\delta > ...
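A concrete rate function comes from Cramér's theorem for coin flips (an illustration chosen here, not taken from the text above): for the mean of n i.i.d. Bernoulli(p) variables and p < x < 1, -(1/n) ln P(mean \ge x) tends to I(x) = x ln(x/p) + (1 - x) ln((1 - x)/(1 - p)). The minimal Python sketch below, with hypothetical helper names, computes the exact binomial tail in log space and compares it with I(x).

```python
import math

def bernoulli_rate(x, p):
    """Cramér rate function for the sample mean of i.i.d. Bernoulli(p) variables, 0 < x < 1."""
    return x * math.log(x / p) + (1 - x) * math.log((1 - x) / (1 - p))

def log_upper_tail(n, p, k0):
    """ln P(S >= k0) for S ~ Binomial(n, p), summed in log space to avoid underflow."""
    logs = [
        math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
        + k * math.log(p) + (n - k) * math.log(1 - p)
        for k in range(k0, n + 1)
    ]
    m = max(logs)  # log-sum-exp trick
    return m + math.log(sum(math.exp(v - m) for v in logs))

def empirical_rate(n, p, x):
    """-(1/n) ln P(sample mean >= x); assumes n * x is (numerically) an integer."""
    return -log_upper_tail(n, p, round(n * x)) / n

p, x = 0.5, 0.7
for n in (10, 100, 1000, 10000):
    print(n, empirical_rate(n, p, x), bernoulli_rate(x, p))
```

The printed values decrease toward I(0.7) ≈ 0.082 as n grows; the remaining gap shrinks roughly like (ln n)/n, reflecting the sub-exponential prefactor that the large deviation principle deliberately ignores.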

Uncertainty Principle

In quantum mechanics, the uncertainty principle (also known as Heisenberg's uncertainty principle) is any of a variety of mathematical inequalities asserting a fundamental limit to the accuracy with which the values for certain pairs of physical quantities of a particle, such as position, x, and momentum, p, can be predicted from initial conditions. Such variable pairs are known as complementary variables or canonically conjugate variables; and, depending on interpretation, the uncertainty principle limits to what extent such conjugate properties maintain their approximate meaning, as the mathematical framework of quantum physics does not support the notion of simultaneously well-defined conjugate properties expressed by a single value. The uncertainty principle implies that it is in general not possible to predict the value of a quantity with arbitrary certainty, even if all initial conditions are specified. Introduced first in 1927 by the German physicist Werner H ...
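The best-known of these inequalities is Kennard's bound \sigma_x \sigma_p \ge \hbar/2. A minimal Python sketch, in units with \hbar = 1 and assuming real-valued wavefunctions (so the mean momentum is zero and \langle p^2 \rangle = \int \psi'^2 / \int \psi^2), computes both spreads by numerical integration for two hypothetical wave packets: a Gaussian, which attains the bound, and a sech-shaped packet, which exceeds it (\pi/6 ≈ 0.524).

```python
import math

def spreads(psi, dpsi, a=-20.0, b=20.0, n=20_000):
    """Return (sigma_x, sigma_p) for a real-valued wavefunction psi, in units where hbar = 1.

    Integrals use the midpoint rule on [a, b]. For real psi the mean momentum is 0, so
    sigma_p^2 = <p^2> = (integral of psi'(x)^2) / (integral of psi(x)^2).
    dpsi must be the derivative of psi.
    """
    h = (b - a) / n
    xs = [a + (i + 0.5) * h for i in range(n)]
    dens = [psi(x) ** 2 for x in xs]                 # unnormalized |psi|^2
    norm = sum(dens) * h
    mean_x = sum(x * d for x, d in zip(xs, dens)) * h / norm
    var_x = sum((x - mean_x) ** 2 * d for x, d in zip(xs, dens)) * h / norm
    mean_p2 = sum(dpsi(x) ** 2 for x in xs) * h / norm
    return math.sqrt(var_x), math.sqrt(mean_p2)

def gaussian(x):
    return math.exp(-x * x / 4)        # |psi|^2 is proportional to a normal density with sigma_x = 1

def d_gaussian(x):
    return -x / 2 * math.exp(-x * x / 4)

def sech(x):
    return 1 / math.cosh(x)

def d_sech(x):
    return -math.tanh(x) / math.cosh(x)

for name, f, df in (("Gaussian", gaussian, d_gaussian), ("sech", sech, d_sech)):
    sx, sp = spreads(f, df)
    print(name, sx * sp)               # never below 1/2 (= hbar / 2)
```

Any other real, square-integrable shape with a known derivative can be passed to spreads; the product should never fall below 0.5.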

Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}

The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma is its standard deviation. The variance of the distribution is \sigma^2. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution converges to a normal d ...
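The density f(x) = exp(-((x - \mu)/\sigma)^2 / 2) / (\sigma \sqrt{2\pi}) can be written out directly. This minimal Python sketch, with arbitrary example parameters, cross-checks it against the standard library's statistics.NormalDist (Python 3.8+), confirms numerically that it integrates to about 1, and that its peak sits at \mu.

```python
import math
from statistics import NormalDist

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of the normal distribution N(mu, sigma^2) at x, from the standard formula."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

mu, sigma = 2.0, 1.5  # arbitrary example parameters

# Cross-check against the standard library implementation.
for x in (-1.0, 2.0, 3.5):
    print(x, normal_pdf(x, mu, sigma), NormalDist(mu, sigma).pdf(x))

# Midpoint-rule integral over mu +/- 10 sigma: the density should integrate to about 1.
n = 10_000
a, b = mu - 10 * sigma, mu + 10 * sigma
h = (b - a) / n
total = sum(normal_pdf(a + (i + 0.5) * h, mu, sigma) for i in range(n)) * h
print(total)

# The density is maximal at x = mu (the mode, which equals the mean and the median).
print(normal_pdf(mu, mu, sigma) > normal_pdf(mu + 0.1, mu, sigma))
```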

Annals Of Mathematics

The Annals of Mathematics is a mathematical journal published every two months by Princeton University and the Institute for Advanced Study.

History

The journal was established as The Analyst in 1874, with Joel E. Hendricks as the founding editor-in-chief. It was "intended to afford a medium for the presentation and analysis of any and all questions of interest or importance in pure and applied Mathematics, embracing especially all new and interesting discoveries in theoretical and practical astronomy, mechanical philosophy, and engineering". It was published in Des Moines, Iowa, and was the earliest American mathematics journal to be published continuously for more than a year or two. This incarnation of the journal ceased publication after its tenth year, in 1883, citing Hendricks' declining health as the reason, but Hendricks made arrangements to have it taken over by new management, and it was continued from March 1884 as the Annals of Mathematics. The ...

Differential Entropy

Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend the idea of (Shannon) entropy, a measure of average surprisal of a random variable, to continuous probability distributions. However, Shannon did not derive the formula for differential entropy; he assumed it was the correct continuous analogue of discrete entropy, which it is not. The actual continuous version of discrete entropy is the limiting density of discrete points (LDDP). Differential entropy, as described here, is commonly encountered in the literature, but it is a limiting case of the LDDP, and one that loses its fundamental association with discrete entropy.

In terms of measure theory, the differential entropy of a probability measure is the negative relative entropy from that measure to the Lebesgue measure, where the latter is treated as if it were a probability measure, despite being unnormalized.

Definition

Let X be a rando ...
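For a random variable with density f, the usual definition is h(X) = -\int f(x) \ln f(x) \, dx, measured in nats. The minimal Python sketch below approximates this integral with the midpoint rule for two example densities chosen here: a normal density, checked against the closed form \frac{1}{2} \ln(2\pi e \sigma^2), and a uniform density on [0, 1/2], whose differential entropy is -\ln 2. The negative value illustrates how differential entropy departs from discrete entropy, which is never negative.

```python
import math

def differential_entropy(pdf, a, b, n=100_000):
    """Approximate h(X) = -integral of f(x) ln f(x) dx over [a, b], in nats, by the midpoint rule."""
    step = (b - a) / n
    total = 0.0
    for i in range(n):
        fx = pdf(a + (i + 0.5) * step)
        if fx > 0:                       # convention: 0 ln 0 = 0
            total -= fx * math.log(fx)
    return total * step

SIGMA = 2.0  # example standard deviation

def gaussian_pdf(x):
    return math.exp(-x * x / (2 * SIGMA**2)) / (SIGMA * math.sqrt(2 * math.pi))

def uniform_half_pdf(x):
    """Uniform density on [0, 1/2]."""
    return 2.0 if 0.0 <= x <= 0.5 else 0.0

h_gauss = differential_entropy(gaussian_pdf, -12 * SIGMA, 12 * SIGMA)
print(h_gauss, 0.5 * math.log(2 * math.pi * math.e * SIGMA**2))  # numerical vs closed form
print(differential_entropy(uniform_half_pdf, 0.0, 0.5))          # about -ln 2, i.e. negative
```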

Fourier Transform

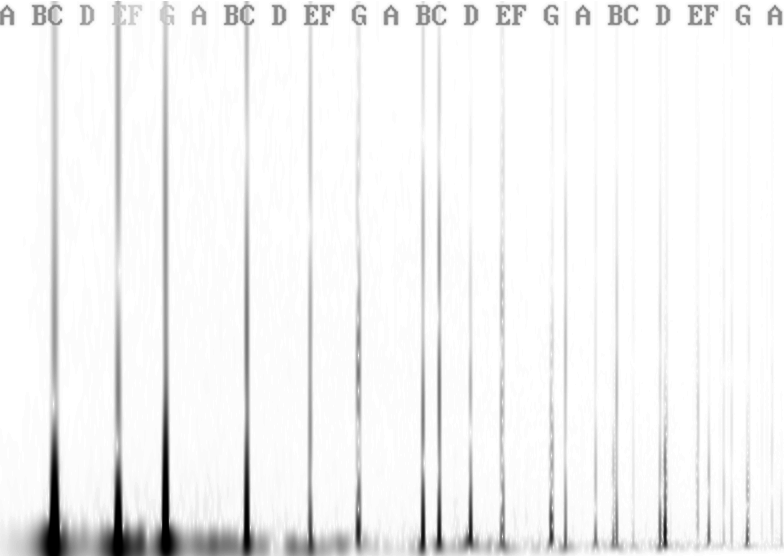

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly, functions of time or space are transformed, yielding a function of temporal frequency or spatial frequency, respectively. That process is also called analysis. An example application would be decomposing the waveform of a musical chord into terms of the intensity of its constituent pitches. The term Fourier transform refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid ...
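A computer works with finitely many samples, so this sketch uses the discrete Fourier transform (DFT), the sampled, finite analogue of the Fourier transform, rather than the continuous transform itself. It decomposes a hypothetical two-note "chord" (sinusoids at 5 and 12 cycles per window, amplitudes 1.0 and 0.5) and reads the amplitude of each constituent from the magnitude of the complex coefficients, in the spirit of the chord example above. The naive O(N^2) loop is for clarity only; real code would use an FFT.

```python
import cmath
import math

def dft(samples):
    """Naive O(N^2) discrete Fourier transform: X[k] = sum_n x[n] * exp(-2*pi*i*k*n/N)."""
    N = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * n / N) for n, x in enumerate(samples))
        for k in range(N)
    ]

# Hypothetical "chord": sinusoids at 5 and 12 cycles per window, amplitudes 1.0 and 0.5.
N = 64
signal = [
    1.0 * math.sin(2 * math.pi * 5 * n / N) + 0.5 * math.sin(2 * math.pi * 12 * n / N)
    for n in range(N)
]
spectrum = dft(signal)

# For a real signal, the amplitude of the sinusoid in bin k (0 < k < N/2) is 2|X[k]|/N.
amplitudes = [2 * abs(c) / N for c in spectrum[: N // 2]]
peaks = [k for k, amp in enumerate(amplitudes) if amp > 1e-6]
print(peaks)
print([round(amplitudes[k], 6) for k in peaks])
```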

American Journal Of Mathematics

The American Journal of Mathematics is a bimonthly mathematics journal published by the Johns Hopkins University Press. History The American Journal of Mathematics is the oldest continuously published mathematical journal in the United States, established in 1878 at the Johns Hopkins University by James Joseph Sylvester, an English-born mathematician who also served as the journal's editor-in-chief from its inception through early 1884. Initially W. E. Story was associate editor in charge; he was replaced by Thomas Craig in 1880. For volume 7 Simon Newcomb became chief editor with Craig managing until 1894. Then with volume 16 it was "Edited by Thomas Craig with the Co-operation of Simon Newcomb" until 1898. Other notable mathematicians who have served as editors or editorial associates of the journal include Frank Morley, Oscar Zariski, Lars Ahlfors, Hermann Weyl, Wei-Liang Chow, S. S. Chern, André Weil, Harish-Chandra, Jean Dieudonné, Henri Cartan, Stephen Smale, ...

Nat (unit)

The natural unit of information (symbol: nat), sometimes also nit or nepit, is a unit of information based on natural logarithms and powers of e, rather than the powers of 2 and base-2 logarithms which define the shannon. This unit is also known by its unit symbol, the nat. One nat is the information content of an event when the probability of that event occurring is 1/e.

One nat is equal to 1/\ln 2 shannons ≈ 1.44 Sh or, equivalently, 1/\ln 10 hartleys ≈ 0.434 Hart.

History

Boulton and Wallace used the term nit in conjunction with minimum message length, which was subsequently changed by the minimum description length community to nat to avoid confusion with the nit used as a unit of luminance. Alan Turing used the natural ban.

Entropy

Shannon entropy (information entropy), being the expected value of the information of an event, is a quantity of the same type and with the same units as information. The International System of Uni ...
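The conversions follow from the change-of-base rule for logarithms: information measured with \ln is divided by \ln 2 to get shannons and by \ln 10 to get hartleys. A minimal Python sketch, with illustrative constant and function names, checks the 1/e example and the conversion factors, and converts the entropy of a fair coin from nats to shannons.

```python
import math

def information_nats(p):
    """Self-information -ln(p), in nats, of an event with probability p."""
    return -math.log(p)

NAT_IN_SHANNONS = 1 / math.log(2)    # 1 nat = 1/ln 2 Sh
NAT_IN_HARTLEYS = 1 / math.log(10)   # 1 nat = 1/ln 10 Hart

print(information_nats(1 / math.e))                            # 1 nat, up to rounding
print(round(NAT_IN_SHANNONS, 3), round(NAT_IN_HARTLEYS, 3))

# A fair coin has entropy ln 2 nats, which is 1 Sh.
coin_nats = -(0.5 * math.log(0.5) + 0.5 * math.log(0.5))
print(coin_nats * NAT_IN_SHANNONS)                             # 1 Sh, up to rounding
```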

Total Variation Distance

In probability theory, the total variation distance is a distance measure for probability distributions. It is an example of a statistical distance metric, and is sometimes called the statistical distance, statistical difference or variational distance.

Definition

Consider a measurable space (\Omega, \mathcal{F}) and probability measures P and Q defined on (\Omega, \mathcal{F}). The total variation distance between P and Q is defined as

\delta(P,Q) = \sup_{A \in \mathcal{F}} \left| P(A) - Q(A) \right|.

Informally, this is the largest possible difference between the probabilities that the two probability distributions can assign to the same event.

Properties

Relation to other distances

The total variation distance is related to the Kullback–Leibler divergence by Pinsker's inequality:

\delta(P,Q) \le \sqrt{\tfrac{1}{2} D_{\mathrm{KL}}(P \parallel Q)}.

One also has the following inequality, due to Bretagnolle and Huber (see also Tsybakov), which has the advantage of providing a non-vacuous bound even when D_{\mathrm{KL}}(P \parallel Q) > 2:

\delta(P,Q) \le ...
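On a finite set the supremum over events is attained by the event where P exceeds Q, which gives the familiar formula \delta(P,Q) = \frac{1}{2} \sum_i |p_i - q_i|. The minimal Python sketch below, using two example distributions chosen here, compares that formula with a brute-force search over all events and checks Pinsker's inequality \delta(P,Q) \le \sqrt{D_{\mathrm{KL}}(P \parallel Q)/2}, with the divergence in nats.

```python
from itertools import combinations
import math

def tv_distance(p, q):
    """Total variation distance on a common finite set: (1/2) * sum_i |p_i - q_i|."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))

def tv_by_events(p, q):
    """Brute-force sup over all events A of |P(A) - Q(A)| (exponential in the support size)."""
    idx = range(len(p))
    best = 0.0
    for r in range(len(p) + 1):
        for A in combinations(idx, r):
            best = max(best, abs(sum(p[i] for i in A) - sum(q[i] for i in A)))
    return best

def kl_divergence(p, q):
    """D_KL(P || Q) in nats; assumes q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.3, 0.2]  # example distributions
q = [0.2, 0.3, 0.5]
d = tv_distance(p, q)
print(d, tv_by_events(p, q))                       # both 0.3, up to rounding
print(d <= math.sqrt(0.5 * kl_divergence(p, q)))   # Pinsker's inequality holds
```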

Kullback–Leibler Divergence

In mathematical statistics, the Kullback–Leibler divergence (also called relative entropy and I-divergence), denoted D_\text{KL}(P \parallel Q), is a type of statistical distance: a measure of how one probability distribution P is different from a second, reference probability distribution Q. A simple interpretation of the KL divergence of P from Q is the expected excess surprise from using Q as a model when the actual distribution is P. Although it is a distance, it is not a metric (the most familiar type of distance): it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for certain classes of distributions (notably an exponential family), it satisfies a generalized Pythagorean theorem (which applies to squared distances). In the simple case, a relative entropy of ...
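For distributions on a common finite set, D_\text{KL}(P \parallel Q) = \sum_i p_i \ln(p_i / q_i). This minimal Python sketch, with two example distributions chosen here, shows the asymmetry mentioned above and checks the "expected excess surprise" reading: the average surprise -\ln q_i under P, minus the average surprise -\ln p_i under P.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) = sum_i p_i ln(p_i / q_i), in nats, on a common finite set.

    Terms with p_i = 0 contribute 0; if q_i = 0 while p_i > 0 the divergence is infinite.
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue
        if qi == 0:
            return math.inf
        total += pi * math.log(pi / qi)
    return total

p = [0.9, 0.1]  # "true" distribution (example)
q = [0.5, 0.5]  # model distribution (example)
print(kl_divergence(p, q), kl_divergence(q, p))   # the two values differ: not symmetric
print(kl_divergence(p, p))                        # 0.0

# Expected excess surprise: cross-entropy H(P, Q) minus entropy H(P).
excess = sum(pi * -math.log(qi) for pi, qi in zip(p, q)) - sum(pi * -math.log(pi) for pi in p)
print(excess)
```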

Cramér–Rao Bound

In estimation theory and statistics, the Cramér–Rao bound (CRB) expresses a lower bound on the variance of unbiased estimators of a deterministic (fixed, though unknown) parameter: the variance of any such estimator is at least as high as the inverse of the Fisher information. Equivalently, it expresses an upper bound on the precision (the inverse of variance) of unbiased estimators: the precision of any such estimator is at most the Fisher information. The result is named in honor of Harald Cramér and C. R. Rao, but has also been derived independently by Maurice Fréchet, Georges Darmois, and by Alexander Aitken and Harold Silverstone. An unbiased estimator that achieves this lower bound is said to be (fully) efficient. Such a solution achieves the lowest possible mean squared error among all unbiased methods, and is therefore the minimum variance unbiased (MVU) estimator. However, in some cases, no unbiased technique exists which achieves the bound. This may o ...
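As an illustration chosen here, consider estimating p from n i.i.d. Bernoulli(p) observations. One observation carries Fisher information 1/(p(1 - p)), and n independent observations carry n times that, so the bound is p(1 - p)/n. The sample mean is unbiased, and the minimal Python sketch below computes its variance exactly from the binomial distribution to show that it meets the bound, i.e. that it is efficient in this model.

```python
import math

def fisher_information_bernoulli(p):
    """Fisher information of one Bernoulli(p) observation: 1 / (p (1 - p))."""
    return 1.0 / (p * (1.0 - p))

def variance_of_sample_mean(n, p):
    """Exact variance of the mean of n i.i.d. Bernoulli(p) draws, summed over the binomial pmf."""
    pmf = [math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    mean = sum(k / n * w for k, w in enumerate(pmf))
    return sum((k / n - mean) ** 2 * w for k, w in enumerate(pmf))

n, p = 50, 0.3  # example sample size and parameter
crb = 1.0 / (n * fisher_information_bernoulli(p))   # Cramér–Rao lower bound
var = variance_of_sample_mean(n, p)
print(var, crb)   # equal up to rounding: p(1 - p)/n
```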
