
Head Grammar

Head grammar (HG) is a grammar formalism introduced by Carl Pollard (1984) as an extension of the context-free class of grammars (Pollard, C. 1984. *Generalized Phrase Structure Grammars, Head Grammars, and Natural Language*. Ph.D. thesis, Stanford University, CA). Head grammar is therefore a type of phrase structure grammar, as opposed to a dependency grammar. The class of head grammars is a subset of the linear context-free rewriting systems. One typical way of defining head grammars is to replace the terminal strings of CFGs with indexed terminal strings, where the index denotes the "head" word of the string. Thus, for example, a CF rule such as $A \to abc$ might instead be $A \to (abc, 0)$, where the 0th terminal, the *a*, is the head of the resulting terminal string. For convenience of notation, such a rule could be written as just the terminal string, with the head terminal denoted by some sort of mark, as in $A \to \widehat{a}bc$. Two fundamental operations are then a ...
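The indexed-string representation can be sketched in Python. The type `Headed` and the function names below are illustrative assumptions, not notation from Pollard's thesis. The operations shown (concatenation that keeps one operand's head, and wrapping one string around another at the head) follow one common presentation of head grammars; exact definitions vary between sources.

```python
from typing import NamedTuple, Tuple


class Headed(NamedTuple):
    """A terminal string plus the index of its head, e.g. (abc, 0)."""
    symbols: Tuple[str, ...]
    head: int


def headed(text: str, head: int) -> Headed:
    # Reject head indices that do not point at a terminal.
    if not 0 <= head < len(text):
        raise ValueError("head index out of range")
    return Headed(tuple(text), head)


def concat_keep_left(x: Headed, y: Headed) -> Headed:
    # Concatenate x and y; the head of x becomes the head of the result.
    return Headed(x.symbols + y.symbols, x.head)


def concat_keep_right(x: Headed, y: Headed) -> Headed:
    # Concatenate x and y; the head of y becomes the head of the result.
    return Headed(x.symbols + y.symbols, len(x.symbols) + y.head)


def wrap_after_head(x: Headed, y: Headed) -> Headed:
    # Split x just after its head and insert y there (assumed variant of
    # wrapping); the head of x stays the head of the result.
    cut = x.head + 1
    return Headed(x.symbols[:cut] + y.symbols + x.symbols[cut:], x.head)


def mark(h: Headed) -> str:
    # Render the string with the head terminal in brackets.
    return "".join(f"[{s}]" if i == h.head else s
                   for i, s in enumerate(h.symbols))


x = headed("abc", 0)
y = headed("de", 1)
print(mark(x))                      # head of abc is its 0th terminal
print(mark(concat_keep_right(x, y)))
print(mark(wrap_after_head(x, y)))
```

Storing the head as an index rather than as a separate field keeps the plain string recoverable with `"".join(h.symbols)`, which matches the `(abc, 0)` notation used in the text.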

Carl Pollard

Carl Jesse Pollard (born June 28, 1947) is a Professor of Linguistics at the Ohio State University. He is the inventor of head grammar and higher-order grammar, as well as co-inventor of head-driven phrase structure grammar (HPSG). He is currently also working on convergent grammar (CVG). He has written numerous books and articles on formal syntax and semantics. He received his Ph.D. from Stanford.

Context-free Grammar

In formal language theory, a context-free grammar (CFG) is a formal grammar whose production rules can be applied to a nonterminal symbol regardless of its context. In particular, in a context-free grammar, each production rule is of the form $A \to \alpha$, with $A$ a *single* nonterminal symbol and $\alpha$ a string of terminals and/or nonterminals ($\alpha$ can be empty). Regardless of which symbols surround it, the single nonterminal $A$ on the left-hand side can always be replaced by $\alpha$ on the right-hand side. This distinguishes it from a context-sensitive grammar, which can have production rules of the form $\alpha A \beta \to \alpha \gamma \beta$, with $A$ a nonterminal symbol and $\alpha$, $\beta$, and $\gamma$ strings of terminal and/or nonterminal symbols. A formal grammar is essentially a set of production rules that describe all possible strings in a given formal language. Production rules are simple replacements. For example, the first rule in the picture, ...
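A minimal Python sketch of context-free rewriting follows. The grammar (a single nonterminal `S` with rules `S → a S b` and `S → ε`) and the helper name `rewrite_leftmost` are assumptions chosen for illustration. The point is that the rule is selected by the nonterminal alone, never by the symbols around it.

```python
# Productions: each nonterminal maps to a list of right-hand sides.
# An empty tuple stands for the empty string.
RULES = {"S": [("a", "S", "b"), ()]}


def rewrite_leftmost(form, rule_index):
    """Replace the leftmost nonterminal with the chosen right-hand side.

    The surrounding symbols are copied unchanged: the rewrite never
    inspects them, which is what makes the grammar context-free.
    """
    for i, symbol in enumerate(form):
        if symbol in RULES:
            return form[:i] + RULES[symbol][rule_index] + form[i + 1:]
    raise ValueError("no nonterminal left to rewrite")


form = ("S",)
for choice in (0, 0, 1):  # apply S -> a S b twice, then S -> empty
    form = rewrite_leftmost(form, choice)
    print(" ".join(form))

derived = "".join(form)
```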

Phrase Structure Grammar

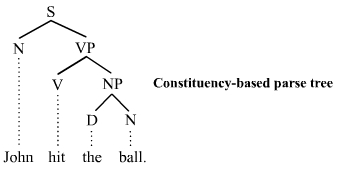

The term phrase structure grammar was originally introduced by Noam Chomsky as the term for grammar studied previously by Emil Post and Axel Thue (Post canonical systems). Some authors, however, reserve the term for more restricted grammars in the Chomsky hierarchy: context-sensitive grammars or context-free grammars. In a broader sense, phrase structure grammars are also known as *constituency grammars*. The defining character of phrase structure grammars is thus their adherence to the constituency relation, as opposed to the dependency relation of dependency grammars.

History: In 1956, Chomsky wrote, "A phrase-structure grammar is defined by a finite vocabulary (alphabet) Vp, and a finite set Σ of initial strings in Vp, and a finite set F of rules of the form: X → Y, where X and Y are strings in Vp."

Constituency relation: In linguistics, phrase structure grammars are all those grammars that are based on the constituency relation, as opposed to the dependency relation ...

Dependency Grammar

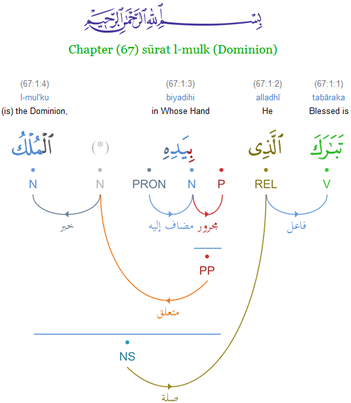

Dependency grammar (DG) is a class of modern grammatical theories that are all based on the dependency relation (as opposed to the *constituency relation* of phrase structure) and that can be traced back primarily to the work of Lucien Tesnière. Dependency is the notion that linguistic units, e.g. words, are connected to each other by directed links. The (finite) verb is taken to be the structural center of clause structure. All other syntactic units (words) are either directly or indirectly connected to the verb in terms of the directed links, which are called *dependencies*. Dependency grammar differs from phrase structure grammar in that, while it can identify phrases, it tends to overlook phrasal nodes. A dependency structure is determined by the relation between a word (a head) and its dependents. Dependency structures are flatter than phrase structures in part because they lack a finite verb phrase constit ...
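A dependency structure can be sketched as a mapping from each word to its head. The sentence "the dog chased a cat" and the analysis below are an assumed toy example, and `path_to_root` is a hypothetical helper; the sketch only shows that every word reaches the finite verb by following directed links.

```python
# Each word points to its head; the finite verb has no head (None).
HEAD_OF = {
    "the": "dog",
    "dog": "chased",
    "a": "cat",
    "cat": "chased",
    "chased": None,
}


def path_to_root(word):
    """Follow head links from a word up to the structural center."""
    path = [word]
    while HEAD_OF[path[-1]] is not None:
        path.append(HEAD_OF[path[-1]])
    return path


for word in HEAD_OF:
    print(" -> ".join(path_to_root(word)))
```

Note that the mapping contains only words: there is no separate node for a phrase such as "the dog", which is the sense in which dependency structures are flatter than phrase structures.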

Generalized Context-free Grammar

Generalized context-free grammar (GCFG) is a grammar formalism that expands on context-free grammars by adding potentially non-context-free composition functions to rewrite rules. Head grammar (and its weak equivalents) is an instance of such a GCFG which is known to be especially adept at handling a wide variety of non-CF properties of natural language.

Description: A GCFG consists of two components: a set of composition functions that combine string tuples, and a set of rewrite rules. The composition functions all have the form $f(\langle x_1, \ldots, x_m \rangle, \langle y_1, \ldots, y_n \rangle, \ldots) = \gamma$, where $\gamma$ is either a single string tuple, or some use of a (potentially different) composition function which reduces to a string tuple. Rewrite rules look like $X \to f(Y, Z, \ldots)$, where $Y$, $Z$, ... are string tuples or non-terminal symbols. The rewrite semantics of GCFGs is fairly straightforward. An occurrence of a non-terminal symbol is rewritten using rewrite rules as in ...
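The two components can be sketched in Python with a small grammar for the copy language $\{ww\}$, which is not context-free. The grammar, the function names `f` and `g`, and the driver `derive` are assumptions made for this illustration: the rules are taken to be $S \to f(X)$, $X \to g_c(X)$ for each terminal $c$, and $X \to \langle \epsilon, \epsilon \rangle$.

```python
def f(pair):
    # Composition function f(<x, y>) = <xy>: join the two components.
    x, y = pair
    return (x + y,)


def g(symbol):
    # Family of composition functions g_c(<x, y>) = <xc, yc>.
    def compose(pair):
        x, y = pair
        return (x + symbol, y + symbol)
    return compose


def derive(word):
    """Evaluate the derivation that applies g_c once per character of word.

    Starts from X -> <'', ''>, applies X -> g_c(X) for each c, then
    finishes with S -> f(X).
    """
    pair = ("", "")
    for c in word:
        pair = g(c)(pair)
    return f(pair)[0]


print(derive("ab"))
```

The string tuple lets both halves grow in step, which a single context-free nonterminal cannot do.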

Indexed Grammar

Indexed grammars are a generalization of context-free grammars in that nonterminals are equipped with lists of *flags*, or *index symbols*. The language produced by an indexed grammar is called an indexed language.

Modern definition by Hopcroft and Ullman: In contemporary publications following Hopcroft and Ullman (1979), an indexed grammar is formally defined as a 5-tuple $G = \langle N, T, F, P, S \rangle$ where

* $N$ is a set of variables or nonterminal symbols,
* $T$ is a set ("alphabet") of terminal symbols,
* $F$ is a set of so-called *index symbols*, or *indices*,
* $S \in N$ is the *start symbol*, and
* $P$ is a finite set of *productions*.

In productions as well as in derivations of indexed grammars, a string ("stack") $\sigma \in F^*$ of index symbols is attached to every nonterminal symbol $A \in N$, denoted by $A[\sigma]$, where "[" and "]" are meta symbols to indicate the stack. Terminal symbols may not be followed by inde ...
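The 5-tuple and the stack notation can be sketched as a small Python data structure. The class `IndexedGrammar`, its field names, and the helper `show` are hypothetical. Productions are stored as an opaque tuple because their exact form is not given in the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexedGrammar:
    nonterminals: frozenset  # N
    terminals: frozenset     # T
    indices: frozenset       # F
    productions: tuple       # P (left opaque in this sketch)
    start: str               # S

    def __post_init__(self):
        # The start symbol must be a nonterminal.
        if self.start not in self.nonterminals:
            raise ValueError("start symbol must be in N")


def show(grammar, symbol, stack=()):
    """Render a symbol with its index stack in bracket notation."""
    if symbol in grammar.terminals:
        # Terminals carry no stack.
        if stack:
            raise ValueError("terminal symbols cannot carry a stack")
        return symbol
    if symbol not in grammar.nonterminals:
        raise ValueError(f"unknown symbol: {symbol}")
    # The stack must be a string over F.
    if any(flag not in grammar.indices for flag in stack):
        raise ValueError("stack contains a symbol outside F")
    return f"{symbol}[{''.join(stack)}]"


G = IndexedGrammar(
    nonterminals=frozenset({"S", "A"}),
    terminals=frozenset({"a"}),
    indices=frozenset({"f", "g"}),
    productions=(),
    start="S",
)
print(show(G, "A", ("f", "g")))
```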

Combinatory Categorial Grammar

Combinatory categorial grammar (CCG) is an efficiently parsable, yet linguistically expressive grammar formalism. It has a transparent interface between surface syntax and underlying semantic representation, including predicate–argument structure, quantification and information structure. The formalism generates constituency-based structures (as opposed to dependency-based ones) and is therefore a type of phrase structure grammar (as opposed to a dependency grammar). CCG relies on combinatory logic, which has the same expressive power as the lambda calculus, but builds its expressions differently. The first linguistic and psycholinguistic arguments for basing the grammar on combinators were put forth by Steedman and Szabolcsi. More recent prominent proponents of the approach are Pauline Jacobson and Jason Baldridge. In these new approaches, the combinator B (the compositor) is useful in creating long-distance dependencies, as in "Who do you think Mary is talking about?" and th ...

Tree-adjoining Grammar

Tree-adjoining grammar (TAG) is a grammar formalism defined by Aravind Joshi. Tree-adjoining grammars are somewhat similar to context-free grammars, but the elementary unit of rewriting is the tree rather than the symbol. Whereas context-free grammars have rules for rewriting symbols as strings of other symbols, tree-adjoining grammars have rules for rewriting the nodes of trees as other trees (see tree (graph theory) and tree (data structure)).

History: TAG originated in investigations by Joshi and his students into the family of adjunction grammars (AG), the "string grammar" of Zellig Harris. AGs handle exocentric properties of language in a natural and effective way, but do not have a good characterization of endocentric constructions; the converse is true of rewrite grammars, or phrase-structure grammar (PSG). In 1969, Joshi introduced a family of grammars that exploits this complementarity by mixing the two types of rules. A few very simple rewrite rules suffice to ge ...

Weak Equivalence (Formal Languages)

In formal language theory, weak equivalence of two grammars means they generate the same set of strings, i.e. that the formal language they generate is the same. In compiler theory the notion is distinguished from strong (or structural) equivalence, which additionally means that the two parse trees are reasonably similar in that the same semantic interpretation can be assigned to both. Vijay-Shanker and Weir (1994) demonstrate that Linear Indexed Grammars, Combinatory Categorial Grammars, Tree-adjoining Grammars, and Head Grammars are weakly equivalent formalisms, in that they all define the same string languages. On the other hand, if two grammars generate the same set of derivation trees (or more generally, the same set of abstract syntactic objects), then the two grammars are strongly equivalent. Chomsky (1963) introduces the notion of strong equivalence, and argues that onl ...
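The difference between the two notions can be illustrated with two toy context-free grammars, assumed here for the example: a right-branching one (`S → a S | a`) and a left-branching one (`S → S a | a`). They build different trees but generate the same strings. The helper `language` is hypothetical and compares the string sets only up to a length bound, so it gives evidence rather than a proof of weak equivalence. Its length-based pruning assumes the grammars have no empty productions.

```python
def language(rules, start, max_len):
    """Collect all terminal strings of length <= max_len derivable from start.

    Assumes no rule has an empty right-hand side, so a sentential form
    longer than max_len can never shrink back under the bound.
    """
    seen = {(start,)}
    frontier = [(start,)]
    strings = set()
    while frontier:
        form = frontier.pop()
        # Index of the leftmost nonterminal, or None if the form is terminal.
        idx = next((i for i, s in enumerate(form) if s in rules), None)
        if idx is None:
            strings.add("".join(form))
            continue
        for rhs in rules[form[idx]]:
            new_form = form[:idx] + rhs + form[idx + 1:]
            if len(new_form) <= max_len and new_form not in seen:
                seen.add(new_form)
                frontier.append(new_form)
    return strings


RIGHT = {"S": [("a", "S"), ("a",)]}  # right-branching derivations
LEFT = {"S": [("S", "a"), ("a",)]}   # left-branching derivations

same_strings = language(RIGHT, "S", 4) == language(LEFT, "S", 4)
print(same_strings)
```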

Formal Languages

In logic, mathematics, computer science, and linguistics, a formal language is a set of strings whose symbols are taken from a set called the "alphabet". The alphabet of a formal language consists of symbols that concatenate into strings (also called "words"). Words that belong to a particular formal language are sometimes called *well-formed words*. A formal language is often defined by means of a formal grammar such as a regular grammar or context-free grammar. In computer science, formal languages are used, among other things, as the basis for defining the grammar of programming languages and formalized versions of subsets of natural languages, in which the words of the language represent concepts that are associated with meanings or semantics. In computational complexity theory, decision problems are typically defined as formal languages, and complexity classes are defined as the sets of the formal languages that ...
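As a small illustration, a formal language can be modelled as a membership test over the words of an alphabet. The alphabet `{a, b}` and the example language (strings of the form $a^n b^n$) are assumptions chosen for this sketch, as are the helper names.

```python
from itertools import product

ALPHABET = ("a", "b")


def words(max_len):
    """Yield every string over ALPHABET up to max_len, shortest first."""
    for n in range(max_len + 1):
        for symbols in product(ALPHABET, repeat=n):
            yield "".join(symbols)


def well_formed(word):
    """Membership test for the example language a^n b^n (n >= 0)."""
    n = len(word) // 2
    return word == "a" * n + "b" * n


members = [w for w in words(4) if well_formed(w)]
print(members)
```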

Grammar Frameworks

In linguistics, grammar is the set of rules for how a natural language is structured, as demonstrated by its speakers or writers. Grammar rules may concern the use of clauses, phrases, and words. The term may also refer to the study of such rules, a subject that includes phonology, morphology, and syntax, together with phonetics, semantics, and pragmatics. There are, broadly speaking, two different ways to study grammar: traditional grammar and theoretical grammar. Fluency in a particular language variety involves a speaker internalizing these rules, many or most of which are acquired by observing other speakers, as opposed to intentional study or instruction. Much of this internalization occurs during early childhood; learning a language later in life usually involves more direct instruction. The term *grammar* can also describe the linguistic behaviour of groups of speakers and writers rather than individuals. Differences in scale are important to this meaning: for exam ...