
Graph Neural Network

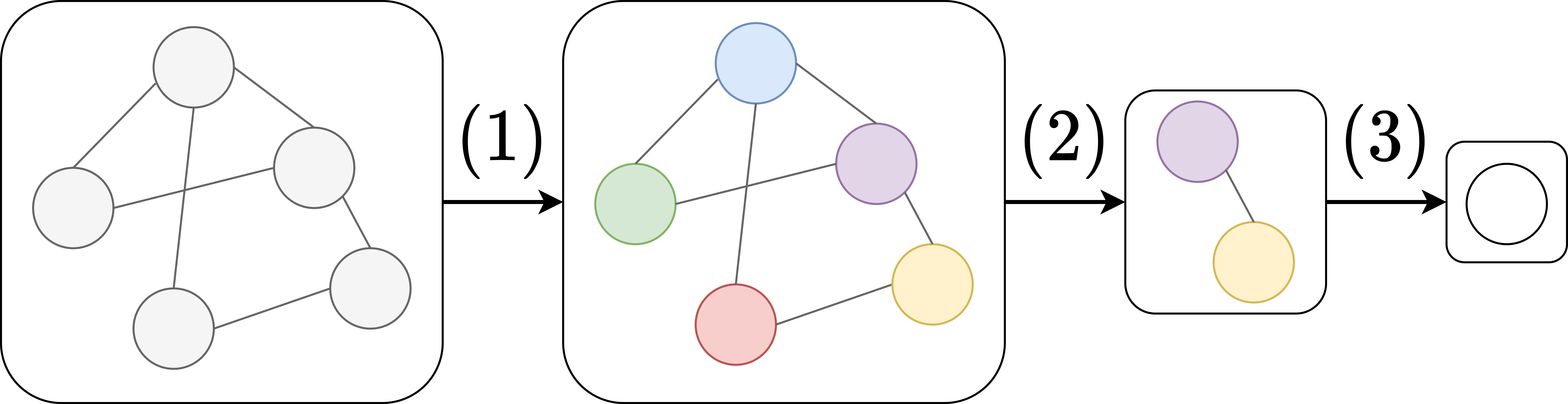

A graph neural network (GNN) belongs to a class of artificial neural networks for processing data that can be represented as graphs. In the more general subject of "geometric deep learning", certain existing neural network architectures can be interpreted as GNNs operating on suitably defined graphs. A convolutional neural network layer, in the context of computer vision, can be seen as a GNN applied to graphs whose nodes are pixels and in which only adjacent pixels are connected by edges. A transformer layer, in natural language processing, can be seen as a GNN applied to complete graphs whose nodes are words or tokens in a passage of natural language text. The key design element of GNNs is the use of *pairwise message passing*, such that graph nodes iteratively update their representations by exchanging information with their neighbors. Since their inception, several different GNN architectures have been ...
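The message-passing idea can be illustrated with a minimal sketch. It assumes a hypothetical three-node path graph with scalar node features and a fixed, hand-picked update rule (an equal mix of a node's own value and the mean of its neighbors' values). Real GNN layers use learned functions, so this shows only the pattern of information exchange, not any particular architecture.

```python
# Hypothetical toy graph: an undirected path 0 - 1 - 2, stored as adjacency lists.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
# Hypothetical scalar feature per node.
features = {0: 1.0, 1: 2.0, 2: 4.0}


def message_passing_step(features, neighbors):
    """One round: every node mixes its own value with the mean of its neighbors' values."""
    updated = {}
    for node, value in features.items():
        messages = [features[n] for n in neighbors[node]]
        aggregated = sum(messages) / len(messages) if messages else 0.0
        # Fixed 50/50 mix stands in for the learned update function of a real GNN.
        updated[node] = 0.5 * value + 0.5 * aggregated
    return updated


h1 = message_passing_step(features, neighbors)
h2 = message_passing_step(h1, neighbors)  # a second round reaches two-hop neighbors
```

After one round each node has seen only its direct neighbors. Repeating the step lets information travel one further hop per round.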

Artificial Neural Network

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron receives signals, processes them, and can signal the neurons connected to it. The "signal" at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called *edges*. Neurons and edges typically have a *weight* that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typically ...
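A single artificial neuron as described above can be sketched in a few lines. The function names, the choice of the logistic sigmoid as the non-linear function, and the example weights are all assumptions made for illustration.

```python
import math


def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs passed through a non-linear function (here: logistic sigmoid)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def threshold_neuron(inputs, weights, threshold):
    """Variant that sends a signal (1) only if the aggregate signal crosses the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0


# Hypothetical weights: both inputs must be active for the threshold neuron to fire.
fires = threshold_neuron([1, 1], [0.6, 0.6], threshold=1.0)
silent = threshold_neuron([1, 0], [0.6, 0.6], threshold=1.0)
```

Learning would consist of adjusting `weights` (and `bias`). That step is not shown here.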

NP-hard

In computational complexity theory, NP-hardness (non-deterministic polynomial-time hardness) is the defining property of a class of problems that are informally "at least as hard as the hardest problems in NP". A simple example of an NP-hard problem is the subset sum problem. A more precise specification is: a problem *H* is NP-hard when every problem *L* in NP can be reduced in polynomial time to *H*; that is, assuming a solution for *H* takes 1 unit time, *H*'s solution can be used to solve *L* in polynomial time. As a consequence, finding a polynomial-time algorithm to solve any NP-hard problem would give polynomial-time algorithms for all the problems in NP. Since it is suspected, but not proven, that P≠NP, it is unlikely that such an algorithm exists. Moreover, the class P, in which all problems can be solved in polynomial time, is contained in the NP class. Defi ...
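To make the subset sum example concrete, the following is a small sketch of one straightforward way to decide it: track every sum reachable by some subset. The function name and the sample numbers are illustrative assumptions. The set of reachable sums can hold up to 2^n entries for n inputs, so this sketch is not a polynomial-time algorithm.

```python
def subset_sum(numbers, target):
    """Return True if some subset of `numbers` adds up to `target`."""
    reachable = {0}  # the empty subset sums to 0
    for n in numbers:
        # Each number is either left out (old sums) or included (old sums + n).
        reachable |= {s + n for s in reachable}
    return target in reachable


# Hypothetical instance.
numbers = [3, 34, 4, 12, 5, 2]
has_nine = subset_sum(numbers, 9)      # 4 + 5
has_thirty = subset_sum(numbers, 30)   # no subset reaches 30
```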

Weisfeiler Leman Graph Isomorphism Test

In graph theory, the Weisfeiler Leman graph isomorphism test is a heuristic test for the existence of an isomorphism between two graphs *G* and *H*. It is based on a normal form for graphs first described in an article by Weisfeiler and Leman in 1968. There are several versions of the test referred to in the literature by various names, which easily leads to confusion.

The basic Weisfeiler Leman graph isomorphism test: The basic version, called WLtest, takes a graph as input, produces a partition of the nodes which is invariant under automorphisms, and outputs a string certificate encoding the partition. When applied to two graphs *G* and *H*, the certificates can be compared. If the certificates do not agree, the test fails and *G* and *H* cannot be isomorphic. If the certificates agree, *G* and *H* may or may not be isomorphic. The partition is produced in several rounds starting from the trivial partition where all nodes belong to the same component. In each ro ...
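The following sketch shows the commonly described color-refinement form of the test, which may differ in detail from the WLtest variant summarized above. It starts from the trivial partition, refines each node's color by the multiset of its neighbors' colors, and compares color histograms instead of string certificates. The function names and the two-graph interface are assumptions made for illustration.

```python
from collections import Counter


def refine(adjacency, colors):
    """Signature of a node: its own color plus the sorted multiset of its neighbors' colors."""
    return {v: (colors[v], tuple(sorted(colors[u] for u in adjacency[v])))
            for v in adjacency}


def wl_may_be_isomorphic(g, h):
    """Return False if refinement tells the graphs apart, True if it cannot."""
    cg = {v: 0 for v in g}  # trivial partition: every node has the same color
    ch = {v: 0 for v in h}
    for _ in range(max(len(g), len(h))):
        sg, sh = refine(g, cg), refine(h, ch)
        # Shared palette so equal signatures get equal colors in both graphs.
        palette = {sig: i for i, sig in
                   enumerate(sorted(set(sg.values()) | set(sh.values())))}
        cg = {v: palette[s] for v, s in sg.items()}
        ch = {v: palette[s] for v, s in sh.items()}
        if Counter(cg.values()) != Counter(ch.values()):
            return False  # histograms disagree: the graphs cannot be isomorphic
    return True  # inconclusive: the graphs may or may not be isomorphic


triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
path = {0: [1], 1: [0, 2], 2: [1]}
hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}
```

Here `wl_may_be_isomorphic(triangle, path)` is `False`, while the hexagon and the pair of triangles are not isomorphic yet the sketch returns `True` for them, matching the "may or may not be isomorphic" caveat.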

Nearest Neighbor Graph

The nearest neighbor graph (NNG) is a directed graph defined for a set of points in a metric space, such as the plane with the Euclidean distance. The NNG has a vertex for each point, and a directed edge from *p* to *q* whenever *q* is a nearest neighbor of *p*, that is, a point whose distance from *p* is minimum among all the given points other than *p* itself. In many uses of these graphs, the directions of the edges are ignored and the NNG is defined instead as an undirected graph. However, the nearest neighbor relation is not symmetric, i.e., *p* from the definition is not necessarily a nearest neighbor of *q*. In theoretical discussions of algorithms a kind of general position is often assumed, namely, that the nearest (k-nearest) neighbor is unique for each object. In implementations of the algorithms it is necessary to bear in mind that this is not always the case. For situations in which it is necessary to make the nearest neighbor for each object unique, the set ...
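The definition translates directly into a brute-force construction. The sketch below assumes points in the plane with Euclidean distance and a hypothetical function name. When several points tie for nearest, it emits an edge to each of them rather than assuming general position.

```python
import math


def nearest_neighbor_graph(points):
    """Return directed edges (i, j) such that point j is a nearest neighbor of point i."""
    edges = []
    for i, p in enumerate(points):
        distances = [(math.dist(p, q), j) for j, q in enumerate(points) if j != i]
        if not distances:
            continue  # a single point has no neighbors
        best = min(d for d, _ in distances)
        edges.extend((i, j) for d, j in distances if d == best)
    return edges


# Hypothetical points on a line: the relation is not symmetric.
points = [(0, 0), (1, 0), (3, 0)]
edges = nearest_neighbor_graph(points)
```

In this example point 1 is the nearest neighbor of point 2, but point 2 is not a nearest neighbor of point 1, so the edge (2, 1) has no reverse edge.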

Downsampling (signal Processing)

In digital signal processing, downsampling, compression, and decimation are terms associated with the process of *resampling* in a multi-rate digital signal processing system. Both *downsampling* and *decimation* can be synonymous with *compression*, or they can describe an entire process of bandwidth reduction (filtering) and sample-rate reduction. When the process is performed on a sequence of samples of a *signal* or a continuous function, it produces an approximation of the sequence that would have been obtained by sampling the signal at a lower rate (or density, as in the case of a photograph). *Decimation* is a term that historically means the *removal of every tenth one*. But in signal processing, *decimation by a factor of 10* actually means *keeping* only every tenth sample. This factor multiplies the sampling interval or, equivalently, divides the sampling rate. For example, if compact disc audio at 44,100 samples/second is *decimated* by a factor of 5 ...
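The sample-rate reduction step alone can be sketched as follows. The function names and the 1,000 samples/second figure are assumptions for illustration, and the bandwidth-reduction (filtering) step that a complete decimator would apply first is deliberately left out.

```python
def decimate(samples, factor):
    """Keep only every `factor`-th sample, starting with the first one."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    return samples[::factor]


def decimated_rate(rate_hz, factor):
    """The decimation factor divides the sampling rate."""
    return rate_hz / factor


# Hypothetical signal: 20 samples taken at 1,000 samples/second.
signal = list(range(20))
kept = decimate(signal, 10)
new_rate = decimated_rate(1000, 10)
```

Without a low-pass filter before the slicing step, frequency content above half the new sampling rate would alias into the result.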

Map (mathematics)

In mathematics, a map or mapping is a function in its general sense. These terms may have originated from the process of making a geographical map: *mapping* the Earth's surface to a sheet of paper. The term *map* may be used to distinguish some special types of functions, such as homomorphisms. For example, a linear map is a homomorphism of vector spaces, while the term linear function may have this meaning or it may mean a linear polynomial. In category theory, a map may refer to a morphism. The term *transformation* can be used interchangeably, but *transformation* often refers to a function from a set to itself. There are also a few less common uses in logic and graph theory.

Maps as functions: In many branches of mathematics, the term *map* is used to mean a function, sometimes with a specific property of particular importance to that branch. For instance, a "map" is a "continuous function" in topology, a "linear transformation" in linear algebra, etc. Some ...

Layer (deep Learning)

A layer in a deep learning model is a structure or network topology in the model's architecture, which takes information from the previous layers and then passes it to the next layer. There are several well-known layers in deep learning: the convolutional layer and the maximum pooling layer in convolutional neural networks, the fully connected layer and the ReLU layer in vanilla neural networks, the RNN layer in RNN models, and the deconvolutional layer in autoencoders.

Differences with layers of the neocortex: There is an intrinsic difference between deep learning layering and neocortical layering: deep learning layering depends on network topology, while neocortical layering depends on intra-layer homogeneity.

Dense layer: A dense layer, also called a fully-connected layer, is a layer whose neurons each connect to every neuron in the preceding layer.

See also: Deep Learning. Deep learning (also known as deep structured learning) is part of a broader family of machine learning me ...
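As a rough illustration of the dense layer described above, the sketch below gives every output neuron one weight per neuron of the preceding layer. The function name, the plain-list representation, and the example numbers are assumptions. Practical frameworks use tensor libraries and usually follow the layer with a non-linear activation.

```python
def dense_layer(inputs, weights, biases):
    """Fully-connected layer: each output neuron sees every input.

    `weights` holds one row per output neuron and one column per input neuron.
    """
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


# Hypothetical layer mapping 2 inputs to 3 outputs.
outputs = dense_layer(
    inputs=[1.0, 2.0],
    weights=[[1.0, 0.0], [0.5, 0.5], [0.0, -1.0]],
    biases=[0.0, 1.0, 0.0],
)
```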

Flux (machine-learning Framework)

Flux is an open-source machine-learning software library and ecosystem written in Julia. Its current stable release is v. It has a layer-stacking-based interface for simpler models, and strongly supports interoperability with other Julia packages instead of following a monolithic design. For example, GPU support is implemented transparently by CuArrays.jl. This is in contrast to some other machine learning frameworks which are implemented in other languages with Julia bindings, such as TensorFlow.jl, and thus are more limited by the functionality present in the underlying implementation, which is often in C or C++. Flux joined NumFOCUS as an affiliated project in December 2021. Flux's focus on interoperability has enabled, for example, support for Neural Differential Equations, by fusing Flux.jl and DifferentialEquations.jl into DiffEqFlux.jl. Flux supports recurrent and convolutional networks. It is also capable of differentiable programming through its source-to-source au ...

Julia (programming Language)

Julia is a high-level, dynamic programming language. Its features are well suited for numerical analysis and computational science. Distinctive aspects of Julia's design include a type system with parametric polymorphism in a dynamic programming language, with multiple dispatch as its core programming paradigm. Julia supports concurrent, (composable) parallel and distributed computing (with or without using MPI or the built-in corresponding to "OpenMP-style" threads), and direct calling of C and Fortran libraries without glue code. Julia uses a just-in-time (JIT) compiler that is referred to as "just-ahead-of-time" (JAOT) in the Julia community, as Julia compiles all code (by default) to machine code before running it. Julia is garbage-collected, uses eager evaluation, and includes efficient libraries for floating-point calculations, linear algebra, random number generation, and regular expression matching. Many libraries are available, including some (e.g., for fast Fo ...

Google JAX

Google JAX is a machine learning framework for transforming numerical functions. It is described as bringing together a modified version of autograd (automatic obtaining of the gradient function through differentiation of a function) and TensorFlow's XLA (Accelerated Linear Algebra). It is designed to follow the structure and workflow of NumPy as closely as possible and works with various existing frameworks such as TensorFlow and PyTorch. The primary functions of JAX are:

1. grad: automatic differentiation
2. jit: compilation
3. vmap: auto-vectorization
4. pmap: SPMD programming

grad: The code below demonstrates the grad function's automatic differentiation.

```python
# imports
from jax import grad
import jax.numpy as jnp

# define the logistic function
def logistic(x):
    return jnp.exp(x) / (jnp.exp(x) + 1)

# obtain the gradient function of the logistic function
grad_logistic = grad(logistic)

# evaluate the gradient of the logistic function at x = 1
grad_log_out = grad_logistic(1.0)
```

...

TensorFlow

TensorFlow is a free and open-source software library for machine learning and artificial intelligence. It can be used across a range of tasks but has a particular focus on training and inference of deep neural networks. "It is machine learning software being used for various kinds of perceptual and language understanding tasks" (Jeffrey Dean, minute 0:47 / 2:17 from YouTube clip). TensorFlow was developed by the Google Brain team for internal Google use in research and production. The initial version was released under the Apache License 2.0 in 2015. Google released the updated version of TensorFlow, named TensorFlow 2.0, in September 2019. TensorFlow can be used in a wide variety of programming languages, including Python, JavaScript, C++, and Java. This flexibility lends itself to a range of applications in many different sectors.

History: DistBelief: Starting in 2011, Google Brain built DistBelief as a proprietary machine learning system based on deep learning neural n ...

PyTorch

PyTorch is a machine learning framework based on the Torch library, used for applications such as computer vision and natural language processing, originally developed by Meta AI and now part of the Linux Foundation umbrella. It is free and open-source software released under the modified BSD license. Although the Python interface is more polished and the primary focus of development, PyTorch also has a C++ interface. A number of pieces of deep learning software are built on top of PyTorch, including Tesla Autopilot, Uber's Pyro, Hugging Face's Transformers, PyTorch Lightning, and Catalyst. PyTorch provides two high-level features:

* Tensor computing (like NumPy) with strong acceleration via graphics processing units (GPU)
* Deep neural networks built on a tape-based automatic differentiation system

History: Meta (formerly known as Facebook) operates both *PyTorch* and *Convolutional Architecture for Fast Feature Embedding* (Caffe2), but models defined by the two framewor ...