
Generalized Singular-value Decomposition

In linear algebra, the generalized singular value decomposition (GSVD) is the name of two different techniques based on the singular value decomposition (SVD). The two versions differ because one version decomposes two matrices (somewhat like the higher-order or tensor SVD) and the other version uses a set of constraints imposed on the left and right singular vectors of a single-matrix SVD. First version: two-matrix decomposition. The generalized singular value decomposition (GSVD) is a matrix decomposition on a pair of matrices which generalizes the singular value decomposition. It was introduced by Van Loan in 1976 and later developed by Paige and Saunders, which is the version described here. In contrast to the SVD, the GSVD simultaneously decomposes a pair of matrices with the same number of columns. The SVD and the GSVD, as well as some other possible generalizations of the SV ...

Linear Algebra

Linear algebra is the branch of mathematics concerning linear equations such as $a_1x_1+\cdots+a_nx_n=b$, linear maps such as $(x_1, \ldots, x_n) \mapsto a_1x_1+\cdots+a_nx_n$, and their representations in vector spaces and through matrices. Linear algebra is central to almost all areas of mathematics. For instance, linear algebra is fundamental in modern presentations of geometry, including for defining basic objects such as lines, planes and rotations. Also, functional analysis, a branch of mathematical analysis, may be viewed as the application of linear algebra to function spaces. Linear algebra is also used in most sciences and fields of engineering because it allows modeling many natural phenomena, and computing efficiently with such models. For nonlinear systems, which cannot be modeled with linear algebra, it is often used for dealing with first-order a ...

Generalized Inverse

In mathematics, and in particular, algebra, a generalized inverse (or g-inverse) of an element $x$ is an element $y$ that has some properties of an inverse element but not necessarily all of them. The purpose of constructing a generalized inverse of a matrix is to obtain a matrix that can serve as an inverse in some sense for a wider class of matrices than invertible matrices. Generalized inverses can be defined in any mathematical structure that involves associative multiplication, that is, in a semigroup. This article describes generalized inverses of a matrix $A$. A matrix $A^{\mathrm{g}} \in \mathbb{R}^{n \times m}$ is a generalized inverse of a matrix $A \in \mathbb{R}^{m \times n}$ if $AA^{\mathrm{g}}A = A$. A generalized inverse exists for an arbitrary matrix, and when a matrix has a regular inverse, this inverse is its unique generalized inverse. Motivation: Consider the linear system $Ax = y$, where $A$ is an $m \times n$ matrix and $y \in \mathcal{C}(A)$, the column space of $A$. If $m = n$ and $A$ is nonsingula ...
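The defining condition $AA^{\mathrm{g}}A = A$ can be checked numerically. The sketch below is illustrative only: it uses NumPy's `np.linalg.pinv` as one convenient generalized inverse and then builds a second one, to show that generalized inverses of a non-square matrix are not unique. The matrix sizes, the random seed, and the names `A_g`, `A_g2`, and `W` are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# A tall 4 x 3 matrix (full column rank with probability 1).
A = rng.standard_normal((4, 3))

# The Moore-Penrose inverse is one particular generalized inverse.
A_g = np.linalg.pinv(A)
pinv_is_g_inverse = np.allclose(A @ A_g @ A, A)

# Adding W (I - A A_g) for any 3 x 4 matrix W gives another generalized
# inverse, because (I - A A_g) A = 0.
W = rng.standard_normal((3, 4))
A_g2 = A_g + W @ (np.eye(4) - A @ A_g)
other_is_g_inverse = np.allclose(A @ A_g2 @ A, A)

print(pinv_is_g_inverse, other_is_g_inverse)
print("same matrix:", np.allclose(A_g, A_g2))
```

Both candidates satisfy the defining identity even though they are different matrices, which is why further conditions are needed to single out one generalized inverse.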

Linear Discriminant Analysis

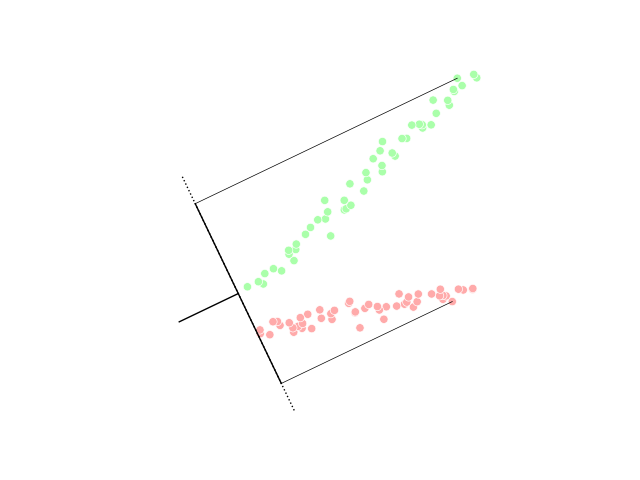

Linear discriminant analysis (LDA), normal discriminant analysis (NDA), canonical variates analysis (CVA), or discriminant function analysis is a generalization of Fisher's linear discriminant, a method used in statistics and other fields, to find a linear combination of features that characterizes or separates two or more classes of objects or events. The resulting combination may be used as a linear classifier, or, more commonly, for dimensionality reduction before later classification. LDA is closely related to analysis of variance (ANOVA) and regression analysis, which also attempt to express one dependent variable as a linear combination of other features or measurements. However, ANOVA uses categorical independent variables and a continuous dependent variable, whereas discriminant analysis has continuous independent variables and a categorical dependent variable (i.e. the class label). Logistic regr ...
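As a rough illustration of "a linear combination of features that separates two classes", the following sketch computes Fisher's two-class discriminant direction with NumPy. It is a minimal example under stated assumptions: the synthetic Gaussian data, the helper name `fisher_direction`, and the midpoint threshold are all choices made here, not part of the summary above.

```python
import numpy as np

def fisher_direction(X0, X1):
    """Unit vector w proportional to S_w^{-1} (m1 - m0) for two classes."""
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: sum of the two per-class scatter matrices.
    S_w = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    w = np.linalg.solve(S_w, m1 - m0)
    return w / np.linalg.norm(w)

rng = np.random.default_rng(0)
X0 = rng.normal(loc=[0.0, 0.0], scale=1.0, size=(200, 2))  # class 0
X1 = rng.normal(loc=[3.0, 3.0], scale=1.0, size=(200, 2))  # class 1

w = fisher_direction(X0, X1)

# Classify by projecting onto w and thresholding at the projected midpoint
# of the two class means (an assumption suited to equal class sizes).
threshold = 0.5 * (X0.mean(axis=0) + X1.mean(axis=0)) @ w
pred0 = X0 @ w > threshold
pred1 = X1 @ w > threshold
accuracy = ((~pred0).sum() + pred1.sum()) / (len(X0) + len(X1))
print("direction:", w, "accuracy:", accuracy)
```

The projection `X @ w` is the one-dimensional reduced representation; the thresholding step is what turns it into a linear classifier.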

Multidimensional Scaling

Multidimensional scaling (MDS) is a means of visualizing the level of similarity of individual cases of a data set. MDS is used to translate distances between each pair of $n$ objects in a set into a configuration of $n$ points mapped into an abstract Cartesian space. More technically, MDS refers to a set of related ordination techniques used in information visualization, in particular to display the information contained in a distance matrix. It is a form of non-linear dimensionality reduction. Given a distance matrix with the distances between each pair of objects in a set, and a chosen number of dimensions, $N$, an MDS algorithm places each object into $N$-dimensional space (a lower-dimensional representation) such that the between-object distances are preserved as well as possible. For $N$ = 1, 2, and 3, the resulting points can be visualized on a scatter plot. Core theoretical contributions to MDS were made by James O. Ramsay of McGill University, who is also ...
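One concrete member of the MDS family is classical (Torgerson) scaling, which recovers coordinates from a distance matrix by double centering and an eigendecomposition. The sketch below shows that single variant only, not MDS algorithms in general; the function name `classical_mds` and the four test points are assumptions made for illustration.

```python
import numpy as np

def classical_mds(D, n_dims):
    """Embed n objects in n_dims dimensions from an n x n distance matrix D."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n        # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(B)       # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_dims] # keep the largest n_dims
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale

# Pairwise Euclidean distances between the corners of a 3 x 4 rectangle.
points = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 4.0], [3.0, 4.0]])
D = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)

Y = classical_mds(D, n_dims=2)
D_rec = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)
print("max distance error:", np.abs(D - D_rec).max())
```

The recovered configuration `Y` matches the original points only up to rotation, reflection, and translation, since those operations leave all pairwise distances unchanged.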

Correspondence Analysis

Correspondence analysis (CA) is a multivariate statistical technique proposed by Herman Otto Hartley (Hirschfeld) and later developed by Jean-Paul Benzécri. It is conceptually similar to principal component analysis, but applies to categorical rather than continuous data. In a similar manner to principal component analysis, it provides a means of displaying or summarising a set of data in two-dimensional graphical form. Its aim is to display in a biplot any structure hidden in the multivariate setting of the data table. As such it is a technique from the field of multivariate ordination. Since the variant of CA described here can be applied either with a focus on the rows or on the columns it should in fact be called simple (symmetric) correspondence analysis. It is traditionally applied to the contingency table of a pair of nominal variables where each cell contains either a count or a zero value. If more than two categorical variables are to be summarized, a variant called ...

Principal Component Analysis

Principal component analysis (PCA) is a linear dimensionality reduction technique with applications in exploratory data analysis, visualization and data preprocessing. The data is linearly transformed onto a new coordinate system such that the directions (principal components) capturing the largest variation in the data can be easily identified. The principal components of a collection of points in a real coordinate space are a sequence of $p$ unit vectors, where the $i$-th vector is the direction of a line that best fits the data while being orthogonal to the first $i-1$ vectors. Here, a best-fitting line is defined as one that minimizes the average squared perpendicular distance from the points to the line. These directions (i.e., principal components) constitute an orthonormal basis in which different individual dimensions of the data are linearly uncorrelated. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identi ...
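The properties described above (orthonormal directions, uncorrelated coordinates, variance sorted from largest to smallest) can be seen in a small NumPy sketch that computes PCA through the SVD of the centered data. The helper name `pca`, the synthetic data, and the mixing matrix are assumptions made for this example.

```python
import numpy as np

def pca(X, n_components):
    """Return (components, scores, explained_variance) via the SVD."""
    Xc = X - X.mean(axis=0)                       # center each feature
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]                # rows are unit direction vectors
    scores = Xc @ components.T                    # data in the new coordinates
    explained_var = s[:n_components] ** 2 / (X.shape[0] - 1)
    return components, scores, explained_var

rng = np.random.default_rng(0)
mixing = np.array([[3.0, 1.0, 0.5],
                   [0.0, 1.0, 0.2],
                   [0.0, 0.0, 0.1]])
X = rng.standard_normal((100, 3)) @ mixing        # correlated 3-D data

components, scores, explained_var = pca(X, n_components=2)
cov_scores = np.cov(scores, rowvar=False)
print("explained variance:", explained_var)
print("score covariance:\n", cov_scores)
```

The off-diagonal entries of `cov_scores` are zero up to rounding, which is the "linearly uncorrelated" property, and plotting the two columns of `scores` gives the usual two-dimensional PCA scatter plot.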

Identity Matrix

In linear algebra, the identity matrix of size $n$ is the $n\times n$ square matrix with ones on the main diagonal and zeros elsewhere. It has unique properties; for example, when the identity matrix represents a geometric transformation, the object remains unchanged by the transformation. In other contexts, it is analogous to multiplying by the number 1. Terminology and notation: The identity matrix is often denoted by $I_n$, or simply by $I$ if the size is immaterial or can be trivially determined by the context. $I_1 = \begin{bmatrix} 1 \end{bmatrix},\ I_2 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix},\ I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix},\ \dots,\ I_n = \begin{bmatrix} 1 & 0 & 0 & \cdots & 0 \\ 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \end{bmatrix}.$ The term unit matrix has also been widely used, but the term *identity matrix* is now standard. The term *unit matrix* is ambiguous, because it is also used for a matrix of on ...
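The "multiplying by the number 1" analogy can be checked directly. This short NumPy sketch builds $I_3$ with `np.eye` and confirms that multiplying an arbitrary matrix by it on either side leaves the matrix unchanged; the example matrix `A` is an assumption chosen for illustration.

```python
import numpy as np

I3 = np.eye(3)  # ones on the main diagonal, zeros elsewhere

A = np.array([[2.0, -1.0, 0.0],
              [1.0, 3.0, 4.0],
              [0.0, 5.0, -2.0]])

left = I3 @ A    # I A
right = A @ I3   # A I
print(np.array_equal(left, A), np.array_equal(right, A))
```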

Reproducing Kernel Hilbert Space

In functional analysis, a reproducing kernel Hilbert space (RKHS) is a Hilbert space of functions in which point evaluation is a continuous linear functional. Specifically, a Hilbert space $H$ of functions from a set $X$ (to $\mathbb{R}$ or $\mathbb{C}$) is an RKHS if the point-evaluation functional $L_x:H\to\mathbb{C}$, $L_x(f)=f(x)$, is continuous for every $x\in X$. Equivalently, $H$ is an RKHS if there exists a function $K_x \in H$ such that, for all $f \in H$, $\langle f, K_x \rangle = f(x)$. The function $K_x$ is then called the *reproducing kernel*, and it reproduces the value of $f$ at $x$ via the inner product. An immediate consequence of this property is that convergence in norm implies uniform convergence on any subset of $X$ on which $\|K_x\|$ is bounded. However, the converse does not necessarily hold. Often the set $X$ carries a topology, and $\|K_x\|$ depends continuously on $x\in X$, in which case convergence in norm implies uniform convergence on compact subsets of $X$. It is not entirely straightforwar ...

Linear Model

In statistics, the term linear model refers to any model which assumes linearity in the system. The most common occurrence is in connection with regression models and the term is often taken as synonymous with linear regression model. However, the term is also used in time series analysis with a different meaning. In each case, the designation "linear" is used to identify a subclass of models for which substantial reduction in the complexity of the related statistical theory is possible. Linear regression models: For the regression case, the statistical model is as follows. Given a (random) sample $(Y_i, X_{i1}, \ldots, X_{ip}), \, i = 1, \ldots, n$, the relation between the observations $Y_i$ and the independent variables $X_{ij}$ is formulated as $Y_i = \beta_0 + \beta_1 \phi_1(X_{i1}) + \cdots + \beta_p \phi_p(X_{ip}) + \varepsilon_i, \qquad i = 1, \ldots, n$, where $\phi_1, \ldots, \phi_p$ may be nonlinear functions. In the above, the quantities $\varepsilon_i$ are random variables representing err ...
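The point that the model is linear in the coefficients $\beta_j$ even when the functions $\phi_j$ are nonlinear can be illustrated with ordinary least squares. The sketch below is an assumption-laden example: the choices $\phi_1 = \log$ and $\phi_2 = (\cdot)^2$, the true coefficients, the noise level, and the sample size are all invented for the demonstration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500

# Two independent variables and two (hypothetical) nonlinear transforms.
X1 = rng.uniform(0.5, 2.0, size=n)
X2 = rng.uniform(0.0, 3.0, size=n)
phi1, phi2 = np.log, np.square

beta_true = np.array([1.0, 2.0, -0.5])        # beta_0, beta_1, beta_2
eps = rng.normal(scale=0.1, size=n)           # error terms
Y = beta_true[0] + beta_true[1] * phi1(X1) + beta_true[2] * phi2(X2) + eps

# The design matrix has a column of ones plus the transformed variables,
# so the fit is an ordinary linear least-squares problem in beta.
design = np.column_stack([np.ones(n), phi1(X1), phi2(X2)])
beta_hat, *_ = np.linalg.lstsq(design, Y, rcond=None)
print("estimated coefficients:", beta_hat)
```

Because the nonlinearity sits entirely inside the columns of `design`, no iterative optimizer is needed; the estimate comes from a single linear solve.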

Jill S

Jill is an English feminine given name, a short form of the name Gillian, which in turn originated as a Middle English variant of Juliana. Jill was such a common name that it had an everygirl quality, as in the 15th century English nursery rhyme Jack and Jill. By the 17th century, the name had become a term for a "common street jade," implying promiscuous sexual behavior, and declined in usage in the Anglosphere. Usage of the name increased again in the 20th century. The name was most used in English-speaking countries from the 1930s to the 1970s. It is currently well-used in the Netherlands.

People with the given name

* Jill Abramson (born 1954), American author, journalist, and academic
* Jill Andrew, Canadian politician
* Jill Andrews (born 1980), American singer-songwriter
* Jill Astbury, Australian researcher into violence against women
* Jill Balcon (1925–2009), British actress
* Jill S. Barnholtz-Sloan, American biostatistician and data scientist
* Jill Becker, Ameri ...

Tensor Generalized Singular Value Decomposition Following Et Int

In mathematics, a tensor is an algebraic object that describes a multilinear relationship between sets of algebraic objects associated with a vector space. Tensors may map between different objects such as vectors, scalars, and even other tensors. There are many types of tensors, including scalars and vectors (which are the simplest tensors), dual vectors, multilinear maps between vector spaces, and even some operations such as the dot product. Tensors are defined independent of any basis, although they are often referred to by their components in a basis related to a particular coordinate system; those components form an array, which can be thought of as a high-dimensional matrix. Tensors have become important in physics because they provide a concise mathematical framework for formulating and solving physics problems in areas such as mechanics (stress, elasticity, quantum mechanics, fluid mechanics, moment of inertia, ...), electrodynamics (electromagnetic tensor, Ma ...

Moore–Penrose Inverse

In mathematics, and in particular linear algebra, the Moore–Penrose inverse of a matrix, often called the pseudoinverse, is the most widely known generalization of the inverse matrix. It was independently described by E. H. Moore in 1920, Arne Bjerhammar in 1951, and Roger Penrose in 1955. Earlier, Erik Ivar Fredholm had introduced the concept of a pseudoinverse of integral operators in 1903. The terms *pseudoinverse* and *generalized inverse* are sometimes used as synonyms for the Moore–Penrose inverse of a matrix, but are sometimes applied to other elements of algebraic structures which share some but not all properties expected for an inverse element. A common use of the pseudoinverse is to compute a "best fit" (least squares) approximate solution to a system of linear equations that lacks an exact solution (see below under § Applications). Another use is to find the minimum (Euclidean) norm solution to a system of linear equations with multiple solutions. The pseu ...
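The two uses mentioned above, a least-squares solution for an overdetermined system and a minimum-norm solution for an underdetermined one, can both be sketched with NumPy's `np.linalg.pinv`. The small systems below are assumptions chosen so the results are easy to verify by hand.

```python
import numpy as np

# Overdetermined system (3 equations, 2 unknowns) with no exact solution.
A = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0]])
b = np.array([1.0, 2.0, 2.0])
x_ls = np.linalg.pinv(A) @ b                       # least-squares solution
x_ref, *_ = np.linalg.lstsq(A, b, rcond=None)      # reference solver

# Underdetermined system (1 equation, 3 unknowns) with many solutions.
C = np.array([[1.0, 1.0, 1.0]])
d = np.array([3.0])
x_min = np.linalg.pinv(C) @ d                      # minimum Euclidean norm solution

# Another exact solution of C x = d, for comparison of norms.
x_other = np.array([3.0, 0.0, 0.0])
print("least squares:", x_ls)
print("minimum norm:", x_min, "norm", np.linalg.norm(x_min))
print("other solution norm:", np.linalg.norm(x_other))
```

In practice a dedicated solver such as `np.linalg.lstsq` is usually preferred over forming the pseudoinverse explicitly; the explicit form is used here only to mirror the description in the text.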