Generalized Inverse Gaussian Distribution

In probability theory and statistics, the generalized inverse Gaussian distribution (GIG) is a three-parameter family of continuous probability distributions with probability density function

$$f(x) = \frac{(a/b)^{p/2}}{2 K_p(\sqrt{ab})}\, x^{p-1} e^{-(ax + b/x)/2}, \qquad x > 0,$$

where $K_p$ is a modified Bessel function of the second kind, $a > 0$, $b > 0$, and $p$ is a real parameter. It is used extensively in geostatistics, statistical linguistics, finance, etc. This distribution was first proposed by Étienne Halphen. It was rediscovered and popularised by Ole Barndorff-Nielsen, who called it the generalized inverse Gaussian distribution. Its statistical properties are discussed in Bent Jørgensen's lecture notes.

Properties. Alternative parametrization: by setting $\theta = \sqrt{ab}$ and $\eta = \sqrt{b/a}$, we can alternatively express the GIG distribution as

$$f(x) = \frac{1}{2\eta K_p(\theta)} \left(\frac{x}{\eta}\right)^{p-1} e^{-\theta(x/\eta + \eta/x)/2},$$

where $\theta$ is the concentration parameter while $\eta$ is the scaling parameter.

Summation: Barndorff-Nielsen and Halg ...
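The density above can be evaluated numerically. The following is a minimal sketch using only the Python standard library; the helper names `bessel_k` and `gig_pdf` are illustrative, and the Bessel function is approximated through the integral representation $K_p(z) = \int_0^\infty e^{-z\cosh t}\cosh(pt)\,dt$ with a plain trapezoid rule, which is assumed adequate only for moderate $p$ and for $z$ not extremely close to zero. As a sanity check it uses the known special case $p = -1/2$, where the GIG density with $a = \lambda/\mu^2$ and $b = \lambda$ coincides with the inverse Gaussian density.

```python
import math


def bessel_k(p, z, steps=4000, t_max=20.0):
    """Approximate K_p(z) = integral_0^inf exp(-z cosh t) cosh(p t) dt.

    Trapezoid rule on [0, t_max]; the integrand is assumed to have decayed
    to zero by t_max (true for moderate p and z not too close to 0).
    """
    h = t_max / steps
    total = 0.5 * math.exp(-z)  # half-weight endpoint at t = 0
    for i in range(1, steps + 1):
        t = i * h
        total += math.exp(-z * math.cosh(t)) * math.cosh(p * t)
    return total * h


def gig_pdf(x, a, b, p):
    """GIG density in the (a, b, p) parametrization, for x > 0."""
    norm = (a / b) ** (p / 2) / (2.0 * bessel_k(p, math.sqrt(a * b)))
    return norm * x ** (p - 1) * math.exp(-(a * x + b / x) / 2.0)


def inverse_gaussian_pdf(x, mu, lam):
    """Inverse Gaussian density with mean mu and shape lam, for x > 0."""
    return math.sqrt(lam / (2.0 * math.pi * x ** 3)) * math.exp(
        -lam * (x - mu) ** 2 / (2.0 * mu ** 2 * x)
    )


# Compare the p = -1/2 special case against the inverse Gaussian density.
mu, lam = 1.0, 2.0
for x in (0.5, 1.0, 2.0):
    print(x, gig_pdf(x, a=lam / mu ** 2, b=lam, p=-0.5), inverse_gaussian_pdf(x, mu, lam))
```

The two printed columns should agree to several decimal places; a production implementation would normally rely on a library Bessel routine rather than this hand-rolled quadrature.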

GIG Distribution Pdf

Gig or GIG may refer to:

Arts and entertainment:
* Concert, known informally as a gig
* ''The Gig'', a 1985 film written and directed by Frank D. Gilroy
* ''Gig'' (Circle Jerks album) (1992)
* GIG (''Hot Wheels AcceleRacers''), a character
* ''Gig'' (Northern Pikes album) (1993)
* "GUYS Is Green" ("G.I.G.!"), in the Japanese television series ''Ultraman Mebius''
* KGIG-LP ("104.9 The Gig"), an FM radio station licensed to Modesto, California, US

Transportation:
* Gig (boat), a type of boat
* Gig (carriage), a two-wheeled sprung cart to be pulled by a horse
* Cornish pilot gig, a six-oared rowing boat
* Rio de Janeiro–Galeão International Airport (IATA airport code), the main airport serving Rio de Janeiro, Brazil
* Giggleswick railway station (National Rail station code), Yorkshire, England
* Gig Car Share, a carsharing service in parts of the San Francisco Bay Area

Science and technology:
* Giga ...

Inverse Gaussian Distribution

In probability theory, the inverse Gaussian distribution (also known as the Wald distribution) is a two-parameter family of continuous probability distributions with support on $(0, \infty)$. Its probability density function is given by

$$f(x;\mu,\lambda) = \sqrt{\frac{\lambda}{2\pi x^3}} \exp\biggl(-\frac{\lambda (x-\mu)^2}{2\mu^2 x}\biggr)$$

for $x > 0$, where $\mu > 0$ is the mean and $\lambda > 0$ is the shape parameter. The inverse Gaussian distribution has several properties analogous to a Gaussian distribution. The name can be misleading: it is an inverse only in that, while the Gaussian describes a Brownian motion's level at a fixed time, the inverse Gaussian describes the distribution of the time a Brownian motion with positive drift takes to reach a fixed positive level. Its cumulant generating function (logarithm of the characteristic function) is the inverse of the cumulant generating function of a Gaussian random variable. To indicate that a random variable $X$ is inverse Gauss ...
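As a quick numerical check of the statement that $\mu$ is the mean, the sketch below integrates the inverse Gaussian density $f(x;\mu,\lambda) = \sqrt{\lambda/(2\pi x^3)}\,\exp\bigl(-\lambda(x-\mu)^2/(2\mu^2 x)\bigr)$ with a simple midpoint rule. The function names, the chosen parameter values, and the truncation of the integral at 80 are assumptions made for illustration only.

```python
import math


def inverse_gaussian_pdf(x, mu, lam):
    """Inverse Gaussian density with mean mu and shape lam, for x > 0."""
    return math.sqrt(lam / (2.0 * math.pi * x ** 3)) * math.exp(
        -lam * (x - mu) ** 2 / (2.0 * mu ** 2 * x)
    )


def midpoint_integral(func, lower, upper, steps=100_000):
    """Midpoint-rule approximation of the integral of func over [lower, upper]."""
    h = (upper - lower) / steps
    return h * sum(func(lower + (i + 0.5) * h) for i in range(steps))


mu, lam = 1.5, 2.0
# The upper limit 80 is an arbitrary cutoff where the tail is negligible.
total_mass = midpoint_integral(lambda x: inverse_gaussian_pdf(x, mu, lam), 0.0, 80.0)
mean = midpoint_integral(lambda x: x * inverse_gaussian_pdf(x, mu, lam), 0.0, 80.0)
print(total_mass, mean)  # should be close to 1 and to mu, respectively
```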

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is $f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}$. The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma^2$ is the variance. The standard deviation of the distribution is $\sigma$ (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution c ...

John Wiley & Sons

John Wiley & Sons, Inc., commonly known as Wiley, is an American multinational publishing company that focuses on academic publishing and instructional materials. The company was founded in 1807 and produces books, journals, and encyclopedias, in print and electronically, as well as online products and services, training materials, and educational materials for undergraduate, graduate, and continuing education students. History: The company was established in 1807 when Charles Wiley opened a print shop in Manhattan. The company was the publisher of 19th century American literary figures like James Fenimore Cooper, Washington Irving, Herman Melville, and Edgar Allan Poe, as well as of legal, religious, and other non-fiction titles. The firm took its current name in 1865. Wiley later shifted its focus to scientific, technical, and engineering subject areas, abandoning its literary interests. Wiley's son Joh ...

Poisson Distribution

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time if these events occur with a known constant mean rate and independently of the time since the last event. It can also be used for the number of events in other types of intervals than time, and in dimension greater than 1 (e.g., number of events in a given area or volume). The Poisson distribution is named after French mathematician Siméon Denis Poisson. It plays an important role for discrete-stable distributions. Under a Poisson distribution with the expectation of $\lambda$ events in a given interval, the probability of $k$ events in the same interval is

$$\frac{\lambda^k e^{-\lambda}}{k!}.$$

For instance, consider a call center which receives an average of $\lambda = 3$ calls per minute at all times of day. If the calls are independent, receiving one does not change the probability of when the next on ...
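The call-center example can be worked through directly from the formula $\lambda^k e^{-\lambda}/k!$. This is a small illustrative sketch; the function name `poisson_pmf` is a hypothetical helper, not part of any library.

```python
import math


def poisson_pmf(k, lam):
    """Probability of exactly k events when the expected count is lam."""
    return lam ** k * math.exp(-lam) / math.factorial(k)


lam = 3.0  # average calls per minute, as in the example above
for k in range(7):
    print(k, round(poisson_pmf(k, lam), 4))

# Probability of at most 2 calls in a given minute.
p_at_most_two = sum(poisson_pmf(k, lam) for k in range(3))
print(p_at_most_two)
```

With $\lambda = 3$, the probability of a minute with no calls is $e^{-3} \approx 0.0498$, and the probability of exactly three calls is $4.5\,e^{-3} \approx 0.2240$.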

Normal Variance-mean Mixture

In probability theory and statistics, a normal variance-mean mixture with mixing probability density $g$ is the continuous probability distribution of a random variable $Y$ of the form

$$Y = \alpha + \beta V + \sigma \sqrt{V} X,$$

where $\alpha$, $\beta$ and $\sigma > 0$ are real numbers, and random variables $X$ and $V$ are independent, $X$ is normally distributed with mean zero and variance one, and $V$ is continuously distributed on the positive half-axis with probability density function $g$. The conditional distribution of $Y$ given $V$ is thus a normal distribution with mean $\alpha + \beta V$ and variance $\sigma^2 V$. A normal variance-mean mixture can be thought of as the distribution of a certain quantity in an inhomogeneous population consisting of many different normally distributed subpopulations. It is the distribution of the position of a Wiener process (Brownian motion) with drift $\beta$ and infinitesimal variance $\sigma^2$ observed at a random time point independent of the Wiener process and with probabili ...
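The construction $Y = \alpha + \beta V + \sigma\sqrt{V}\,X$ translates directly into a sampler. The sketch below is illustrative only: the function name `sample_mixture` is hypothetical, and the mixing density is assumed to be a gamma distribution with shape 2 and scale 1 purely for the sake of the example. Since $E[Y] = \alpha + \beta\,E[V]$, the sample mean should land near $0 + 0.5 \cdot 2 = 1$.

```python
import math
import random


def sample_mixture(n, alpha, beta, sigma, draw_v, seed=0):
    """Draw n samples of Y = alpha + beta*V + sigma*sqrt(V)*X.

    draw_v(rng) must return a positive draw of the mixing variable V;
    X is standard normal and independent of V.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        v = draw_v(rng)
        x = rng.gauss(0.0, 1.0)
        samples.append(alpha + beta * v + sigma * math.sqrt(v) * x)
    return samples


# Assumed mixing density for illustration: gamma with shape 2, scale 1 (E[V] = 2).
ys = sample_mixture(20_000, alpha=0.0, beta=0.5, sigma=1.0,
                    draw_v=lambda rng: rng.gammavariate(2.0, 1.0))
mean_y = sum(ys) / len(ys)
print(mean_y)  # expected to be near alpha + beta * E[V] = 1.0
```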

Conjugate Prior

In Bayesian probability theory, if, given a likelihood function $p(x \mid \theta)$, the posterior distribution $p(\theta \mid x)$ is in the same probability distribution family as the prior probability distribution $p(\theta)$, the prior and posterior are then called conjugate distributions with respect to that likelihood function and the prior is called a conjugate prior for the likelihood function $p(x \mid \theta)$. A conjugate prior is an algebraic convenience, giving a closed-form expression for the posterior; otherwise, numerical integration may be necessary. Further, conjugate priors may clarify how a likelihood function updates a prior distribution. The concept, as well as the term "conjugate prior", were introduced by Howard Raiffa and Robert Schlaifer in their work on Bayesian decision theory (Howard Raiffa and Robert Schlaifer, ''Applied Statistical Decision Theory'', Division of Research, Graduate School of Business Administration, Harvard University, 1961). A similar c ...
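One standard illustration of conjugacy, chosen here as an example rather than taken from the text above, is a gamma prior on the rate of a Poisson likelihood: a Gamma(shape $\alpha$, rate $\beta$) prior combined with observed counts $x_1, \dots, x_n$ yields a Gamma($\alpha + \sum x_i$, $\beta + n$) posterior, so the update is simple arithmetic. The function name and the data below are hypothetical.

```python
def gamma_poisson_update(alpha, beta, counts):
    """Posterior (shape, rate) for a Poisson rate under a Gamma(alpha, beta) prior."""
    return alpha + sum(counts), beta + len(counts)


prior_shape, prior_rate = 2.0, 1.0
observed_counts = [3, 4, 2, 5]  # made-up observations for illustration

post_shape, post_rate = gamma_poisson_update(prior_shape, prior_rate, observed_counts)
posterior_mean = post_shape / post_rate
print(post_shape, post_rate, posterior_mean)  # 16.0 5.0 3.2
```

No numerical integration is needed: the posterior stays in the gamma family, which is the closed-form convenience the definition describes.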

Inverse-gamma Distribution

In probability theory and statistics, the inverse gamma distribution is a two-parameter family of continuous probability distributions on the positive real line, which is the distribution of the reciprocal of a variable distributed according to the gamma distribution. Perhaps the chief use of the inverse gamma distribution is in Bayesian statistics, where the distribution arises as the marginal posterior distribution for the unknown variance of a normal distribution, if an uninformative prior is used, and as an analytically tractable conjugate prior, if an informative prior is required. It is common among some Bayesians to consider an alternative parametrization of the normal distribution in terms of the precision, defined as the reciprocal of the variance, which allows the gamma distribution to be used directly as a conjugate prior. Other Bayesians prefer to parametrize the inverse gamma distribution differently, as a scaled inverse chi-squared distribution. Characteriza ...

Gamma Distribution

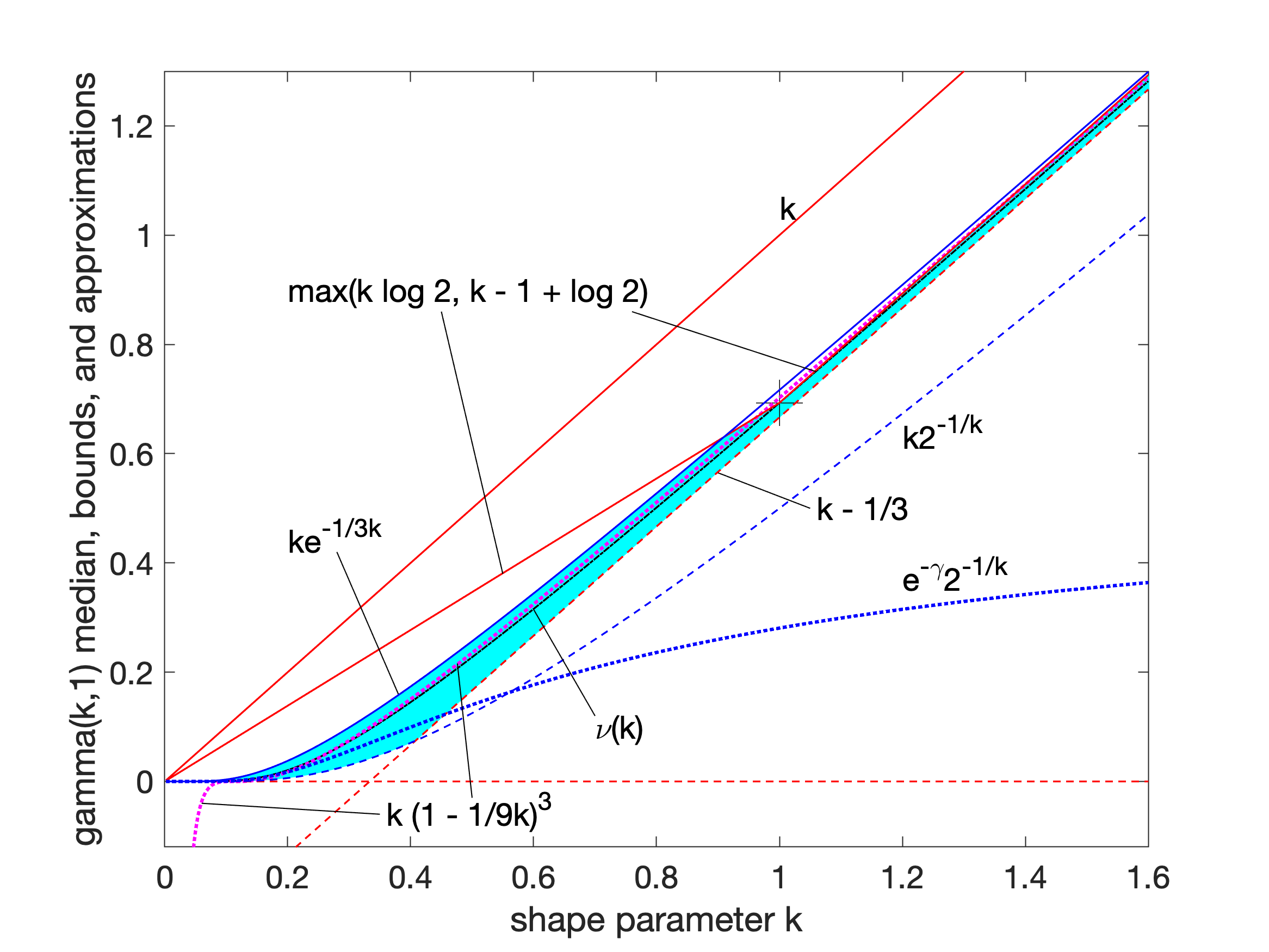

In probability theory and statistics, the gamma distribution is a versatile two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-squared distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use:

1. With a shape parameter $\alpha$ and a scale parameter $\theta$
2. With a shape parameter $\alpha$ and a rate parameter $\lambda = 1/\theta$

In each of these forms, both parameters are positive real numbers. The distribution has important applications in various fields, including econometrics, Bayesian statistics, and life testing. In econometrics, the $(\alpha, \theta)$ parameterization is common for modeling waiting times, such as the time until death, where it often takes the form of an Erlang distribution for integer $\alpha$ values. Bayesian statisticians prefer the $(\alpha, \lambda)$ parameterization, utilizing the gamma distribution as a conjugate prior for several inverse scale parameters, facilit ...
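The equivalence of the two parameterizations can be checked numerically. The sketch below writes the gamma density once with a scale parameter $\theta$, as $x^{\alpha-1} e^{-x/\theta} / (\Gamma(\alpha)\,\theta^{\alpha})$, and once with a rate parameter $\lambda = 1/\theta$, as $\lambda^{\alpha} x^{\alpha-1} e^{-\lambda x} / \Gamma(\alpha)$. The function names are illustrative.

```python
import math


def gamma_pdf_scale(x, alpha, theta):
    """Gamma density with shape alpha and scale theta, for x > 0."""
    return x ** (alpha - 1) * math.exp(-x / theta) / (math.gamma(alpha) * theta ** alpha)


def gamma_pdf_rate(x, alpha, lam):
    """Gamma density with shape alpha and rate lam, for x > 0."""
    return lam ** alpha * x ** (alpha - 1) * math.exp(-lam * x) / math.gamma(alpha)


alpha, theta = 2.5, 2.0
for x in (0.5, 1.7, 4.0):
    # The two columns agree when the rate is the reciprocal of the scale.
    print(x, gamma_pdf_scale(x, alpha, theta), gamma_pdf_rate(x, alpha, 1.0 / theta))
```

Setting $\alpha = 1$ in either form recovers the exponential density $\lambda e^{-\lambda x}$, one of the special cases mentioned above.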

Imaginary Number

An imaginary number is the product of a real number and the imaginary unit $i$, which is defined by its property $i^2 = -1$ (the symbol $j$ is usually used in engineering contexts where $i$ has other meanings, such as electrical current). The square of an imaginary number $bi$ is $-b^2$. For example, $5i$ is an imaginary number, and its square is $-25$. The number zero is considered to be both real and imaginary. Originally coined in the 17th century by René Descartes as a derogatory term and regarded as fictitious or useless, the concept gained wide acceptance following the work of Leonhard Euler (in the 18th century) and Augustin-Louis Cauchy and Carl Friedrich Gauss (in the early 19th century). An imaginary number $bi$ can be added to a real number $a$ to form a complex number of the form $a + bi$, where the real numbers $a$ and $b$ are called, respectively, the ''real part'' and the ''imaginary part'' of the complex number. History: Although the Greek mathematician and engineer Heron of Alexandria is noted as the first t ...

Probability Theory

Probability theory or probability calculus is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is no ...