|

Generalization Error

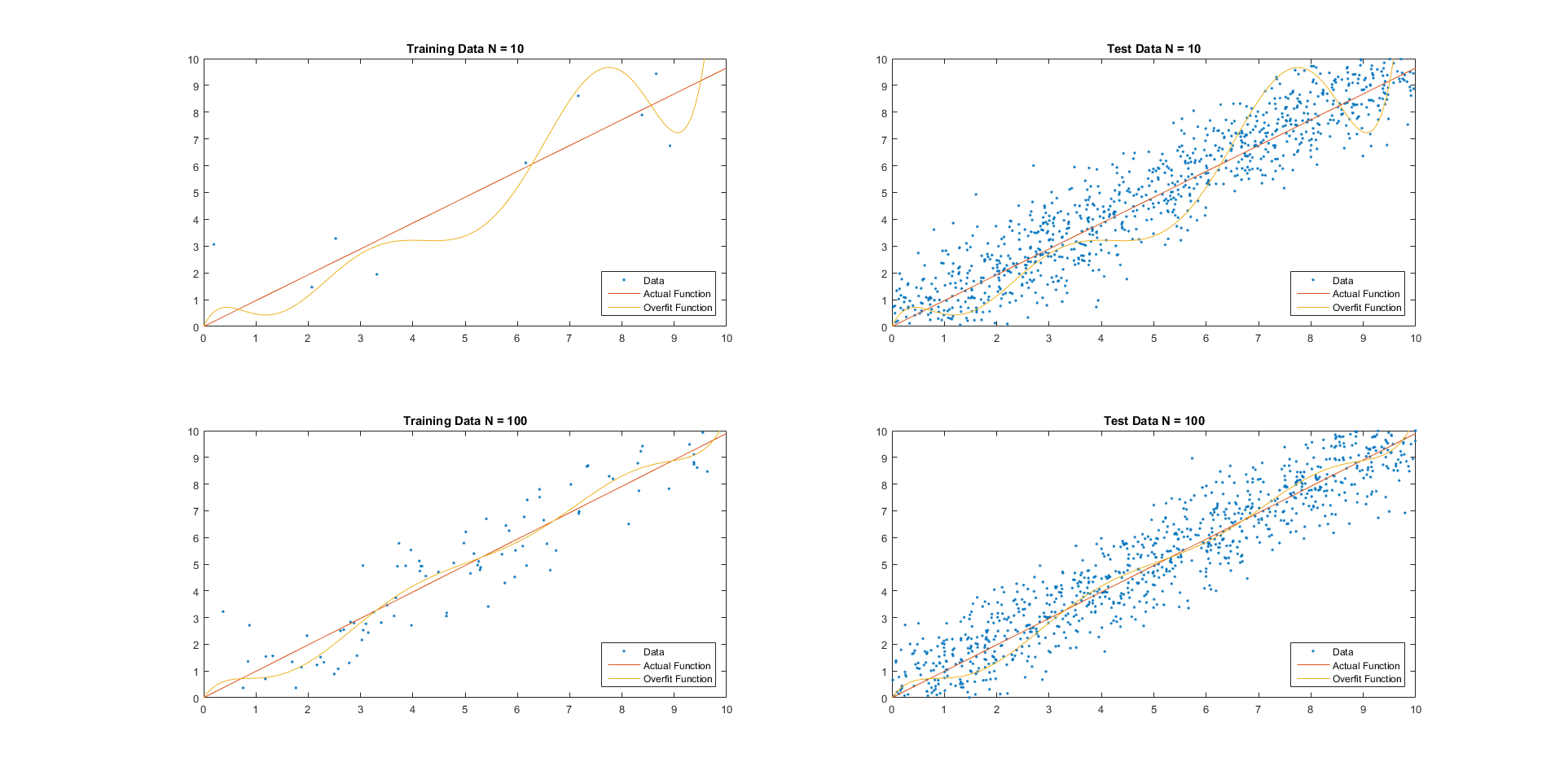

For supervised learning applications in machine learning and statistical learning theory, generalization error (also known as the out-of-sample error or the risk) is a measure of how accurately an algorithm is able to predict outcome values for previously unseen data. Because learning algorithms are evaluated on finite samples, the evaluation of a learning algorithm may be sensitive to sampling error. As a result, measurements of prediction error on the current data may not provide much information about predictive ability on new data. Generalization error can be minimized by avoiding overfitting in the learning algorithm. The performance of a machine learning algorithm is visualized by plots that show values of ''estimates'' of the generalization error through the learning process, which are called learning curves. Definition In a learning problem, the goal is to develop a function f_n(\vec) that predicts output values y for each input datum \vec. The subscript n indicates tha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Supervised Learning

Supervised learning (SL) is a machine learning paradigm for problems where the available data consists of labelled examples, meaning that each data point contains features (covariates) and an associated label. The goal of supervised learning algorithms is learning a function that maps feature vectors (inputs) to labels (output), based on example input-output pairs. It infers a function from ' consisting of a set of ''training examples''. In supervised learning, each example is a ''pair'' consisting of an input object (typically a vector) and a desired output value (also called the ''supervisory signal''). A supervised learning algorithm analyzes the training data and produces an inferred function, which can be used for mapping new examples. An optimal scenario will allow for the algorithm to correctly determine the class labels for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way (see inductive b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks.Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F.,Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning IEEE Transactions on Vehicular Technology, 2020. A subset of machine learning is closely related to computational statistics, which focuses on making predicti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Statistical Learning Theory

Statistical learning theory is a framework for machine learning drawing from the fields of statistics and functional analysis. Statistical learning theory deals with the statistical inference problem of finding a predictive function based on data. Statistical learning theory has led to successful applications in fields such as computer vision, speech recognition, and bioinformatics. Introduction The goals of learning are understanding and prediction. Learning falls into many categories, including supervised learning, unsupervised learning, online learning, and reinforcement learning. From the perspective of statistical learning theory, supervised learning is best understood. Supervised learning involves learning from a training set of data. Every point in the training is an input-output pair, where the input maps to an output. The learning problem consists of inferring the function that maps between the input and the output, such that the learned function can be used to predict t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sampling Error

In statistics, sampling errors are incurred when the statistical characteristics of a population are estimated from a subset, or sample, of that population. Since the sample does not include all members of the population, statistics of the sample (often known as estimators), such as means and quartiles, generally differ from the statistics of the entire population (known as parameters). The difference between the sample statistic and population parameter is considered the sampling error.Sarndal, Swenson, and Wretman (1992), Model Assisted Survey Sampling, Springer-Verlag, For example, if one measures the height of a thousand individuals from a population of one million, the average height of the thousand is typically not the same as the average height of all one million people in the country. Since sampling is almost always done to estimate population parameters that are unknown, by definition exact measurement of the sampling errors will not be possible; however they can often be ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Overfitting

mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitted model is a mathematical model that contains more parameters than can be justified by the data. The essence of overfitting is to have unknowingly extracted some of the residual variation (i.e., the noise) as if that variation represented underlying model structure. Underfitting occurs when a mathematical model cannot adequately capture the underlying structure of the data. An under-fitted model is a model where some parameters or terms that would appear in a correctly specified model are missing. Under-fitting would occur, for example, when fitting a linear model to non-linear data. Such a model will tend to have poor predictive performance. The possibility of over-fitting exists because the criterion used for selecting the model is no ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Algorithm

In mathematics and computer science, an algorithm () is a finite sequence of rigorous instructions, typically used to solve a class of specific Computational problem, problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can perform automated deductions (referred to as automated reasoning) and use mathematical and logical tests to divert the code execution through various routes (referred to as automated decision-making). Using human characteristics as descriptors of machines in metaphorical ways was already practiced by Alan Turing with terms such as "memory", "search" and "stimulus". In contrast, a Heuristic (computer science), heuristic is an approach to problem solving that may not be fully specified or may not guarantee correct or optimal results, especially in problem domains where there is no well-defined correct or optimal result. As an effective method, an algorithm ca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Learning Curve

A learning curve is a graphical representation of the relationship between how Skill, proficient people are at a task and the amount of experience they have. Proficiency (measured on the vertical axis) usually increases with increased experience (the horizontal axis), that is to say, the more someone, groups, companies or industries perform a task, the better their performance at the task.Compare: The common expression "a steep learning curve" is a misnomer suggesting that an activity is difficult to learn and that expending much effort does not increase proficiency by much, although a learning curve with a steep start actually represents rapid progress., see the "Discussions" section, Dr. Smith's remark about the usage of the term "steep learning curve": "First, semantics. A steep learning curve is one where you gain proficiency over a short number of trials. That means the curve is steep. I think semantically we are really talking about a prolonged or long learning curve. I kn ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, this i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Joint Probability Distribution

Given two random variables that are defined on the same probability space, the joint probability distribution is the corresponding probability distribution on all possible pairs of outputs. The joint distribution can just as well be considered for any given number of random variables. The joint distribution encodes the marginal distributions, i.e. the distributions of each of the individual random variables. It also encodes the conditional probability distributions, which deal with how the outputs of one random variable are distributed when given information on the outputs of the other random variable(s). In the formal mathematical setup of measure theory, the joint distribution is given by the pushforward measure, by the map obtained by pairing together the given random variables, of the sample space's probability measure. In the case of real-valued random variables, the joint distribution, as a particular multivariate distribution, may be expressed by a multivariate cumulativ ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Stability (learning Theory)

Stability, also known as algorithmic stability, is a notion in computational learning theory of how a machine learning, machine learning algorithm is perturbed by small changes to its inputs. A stable learning algorithm is one for which the prediction does not change much when the training data is modified slightly. For instance, consider a machine learning algorithm that is being trained to Handwriting recognition, recognize handwritten letters of the alphabet, using 1000 examples of handwritten letters and their labels ("A" to "Z") as a training set. One way to modify this training set is to leave out an example, so that only 999 examples of handwritten letters and their labels are available. A stable learning algorithm would produce a similar statistical classification, classifier with both the 1000-element and 999-element training sets. Stability can be studied for many types of learning problems, from Natural language processing, language learning to inverse problems in physic ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Leave-one-out Cross-validation

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistical analysis will generalize to an independent data set. Cross-validation is a resampling method that uses different portions of the data to test and train a model on different iterations. It is mainly used in settings where the goal is prediction, and one wants to estimate how accurately a predictive model will perform in practice. In a prediction problem, a model is usually given a dataset of ''known data'' on which training is run (''training dataset''), and a dataset of ''unknown data'' (or ''first seen'' data) against which the model is tested (called the validation dataset or ''testing set''). The goal of cross-validation is to test the model's ability to predict new data that was not used in estimating it, in order to flag problems like overfitting or selection bias and to give an insight on ho ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

L1 Norm

In mathematics, the spaces are function spaces defined using a natural generalization of the -norm for finite-dimensional vector spaces. They are sometimes called Lebesgue spaces, named after Henri Lebesgue , although according to the Bourbaki group they were first introduced by Frigyes Riesz . spaces form an important class of Banach spaces in functional analysis, and of topological vector spaces. Because of their key role in the mathematical analysis of measure and probability spaces, Lebesgue spaces are used also in the theoretical discussion of problems in physics, statistics, economics, finance, engineering, and other disciplines. Applications Statistics In statistics, measures of central tendency and statistical dispersion, such as the mean, median, and standard deviation, are defined in terms of metrics, and measures of central tendency can be characterized as solutions to variational problems. In penalized regression, "L1 penalty" and "L2 penalty" refer to penaliz ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |