
Free Energy Principle

The free energy principle is a mathematical principle in biophysics and cognitive science that provides a formal account of the representational capacities of physical systems: that is, why things that exist look as if they track properties of the systems to which they are coupled. It establishes that the dynamics of physical systems minimise a quantity known as surprisal (the negative log probability of some outcome) or, equivalently, its variational upper bound, called free energy. The principle is formally related to variational Bayesian methods and was originally introduced by Karl Friston as an explanation for embodied perception-action loops in neuroscience, where it is also known as active inference. The free energy principle models the behaviour of systems that are distinct from, but coupled to, another system (e.g., an embedding environment), where the degrees of freedom that implement the interface between the two systems are known as a Markov blanket.
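
The relation between surprisal and its variational upper bound can be checked numerically on a toy model. The sketch below is only an illustration under stated assumptions: the two-state generative model `JOINT`, its probabilities, and all function names are hypothetical and do not come from the excerpt. It computes the surprisal $-\log p(o)$ and the free energy $F = \sum_s q(s)\,[\log q(s) - \log p(o, s)]$ for a belief distribution $q$ over hidden states.

```python
import math

# Hypothetical joint distribution p(o, s) over an observation o and a hidden state s.
JOINT = {
    ("light", "day"): 0.45,
    ("light", "night"): 0.05,
    ("dark", "day"): 0.05,
    ("dark", "night"): 0.45,
}


def evidence(joint, o):
    """Marginal probability p(o), summing the joint over hidden states."""
    return sum(p for (obs, _state), p in joint.items() if obs == o)


def surprisal(joint, o):
    """Surprisal of observation o: -log p(o), in nats."""
    return -math.log(evidence(joint, o))


def posterior(joint, o):
    """Exact posterior p(s | o) obtained by normalising the joint."""
    z = evidence(joint, o)
    return {s: p / z for (obs, s), p in joint.items() if obs == o}


def free_energy(joint, q, o):
    """Variational free energy F = sum_s q(s) * (log q(s) - log p(o, s))."""
    return sum(
        qs * (math.log(qs) - math.log(joint[(o, s)]))
        for s, qs in q.items()
        if qs > 0.0
    )


if __name__ == "__main__":
    o = "light"
    flat_belief = {"day": 0.5, "night": 0.5}
    print("surprisal:", surprisal(JOINT, o))
    print("F (flat belief):", free_energy(JOINT, flat_belief, o))
    print("F (exact posterior):", free_energy(JOINT, posterior(JOINT, o), o))
```

In this toy setting the free energy is never below the surprisal, and the two coincide when $q$ equals the exact posterior, which is the sense in which free energy is an upper bound.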

Markov Blanket

In statistics and machine learning, when one wants to infer a random variable from a set of variables, usually a subset is enough, and the other variables are useless. Such a subset that contains all the useful information is called a Markov blanket. If a Markov blanket is minimal, meaning that it cannot drop any variable without losing information, it is called a Markov boundary. Identifying a Markov blanket or a Markov boundary helps to extract useful features. The terms Markov blanket and Markov boundary were coined by Judea Pearl in 1988. Markov blanket: A Markov blanket of a random variable $Y$ in a random variable set $\mathcal{S}=\{X_1,\ldots,X_n\}$ is any subset $\mathcal{S}_1$ of $\mathcal{S}$, conditioned on which the other variables are independent of $Y$: $Y \perp\!\!\!\perp \mathcal{S}\backslash\mathcal{S}_1 \mid \mathcal{S}_1$. It means that $\mathcal{S}_1$ contains at least all the information one needs to infer $Y$, where the variables in $\mathcal{S}\backslash\mathcal{S}_1$ are redundant. In general, a given Markov blank ...
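
The conditional-independence condition in the definition can be verified by brute force on a small discrete model. The following is a minimal sketch, assuming a hypothetical three-variable chain X → Z → Y with made-up probability tables; none of these names or numbers appear in the excerpt. In such a chain, {Z} should act as a Markov blanket of Y with respect to X, even though X and Y are dependent marginally.

```python
from itertools import product

# Hypothetical conditional probability tables for the chain X -> Z -> Y (binary variables).
P_X = {0: 0.3, 1: 0.7}
P_Z_GIVEN_X = {0: {0: 0.9, 1: 0.1}, 1: {0: 0.2, 1: 0.8}}
P_Y_GIVEN_Z = {0: {0: 0.6, 1: 0.4}, 1: {0: 0.1, 1: 0.9}}

INDEX = {"x": 0, "z": 1, "y": 2}


def joint():
    """Full joint table p(x, z, y) = p(x) p(z | x) p(y | z)."""
    return {
        (x, z, y): P_X[x] * P_Z_GIVEN_X[x][z] * P_Y_GIVEN_Z[z][y]
        for x, z, y in product((0, 1), repeat=3)
    }


def prob(table, **fixed):
    """Marginal probability of the assignment given as keyword arguments."""
    return sum(
        p
        for key, p in table.items()
        if all(key[INDEX[name]] == value for name, value in fixed.items())
    )


def z_shields_y_from_x(table, tol=1e-12):
    """Check p(y | x, z) == p(y | z) for every assignment, i.e. Y independent of X given Z."""
    for x, z, y in product((0, 1), repeat=3):
        with_x = prob(table, x=x, z=z, y=y) / prob(table, x=x, z=z)
        without_x = prob(table, z=z, y=y) / prob(table, z=z)
        if abs(with_x - without_x) > tol:
            return False
    return True


def x_and_y_independent(table, tol=1e-12):
    """Check p(x, y) == p(x) p(y) for every assignment (marginal independence)."""
    return all(
        abs(prob(table, x=x, y=y) - prob(table, x=x) * prob(table, y=y)) <= tol
        for x, y in product((0, 1), repeat=2)
    )


if __name__ == "__main__":
    table = joint()
    print("Y independent of X given Z:", z_shields_y_from_x(table))
    print("Y independent of X marginally:", x_and_y_independent(table))
```

Enumerating the full joint table only scales to a handful of variables; it is used here because it makes the definition directly checkable.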

Surprisal

In information theory, the information content, self-information, surprisal, or Shannon information is a basic quantity derived from the probability of a particular event occurring from a random variable. It can be thought of as an alternative way of expressing probability, much like odds or log-odds, but which has particular mathematical advantages in the setting of information theory. The Shannon information can be interpreted as quantifying the level of "surprise" of a particular outcome. As it is such a basic quantity, it also appears in several other settings, such as the length of a message needed to transmit the event given an optimal source coding of the random variable. The Shannon information is closely related to *entropy*, which is the expected value of the self-information of a random variable, quantifying how surprising the random variable is "on average". This is the average amount of self-information an observer would expect to gain about a random variable ...
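
As a small illustration of the two quantities described above, the sketch below computes the surprisal of a single outcome and the entropy of a distribution as the expected surprisal. The function names and the example distributions are assumptions made for this example; base-2 logarithms are used so that results are in bits.

```python
import math


def surprisal_bits(p):
    """Self-information -log2(p) of an outcome with probability p, in bits."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must lie in (0, 1]")
    return -math.log2(p)


def entropy_bits(dist):
    """Entropy as the expected surprisal of a distribution {outcome: probability}."""
    return sum(p * surprisal_bits(p) for p in dist.values() if p > 0.0)


if __name__ == "__main__":
    fair_coin = {"heads": 0.5, "tails": 0.5}
    skewed = {"a": 0.5, "b": 0.25, "c": 0.25}
    print("surprisal of a fair-coin outcome:", surprisal_bits(0.5))
    print("entropy of the fair coin:", entropy_bits(fair_coin))
    print("entropy of the skewed distribution:", entropy_bits(skewed))
```

Rare outcomes carry more surprisal than common ones, and a certain outcome (probability 1) carries none.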

Unconscious Inference

Unconscious inference (German: unbewusster Schluss), also referred to as unconscious conclusion, is a term of perceptual psychology coined in 1867 by the German physicist and polymath Hermann von Helmholtz to describe an involuntary, pre-rational and reflex-like mechanism which is part of the formation of visual impressions. While precursory notions have been identified in the writings of Thomas Hobbes, Robert Hooke, and Francis North (especially in connection with auditory perception) as well as in Francis Bacon's *Novum Organum*, Helmholtz's theory was long ignored or even dismissed by philosophy and psychology. It has since received new attention from modern research, and the work of recent scholars has approached Helmholtz's view. In the third and final volume of his *Handbuch der physiologischen Optik* (1856–67, translated as *Treatise on Physiological Optics* in 1920–25), Helmholtz discussed the psychological effects of visual perception. His first ...

Maximum Entropy Principle

The principle of maximum entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with largest entropy, in the context of precisely stated prior data (such as a proposition that expresses testable information). Another way of stating this: Take precisely stated prior data or testable information about a probability distribution function. Consider the set of all trial probability distributions that would encode the prior data. According to this principle, the distribution with maximal information entropy is the best choice. History: The principle was first expounded by E. T. Jaynes in two papers in 1957, where he emphasized a natural correspondence between statistical mechanics and information theory. In particular, Jaynes offered a new and very general rationale for why the Gibbsian method of statistical mechanics works. He argued that the entropy of statistical mechanics and the information entropy of informa ...
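
To make the recipe above concrete, the sketch below finds the maximum-entropy distribution over the faces of a die when the only testable information is its mean. This is an illustrative example under assumptions not taken from the excerpt: the function names are hypothetical, the constraint (a target mean) is chosen for simplicity, and the solution uses the standard exponential-family form $p_i \propto e^{\lambda v_i}$, with the multiplier $\lambda$ located by bisection.

```python
import math


def entropy_nats(probs):
    """Shannon entropy of a probability vector, in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def tilted_distribution(values, lam):
    """Distribution with p_i proportional to exp(lam * v_i), computed stably."""
    shift = max(lam * v for v in values)
    weights = [math.exp(lam * v - shift) for v in values]
    total = sum(weights)
    return [w / total for w in weights]


def maxent_with_mean(values, target_mean, lo=-50.0, hi=50.0, iters=200):
    """Maximum-entropy distribution on `values` whose mean equals `target_mean`.

    The mean of the tilted distribution increases monotonically with lam,
    so plain bisection on lam is sufficient.
    """
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        probs = tilted_distribution(values, mid)
        mean = sum(v * p for v, p in zip(values, probs))
        if mean < target_mean:
            lo = mid
        else:
            hi = mid
    return tilted_distribution(values, (lo + hi) / 2.0)


if __name__ == "__main__":
    faces = [1, 2, 3, 4, 5, 6]
    probs = maxent_with_mean(faces, 4.5)
    for face, p in zip(faces, probs):
        print(face, round(p, 4))
    print("entropy (nats):", entropy_nats(probs))
```

With a target mean of 3.5 the constraint carries no information beyond the fair die, and the procedure recovers the uniform distribution; any other distribution with the same mean has entropy no larger than the one returned.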

Embodied Cognition

Embodied cognition is the theory that many features of cognition, whether human or otherwise, are shaped by aspects of an organism's entire body. Sensory and motor systems are seen as fundamentally integrated with cognitive processing. The cognitive features include high-level mental constructs (such as concepts and categories) and performance on various cognitive tasks (such as reasoning or judgment). The bodily aspects involve the motor system, the perceptual system, the bodily interactions with the environment (situatedness), and the assumptions about the world built into the organism's functional structure. The embodied mind thesis challenges other theories, such as cognitivism, computationalism, and Cartesian dualism. It is closely related to the extended mind thesis, situated cognition, and enactivism. The modern version depends on insights drawn from up-to-date research in psychology, linguistics, cognitive science, dynamical systems, artificial intelligence, r ...

Synergetics (Haken)

Synergetics is an interdisciplinary science explaining the formation and self-organization of patterns and structures in open systems far from thermodynamic equilibrium. It was founded by Hermann Haken, inspired by laser theory. Haken's interpretation of the laser principles as self-organization of non-equilibrium systems paved the way, at the end of the 1960s, for the development of synergetics. One of his successful popular books is *Erfolgsgeheimnisse der Natur*, translated into English as *The Science of Structure: Synergetics*. Self-organization requires a 'macroscopic' system, consisting of many nonlinearly interacting subsystems. Whether self-organization takes place depends on the external control parameters (environment, energy fluxes). Order-parameter concept: Essential in synergetics is the order-parameter concept, which was originally introduced in the Ginzburg–Landau theory in order to describe phase transitions in thermodynamics. The order parameter concept is gene ...

Cybernetics

Cybernetics is a wide-ranging field concerned with circular causality, such as feedback, in regulatory and purposive systems. Cybernetics is named after an example of circular causal feedback, that of steering a ship, where the helmsperson maintains a steady course in a changing environment by adjusting their steering in continual response to the effect it is observed as having. Cybernetics is concerned with circular causal processes such as steering however they are embodied (Ashby, W. R. (1956). An Introduction to Cybernetics. London: Chapman & Hall, p. 1), including in ecological, technological, biological, cognitive, and social systems, and in the context of practical activities such as designing, learning, managing, conversation, and the practice of cybernetics itself. Cybernetics' transdisciplinary and "antidisciplinary" character has meant that it intersects with a number of other fields, leading to it having both wide influence and diverse interpretations. Cybernetics ...

Practopoiesis

An adaptive system is a set of interacting or interdependent entities, real or abstract, forming an integrated whole that together are able to respond to environmental changes or changes in the interacting parts, in a way analogous to either continuous physiological homeostasis or evolutionary adaptation in biology. Feedback loops represent a key feature of adaptive systems, such as ecosystems and individual organisms; or in the human world, communities, organizations, and families. Adaptive systems can be organized into a hierarchy. Artificial adaptive systems include robots with control systems that utilize negative feedback to maintain desired states. The law of adaptation: The law of adaptation may be stated informally as: ... Formally, the law can be defined as follows: Given a system $S$, we say that a physical event $E$ is a stimulus for the system $S$ if and only if the probability $P(S \rightarrow S', E)$ that the system suffers a change or is perturbed (in its elements or in i ...

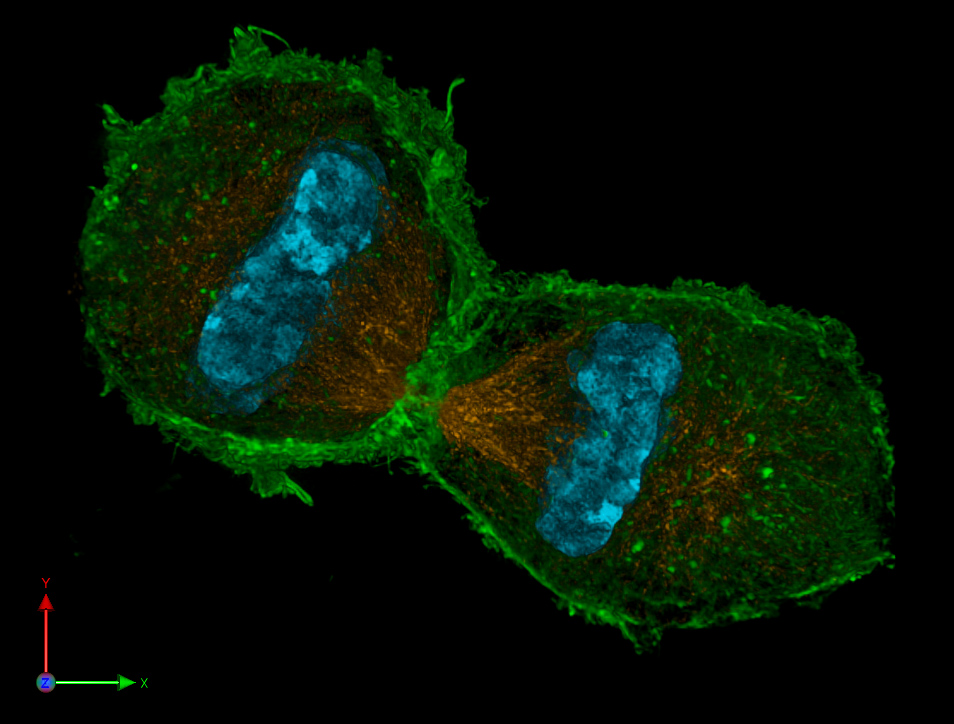

Autopoiesis

The term autopoiesis refers to a system capable of producing and maintaining itself by creating its own parts. The term was introduced in the 1972 publication *Autopoiesis and Cognition: The Realization of the Living* by Chilean biologists Humberto Maturana and Francisco Varela to define the self-maintaining chemistry of living cells. Since then the concept has also been applied to the fields of cognition, systems theory, architecture and sociology. Overview: In their 1972 book *Autopoiesis and Cognition*, Chilean biologists Maturana and Varela described how they invented the word autopoiesis. They explained that, ... They described the "space defined by an autopoietic system" as "self-contained", a space that "cannot be described by using dimensions that define another space. When we refer to our interactions with a concrete autopoietic system, however, we project this system on the space of our manipulations and make a description of this projection." Meaning: Auto ...

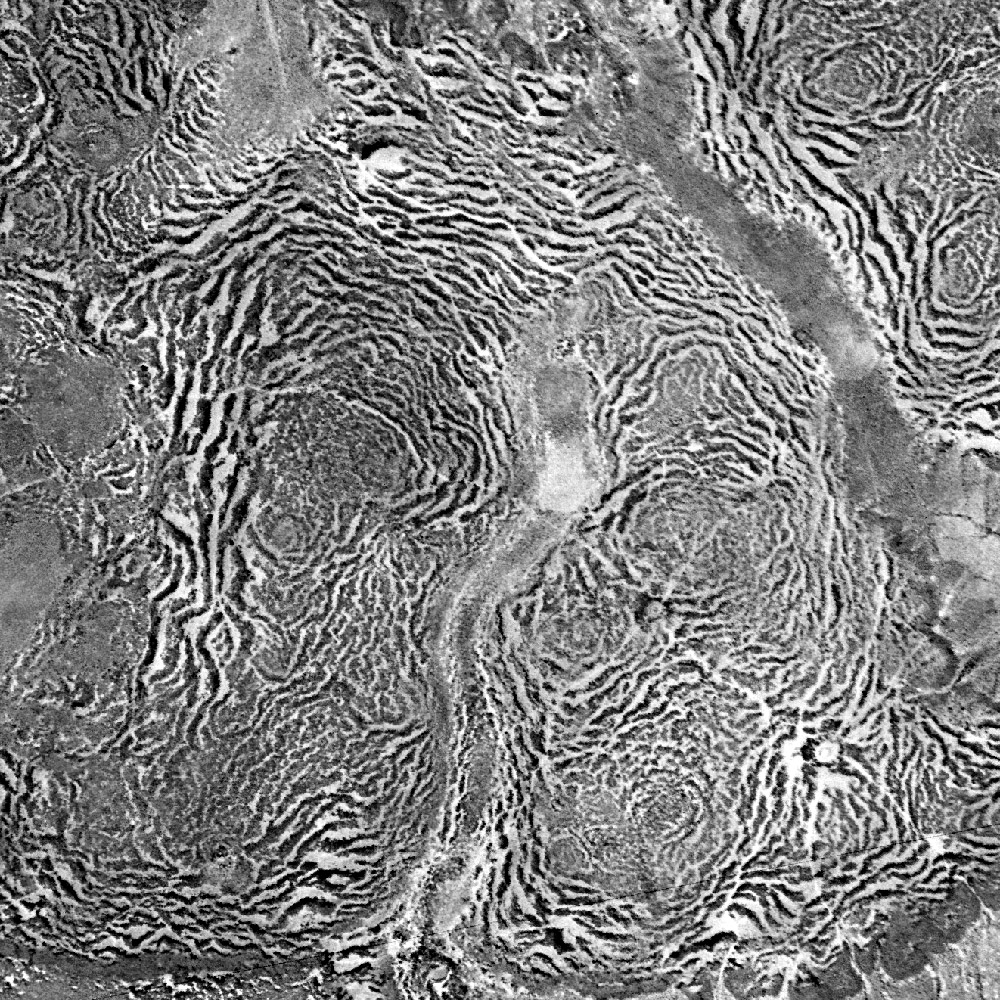

Pattern Formation

The science of pattern formation deals with the visible, (statistically) orderly outcomes of self-organization and the common principles behind similar patterns in nature. In developmental biology, pattern formation refers to the generation of complex organizations of cell fates in space and time. The role of genes in pattern formation is an aspect of morphogenesis, the creation of diverse anatomies from similar genes, now being explored in the science of evolutionary developmental biology or evo-devo. The mechanisms involved are well seen in the anterior-posterior patterning of embryos from the model organism *Drosophila melanogaster* (a fruit fly), one of the first organisms to have its morphogenesis studied, and in the eyespots of butterflies, whose development is a variant of the standard (fruit fly) mechanism. Patterns in nature: Examples of pattern formation can be found in biology, physics, and science, and can readily be simulated with computer graphics, as desc ...

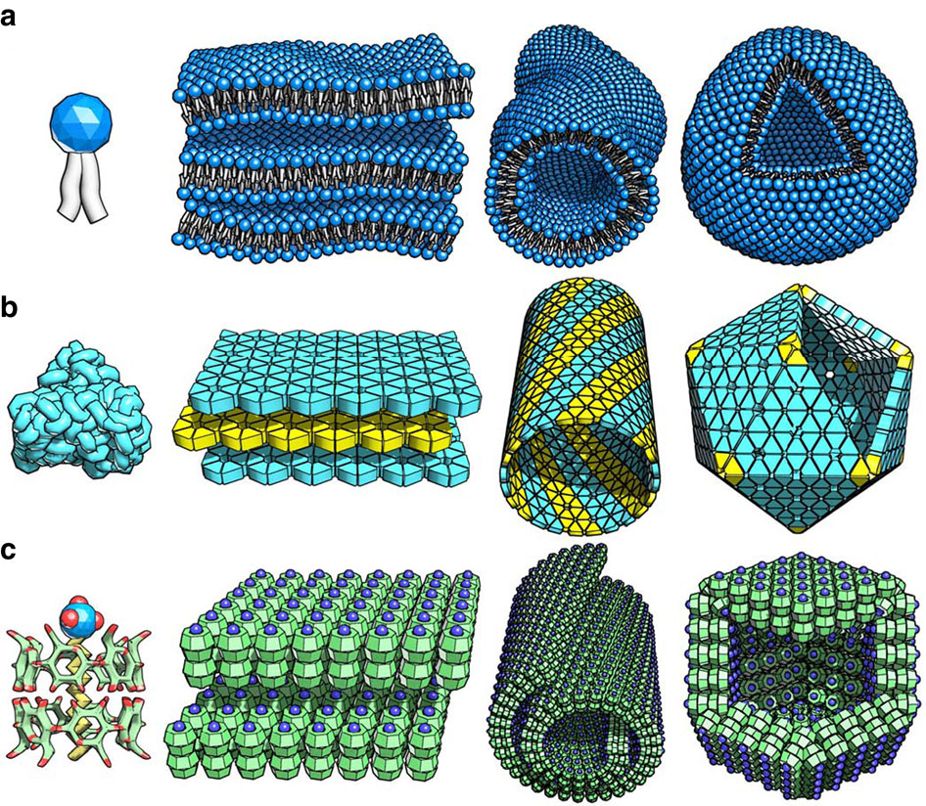

Self-assembly

Self-assembly is a process in which a disordered system of pre-existing components forms an organized structure or pattern as a consequence of specific, local interactions among the components themselves, without external direction. When the constitutive components are molecules, the process is termed molecular self-assembly. Self-assembly can be classified as either static or dynamic. In *static* self-assembly, the ordered state forms as a system approaches equilibrium, reducing its free energy. However, in *dynamic* self-assembly, patterns of pre-existing components organized by specific local interactions are not commonly described as "self-assembled" by scientists in the associated disciplines. These structures are better described as "self-organized", although these terms are often used interchangeably. Self-assembly in chemistry and materials science: Self-assembly in the classic sense can be defined as the spontaneous and reversible organization of molec ...

Good Regulator

The good regulator is a theorem conceived by Roger C. Conant and W. Ross Ashby that is central to cybernetics. It was originally stated as "every good regulator of a system must be a model of that system", but more accurately, every good regulator must contain a model of the system. That is, any regulator that is maximally simple among optimal regulators must behave as an image of that system under a homomorphism; while the authors sometimes say 'isomorphism', the mapping they construct is only a homomorphism. Theorem: This theorem is obtained by considering the entropy of the variation of the output of the controlled system, and shows that, under very general conditions, the entropy is minimized when there is a (deterministic) mapping $h: S \to R$ from the states of the system to the states of the regulator. The authors view this map $h$ as making the regulator a 'model' of the system. With regard to the brain, insofar as it is successful and efficient as a regulator for surv ...
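
The role of output entropy in the theorem can be illustrated with a toy system. The sketch below is not the authors' construction or proof; it assumes a hypothetical three-state system with uniformly distributed states, a hypothetical outcome rule `(s + r) % 3`, and made-up policy names. It compares the entropy of the outcome under a deterministic mapping from system states to regulator states with the entropy under a regulator that ignores the system.

```python
import math
from collections import Counter

STATES = (0, 1, 2)  # hypothetical system states, assumed equally likely


def outcome(s, r):
    """Hypothetical outcome produced jointly by system state s and regulator state r."""
    return (s + r) % 3


def outcome_entropy_bits(policy):
    """Entropy (bits) of the outcome when policy[s] maps regulator states to probabilities."""
    dist = Counter()
    for s in STATES:
        for r, p_r in policy[s].items():
            dist[outcome(s, r)] += p_r / len(STATES)
    return sum(-p * math.log2(p) for p in dist.values() if p > 0.0)


# Deterministic regulator: a mapping h(s) = (-s) mod 3 that always cancels the system state.
DETERMINISTIC = {s: {(-s) % 3: 1.0} for s in STATES}

# Regulator that ignores the system and picks its state uniformly at random.
BLIND = {s: {r: 1.0 / 3.0 for r in STATES} for s in STATES}


if __name__ == "__main__":
    print("deterministic mapping:", outcome_entropy_bits(DETERMINISTIC))
    print("blind regulator:", outcome_entropy_bits(BLIND))
```

In this example the deterministic mapping pins the outcome to a single value, so its entropy is zero, while the blind regulator leaves the outcome uniformly distributed over three values.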