
Explicitly Parallel Instruction Computing

Explicitly parallel instruction computing (EPIC) is a term coined in 1997 by the HP–Intel alliance to describe a computing paradigm that researchers had been investigating since the early 1980s. This paradigm is also called Independence architectures. It was the basis for Intel and HP's development of the Intel Itanium architecture, and HP later asserted that "EPIC" was merely an old term for the Itanium architecture. EPIC permits microprocessors to execute software instructions in parallel by using the compiler, rather than complex on-die circuitry, to control parallel instruction execution. This was intended to allow simple performance scaling without resorting to higher clock frequencies.

Roots in VLIW

By 1989, researchers at HP recognized that reduced instruction set computer (RISC) architectures were reaching a limit at one instruction per cycle. They began an investigation into a new archite ...

Itanium

Itanium is a discontinued family of 64-bit Intel microprocessors that implement the Intel Itanium architecture (formerly called IA-64). The Itanium architecture originated at Hewlett-Packard (HP), and was later jointly developed by HP and Intel. Launched in June 2001, the processors were initially marketed by Intel for enterprise servers and high-performance computing systems. In the concept phase, engineers said "we could run circles around PowerPC...we could kill the x86". Early predictions were that IA-64 would expand to lower-end servers, supplanting Xeon, and eventually penetrate into personal computers, ultimately supplanting reduced instruction set computing (RISC) and complex instruction set computing (CISC) architectures for all general-purpose applications. When first released in 2001 after a decade of development, Itanium's performance was disappointing compared to better-established RISC and CISC processors. Em ...

Memory Hierarchy

In computer architecture, the memory hierarchy separates computer storage into a hierarchy based on response time. Since response time, complexity, and capacity are related, the levels may also be distinguished by their performance and controlling technologies. Memory hierarchy affects performance in computer architectural design, algorithm predictions, and lower level programming constructs involving locality of reference. Designing for high performance requires considering the restrictions of the memory hierarchy, i.e. the size and capabilities of each component. Each of the various components can be viewed as part of a hierarchy of memories in which each member is typically smaller and faster than the next highest member of the hierarchy. To limit waiting by higher levels, a lower level will respond by filling a buffer and then signaling for activating the transfer. There are four major storage levels.
* Internal: processor registers and cache.
* Main: the system ...

Loop Unrolling

Loop unrolling, also known as loop unwinding, is a loop transformation technique that attempts to optimize a program's execution speed at the expense of its binary size, which is an approach known as space–time tradeoff. The transformation can be undertaken manually by the programmer or by an optimizing compiler. On modern processors, loop unrolling is often counterproductive, as the increased code size can cause more cache misses; cf. Duff's device. The goal of loop unwinding is to increase a program's speed by reducing or eliminating instructions that control the loop, such as pointer arithmetic and "end of loop" tests on each iteration; reducing branch penalties; as well as hiding latencies, including the delay in reading data from memory. To eliminate this computational overhead, loops can be re-written as a repeated sequence of similar independent statements. Loop unrolling is also part of certain formal verification techniques, in particular bounded model chec ...
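The rewrite into "a repeated sequence of similar independent statements" can be sketched as follows. This is only an illustration of the code shape, assuming an unroll factor of 4 and the hypothetical function names `sum_rolled` and `sum_unrolled_by_4`; it makes no claim about speed in Python, where the interpreter overhead dominates.

```python
def sum_rolled(values):
    """Baseline: one addition plus one loop test and index update per element."""
    total = 0
    i = 0
    while i < len(values):
        total += values[i]
        i += 1
    return total


def sum_unrolled_by_4(values):
    """Same result, but the loop test and index update run once per 4 elements."""
    n = len(values)
    total = 0
    i = 0
    limit = n - (n % 4)  # largest multiple of 4 that fits in n
    while i < limit:
        # Four copies of the loop body, with no loop-control work in between.
        total += values[i]
        total += values[i + 1]
        total += values[i + 2]
        total += values[i + 3]
        i += 4
    # Remainder loop handles the 0 to 3 elements left over.
    while i < n:
        total += values[i]
        i += 1
    return total
```

The remainder loop is what keeps the transformation correct when the trip count is not a multiple of the unroll factor; it is also part of the code-size cost mentioned above.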

Software Pipelining

In computer science, software pipelining is a technique used to optimize loops, in a manner that parallels hardware pipelining. Software pipelining is a type of out-of-order execution, except that the reordering is done by a compiler (or in the case of hand written assembly code, by the programmer) instead of the processor. Some computer architectures have explicit support for software pipelining, notably Intel's IA-64 architecture. It is important to distinguish software pipelining, which is a target code technique for overlapping loop iterations, from modulo scheduling, the currently most effective known compiler technique for generating software pipelined loops. Software pipelining has been known to assembly language programmers of machines with instruction-level parallelism since such architectures existed. Effective compiler generation of such code dates to the invention of modulo scheduling by Rau and Glaeser. Lam showed that special hardware is unnecessary for ...
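To make "overlapping loop iterations" concrete, here is a minimal sketch of a three-stage loop body (load, compute, store) restructured so that each pass of the loop works on three different iterations at once. The function name `scale_pipelined`, the computation `x * 2 + 1`, and the use of guard conditions in place of a separate prologue and epilogue are all assumptions made for illustration; Python executes the stages sequentially, so this shows the schedule only, not any parallel speedup.

```python
def scale_pipelined(src):
    """Compute dst[i] = src[i] * 2 + 1 using a software-pipelined schedule."""
    n = len(src)
    dst = [0] * n
    loaded = None    # value loaded for the previous iteration
    computed = None  # value computed for the iteration before that
    # n iterations take n + 2 steps: two extra steps drain the pipeline.
    for step in range(n + 2):
        # Stage 3 (store) works on iteration step - 2.
        if step >= 2:
            dst[step - 2] = computed
        # Stage 2 (compute) works on iteration step - 1.
        if 1 <= step <= n:
            computed = loaded * 2 + 1
        # Stage 1 (load) works on iteration step.
        if step < n:
            loaded = src[step]
    return dst
```

Within one step the three stages touch independent data, which is what would let a machine with enough functional units issue them together. The guards play the role of the ramp-up and drain phases.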

Register Window

In computer engineering, register windows are a feature which dedicates registers to a subroutine by dynamically aliasing a subset of internal registers to fixed, programmer-visible registers. Register windows are implemented to improve the performance of a processor by reducing the number of stack operations required for function calls and returns. One of the most influential features of the Berkeley RISC design, they were later implemented in instruction set architectures such as AMD Am29000, Intel i960, Sun Microsystems SPARC, and Intel Itanium.

General operation

Several sets of registers are provided for the different parts of the program. Registers are deliberately hidden from the programmer to force several subroutines to share processor resources. Rendering the registers invisible can be implemented efficiently; the CPU recognizes the movement from one part of the program to another during a procedure call. It is accomplished by one of a small number of instructions (p ...
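The aliasing of internal registers to fixed, programmer-visible names can be modeled with a base pointer into one large register array. The sketch below is a simplified, hypothetical model: the class name `WindowedRegisters`, the 8/8/8 split into "in", "local" and "out" registers, and the overlap of a caller's "out" registers with the callee's "in" registers are assumptions chosen for illustration, and window overflow simply raises an error instead of spilling to memory.

```python
class WindowedRegisters:
    IN, LOCAL, OUT = 8, 8, 8      # hypothetical window layout
    STRIDE = IN + LOCAL           # adjacent windows overlap by OUT registers

    def __init__(self, windows=4):
        self.phys = [0] * (windows * self.STRIDE + self.OUT)
        self.base = 0
        self.max_base = (windows - 1) * self.STRIDE

    def _index(self, name):
        """Map a visible name such as 'o0' or 'l3' to a physical slot."""
        kind, num = name[0], int(name[1:])
        if not 0 <= num < 8:
            raise ValueError(f"no such register: {name}")
        offset = {"i": 0, "l": self.IN, "o": self.IN + self.LOCAL}[kind]
        return self.base + offset + num

    def read(self, name):
        return self.phys[self._index(name)]

    def write(self, name, value):
        self.phys[self._index(name)] = value

    def call(self):
        """Procedure call: slide the window instead of pushing registers."""
        if self.base + self.STRIDE > self.max_base:
            raise OverflowError("window overflow (a real CPU would spill)")
        self.base += self.STRIDE

    def ret(self):
        """Procedure return: slide the window back."""
        if self.base == 0:
            raise IndexError("return without matching call")
        self.base -= self.STRIDE
```

A caller that writes an argument to `o0` and then executes `call()` lets the callee read it as `i0` without any memory traffic, while each procedure's `l` registers stay private to its window.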

Register Renaming

In computer architecture, register renaming is a technique that abstracts logical registers from physical registers. Every logical register has a set of physical registers associated with it. When a machine language instruction refers to a particular logical register, the processor transposes this name to one specific physical register on the fly. The physical registers are opaque and cannot be referenced directly but only via the canonical names. This technique is used to eliminate false data dependencies arising from the reuse of registers by successive instructions that do not have any real data dependencies between them. The elimination of these false data dependencies reveals more instruction-level parallelism in an instruction stream, which can be exploited by various and complementary techniques such as superscalar and out-of-order execution for better performance.

Problem approach ...
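A minimal sketch of the renaming step, assuming a hypothetical instruction format of `(destination, sources)` and an unbounded supply of physical registers named `p0`, `p1`, and so on. A real processor has a finite physical register file and reclaims entries, which this sketch omits.

```python
from itertools import count


def rename(program, logical_regs):
    """Rewrite logical register names to physical ones.

    program: list of (dest, (src, ...)) tuples using logical names.
    Every write gets a fresh physical register, so a later reuse of the
    same logical name no longer conflicts with earlier readers or writers.
    """
    fresh = count()
    # Initial mapping: each logical register starts in its own physical one.
    mapping = {reg: f"p{next(fresh)}" for reg in logical_regs}
    renamed = []
    for dest, srcs in program:
        # Sources are looked up first, so they see the most recent producer.
        phys_srcs = tuple(mapping[s] for s in srcs)
        # The destination is then bound to a brand-new physical register.
        mapping[dest] = f"p{next(fresh)}"
        renamed.append((mapping[dest], phys_srcs))
    return renamed


program = [
    ("r1", ("r2", "r3")),  # r1 = f(r2, r3)
    ("r4", ("r1",)),       # r4 = g(r1)   true dependency on the first write
    ("r1", ("r2",)),       # r1 = h(r2)   reuses r1: a false dependency
]
renamed = rename(program, ["r1", "r2", "r3", "r4"])
```

After renaming, the third instruction writes a different physical register from the one the second instruction reads, so only the true dependency between the first two instructions remains.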

Register File

A register file is an array of processor registers in a central processing unit (CPU). The instruction set architecture of a CPU will almost always define a set of registers which are used to stage data between memory and the functional units on the chip. The register file is part of the architecture and visible to the programmer, as opposed to the concept of transparent caches. In simpler CPUs, these architectural registers correspond one-for-one to the entries in a physical register file (PRF) within the CPU. More complicated CPUs use register renaming, so that the mapping of which physical entry stores a particular architectural register changes dynamically during execution. Modern integrated circuit-based register files are usually implemented by way of fast static RAMs with multiple ports. Such RAMs are distinguished by having dedicated read and write ports, whereas ordinary multiported SRAMs will usually read and write through the same ports. Register banking is th ...

Not A Thing (computing)

Not or NOT may also refer to:

Language
* Not, the general declarative form of "no", indicating a negation of a related statement that usually precedes
* ... Not!, a grammatical construction used as a contradiction, popularized in the early 1990s

Science and technology
* Negation, a unary operator in logic depicted as ~, ¬, or !
* Bitwise NOT, an operator used in computer programming
* NOT gate, a digital logic gate (commonly called an inverter)
* Nordic Optical Telescope, an astronomical telescope at Roque de los Muchachos Observatory, La Palma, Canary Islands

Other uses
* Nottingham railway station (station code NOT)
* Polish Federation of Engineering Associations (Naczelna Organizacja Techniczna)
* Not, Missouri, an uninc ...

Speculative Execution

Speculative execution is an optimization technique where a computer system performs some task that may not be needed. Work is done before it is known whether it is actually needed, so as to prevent a delay that would have to be incurred by doing the work after it is known that it is needed. If it turns out the work was not needed after all, most changes made by the work are reverted and the results are ignored. The objective is to provide more concurrency if extra resources are available. This approach is employed in a variety of areas, including branch prediction in pipelined processors, value prediction for exploiting value locality, prefetching memory and files, and optimistic concurrency control in database systems. Speculative multithreading is a special case of specu ...

Branch Predication

In computer architecture, predication is a feature that provides an alternative to conditional transfer of control, as implemented by conditional branch machine instructions. Predication works by having conditional (predicated) non-branch instructions associated with a predicate, a Boolean value used by the instruction to control whether the instruction is allowed to modify the architectural state or not. If the predicate specified in the instruction is true, the instruction modifies the architectural state; otherwise, the architectural state is unchanged. For example, a predicated move instruction (a conditional move) will only modify the destination if the predicate is true. Thus, instead of using a conditional branch to select an instruction or a sequence of instructions to execute based on the predicate that controls whether the branch occurs, the instructions to be executed are associated with that predicate, so that they will be executed, or not executed, bas ...
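The rule "modify the architectural state only if the predicate is true" can be sketched with a toy interpreter. The instruction format `(predicate, destination, compute)`, the state dictionary, and the names `execute`, `p_gt`, `p_le` and `always` are hypothetical and chosen only for illustration; they do not correspond to any real instruction set.

```python
def execute(program, state):
    """Run a straight-line program of predicated instructions.

    Every instruction is issued in order with no branching. Its result is
    written back only when its predicate is true; otherwise the state is
    left unchanged.
    """
    for predicate, dest, compute in program:
        result = compute(state)
        if state[predicate]:
            state[dest] = result
    return state


# Branch-free equivalent of:  m = a if a > b else b
max_program = [
    ("always", "p_gt", lambda s: s["a"] > s["b"]),   # compare sets a predicate
    ("always", "p_le", lambda s: not s["p_gt"]),     # and its complement
    ("p_gt", "m", lambda s: s["a"]),                 # move guarded by p_gt
    ("p_le", "m", lambda s: s["b"]),                 # move guarded by p_le
]


def predicated_max(a, b):
    state = {"always": True, "p_gt": False, "p_le": False, "a": a, "b": b, "m": None}
    return execute(max_program, state)["m"]
```

Both guarded moves are issued on every run, but exactly one of them takes effect, so the control flow never depends on the comparison.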

Instruction-level Parallelism

Instruction-level parallelism (ILP) is the parallel or simultaneous execution of a sequence of instructions in a computer program. More specifically, ILP refers to the average number of instructions run per step of this parallel execution.

Discussion

ILP must not be confused with concurrency. In ILP, there is a single specific thread of execution of a process. On the other hand, concurrency involves the assignment of multiple threads to a CPU's core in a strict alternation, or in true parallelism if there are enough CPU cores, ideally one core for each runnable thread. There are two approaches to instruction-level parallelism: hardware and software. Hardware-level ILP works upon dynamic parallelism, whereas software-level ILP works on static parallelism. Dynamic parallelism means that the processor decides at run time whic ...
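The "average number of instructions run per step" can be computed for a small program under idealized assumptions. The sketch below assumes unit latency, unlimited functional units, only true (read-after-write) dependencies, and each destination written once; the function name `ilp` and the `(dest, sources)` instruction format are hypothetical.

```python
def ilp(program):
    """Return instructions per step for an idealized parallel schedule.

    program: list of (dest, (src, ...)) tuples in program order.
    Each instruction runs one step after the latest of its producers;
    names that no earlier instruction writes are treated as ready inputs.
    """
    ready_step = {}  # value name -> step in which it is produced
    steps = 0
    for dest, srcs in program:
        step = 1 + max((ready_step.get(s, 0) for s in srcs), default=0)
        ready_step[dest] = step
        steps = max(steps, step)
    return len(program) / steps if steps else 0.0


example = [
    ("e", ("a", "b")),  # e = a + b
    ("f", ("c", "d")),  # f = c + d   independent of the first instruction
    ("m", ("e", "f")),  # m = e * f   must wait for both
]
```

Here the first two instructions share step 1 and the third runs in step 2, so `ilp(example)` gives 3 instructions over 2 steps, or 1.5.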

Stop Bit

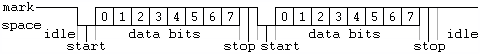

Asynchronous serial communication is a form of serial communication in which the communicating endpoints' interfaces are not continuously synchronized by a common clock signal. Synchronization (clock recovery) is done by a data-embedded signal: the data stream contains synchronization information in the form of start and stop signals set before and after each payload transmission. The start signal prepares the receiver for arrival of data and the stop signal resets its state to enable triggering of a new sequence. A common kind of start-stop transmission is ASCII over RS-232, for example for use in teletypewriter operation.

Origin

Mechanical teleprinters using 5-bit codes (see Baudot code) typically used a stop period of 1.5 bit times. Very early electromechanical teletypewriters (pre-1930) could require 2 stop bits to allow mechanical impression without buffering. Hardware which does not support fractional stop bits can communicate with a device that ...
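Start-stop framing can be sketched at the bit level. The example assumes the common arrangement of 8 data bits sent least-significant bit first, no parity, an idle line held at 1, a start bit of 0, and a whole number of stop bits at 1. The function names `frame_byte` and `decode_stream` are hypothetical, and fractional stop bits such as 1.5 are not modeled.

```python
def frame_byte(value, stop_bits=1):
    """Wrap one byte in a start bit (0) and stop bit(s) (1), data LSB first."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in 8 bits")
    data = [(value >> i) & 1 for i in range(8)]
    return [0] + data + [1] * stop_bits


def decode_stream(bits, stop_bits=1):
    """Recover bytes from a bit stream, resynchronizing on each start bit."""
    out = bytearray()
    frame_len = 1 + 8 + stop_bits
    i = 0
    while i < len(bits):
        if bits[i] == 1:
            i += 1  # idle line: keep waiting for a start bit
            continue
        frame = bits[i:i + frame_len]
        if len(frame) < frame_len or any(b != 1 for b in frame[9:]):
            raise ValueError(f"framing error at bit {i}")
        out.append(sum(bit << k for k, bit in enumerate(frame[1:9])))
        i += frame_len
    return bytes(out)


line = [1, 1] + frame_byte(0x41) + [1] + frame_byte(0x0A)
```

Because the receiver waits for a fresh start bit before every character, `decode_stream(line)` recovers both bytes even though an arbitrary idle gap separates them.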