Discretization Of Continuous Features

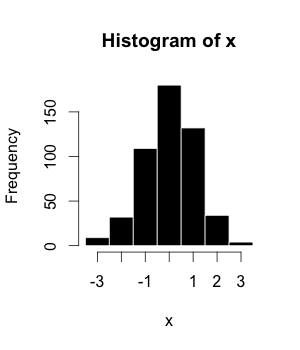

In statistics and machine learning, discretization refers to the process of converting or partitioning continuous attributes, features, or variables into discretized or nominal attributes, features, variables, or intervals. This can be useful when creating probability mass functions (formally, in density estimation). It is a form of discretization in general and also of binning, as in making a histogram. Whenever continuous data is discretized, there is always some amount of discretization error. The goal is to reduce that error to a level considered negligible for the modeling purposes at hand. Typically, data is discretized into K partitions of equal width (equal intervals) or into partitions that each hold K% of the total data (equal frequencies). Mechanisms for discretizing continuous data include Fayyad & Irani's MDL method, which uses mutual information to recursively define the best bins, as well as CAIM, CACC, Ameva, and many others. Many machine learning algorithms are known to produce better models by d ...
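
The two common partitioning schemes mentioned above, equal width and equal frequency, can be sketched roughly as follows. This is a minimal illustration, not a reference implementation: the function names, the sample data, and the choice of half-open intervals with a closed last bin are all assumptions made for the example.

```python
from bisect import bisect_right


def equal_width_edges(values, k):
    """Return k+1 edges that split [min, max] into k intervals of equal width."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    return [lo + i * width for i in range(k)] + [hi]


def equal_frequency_edges(values, k):
    """Return k+1 edges so that each interval holds roughly len(values)/k points."""
    ordered = sorted(values)
    n = len(ordered)
    inner = [ordered[(i * n) // k] for i in range(1, k)]
    return [ordered[0]] + inner + [ordered[-1]]


def assign_bins(values, edges):
    """Map each value to a bin index in 0..k-1.

    Bins are half-open [edge_i, edge_{i+1}); the last bin also includes the maximum.
    """
    inner = edges[1:-1]
    return [bisect_right(inner, v) for v in values]


# Hypothetical data with one outlier, to show how the two schemes differ.
data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 100.0]
width_bins = assign_bins(data, equal_width_edges(data, 4))
freq_bins = assign_bins(data, equal_frequency_edges(data, 4))
print(width_bins)  # [0, 0, 0, 0, 0, 0, 0, 3]
print(freq_bins)   # [0, 0, 1, 1, 2, 2, 3, 3]
```

With the outlier present, equal-width binning places almost every point in the first bin, while equal-frequency binning spreads the points evenly across bins.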

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ...

Continuous Function

In mathematics, a continuous function is a function such that a continuous variation (that is, a change without jump) of the argument induces a continuous variation of the value of the function. This means that there are no abrupt changes in value, known as discontinuities. More precisely, a function is continuous if arbitrarily small changes in its value can be assured by restricting to sufficiently small changes of its argument. A discontinuous function is a function that is not continuous. Up until the 19th century, mathematicians largely relied on intuitive notions of continuity, and considered only continuous functions. The epsilon–delta definition of a limit was introduced to formalize the definition of continuity. Continuity is one of the core concepts of calculus and mathematical analysis, where arguments and values of functions are real and complex numbers. The concept has been generalized to functions between metric spaces and between topological spaces. The latter are t ...

Continuity Correction

In probability theory, a continuity correction is an adjustment that is made when a discrete distribution is approximated by a continuous distribution. Examples: Binomial. If a random variable X has a binomial distribution with parameters n and p, i.e., X is distributed as the number of "successes" in n independent Bernoulli trials with probability p of success on each trial, then P(X ≤ x) = P(X ...
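
As a rough illustration of the idea, the sketch below compares the exact binomial probability P(X ≤ x) with a normal approximation, with and without a continuity correction. The half-unit shift used here is the standard textbook form of the correction and is an assumption on our part, since the excerpt above is cut off before stating it; the function names and the values of n, p, and x are likewise illustrative only.

```python
from math import comb, erf, sqrt


def binomial_cdf(x, n, p):
    """Exact P(X <= x) for X ~ Binomial(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(x + 1))


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def normal_approx_cdf(x, n, p, correction=True):
    """Normal approximation to P(X <= x); optionally shift x by 0.5 (assumed correction)."""
    mu = n * p
    sigma = sqrt(n * p * (1 - p))
    shift = 0.5 if correction else 0.0
    return normal_cdf((x + shift - mu) / sigma)


n, p, x = 20, 0.5, 8  # hypothetical example values
exact = binomial_cdf(x, n, p)
plain = normal_approx_cdf(x, n, p, correction=False)
corrected = normal_approx_cdf(x, n, p, correction=True)
print(f"exact={exact:.4f} plain={plain:.4f} corrected={corrected:.4f}")
```

For these values the corrected approximation lands much closer to the exact probability than the uncorrected one.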

Density Estimation

In statistics, probability density estimation or simply density estimation is the construction of an estimate, based on observed data, of an unobservable underlying probability density function. The unobservable density function is thought of as the density according to which a large population is distributed; the data are usually thought of as a random sample from that population. A variety of approaches to density estimation are used, including Parzen windows and a range of data clustering techniques, including vector quantization. The most basic form of density estimation is a rescaled histogram. Example: We will consider records of the incidence of diabetes. The following is quoted verbatim from the data set description: A population of women who were at least 21 years old, of Pima Indian heritage and living near Phoenix, Arizona, was tested for diabetes mellitus according to World Health Organization criteria. The data were collected by the US National Insti ...
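
A Parzen-window estimate, one of the approaches named above, might look something like the following sketch. It assumes a Gaussian kernel and a hand-picked bandwidth; the sample is made up for illustration and is not the diabetes data set, and the function name is hypothetical.

```python
from math import exp, pi, sqrt


def parzen_density(x, sample, bandwidth):
    """Gaussian Parzen-window density estimate at x (kernel choice is an assumption)."""
    norm = 1.0 / (len(sample) * bandwidth * sqrt(2.0 * pi))
    return norm * sum(exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in sample)


# Hypothetical observations: a cluster around 2 plus one isolated point.
sample = [1.0, 1.2, 1.9, 2.1, 2.3, 5.0]
bandwidth = 0.5

# Evaluate on a grid from -5 to 12 and check that the estimate integrates to about 1.
step = 0.05
grid = [i * step for i in range(-100, 241)]
area = sum(parzen_density(g, sample, bandwidth) for g in grid) * step
print(round(area, 3))
print(parzen_density(2.0, sample, bandwidth) > parzen_density(4.0, sample, bandwidth))
```

The bandwidth controls smoothness in much the same way that bin width does for a histogram: smaller values follow the sample more closely, larger values smooth it out.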

Conditional Random Field

Conditional random fields (CRFs) are a class of statistical modeling methods often applied in pattern recognition and machine learning and used for structured prediction. Whereas a classifier predicts a label for a single sample without considering "neighbouring" samples, a CRF can take context into account. To do so, the predictions are modelled as a graphical model, which represents the presence of dependencies between the predictions. What kind of graph is used depends on the application. For example, in natural language processing, "linear chain" CRFs are popular, for which each prediction is dependent only on its immediate neighbours. In image processing, the graph typically connects locations to nearby and/or similar locations to enforce that they receive similar predictions. Other examples where CRFs are used are: labeling or parsing of sequential data for natural language processing or biological sequences, part-of-speech tagging, shallow parsing, named entity recog ...

Mutual Information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" (in units such as shannons (bits), nats or hartleys) obtained about one random variable by observing the other random variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the expected "amount of information" held in a random variable. Not limited to real-valued random variables and linear dependence like the correlation coefficient, MI is more general and determines how different the joint distribution of the pair (X, Y) is from the product of the marginal distributions of X and Y. MI is the expected value of the pointwise mutual information (PMI). The quantity was defined and analyzed by Claude Shannon in his landmark paper "A Mathemat ...
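
The comparison between the joint distribution and the product of the marginals can be made concrete with a small sketch. It assumes discrete variables whose joint probability mass function is given as a dictionary; the function name and the two toy distributions are illustrative only.

```python
from math import log2


def mutual_information(joint):
    """Mutual information in bits for a joint pmf given as {(x, y): probability}."""
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0.0) + p  # marginal distribution of X
        py[y] = py.get(y, 0.0) + p  # marginal distribution of Y
    # Expected value of the pointwise mutual information log2(p(x,y) / (p(x) p(y))).
    return sum(
        p * log2(p / (px[x] * py[y]))
        for (x, y), p in joint.items()
        if p > 0
    )


# Two independent fair bits: the joint equals the product of the marginals.
independent = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25}
# Two identical fair bits: observing one reveals the other completely.
identical = {(0, 0): 0.5, (1, 1): 0.5}

print(mutual_information(independent))  # 0.0
print(mutual_information(identical))    # 1.0
```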

Usama Fayyad

Osama most commonly refers to Osama bin Laden (1957–2011), the founder of al-Qaeda. Osama or Usama may also refer to:

Film
* Osama (film), a 2003 film made in Afghanistan
* Being Osama, a 2004 documentary film of six men named Osama
* Main Osama, an upcoming Indian film

Other uses
* Osama (name)
* "Osama" (song), 2021 single by Zakes Bantwini
* Dinner With Osama, collection of short stories by Marilyn Krysl
* Osama (novel), a World Fantasy Award-winning novel by Lavie Tidhar

See also
* Ōsama
* Ōsama Game, a 2011 Japanese horror film directed by Norio Tsuruta, based on the five-volume cell phone novel by Nobuaki Kanazawa (pen name: Pakkuncho)
* Osamu

Conceptual Model

A conceptual model is a representation of a system. It consists of concepts used to help people know, understand, or simulate a subject the model represents. In contrast, physical models are physical objects, such as a toy model that may be assembled and made to work like the object it represents. The term may refer to models that are formed after a conceptualization or generalization process. Conceptual models are often abstractions of things in the real world, whether physical or social. Semantic studies are relevant to various stages of concept formation. Semantics is basically about concepts, the meaning that thinking beings give to various elements of their experience. Overview: Models of concepts and models that are conceptual. The term "conceptual model" is ambiguous. It could mean "a model of concept" or it could mean "a model that is conceptual." A distinction can be made between what models are and what models are made of. With the exception of iconic mode ...

Discretization Error

In numerical analysis, computational physics, and simulation, discretization error is the error resulting from the fact that a function of a continuous variable is represented in the computer by a finite number of evaluations, for example, on a lattice. Discretization error can usually be reduced by using a more finely spaced lattice, with an increased computational cost. Examples: Discretization error is the principal source of error in methods of finite differences and the pseudo-spectral method of computational physics. When we define the derivative of $f(x)$ as $f'(x) = \lim_{h \to 0} \frac{f(x+h) - f(x)}{h}$ or $f'(x) \approx \frac{f(x+h) - f(x)}{h}$, where $h$ is a finitely small number, the difference between the first formula and this approximation is known as discretization error. Related phenomena: In signal processing, the analog of discretization is sampling, and results in no loss if the conditions of the sampling theorem are satisfied, otherwise the resulting error is called aliasing. Discretization error, which ar ...
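
The finite-difference example can be checked numerically. The sketch below approximates the derivative of sin at x = 1 with a forward difference and reports how far the result is from the exact value cos(1) for a few step sizes; the test function, the evaluation point, and the step sizes are arbitrary choices for illustration.

```python
from math import cos, sin


def forward_difference(f, x, h):
    """Approximate f'(x) by (f(x + h) - f(x)) / h for a finite step h."""
    return (f(x + h) - f(x)) / h


x = 1.0
exact = cos(x)  # exact derivative of sin at x

# Discretization error for progressively finer steps.
errors = {h: abs(forward_difference(sin, x, h) - exact) for h in (0.1, 0.01, 0.001)}
for h, err in errors.items():
    print(f"h={h:<6} error={err:.6f}")
```

For this scheme the error shrinks roughly in proportion to h, which is the pattern the printed values should show. Very small steps eventually run into floating-point rounding, a separate source of error from discretization.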

Histogram

A histogram is an approximate representation of the distribution of numerical data. The term was first introduced by Karl Pearson. To construct a histogram, the first step is to "bin" (or "bucket") the range of values, that is, divide the entire range of values into a series of intervals, and then count how many values fall into each interval. The bins are usually specified as consecutive, non-overlapping intervals of a variable. The bins (intervals) must be adjacent and are often (but not required to be) of equal size. If the bins are of equal size, a bar is drawn over the bin with height proportional to the frequency, i.e. the number of cases in each bin. A histogram may also be normalized to display "relative" frequencies showing the proportion of cases that fall into each of several categories, with the sum of the heights equaling 1. ...
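
The construction described above (divide the range into adjacent intervals, count the values in each, then optionally normalize) can be sketched as follows. The values and bin edges are hypothetical, and treating the last bin as closed on the right is a convention chosen for this example.

```python
def histogram(values, edges):
    """Count how many values fall into each bin defined by consecutive edges.

    Bins are half-open [edges[i], edges[i+1]); the last bin also includes edges[-1].
    """
    counts = [0] * (len(edges) - 1)
    last = len(counts) - 1
    for v in values:
        for i in range(len(counts)):
            in_bin = edges[i] <= v < edges[i + 1]
            at_right_end = i == last and v == edges[-1]
            if in_bin or at_right_end:
                counts[i] += 1
                break
    return counts


values = [0.5, 1.5, 1.7, 2.2, 2.9, 3.0, 3.5, 4.0]  # hypothetical data
edges = [0.0, 1.0, 2.0, 3.0, 4.0]                  # four equal-size bins

counts = histogram(values, edges)
relative = [c / len(values) for c in counts]  # normalized heights summing to 1
print(counts)    # [1, 2, 2, 3]
print(relative)  # [0.125, 0.25, 0.25, 0.375]
```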

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that "learn", that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making pred ...