
Data Mart

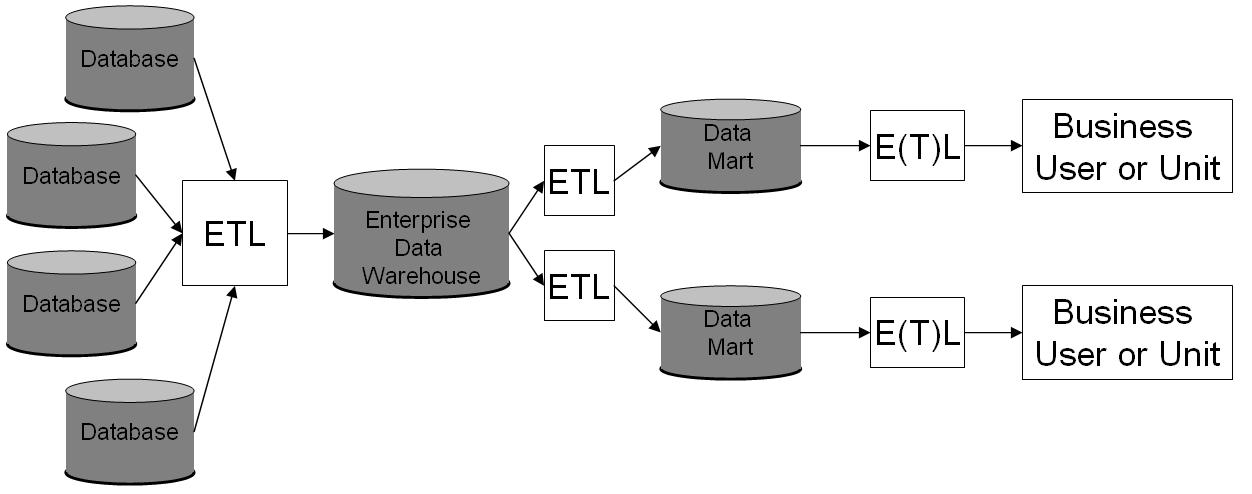

A data mart is a structure/access pattern specific to data warehouse environments, used to retrieve client-facing data. The data mart is a subset of the data warehouse and is usually oriented to a specific business line or team. Whereas data warehouses have an enterprise-wide scope, the information in data marts pertains to a single department. In some deployments, each department or business unit is considered the owner of its data mart, including all the hardware, software and data. This enables each department to isolate the use, manipulation and development of its data. In other deployments where conformed dimensions are used, this business-unit ownership will not hold true for shared dimensions such as customer and product. Warehouses and data marts are built because the information in the database is not organized in a way that makes it readily accessible. This organization requires queries that are too complicated, difficult to access or resource intensive. ...
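The idea of a mart as a department-specific subset of the warehouse can be illustrated with a minimal sketch. The table name `warehouse_sales`, the view name `marketing_mart`, the columns, and the sample rows are all hypothetical, and a real mart is often a separate physical store rather than a view; this only shows the subsetting idea using Python's standard `sqlite3` module.

```python
import sqlite3

# In-memory database standing in for the warehouse (illustrative only).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE warehouse_sales (
        department TEXT,
        customer   TEXT,
        amount     REAL
    );
    INSERT INTO warehouse_sales VALUES
        ('marketing', 'acme',   120.0),
        ('finance',   'acme',    75.5),
        ('marketing', 'globex',  40.0);

    -- The "mart": a slice of the warehouse for a single department.
    CREATE VIEW marketing_mart AS
        SELECT customer, amount
        FROM warehouse_sales
        WHERE department = 'marketing';
""")

# Consumers of the mart see only their department's rows.
mart_rows = conn.execute(
    "SELECT customer, amount FROM marketing_mart ORDER BY customer"
).fetchall()
print(mart_rows)
```

Queries against the view stay simple because the department filter is applied once, in the mart definition, rather than in every report.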

Data Warehouse Overview

In the pursuit of knowledge, data is a collection of discrete values that convey information, describing quantity, quality, fact, statistics, other basic units of meaning, or simply sequences of symbols that may be further interpreted. A datum is an individual value in a collection of data. Data is usually organized into structures such as tables that provide additional context and meaning, and which may themselves be used as data in larger structures. Data may be used as variables in a computational process. Data may represent abstract ideas or concrete measurements. Data is commonly used in scientific research, economics, and in virtually every other form of human organizational activity. Examples of data sets include price indices (such as the consumer price index), unemployment rates, literacy rates, and census data. In this context, data represents the raw facts and figures from which useful information can be extracted. ...

Response Time (technology)

In technology, response time is the time a system or functional unit takes to react to a given input. In computing, response time is the total amount of time it takes to respond to a request for service. That service can be anything from a memory fetch, to a disk I/O, to a complex database query, or loading a full web page. Ignoring transmission time for a moment, the response time is the sum of the service time and wait time. The service time is the time it takes to do the work you requested. For a given request the service time varies little as the workload increases: to do X amount of work it always takes X amount of time. The wait time is how long the request had to wait in a queue before being serviced, and it varies from zero, when no waiting is required, to a large multiple of the service time, when many requests are already in the queue and have to be serviced first. With basic queueing theory math you can calculate how the average wait time increases as the device providing ...
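To make the relationship between service time, wait time and response time concrete, here is a small sketch. It assumes an M/M/1 queue (a single server with random arrivals and service times), which the excerpt does not name; under that assumption the mean wait is the service time multiplied by ρ/(1 − ρ), where ρ is the utilization. The function name `mm1_times` is made up for this example.

```python
def mm1_times(service_time, utilization):
    """Return (mean wait time, mean response time) for an M/M/1 queue.

    Assumes a single server; utilization must be in [0, 1).
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    # Mean time spent queueing before service starts.
    wait = service_time * utilization / (1 - utilization)
    # Response time is the sum of service time and wait time.
    return wait, service_time + wait


for rho in (0.0, 0.5, 0.9):
    wait, response = mm1_times(1.0, rho)
    print(f"utilization={rho:.1f} wait={wait:.2f} response={response:.2f}")
```

The service time stays fixed in every call; only the wait term grows as utilization approaches 1, which matches the description above of wait time ranging from zero to a large multiple of the service time.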

Data Warehouse

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in a single place, which is used for creating analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from the operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system.

The typical extract, transform, load (ETL)-based data warehouse uses ...
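The extract, transform, load sequence mentioned above can be sketched in a few lines. Everything specific here is hypothetical: the sample records, the cleansing rule (drop rows whose amount is not numeric, normalize customer names) and the target table `fact_amounts`. A production pipeline would read from real operational systems rather than an in-memory list.

```python
import sqlite3

# Stand-in for rows pulled from operational systems (illustrative data).
RAW_ROWS = [
    {"source": "sales", "customer": " Acme ", "amount": "120.50"},
    {"source": "marketing", "customer": "globex", "amount": "n/a"},
    {"source": "sales", "customer": "Initech", "amount": "15"},
]


def extract():
    """Extract: fetch raw records from the source."""
    return list(RAW_ROWS)


def transform(rows):
    """Transform: cleanse the data before it reaches the warehouse."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows that fail the quality check
        cleaned.append((row["source"], row["customer"].strip().lower(), amount))
    return cleaned


def load(conn, rows):
    """Load: write the cleansed rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_amounts "
        "(source TEXT, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO fact_amounts VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract()))
loaded = conn.execute(
    "SELECT customer, amount FROM fact_amounts ORDER BY customer"
).fetchall()
print(loaded)
```

In an ELT variant the raw rows would be loaded first and the cleansing step would run inside the target system afterwards.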

Wiley (publisher)

John Wiley & Sons, Inc., commonly known as Wiley, is an American multinational publishing company founded in 1807 that focuses on academic publishing and instructional materials. The company produces books, journals, and encyclopedias, in print and electronically, as well as online products and services, training materials, and educational materials for undergraduate, graduate, and continuing education students.

The company was established in 1807 when Charles Wiley opened a print shop in Manhattan. The company was the publisher of 19th century American literary figures like James Fenimore Cooper, Washington Irving, Herman Melville, and Edgar Allan Poe, as well as of legal, religious, and other non-fiction titles. The firm took its current name in 1865. Wiley later shifted its focus to scientific, technical, and engineering subject areas, abandoning its literary interests. Wiley's son John (born in Flatbush, New York, October 4, 1808; died in East Orange, New Jer ...

Ralph Kimball

Ralph Kimball (born July 18, 1944) is an author on the subject of data warehousing and business intelligence. He is one of the original architects of data warehousing and is known for long-term convictions that data warehouses must be designed to be understandable and fast. His bottom-up methodology, also known as dimensional modeling or the Kimball methodology, is one of the two main data warehousing methodologies, alongside that of Bill Inmon. He is the principal author of the best-selling books The Data Warehouse Toolkit, The Data Warehouse Lifecycle Toolkit, The Data Warehouse ETL Toolkit and The Kimball Group Reader, published by Wiley and Sons.

After receiving a Ph.D. in 1973 from Stanford University in electrical engineering (specializing in man-machine systems), Ralph joined the Xerox Palo Alto Research Center (PARC). At PARC Ralph was a principal designer of the Xerox Star Workstation, the first commercial product to use mice, icons and windows. Kimball the ...

Data Inconsistency

In database systems, consistency (or correctness) refers to the requirement that any given database transaction must change affected data only in allowed ways. Any data written to the database must be valid according to all defined rules, including constraints, cascades, triggers, and any combination thereof. This does not guarantee correctness of the transaction in all ways the application programmer might have wanted (that is the responsibility of application-level code) but merely that any programming errors cannot result in the violation of any defined database constraints (C. J. Date, SQL and Relational Theory: How to Write Accurate SQL Code, 2nd edition, O'Reilly Media, Inc., 2012, p. 180). Consistency can also be understood to mean that, after a successful write, update or delete of a record, any read request immediately receives the latest value of that record.

Consistency is one of the four guarantees that define ACID transactions; however, significant ...
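As a hedged illustration of a database refusing a write that would violate a defined rule, the sketch below uses a `CHECK` constraint in SQLite through Python's standard `sqlite3` module. The `accounts` table, the non-negative balance rule and the `withdraw` helper are hypothetical and chosen only to show the mechanism.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# The defined rule: a balance may never be negative.
conn.execute(
    "CREATE TABLE accounts ("
    " id INTEGER PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.execute("INSERT INTO accounts VALUES (1, 100)")
conn.commit()


def withdraw(conn, account_id, amount):
    """Try to withdraw; return False if the constraint rejects the change."""
    try:
        # 'with conn' commits on success and rolls back on an exception.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, account_id),
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balance(conn, account_id):
    row = conn.execute(
        "SELECT balance FROM accounts WHERE id = ?", (account_id,)
    ).fetchone()
    return row[0]


print(withdraw(conn, 1, 30), balance(conn, 1))
print(withdraw(conn, 1, 500), balance(conn, 1))
```

The second withdrawal is a programming error from the application's point of view, yet the stored data remains valid because the database enforces the constraint itself.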

Data Duplication

In computing, data deduplication is a technique for eliminating duplicate copies of repeating data. Successful implementation of the technique can improve storage utilization, which may in turn lower capital expenditure by reducing the overall amount of storage media required to meet storage capacity needs. It can also be applied to network data transfers to reduce the number of bytes that must be sent. The deduplication process requires comparison of data 'chunks' (also known as 'byte patterns') which are unique, contiguous blocks of data. These chunks are identified and stored during a process of analysis, and compared to other chunks within existing data. Whenever a match occurs, the redundant chunk is replaced with a small reference that points to the stored chunk. Given that the same byte pattern may occur dozens, hundreds, or even thousands of times (the match frequency is dependent on the chunk size), the amount of data that must be stored or transferred can be greatly reduc ...
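The chunk-and-reference process described above can be sketched as follows. This is a simplified illustration, not how any particular product works: it assumes fixed-size chunks and uses a SHA-256 digest as the "small reference", treating equal digests as equal chunks. The function names and the tiny chunk size are chosen for readability only.

```python
import hashlib


def deduplicate(data, chunk_size=4):
    """Split data into fixed-size chunks and store each unique chunk once.

    Returns (store, refs): store maps digest -> chunk bytes, and refs is
    the ordered list of digests needed to rebuild the original data.
    """
    store = {}
    refs = []
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        # A repeated chunk is not stored again; only its reference is kept.
        store.setdefault(digest, chunk)
        refs.append(digest)
    return store, refs


def restore(store, refs):
    """Rebuild the original bytes by following the references."""
    return b"".join(store[digest] for digest in refs)


data = b"abcdabcdabcdxyz"
store, refs = deduplicate(data)
print(len(refs), "chunks referenced,", len(store), "chunks stored")
```

Changing `chunk_size` changes how often chunks match, which is the dependence on chunk size noted in the text.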

Scalability

Scalability is the property of a system to handle a growing amount of work by adding resources to the system. In an economic context, a scalable business model implies that a company can increase sales given increased resources. For example, a package delivery system is scalable because more packages can be delivered by adding more delivery vehicles. However, if all packages had to first pass through a single warehouse for sorting, the system would not be as scalable, because one warehouse can handle only a limited number of packages. In computing, scalability is a characteristic of computers, networks, algorithms, networking protocols, programs and applications. An example is a search engine, which must support increasing numbers of users, and the number of topics it indexes. Webscale is a computer architectural approach that brings the capabilities of large-scale cloud computing companies into enterprise data centers. In mathematics, scalability mostly refers to closure u ...

Computer

A computer is a machine that can be programmed to carry out sequences of arithmetic or logical operations (computation) automatically. Modern digital electronic computers can perform generic sets of operations known as programs. These programs enable computers to perform a wide range of tasks. A computer system is a nominally complete computer that includes the hardware, operating system (main software), and peripheral equipment needed and used for full operation. This term may also refer to a group of computers that are linked and function together, such as a computer network or computer cluster. A broad range of industrial and consumer products use computers as control systems. Simple special-purpose devices like microwave ovens and remote controls are included, as are factory devices like industrial robots and computer-aided design, as well as general-purpose devi ...

Online Analytical Processing

Online analytical processing, or OLAP, is an approach to answering multi-dimensional analytical (MDA) queries swiftly in computing. OLAP is part of the broader category of business intelligence, which also encompasses relational databases, report writing and data mining. Typical applications of OLAP include business reporting for sales, marketing, management reporting, business process management (BPM), budgeting and forecasting, financial reporting and similar areas, with new applications emerging, such as agriculture. The term OLAP was created as a slight modification of the traditional database term online transaction processing (OLTP). OLAP tools enable users to analyze multidimensional data interactively from multiple perspectives. OLAP consists of three basic analytical operations: consolidation (roll-up), drill-down, and slicing and dicing (O'Brien, J. A., & Marakas, G. M. (2009). Management Information Systems (9th ed.). Boston, MA: McGraw-Hill/Irwin). Consolidat ...
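The three operations named above can be illustrated on a tiny in-memory fact table. This is a sketch in plain Python rather than a real OLAP engine; the dimensions (`region`, `city`, `product`), the `sales` measure and the helper names are hypothetical.

```python
from collections import defaultdict

# Illustrative fact rows: three dimensions and one measure.
FACTS = [
    {"region": "EU", "city": "Paris", "product": "A", "sales": 10},
    {"region": "EU", "city": "Berlin", "product": "A", "sales": 5},
    {"region": "EU", "city": "Paris", "product": "B", "sales": 7},
    {"region": "US", "city": "Boston", "product": "A", "sales": 3},
]


def aggregate(rows, dims):
    """Sum the sales measure grouped by the given dimensions."""
    totals = defaultdict(int)
    for row in rows:
        totals[tuple(row[d] for d in dims)] += row["sales"]
    return dict(totals)


def slice_rows(rows, dim, value):
    """Slice: keep only rows where one dimension has a fixed value."""
    return [row for row in rows if row[dim] == value]


# Roll-up (consolidation): aggregate to the coarser region level.
by_region = aggregate(FACTS, ["region"])
# Drill-down: add the finer city level beneath each region.
by_city = aggregate(FACTS, ["region", "city"])
# Slice on product "A", then summarize by region.
product_a = aggregate(slice_rows(FACTS, "product", "A"), ["region"])

print(by_region)
print(by_city)
print(product_a)
```

Dicing would apply several such filters at once; it is omitted here to keep the sketch short.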

Logical Schema

A logical data model or logical schema is a data model of a specific problem domain expressed independently of a particular database management product or storage technology (physical data model) but in terms of data structures such as relational tables and columns, object-oriented classes, or XML tags. This is as opposed to a conceptual data model, which describes the semantics of an organization without reference to technology.

Logical data models represent the abstract structure of a domain of information. They are often diagrammatic in nature and are most typically used in business processes that seek to capture things of importance to an organization and how they relate to one another. Once validated and approved, the logical data model can become the basis of a physical data model and form the design of a database. Logical data models should be based on the structures identified in a preceding conceptual data model, since this describes the semantics of the informa ...