|

Decimal Data Type

Some programming languages (or compilers for them) provide a built-in (primitive) or library decimal data type to represent non-repeating decimal fractions like 0.3 and -1.17 without rounding, and to do arithmetic on them. Examples are the decimal.Decimal type of Python, and analogous types provided by other languages. Rationale Fractional numbers are supported on most programming languages as floating-point numbers or fixed-point numbers. However, such representations typically restrict the denominator to a power of two. Most decimal fractions (or most fractions in general) cannot be represented exactly as a fraction with a denominator that is a power of two. For example, the simple decimal fraction 0.3 (3/10) might be represented as 5404319552844595/18014398509481984 (0.299999999999999988897769...). This inexactness causes many problems that are familiar to experienced programmers. For example, the expression 0.1 * 7 0.7 might counterintuitively evaluate to false in some sy ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

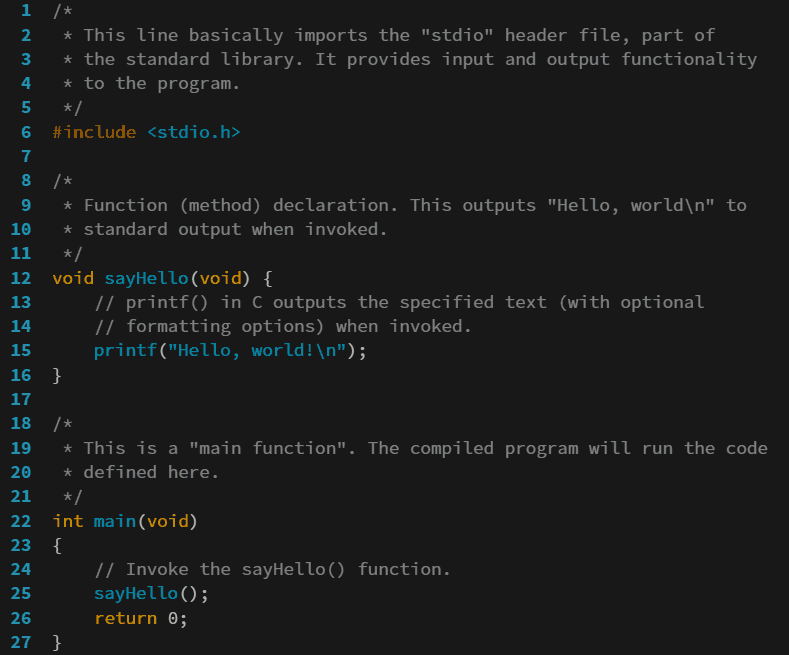

Programming Languages

A programming language is a system of notation for writing computer program, computer programs. Most programming languages are text-based formal languages, but they may also be visual programming language, graphical. They are a kind of computer language. The description of a programming language is usually split into the two components of Syntax (programming languages), syntax (form) and semantics (computer science), semantics (meaning), which are usually defined by a formal language. Some languages are defined by a specification document (for example, the C (programming language), C programming language is specified by an International Organization for Standardization, ISO Standard) while other languages (such as Perl) have a dominant Programming language implementation, implementation that is treated as a reference implementation, reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being commo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Decimal32 Floating-point Format

In computing, decimal32 is a decimal floating-point computer numbering format that occupies 4 bytes (32 bits) in computer memory. It is intended for applications where it is necessary to emulate decimal rounding exactly, such as financial and tax computations. Like the binary16 format, it is intended for memory saving storage. Decimal32 supports 7 decimal digits of significand and an exponent range of −95 to +96, i.e. to ±. (Equivalently, to .) Because the significand is not normalized (there is no implicit leading "1"), most values with less than 7 significant digits have multiple possible representations; , etc. Zero has 192 possible representations (384 when both signed zeros are included). Decimal32 floating point is a relatively new decimal floating-point format, formally introduced in the 2008 version of IEEE 754 The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a technical standard for floating-point arithmetic established in 1985 by ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Floating-point Error Mitigation

Floating-point error mitigation is the minimization of errors caused by the fact that real numbers cannot, in general, be accurately represented in a fixed space. By definition, floating-point error cannot be eliminated, and, at best, can only be managed. Huberto M. Sierra noted in his 1956 patent "Floating Decimal Point Arithmetic Control Means for Calculator": The Z1, developed by Konrad Zuse in 1936, was the first computer with floating-point arithmetic and was thus susceptible to floating-point error. Early computers, however, with operation times measured in milliseconds, were incapable of solving large, complex problems and thus were seldom plagued with floating-point error. Today, however, with supercomputer system performance measured in petaflops, floating-point error is a major concern for computational problem solvers. The following sections describe the strengths and weaknesses of various means of mitigating floating-point error. Numerical error analysis Tho ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Floating-point Arithmetic

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number: 12.345 = \underbrace_\text \times \underbrace_\text\!\!\!\!\!\!^ In practice, most floating-point systems use base two, though base ten ( decimal floating point) is also common. The term ''floating point'' refers to the fact that the number's radix point can "float" anywhere to the left, right, or between the significant digits of the number. This position is indicated by the exponent, so floating point can be considered a form of scientific notation. A floating-point system can be used to represent, with a fixed number of digits, numbers of very different orders of magnitude — such as the number of meters between galaxies or between protons in an atom. For this reason, floating ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Arbitrary-precision Arithmetic

In computer science, arbitrary-precision arithmetic, also called bignum arithmetic, multiple-precision arithmetic, or sometimes infinite-precision arithmetic, indicates that calculations are performed on numbers whose digits of precision are limited only by the available memory of the host system. This contrasts with the faster fixed-precision arithmetic found in most arithmetic logic unit (ALU) hardware, which typically offers between 8 and 64 bits of precision. Several modern programming languages have built-in support for bignums, and others have libraries available for arbitrary-precision integer and floating-point math. Rather than storing values as a fixed number of bits related to the size of the processor register, these implementations typically use variable-length arrays of digits. Arbitrary precision is used in applications where the speed of arithmetic is not a limiting factor, or where precise results with very large numbers are required. It should not b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GNU Compiler Collection

The GNU Compiler Collection (GCC) is an optimizing compiler produced by the GNU Project supporting various programming languages, hardware architectures and operating systems. The Free Software Foundation (FSF) distributes GCC as free software under the GNU General Public License (GNU GPL). GCC is a key component of the GNU toolchain and the standard compiler for most projects related to GNU and the Linux kernel. With roughly 15 million lines of code in 2019, GCC is one of the biggest free programs in existence. It has played an important role in the growth of free software, as both a tool and an example. When it was first released in 1987 by Richard Stallman, GCC 1.0 was named the GNU C Compiler since it only handled the C programming language. It was extended to compile C++ in December of that year. Front ends were later developed for Objective-C, Objective-C++, Fortran, Ada, D and Go, among others. The OpenMP and OpenACC specifications are also supported in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

PL/I

PL/I (Programming Language One, pronounced and sometimes written PL/1) is a procedural, imperative computer programming language developed and published by IBM. It is designed for scientific, engineering, business and system programming. It has been used by academic, commercial and industrial organizations since it was introduced in the 1960s, and is still used. PL/I's main domains are data processing, numerical computation, scientific computing, and system programming. It supports recursion, structured programming, linked data structure handling, fixed-point, floating-point, complex, character string handling, and bit string handling. The language syntax is English-like and suited for describing complex data formats with a wide set of functions available to verify and manipulate them. Early history In the 1950s and early 1960s, business and scientific users programmed for different computer hardware using different programming languages. Business users were moving ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GNUstep

GNUstep is a free software implementation of the Cocoa (formerly OpenStep) Objective-C frameworks, widget toolkit, and application development tools for Unix-like operating systems and Microsoft Windows. It is part of the GNU Project. GNUstep features a cross-platform, object-oriented IDE. Apart from the default Objective-C interface, GNUstep also has bindings for Java, Ruby, GNU Guile and Scheme. The GNUstep developers track some additions to Apple's Cocoa to remain compatible. The roots of the GNUstep application interface are the same as the roots of Cocoa: NeXTSTEP and OpenStep. GNUstep thus predates Cocoa, which emerged when Apple acquired NeXT's technology and incorporated it into the development of the original Mac OS X, while GNUstep was initially an effort by GNU developers to replicate the technically ambitious NeXTSTEP's programmer-friendly features. History GNUstep began when Paul Kunz and others at Stanford Linear Accelerator Center wanted to port Hip ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cocoa (API)

Cocoa is Apple's native object-oriented application programming interface (API) for its desktop operating system macOS. Cocoa consists of the Foundation Kit, Application Kit, and Core Data frameworks, as included by the Cocoa.h header file, and the libraries and frameworks included by those, such as the C standard library and the Objective-C runtime.Mac Technology Overview: OS X Frameworks Developer.apple.com. Retrieved on September 18, 2013. Cocoa applications are typically developed using the development tools provided by Apple, specifically Xcode (formerly |

Objective-C

Objective-C is a general-purpose, object-oriented programming language that adds Smalltalk-style messaging to the C programming language. Originally developed by Brad Cox and Tom Love in the early 1980s, it was selected by NeXT for its NeXTSTEP operating system. Due to Apple macOS’s direct lineage from NeXTSTEP, Objective-C was the standard programming language used, supported, and promoted by Apple for developing macOS and iOS applications (via their respective APIs, Cocoa and Cocoa Touch) until the introduction of the Swift programming language in 2014. Objective-C programs developed for non-Apple operating systems or that are not dependent on Apple's APIs may also be compiled for any platform supported by GNU GCC or LLVM/ Clang. Objective-C source code 'messaging/implementation' program files usually have filename extensions, while Objective-C 'header/interface' files have extensions, the same as C header files. Objective-C++ files are denoted with a fil ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Java (programming Language)

Java is a high-level, class-based, object-oriented programming language that is designed to have as few implementation dependencies as possible. It is a general-purpose programming language intended to let programmers ''write once, run anywhere'' ( WORA), meaning that compiled Java code can run on all platforms that support Java without the need to recompile. Java applications are typically compiled to bytecode that can run on any Java virtual machine (JVM) regardless of the underlying computer architecture. The syntax of Java is similar to C and C++, but has fewer low-level facilities than either of them. The Java runtime provides dynamic capabilities (such as reflection and runtime code modification) that are typically not available in traditional compiled languages. , Java was one of the most popular programming languages in use according to GitHub, particularly for client–server web applications, with a reported 9 million developers. Java was originally de ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ruby (programming Language)

Ruby is an interpreted, high-level, general-purpose programming language which supports multiple programming paradigms. It was designed with an emphasis on programming productivity and simplicity. In Ruby, everything is an object, including primitive data types. It was developed in the mid-1990s by Yukihiro "Matz" Matsumoto in Japan. Ruby is dynamically typed and uses garbage collection and just-in-time compilation. It supports multiple programming paradigms, including procedural, object-oriented, and functional programming. According to the creator, Ruby was influenced by Perl, Smalltalk, Eiffel, Ada, BASIC, Java and Lisp. History Early concept Matsumoto has said that Ruby was conceived in 1993. In a 1999 post to the ''ruby-talk'' mailing list, he describes some of his early ideas about the language: Matsumoto describes the design of Ruby as being like a simple Lisp language at its core, with an object system like that of Smalltalk, blocks inspired by highe ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |