
Data Exploration

Data exploration is an approach similar to initial data analysis, whereby a data analyst uses visual exploration, rather than traditional data management systems, to understand what is in a dataset and the characteristics of the data (FOSTER Open Science; Overview of Data Exploration Techniques: Stratos Idreos, Olga Papaemmanouil, Surajit Chaudhuri). These characteristics can include the size or amount of data, its completeness, its correctness, and possible relationships among data elements or among files and tables in the data. Data exploration is typically conducted using a combination of automated and manual activities (Stanford.edu). ...
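The characteristics listed above (size and completeness in particular) can be checked with a few lines of code. The following is a minimal sketch in Python, assuming a small in-memory table where `None` marks a missing value; the sample rows and the helper names `completeness` and `explore` are hypothetical and not part of any tool mentioned here.

```python
# Hypothetical sample table: each row is a dict, None marks a missing value.
rows = [
    {"id": 1, "age": 34, "city": "Oslo"},
    {"id": 2, "age": None, "city": "Lima"},
    {"id": 3, "age": 29, "city": None},
    {"id": 4, "age": 41, "city": "Oslo"},
]


def completeness(rows, column):
    """Return the fraction of rows in which `column` holds a non-missing value."""
    if not rows:
        return 0.0
    present = sum(1 for row in rows if row.get(column) is not None)
    return present / len(rows)


def explore(rows):
    """Summarize the size of the table and the completeness of each column."""
    columns = sorted({key for row in rows for key in row})
    return {
        "row_count": len(rows),
        "completeness": {column: completeness(rows, column) for column in columns},
    }


summary = explore(rows)
print(summary)
```

Correctness and relationships between tables usually need domain rules, so they are left out of this sketch.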

Data Analysis

Data analysis is a process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making. Data analysis has multiple facets and approaches, encompassing diverse techniques under a variety of names, and is used in different business, science, and social science domains. In today's business world, data analysis plays a role in making decisions more scientific and helping businesses operate more effectively. Data mining is a particular data analysis technique that focuses on statistical modeling and knowledge discovery for predictive rather than purely descriptive purposes, while business intelligence covers data analysis that relies heavily on aggregation, focusing mainly on business information. In statistical applications, data analysis can be divided into descriptive statistics, exploratory data analysis (EDA), and confirmatory data analysis (CDA). EDA focuses on discovering ne ...

Statistical Classification

In statistics, classification is the problem of identifying which of a set of categories (sub-populations) an observation (or observations) belongs to. Examples are assigning a given email to the "spam" or "non-spam" class, and assigning a diagnosis to a given patient based on observed characteristics of the patient (sex, blood pressure, presence or absence of certain symptoms, etc.). Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or features. These properties may be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in a ...
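A classifier that compares a new observation to previous observations by means of a distance function can be sketched as a one-nearest-neighbor rule. This is only an illustration: the two numeric features, the training pairs, and the names `euclidean` and `nearest_neighbor` are assumptions made for the example, not taken from the text above.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbor(training, observation):
    """Return the label of the training observation closest to `observation`."""
    _, label = min(training, key=lambda pair: euclidean(pair[0], observation))
    return label


# Hypothetical training data: (feature vector, class label).
# The features could be, say, counts of two particular words in an email.
training = [
    ((8.0, 1.0), "spam"),
    ((7.0, 0.0), "spam"),
    ((1.0, 5.0), "non-spam"),
    ((0.0, 4.0), "non-spam"),
]

print(nearest_neighbor(training, (6.5, 0.5)))
print(nearest_neighbor(training, (0.5, 4.5)))
```

Euclidean distance only makes sense for numeric features; categorical or ordinal properties would need a different similarity measure.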

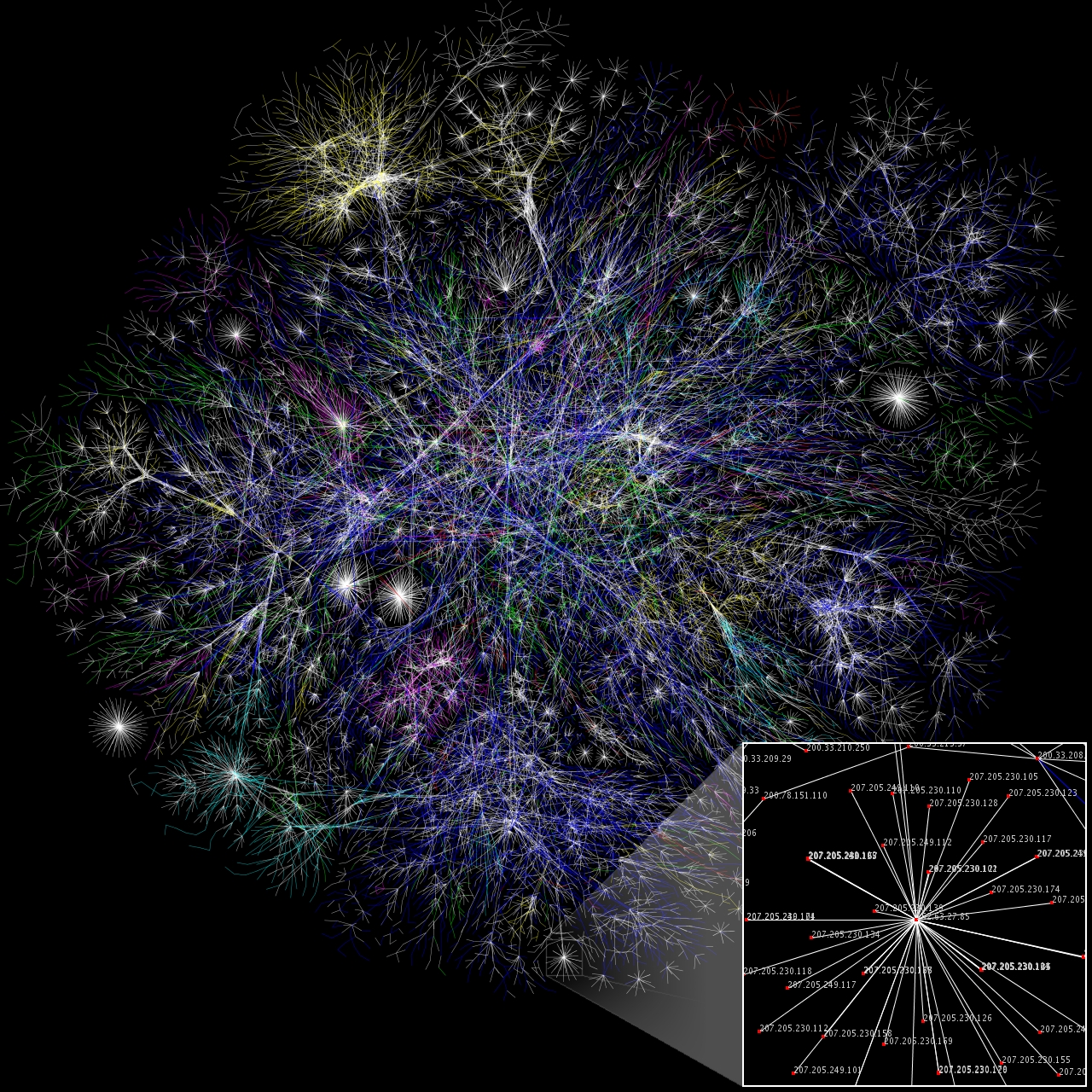

Data Visualization

Data and information visualization (data viz or info viz) is an interdisciplinary field that deals with the graphic representation of data and information. It is a particularly efficient way of communicating when the data or information is voluminous, as in a time series. It is also the study of visual representations of abstract data to reinforce human cognition. The abstract data include both numerical and non-numerical data, such as text and geographic information. It is related to infographics and scientific visualization. One distinction is that it is information visualization when the spatial representation (e.g., the page layout of a graphic design) is chosen, whereas it is scientific visualization when the spatial representation is given. From an academic point of view, this representation can be considered a mapping between the original data (usually numerical) and graphic elements (for example, lines or points in a chart). The mapping determines how the attri ...
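The idea of a mapping between data values and graphic elements can be illustrated with a text bar chart, where each value is mapped to the length of a bar. This is a minimal sketch that assumes a non-empty series of non-negative numbers; the function name `bar_chart` and the sample series are hypothetical.

```python
def bar_chart(series, width=20):
    """Map each value to a bar whose length is proportional to the value.

    Assumes `series` is a non-empty dict of label -> non-negative number.
    The largest value is drawn with `width` characters.
    """
    largest = max(series.values())
    lines = []
    for label, value in series.items():
        length = round(value / largest * width) if largest else 0
        lines.append(f"{label:<5}|{'#' * length} {value}")
    return lines


# Hypothetical monthly counts.
monthly = {"Jan": 10, "Feb": 20, "Mar": 5}
chart = bar_chart(monthly)
print("\n".join(chart))
```

Here the only visual attribute driven by the data is bar length; a fuller mapping could also assign position, color, or shape.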

Data Profiling

Data profiling is the process of examining the data available from an existing information source (e.g. a database or a file) and collecting statistics or informative summaries about that data. The purpose of these statistics may be to:
1. Find out whether existing data can be easily used for other purposes
2. Improve the ability to search data by tagging it with keywords, descriptions, or assigning it to a category
3. Assess data quality, including whether the data conforms to particular standards or patterns
4. Assess the risk involved in integrating data in new applications, including the challenges of joins
5. Discover metadata of the source database, including value patterns and distributions, key candidates, foreign-key candidates, and functional dependencies
6. Assess whether known metadata accurately describes the actual values in the source database
7. Understand data challenges early in any data-intensive project, so that late project surprises are avoided. Finding data ...
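Several of the statistics named above (value patterns, distributions, key candidates) can be collected per column with very little code. The sketch below is one simple way to do it, assuming a column is given as a Python list with `None` for missing values; the names `value_pattern` and `profile_column` and the sample columns are hypothetical.

```python
import re
from collections import Counter


def value_pattern(value):
    """Reduce a string to its shape: letters become 'A', digits become '9'."""
    pattern = re.sub(r"[A-Za-z]", "A", value)
    return re.sub(r"[0-9]", "9", pattern)


def profile_column(values):
    """Collect simple profiling statistics for one column of values."""
    present = [v for v in values if v is not None]
    distinct = set(present)
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(distinct),
        # A key candidate has no missing values and no repeated values.
        "key_candidate": len(values) > 0
        and len(present) == len(values)
        and len(distinct) == len(values),
        "patterns": Counter(value_pattern(str(v)) for v in present),
    }


# Hypothetical columns from a source table.
codes = ["AB-123", "CD-456", "EF-78", None]
ids = [1, 2, 3, 4]

print(profile_column(codes))
print(profile_column(ids))
```

A pattern that occurs only rarely, such as `AA-99` here, is often a hint that some values do not conform to the expected format.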

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making predicti ...

Exploratory Data Analysis

In statistics, exploratory data analysis (EDA) is an approach of analyzing data sets to summarize their main characteristics, often using statistical graphics and other data visualization methods. A statistical model can be used or not, but primarily EDA is for seeing what the data can tell us beyond formal modeling, and it thereby contrasts with traditional hypothesis testing. Exploratory data analysis has been promoted by John Tukey since 1970 to encourage statisticians to explore the data, and possibly formulate hypotheses that could lead to new data collection and experiments. EDA is different from initial data analysis (IDA), which focuses more narrowly on checking assumptions required for model fitting and hypothesis testing, and handling missing values and making transformations of variables as needed. EDA encompasses IDA.

Overview

Tukey defined data analysis in 1961 as: "Procedures for analyzing data, techniques for interpreting the results of such procedures, ways of pla ...
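Summarizing the main characteristics of a data set often starts with a five-number summary (minimum, lower quartile, median, upper quartile, maximum). The sketch below uses Python's standard `statistics` module; the helper name `five_number_summary` and the sample data are assumptions, and quartile conventions differ between tools, so other software may report slightly different quartiles for the same data.

```python
import statistics


def five_number_summary(data):
    """Return (minimum, lower quartile, median, upper quartile, maximum).

    Quartiles use the 'inclusive' method of statistics.quantiles, which
    treats the data as the whole population; at least two points are needed.
    """
    lower, median, upper = statistics.quantiles(data, n=4, method="inclusive")
    return (min(data), lower, median, upper, max(data))


# Hypothetical measurements.
measurements = [1, 2, 3, 4, 5, 6, 7, 8, 9]
summary = five_number_summary(measurements)
print(summary)
```

These five values are also what a box plot draws, which ties the numeric summary back to the statistical graphics mentioned above.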

Tableau Software

Tableau Software is an American interactive data visualization software company focused on business intelligence. It was founded in 2003 in Mountain View, California, and is currently headquartered in Seattle, Washington. In 2019 the company was acquired by Salesforce for $15.7 billion. At the time, this was the largest acquisition by Salesforce (a leader in the CRM field) since its foundation. It was later surpassed by Salesforce's acquisition of Slack. The company's founders, Christian Chabot, Pat Hanrahan and Chris Stolte, were researchers at the Department of Computer Science at Stanford University. They specialized in visualization techniques for exploring and analyzing relational databases and data cubes, and started the company as a commercial outlet for research at Stanford from 1999 to 2002. Tableau products query relational databases, online analytical processing cubes, cloud databases, and spreadsheets to generate graph-type data visualizations. The software ...

OpenRefine

OpenRefine is an open-source desktop application for data cleanup and transformation to other formats, an activity commonly known as data wrangling. It is similar to spreadsheet applications, and can handle spreadsheet file formats such as CSV, but it behaves more like a database. It operates on rows of data which have cells under columns, similar to the manner in which relational database tables operate. OpenRefine projects consist of one table, whose rows can be filtered using facets that define criteria (for example, showing rows where a given column is not empty). Unlike spreadsheets, most operations in OpenRefine are done on all visible rows, for example, the transformation of all cells in all rows under one column, or the creation of a new column based on existing data. Actions performed on a dataset are stored in the project and can be 'replayed' on other datasets. Formulas are not stored in cells, but are used to transform the data. Transformation is done only ...
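The workflow described above (filter rows with a facet, transform every cell under a column, and replay the recorded actions on another dataset) can be imitated in plain Python. This sketch does not use OpenRefine's own API or expression language; the functions `facet_not_empty`, `transform_column`, and `replay` are hypothetical, and for simplicity the facet drops non-matching rows instead of merely hiding them.

```python
def facet_not_empty(rows, column):
    """Keep only the rows where `column` is neither missing nor empty."""
    return [row for row in rows if row.get(column) not in (None, "")]


def transform_column(rows, column, func):
    """Return new rows with `func` applied to every cell under `column`."""
    return [{**row, column: func(row[column])} for row in rows]


# Recorded history of operations, stored separately from the data itself.
operations = [
    lambda rows: facet_not_empty(rows, "city"),
    lambda rows: transform_column(rows, "city", str.strip),
    lambda rows: transform_column(rows, "city", str.title),
]


def replay(rows, operations):
    """Apply each recorded operation in order and return the resulting rows."""
    for operation in operations:
        rows = operation(rows)
    return rows


# Two hypothetical datasets cleaned with the same recorded steps.
first = [{"city": " oslo "}, {"city": ""}, {"city": "LIMA"}]
second = [{"city": "new york"}, {"city": None}]

print(replay(first, operations))
print(replay(second, operations))
```

Keeping the operations in a list rather than in the cells mirrors the point that formulas transform the data but are not stored in it.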

Power BI

Power BI is an interactive data visualization software product developed by Microsoft with a primary focus on business intelligence. It is part of the Microsoft Power Platform. Power BI is a collection of software services, apps, and connectors that work together to turn unrelated sources of data into coherent, visually immersive, and interactive insights. Data may be input by reading directly from a database, webpage, or structured files such as spreadsheets, CSV, XML, and JSON.

General

Power BI provides cloud-based BI (business intelligence) services, known as "Power BI Services", along with a desktop-based interface, called "Power BI Desktop". It offers data warehouse capabilities including data preparation, data discovery, and interactive dashboards. In March 2016, Microsoft released an additional service called Power BI Embedded on its Azure cloud platform. One main differentiator of the product is the ability to load custom visualizations.

History

This application was o ...

Alteryx

Alteryx is an American computer software company based in Irvine, California, with a development center in Broomfield, Colorado. The company's products are used for data science and analytics. The software is designed to make advanced analytics accessible to any data worker.

History

SRC LLC, the predecessor to Alteryx, was founded in 1997 by Dean Stoecker, Olivia Duane Adams and Ned Harding. SRC developed the first online data engine for delivering demographic-based mapping and reporting shortly after being founded. In 1998, SRC released Allocate, a data engine incorporating geographically organized U.S. Census data that allows users to manipulate, analyze and map data. Solocast, software that allowed customers to perform customer segmentation analysis, was also developed in 1998. In 2000, SRC LLC entered into a contract with the U.S. Census Bureau that resulted in a modified version of its Allocate software being included on CD-ROMs of Census Data sold by the Bureau. In ...

Paxata

Paxata is a privately owned software company headquartered in Redwood City, California. It develops self-service data preparation software that gets data ready for data analytics software. Paxata's software is intended for business analysts, as opposed to technical staff. It is used to combine data from different sources, then check it for data quality issues, such as duplicates and outliers. Algorithms and machine learning automate certain aspects of data preparation, and users work with the software through a user interface similar to Excel spreadsheets. The company was founded in January 2012 and operated in stealth mode until October 2013. It received more than $10 million in venture funding before being acquired by DataRobot.

History

Paxata was founded in January 2012. It initially raised $2 million in venture capital. The company came out of stealth mode in October 2013. Simultaneously with its public release, Paxata announced an $8 million funding round led by Accel Partn ...