Corosync

The Corosync Cluster Engine is an open source implementation of the Totem Single Ring Ordering and Membership protocol. It was originally derived from the OpenAIS project and is licensed under the new BSD License. The mission of the Corosync effort is to develop, release, and support a community-defined, open source cluster.

Features

The Corosync Cluster Engine is a group communication system with additional features for implementing high availability within applications. The project provides four C API features:

* A closed process group communication model with virtual synchrony guarantees for creating replicated state machines.
* A simple availability manager that restarts the application process when it has failed.
* A configuration and statistics in-memory database that provides the ability to set, retrieve, and receive change notifications of information.
* A quorum system that notifies applications when quorum is achieved or lost.

The software is designed to operate on ...
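The "set, retrieve, and receive change notifications" behaviour of the in-memory database feature can be pictured with a small sketch. This is an illustration only, written in Python rather than C: the class `NotifyingStore`, its methods, and the key `example.key` are hypothetical names and do not correspond to Corosync's actual API.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical names throughout; this is not Corosync's C API.
ChangeCallback = Callable[[str, Optional[str], str], None]


class NotifyingStore:
    """Toy in-memory key-value store that reports changes to subscribers."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self._callbacks: List[ChangeCallback] = []

    def track(self, callback: ChangeCallback) -> None:
        """Register a callback invoked as callback(key, old_value, new_value)."""
        self._callbacks.append(callback)

    def set(self, key: str, value: str) -> None:
        """Store a value and notify subscribers only if it actually changed."""
        old = self._data.get(key)
        self._data[key] = value
        if old != value:
            for callback in self._callbacks:
                callback(key, old, value)

    def get(self, key: str) -> Optional[str]:
        """Return the stored value, or None if the key is unset."""
        return self._data.get(key)


events = []
store = NotifyingStore()
store.track(lambda key, old, new: events.append((key, old, new)))
store.set("example.key", "1")
store.set("example.key", "1")  # unchanged value, so no notification
store.set("example.key", "2")
```

After these calls, `events` holds one entry per real change, and `store.get("example.key")` returns the latest value.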

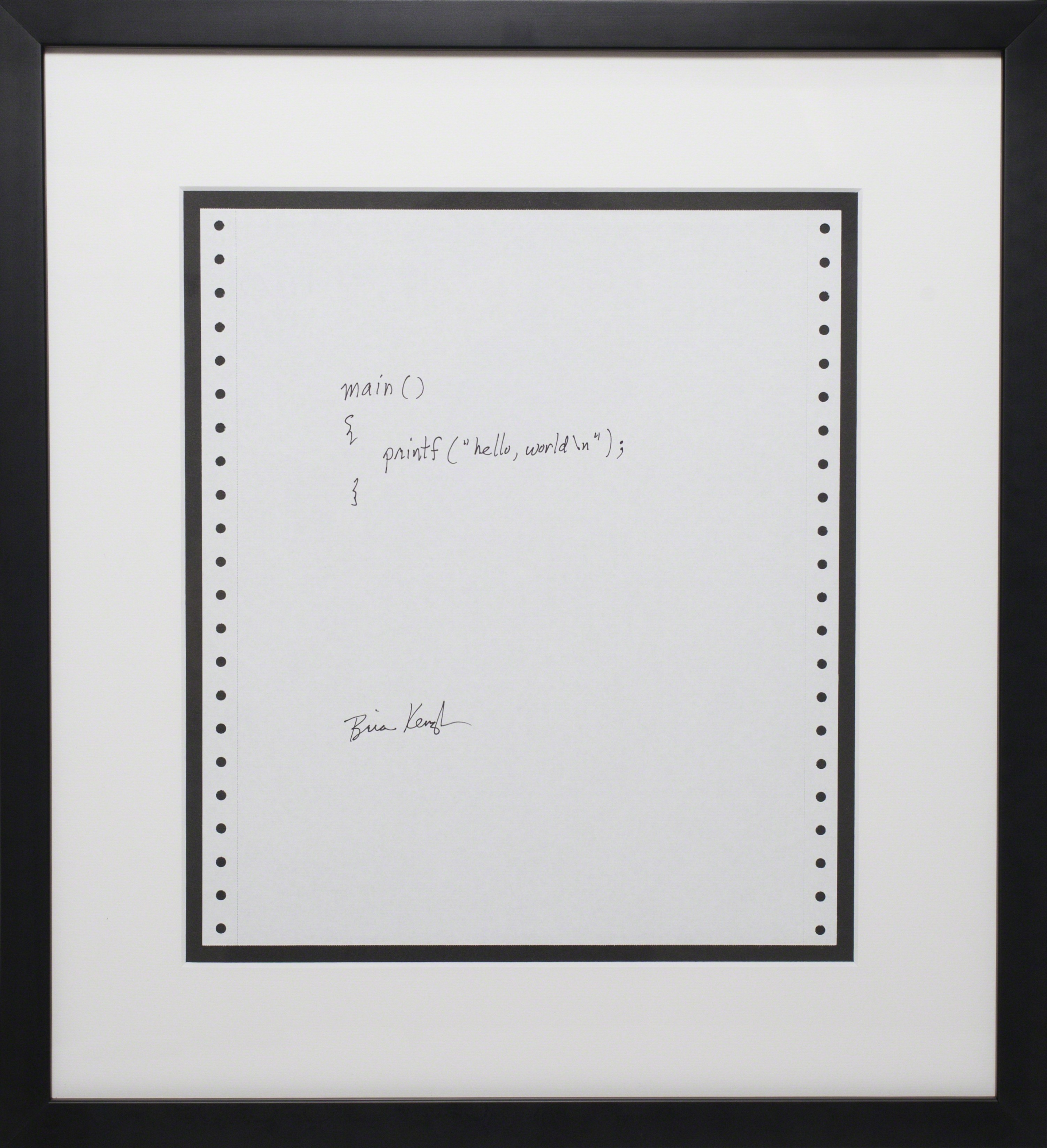

C Programming Language

The C Programming Language (sometimes termed K&R, after its authors' initials) is a computer programming book written by Brian Kernighan and Dennis Ritchie, the latter of whom originally designed and implemented the language and co-designed the Unix operating system, with which development of the language was closely intertwined. The book was central to the development and popularization of the C programming language and is still widely read and used today. Because the book was co-authored by the original language designer, and because the first edition served for many years as the de facto standard for the language, many regarded it as the authoritative reference on C.

History

C was created by Dennis Ritchie at Bell Labs in the early 1970s as an augmented version of Ken Thompson's B. Another Bell Labs employee, Brian Kernighan, had written the first C tutorial, and he persuaded Ritchie to coauthor a book on the language. Ker ...

Quorum (Distributed Systems)

A quorum is the minimum number of votes that a distributed transaction has to obtain in order to be allowed to perform an operation in a distributed system. A quorum-based technique is implemented to enforce consistent operation in a distributed system.

Quorum-based techniques in distributed database systems

Quorum-based voting can be used as a replica control method, as well as a commit method to ensure transaction atomicity in the presence of network partitioning.

Quorum-based voting in commit protocols

In a distributed database system, a transaction could execute its operations at multiple sites. Since atomicity requires every distributed transaction to be atomic, the transaction must have the same fate (commit or abort) at every site. In case of network partitioning, sites are partitioned and the partitions may not be able to communicate with each other. This is where a quorum-based technique comes in. The fundamental idea is that a transaction is executed if the majo ...
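As a minimal sketch of the definition above, the following Python assumes the simplest possible rule: every site holds exactly one vote and the quorum is a strict majority. Both assumptions, and the function names, are illustrative; real quorum schemes may weight votes or use separate read and write quorums.

```python
def majority_quorum(total_votes: int) -> int:
    """Smallest number of votes that forms a strict majority of total_votes."""
    if total_votes < 1:
        raise ValueError("total_votes must be positive")
    return total_votes // 2 + 1


def has_quorum(votes_received: int, total_votes: int) -> bool:
    """True if a partition holding votes_received votes may proceed."""
    return votes_received >= majority_quorum(total_votes)


# Five sites split by a network partition into groups of three and two:
# only the larger group can reach quorum, so at most one side proceeds.
larger_side = has_quorum(3, 5)
smaller_side = has_quorum(2, 5)

# Four sites split evenly: neither side holds a strict majority.
even_split = has_quorum(2, 4)
```

Because two disjoint partitions can never both hold a strict majority, this rule prevents both sides of a partition from performing the operation at the same time.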

Cluster Computing

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as a result of convergence of a number of computing trends including t ...

Pacemaker (software)

Pacemaker is open-source high-availability resource manager software that has been used on computer clusters since 2004. Until about 2007 it was part of the Linux-HA project; it was then split out into its own project. It implements several APIs for controlling resources, but its preferred API for this purpose is the Open Cluster Framework resource agent API.

Related software

Pacemaker is generally used with the Corosync Cluster Engine or Linux-HA Heartbeat.

See also

* High-availability cluster: High-availability clusters (also known as HA clusters or fail-over clusters) are groups of computers that support server applications that can be reliably utilized with a minimum amount of down-time. They operate by using high availability softwa ...
* Red Hat cluster suite

Linux-HA

The Linux-HA (High-Availability Linux) project provides a high-availability (clustering) solution for Linux, FreeBSD, OpenBSD, Solaris and Mac OS X which promotes reliability, availability, and serviceability (RAS) (Alan Robertson, The Evolution of The LinuxHA project, IBM Linux Technology Center). The project's main software product is Heartbeat, a portable cluster management program for high-availability clustering, licensed under the GNU General Public License (GPL). Its most important features are:

* no fixed maximum number of nodes: Heartbeat can be used to build large clusters as well as very simple ones
* resource monitoring: resources can be automatically restarted or moved to another node on failure
* fencing mechanism to remove failed nodes from the cluster
* sophisticated policy-based resource management, resource inter-dependencies and constraints
* time-based rules allow for different policies depending on time
* several resource scripts (for Apache, IBM Db2, Or ...

SA Forum

The Service Availability Forum (SAF or SA Forum) is a consortium that develops, publishes, educates on and promotes open specifications for carrier-grade and mission-critical systems. Formed in 2001, it promotes development and deployment of commercial off-the-shelf (COTS) technology.

Description

Service availability is an extension of high availability, referring to services that are available regardless of hardware, software or user fault and importance. Key principles of service availability:

* Redundancy – "backup" capability in case of need to failover due to a fault
* Stateful and seamless recovery from failures
* Minimization of mean time to repair (MTTR) – time to restore service after an outage
* Fault prediction & avoidance – take action before something fails

The traditional definitions of high availability have their roots in hardware systems where redundancy of equipment was the primary mechanism for achieving uptime over a specific period. As software has co ...

Refactored

In computer programming and software design, code refactoring is the process of restructuring existing computer code (changing the factoring) without changing its external behavior. Refactoring is intended to improve the design, structure, and/or implementation of the software (its non-functional attributes), while preserving its functionality. Potential advantages of refactoring may include improved code readability and reduced complexity; these can improve the source code's maintainability and create a simpler, cleaner, or more expressive internal architecture or object model to improve extensibility. Another potential goal for refactoring is improved performance; software engineers face an ongoing challenge to write programs that perform faster or use less memory. Typically, refactoring applies a series of standardized basic micro-refactorings, each of which is (usually) a tiny change in a computer program's source code that either preserves the behavior of ...
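One common micro-refactoring is extracting a function. The sketch below is a made-up Python example (the pricing rule and all function names are hypothetical): the "after" version splits one function into two named steps while returning the same result for the same input.

```python
def total_price_before(items):
    """Original version: subtotal and discount logic tangled together."""
    total = 0.0
    for price, quantity in items:
        total += price * quantity
    if total > 100:
        total = total * 0.9
    return total


def subtotal(items):
    """Extracted step 1: sum of price * quantity over all items."""
    return sum(price * quantity for price, quantity in items)


def apply_discount(amount):
    """Extracted step 2: 10% off amounts above 100, otherwise unchanged."""
    return amount * 0.9 if amount > 100 else amount


def total_price_after(items):
    """Refactored version: same external behavior, clearer structure."""
    return apply_discount(subtotal(items))


# (price, quantity) pairs used to compare the two versions.
small_order = [(20, 2), (5, 1)]
large_order = [(20, 3), (50, 1)]
```

Calling both versions on the same orders and comparing the results is a simple way to check that the external behavior was preserved.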

Ottawa Linux Symposium

The Linux Symposium was a Linux and Open Source conference held annually in Canada from 1999 to 2014. The conference was initially named Ottawa Linux Symposium and was held only in Ottawa, but was renamed after being held in other cities in Canada. Even after the name change, however, it was still referred to as OLS. The conference featured 100+ paper presentations, tutorials, birds of a feather sessions and mini summits on a wide range of topics. There were 650 attendees from 20+ countries in 2008.

History

The 2009 Symposium was held in Montréal, Quebec. The 2011 and 2012 Symposiums were both held in Ottawa. In 2014, OLS organizers put together an unsuccessful campaign on Indiegogo to raise funds in order to pay off debts from previous events.

Application Programming Interface

An application programming interface (API) is a way for two or more computer programs to communicate with each other. It is a type of software interface, offering a service to other pieces of software. A document or standard that describes how to build or use such a connection or interface is called an API specification. A computer system that meets this standard is said to implement or expose an API. The term API may refer either to the specification or to the implementation. In contrast to a user interface, which connects a computer to a person, an application programming interface connects computers or pieces of software to each other. It is not intended to be used directly by a person (the end user) other than a computer programmer who is incorporating it into the software. An API is often made up of different parts which act as tools or services that are available to the programmer. A program or a programmer that uses one of these parts is said to call that ...

In Memory Database

An in-memory database (IMDB, or main memory database system (MMDB) or memory resident database) is a database management system that primarily relies on main memory for computer data storage. It is contrasted with database management systems that employ a disk storage mechanism. In-memory databases are faster than disk-optimized databases because disk access is slower than memory access and the internal optimization algorithms are simpler and execute fewer CPU instructions. Accessing data in memory eliminates seek time when querying the data, which provides faster and more predictable performance than disk. Applications where response time is critical, such as those running telecommunications network equipment and mobile advertising networks, often use main-memory databases. IMDBs have gained much traction, especially in the data analytics space, starting in the mid-2000s – mainly due to multi-core processors that can address large memory and due to less expensive RAM. A pot ...
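For a concrete feel of a database that lives entirely in main memory, the sketch below uses SQLite's `:memory:` mode through Python's standard `sqlite3` module. SQLite is used here only as a convenient stand-in, not as an example of the dedicated IMDB products the text has in mind; the table and column names are made up.

```python
import sqlite3


def run_in_memory_demo():
    """Create a database that exists only in RAM, insert rows, and query them."""
    # ":memory:" tells SQLite to keep the whole database in main memory;
    # nothing is written to disk and the data vanishes when the connection closes.
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sessions (id INTEGER PRIMARY KEY, user TEXT)")
        conn.executemany(
            "INSERT INTO sessions (user) VALUES (?)",
            [("alice",), ("bob",)],
        )
        return conn.execute("SELECT user FROM sessions ORDER BY id").fetchall()
    finally:
        conn.close()


rows = run_in_memory_demo()
```

Each call to `run_in_memory_demo()` starts from an empty database, which illustrates the volatility that comes with keeping data only in memory.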

Shared Memory (interprocess Communication)

In computer science, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Shared memory is an efficient means of passing data between programs. Depending on context, programs may run on a single processor or on multiple separate processors. Using memory for communication inside a single program, e.g. among its multiple threads, is also referred to as shared memory.

In hardware

In computer hardware, shared memory refers to a (typically large) block of random access memory (RAM) that can be accessed by several different central processing units (CPUs) in a multiprocessor computer system. Shared memory systems may use:

* uniform memory access (UMA): all the processors share the physical memory uniformly;
* non-uniform memory access (NUMA): memory access time depends on the memory location relative to a processor;
* cache-only memory architecture (COMA): the local memor ...
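The software side of the idea, where one party writes into a block and another reads the same bytes without copying them through a pipe or socket, can be sketched with Python's standard `multiprocessing.shared_memory` module (Python 3.8 or later). For brevity both handles are opened in the same process here; in practice a second process would attach using the block's name. The function name and the 16-byte size are arbitrary choices for illustration.

```python
from multiprocessing import shared_memory


def share_bytes(payload: bytes) -> bytes:
    """Write payload into a shared block, then read it back via a second handle."""
    # Create a named block of shared memory owned by the "writer".
    writer = shared_memory.SharedMemory(create=True, size=max(16, len(payload)))
    try:
        writer.buf[: len(payload)] = payload
        # Attach to the same block by name, as another process would.
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            return bytes(reader.buf[: len(payload)])
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()  # release the block once no one needs it


received = share_bytes(b"hello")
```

The reader sees the writer's bytes because both handles map the same underlying memory; only the block's name has to be passed between the two parties.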