|

Constructible Function

In complexity theory, a time-constructible function is a function ''f'' from natural numbers to natural numbers with the property that ''f''(''n'') can be constructed from ''n'' by a Turing machine in the time of order ''f''(''n''). The purpose of such a definition is to exclude functions that do not provide an upper bound on the runtime of some Turing machine. Time-constructible Let the Turing machine be defined in the standard way, with an alphabet that includes the symbols 0, 1. It has a standard input tape containing zeros except for an input string. Let 1^n denote a string composed of n ones. That is, it's the unary representation. Let , n, be the binary representation. There are two different definitions of a time-constructible function. In the first definition, a function f is called time-constructible if there exists a Turing machine M, such that for all but finitely many n, M(1^n) halts in O(f(n)) steps. In the second definition, a function f is called time-constructi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and explores the relationships between these classifications. A computational problem is a task solved by a computer. A computation problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition, by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of logic gate, gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). O ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Natural Numbers

In mathematics, the natural numbers are the numbers 0, 1, 2, 3, and so on, possibly excluding 0. Some start counting with 0, defining the natural numbers as the non-negative integers , while others start with 1, defining them as the positive integers Some authors acknowledge both definitions whenever convenient. Sometimes, the whole numbers are the natural numbers as well as zero. In other cases, the ''whole numbers'' refer to all of the integers, including negative integers. The counting numbers are another term for the natural numbers, particularly in primary education, and are ambiguous as well although typically start at 1. The natural numbers are used for counting things, like "there are ''six'' coins on the table", in which case they are called ''cardinal numbers''. They are also used to put things in order, like "this is the ''third'' largest city in the country", which are called ''ordinal numbers''. Natural numbers are also used as labels, like jersey numbers on a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

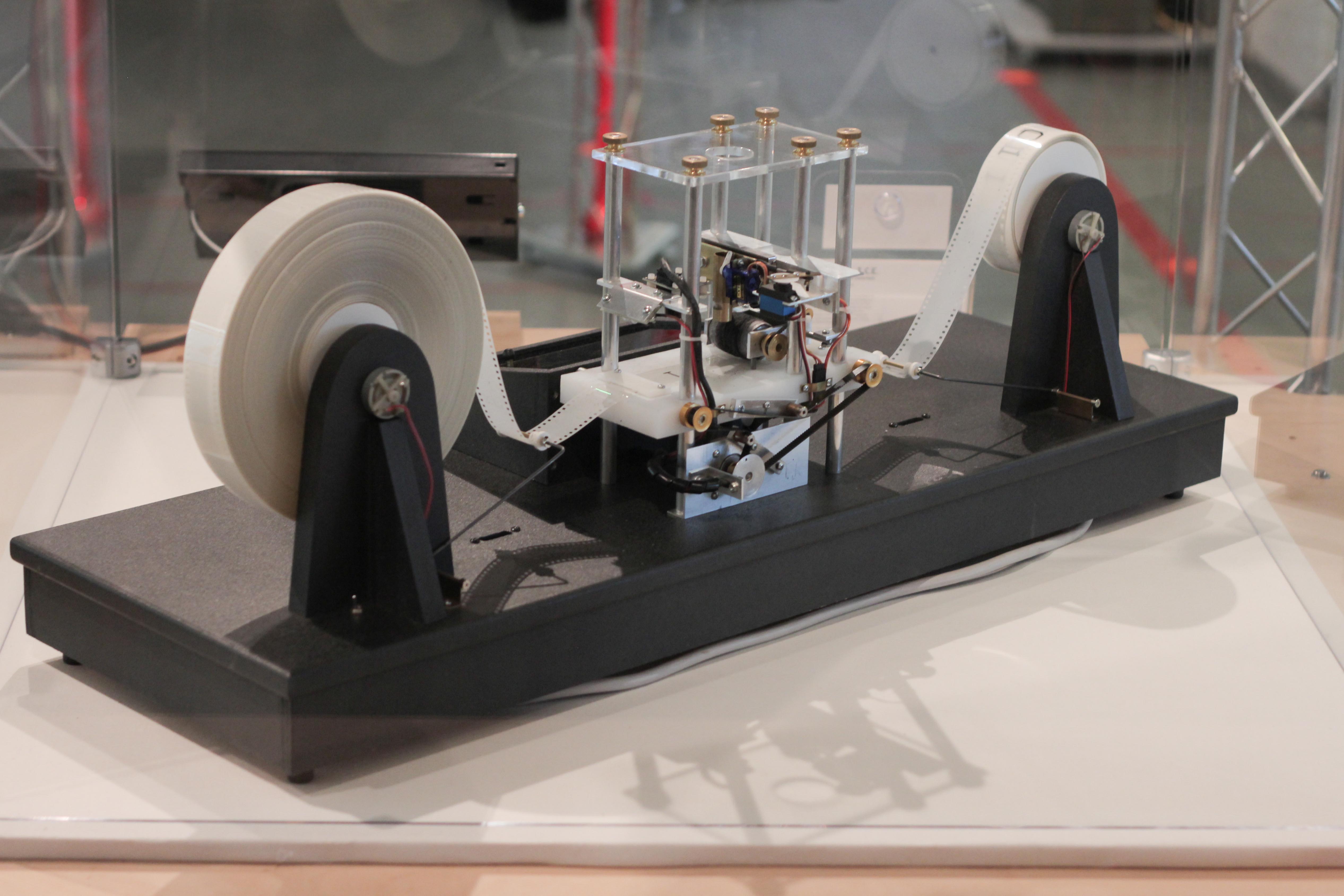

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete mathematics, discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the Alphabet (formal languages), alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write, which direction to move the head, and whet ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Unary Numeral System

The unary numeral system is the simplest numeral system to represent natural numbers: to represent a number ''N'', a symbol representing 1 is repeated ''N'' times. In the unary system, the number 0 (zero) is represented by the empty string, that is, the absence of a symbol. Numbers 1, 2, 3, 4, 5, 6, ... are represented in unary as 1, 11, 111, 1111, 11111, 111111, ... Unary is a bijective numeral system. However, although it has sometimes been described as "base 1", it differs in some important ways from positional notations, in which the value of a digit depends on its position within a number. For instance, the unary form of a number can be exponentially longer than its representation in other bases. The use of tally marks in counting is an application of the unary numeral system. For example, using the tally mark (𝍷), the number 3 is represented as . In East Asian cultures, the number 3 is represented as 三, a character drawn with three strokes. (One and two are repres ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Time Hierarchy Theorem

In computational complexity theory, the time hierarchy theorems are important statements about time-bounded computation on Turing machines. Informally, these theorems say that given more time, a Turing machine can solve more problems. For example, there are problems that can be solved with ''n''2 time but not ''n'' time, where ''n'' is the input length. The time hierarchy theorem for deterministic multi-tape Turing machines was first proven by Richard E. Stearns and Juris Hartmanis in 1965. It was improved a year later when F. C. Hennie and Richard E. Stearns improved the efficiency of the universal Turing machine. Consequent to the theorem, for every deterministic time-bounded complexity class, there is a strictly larger time-bounded complexity class, and so the time-bounded hierarchy of complexity classes does not completely collapse. More precisely, the time hierarchy theorem for deterministic Turing machines states that for all time-constructible functions ''f''(''n''), :\mat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Big-O Notation

Big ''O'' notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. Big O is a member of a family of notations invented by German mathematicians Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation. The letter O was chosen by Bachmann to stand for ''Ordnung'', meaning the order of approximation. In computer science, big O notation is used to classify algorithms according to how their run time or space requirements grow as the input size grows. In analytic number theory, big O notation is often used to express a bound on the difference between an arithmetical function and a better understood approximation; one well-known example is the remainder term in the prime number theorem. Big O notation is also used in many other fields to provide similar estimates. Big O notation characterizes functions according to their growth ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Space Hierarchy Theorem

In computational complexity theory, the space hierarchy theorems are separation results that show that both deterministic and nondeterministic machines can solve more problems in (asymptotically) more space, subject to certain conditions. For example, a deterministic Turing machine can solve more decision problem In computability theory and computational complexity theory, a decision problem is a computational problem that can be posed as a yes–no question on a set of input values. An example of a decision problem is deciding whether a given natura ...s in space ''n'' log ''n'' than in space ''n''. The somewhat weaker analogous theorems for time are the time hierarchy theorems. The foundation for the hierarchy theorems lies in the intuition that with either more time or more space comes the ability to compute more functions (or decide more languages). The hierarchy theorems are used to demonstrate that the time and space complexity classes form a hierarchy where classes w ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and explores the relationships between these classifications. A computational problem is a task solved by a computer. A computation problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition, by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of logic gate, gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). O ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |