|

Concurrent Access

In information technology and computer science, especially in the fields of computer programming, operating systems, multiprocessors, and databases, concurrency control ensures that correct results for concurrent operations are generated, while getting those results as quickly as possible. Computer systems, both software and hardware, consist of modules, or components. Each component is designed to operate correctly, i.e., to obey or to meet certain consistency rules. When components that operate concurrently interact by messaging or by sharing accessed data (in memory or storage), a certain component's consistency may be violated by another component. The general area of concurrency control provides rules, methods, design methodologies, and theories to maintain the consistency of components operating concurrently while interacting, and thus the consistency and correctness of the whole system. Introducing concurrency control into a system means applying operation constraints wh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Information Technology

Information technology (IT) is a set of related fields within information and communications technology (ICT), that encompass computer systems, software, programming languages, data processing, data and information processing, and storage. Information technology is an application of computer science and computer engineering. The term is commonly used as a synonym for computers and computer networks, but it also encompasses other information distribution technologies such as television and telephones. Several products or services within an economy are associated with information technology, including computer hardware, software, electronics, semiconductors, internet, Telecommunications equipment, telecom equipment, and e-commerce.. An information technology system (IT system) is generally an information system, a communications system, or, more specifically speaking, a Computer, computer system — including all Computer hardware, hardware, software, and peripheral equipment †... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Systems Management

Systems management is enterprise-wide System administration, administration of distributed systems including (and commonly in practice) computer systems. Systems management is strongly influenced by network management initiatives in telecommunications. The application performance management (APM) technologies are now a subset of Systems management. Maximum productivity can be achieved more efficiently through event correlation, system automation and predictive analysis which is now all part of APM. Discussion Centralized management has a time and effort trade-off that is related to the size of the company, the expertise of the Information technology, IT staff, and the amount of technology being used: * For a small business startup with ten computers, automated centralized processes may take more time to learn how to use and implement than just doing the management work manually on each computer. * A very large business with thousands of similar employee computers may clearly be ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Abstract Data Type

In computer science, an abstract data type (ADT) is a mathematical model for data types, defined by its behavior (semantics) from the point of view of a '' user'' of the data, specifically in terms of possible values, possible operations on data of this type, and the behavior of these operations. This mathematical model contrasts with ''data structures'', which are concrete representations of data, and are the point of view of an implementer, not a user. For example, a stack has push/pop operations that follow a Last-In-First-Out rule, and can be concretely implemented using either a list or an array. Another example is a set which stores values, without any particular order, and no repeated values. Values themselves are not retrieved from sets; rather, one tests a value for membership to obtain a Boolean "in" or "not in". ADTs are a theoretical concept, used in formal semantics and program verification and, less strictly, in the design and analysis of algorithms, data structu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Serializability

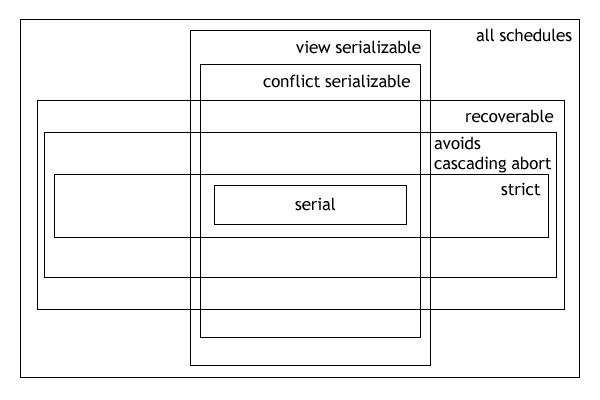

In the fields of databases and transaction processing (transaction management), a schedule (or history) of a system is an abstract model to describe the order of executions in a set of transactions running in the system. Often it is a ''list'' of operations (actions) ordered by time, performed by a set of transactions that are executed together in the system. If the order in time between certain operations is not determined by the system, then a ''partial order'' is used. Examples of such operations are requesting a read operation, reading, writing, aborting, committing, requesting a lock, locking, etc. Often, only a subset of the transaction operation types are included in a schedule. Schedules are fundamental concepts in database concurrency control theory. In practice, most general purpose database systems employ conflict-serializable and strict recoverable schedules. Notation Grid notation: * Columns: The different transactions in the schedule. * Rows: The time order of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Data Integrity

Data integrity is the maintenance of, and the assurance of, data accuracy and consistency over its entire Information Lifecycle Management, life-cycle. It is a critical aspect to the design, implementation, and usage of any system that stores, processes, or retrieves data. The term is broad in scope and may have widely different meanings depending on the specific context even under the same general umbrella of computing. It is at times used as a proxy term for data quality, while data validation is a prerequisite for data integrity. Definition Data integrity is the opposite of data corruption. The overall intent of any data integrity technique is the same: ensure data is recorded exactly as intended (such as a database correctly rejecting mutually exclusive possibilities). Moreover, upon later Data retrieval, retrieval, ensure the data is the same as when it was originally recorded. In short, data integrity aims to prevent unintentional changes to information. Data integrity is no ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Concurrency (computer Science)

Concurrency refers to the ability of a system to execute multiple tasks through simultaneous execution or time-sharing (context switching), sharing resources and managing interactions. Concurrency improves responsiveness, throughput, and scalability in modern computing, including: * Operating systems and embedded systems * Distributed systems, parallel computing, and high-performance computing * Database systems, web applications, and cloud computing Related concepts Concurrency is a broader concept that encompasses several related ideas, including: * Parallelism (simultaneous execution on multiple processing units). Parallelism executes tasks independently on multiple CPU cores. Concurrency allows for multiple ''threads of control'' at the program level, which can use parallelism or time-slicing to perform these tasks. Programs may exhibit parallelism only, concurrency only, both parallelism and concurrency, neither. * Multi-threading and multi-processing (shared ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cloud Computing

Cloud computing is "a paradigm for enabling network access to a scalable and elastic pool of shareable physical or virtual resources with self-service provisioning and administration on-demand," according to International Organization for Standardization, ISO. Essential characteristics In 2011, the National Institute of Standards and Technology (NIST) identified five "essential characteristics" for cloud systems. Below are the exact definitions according to NIST: * On-demand self-service: "A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider." * Broad network access: "Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations)." * Pooling (resource management), Resource pooling: " The provider' ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Grid Computing

Grid computing is the use of widely distributed computer resources to reach a common goal. A computing grid can be thought of as a distributed system with non-interactive workloads that involve many files. Grid computing is distinguished from conventional high-performance computing systems such as cluster computing in that grid computers have each node set to perform a different task/application. Grid computers also tend to be more heterogeneous and geographically dispersed (thus not physically coupled) than cluster computers. Although a single grid can be dedicated to a particular application, commonly a grid is used for a variety of purposes. Grids are often constructed with general-purpose grid middleware software libraries. Grid sizes can be quite large. Grids are a form of distributed computing composed of many networked loosely coupled computers acting together to perform large tasks. For certain applications, distributed or grid computing can be seen as a special ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Weikum01

Gerhard Weikum (born September 28, 1957) is a German computer scientist and Research Director at the Max Planck Institute for Informatics in SaarbrĂĽcken, Germany, where he is leading the databases and information systems department. His current research interests include transactional and distributed systems, self-tuning database systems, data and text integration, and the automatic construction of knowledge bases. He is one of the creators of the YAGO knowledge base. He is also the Dean of the International Max Planck Research School for Computer Science (IMPRS-CS). Earlier he held positions at Saarland University in SaarbrĂĽcken, Germany, at ETH Zurich, Switzerland, at MCC in Austin, Texas, and he was a visiting senior researcher at Microsoft Research in Redmond, Washington. He received his diploma and doctoral degrees from the TU Darmstadt, Germany. He acted as the President of the VLDB endowment in 2005 and 2006. The endowment organizes the yearly ''International Confere ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Database Management System

In computing, a database is an organized collection of data or a type of data store based on the use of a database management system (DBMS), the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an application associated with the database. Before digital storage and retrieval of data have become widespread, index cards were used for data storage in a wide range of applications and environments: in the home to record and store recipes, shopping lists, contact information and other organizational data; in business to record presentation notes, project research and notes, and contact information; in schools as flash ca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |