|

Ciphertext Expansion

In cryptography, the term ciphertext expansion refers to the length increase of a message when it is encrypted. Many modern cryptosystems cause some degree of expansion during the encryption process, for instance when the resulting ciphertext must include a message-unique Initialization Vector (IV). Probabilistic encryption schemes cause ciphertext expansion, as the set of possible ciphertexts is necessarily greater than the set of input plaintexts. Certain schemes, such as Cocks Identity Based Encryption, or the Goldwasser-Micali cryptosystem result in ciphertexts hundreds or thousands of times longer than the plaintext. Ciphertext expansion may be offset or increased by other processes which compress or expand the message, e.g., data compression or error correction coding In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cryptography

Cryptography, or cryptology (from grc, , translit=kryptós "hidden, secret"; and ''graphein'', "to write", or ''-logia'', "study", respectively), is the practice and study of techniques for secure communication in the presence of adversarial behavior. More generally, cryptography is about constructing and analyzing protocols that prevent third parties or the public from reading private messages. Modern cryptography exists at the intersection of the disciplines of mathematics, computer science, information security, electrical engineering, digital signal processing, physics, and others. Core concepts related to information security (data confidentiality, data integrity, authentication, and non-repudiation) are also central to cryptography. Practical applications of cryptography include electronic commerce, chip-based payment cards, digital currencies, computer passwords, and military communications. Cryptography prior to the modern age was effectively synony ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Encryption

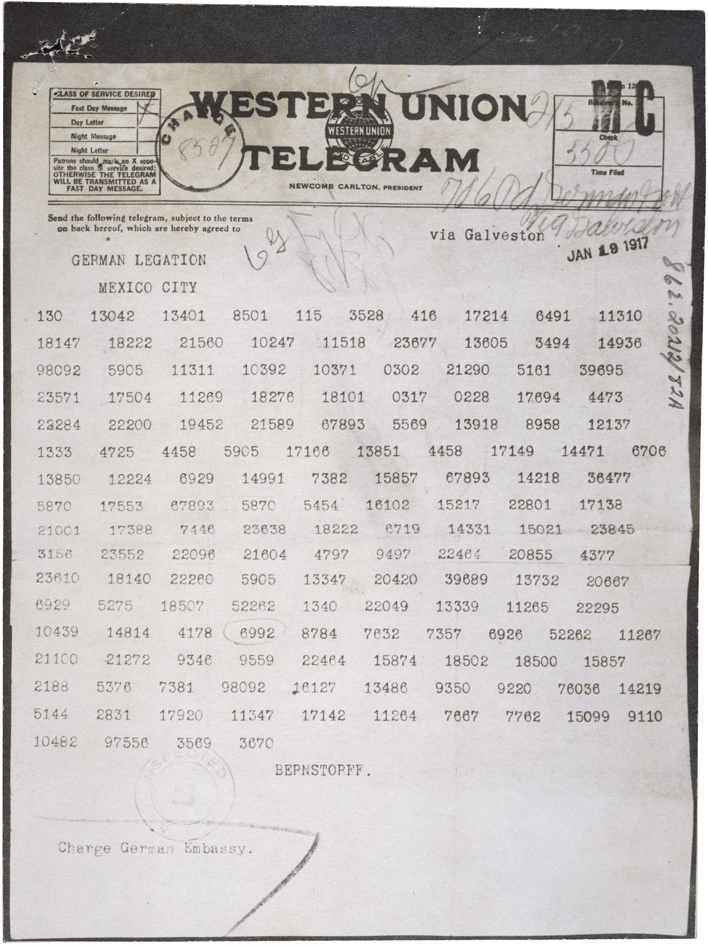

In cryptography, encryption is the process of encoding information. This process converts the original representation of the information, known as plaintext, into an alternative form known as ciphertext. Ideally, only authorized parties can decipher a ciphertext back to plaintext and access the original information. Encryption does not itself prevent interference but denies the intelligible content to a would-be interceptor. For technical reasons, an encryption scheme usually uses a pseudo-random encryption key generated by an algorithm. It is possible to decrypt the message without possessing the key but, for a well-designed encryption scheme, considerable computational resources and skills are required. An authorized recipient can easily decrypt the message with the key provided by the originator to recipients but not to unauthorized users. Historically, various forms of encryption have been used to aid in cryptography. Early encryption techniques were often used in milit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ciphertext

In cryptography, ciphertext or cyphertext is the result of encryption performed on plaintext using an algorithm, called a cipher. Ciphertext is also known as encrypted or encoded information because it contains a form of the original plaintext that is unreadable by a human or computer without the proper cipher to decrypt it. This process prevents the loss of sensitive information via hacking. Decryption, the inverse of encryption, is the process of turning ciphertext into readable plaintext. Ciphertext is not to be confused with codetext because the latter is a result of a code, not a cipher. Conceptual underpinnings Let m\! be the plaintext message that Alice wants to secretly transmit to Bob and let E_k\! be the encryption cipher, where _k\! is a cryptographic key. Alice must first transform the plaintext into ciphertext, c\!, in order to securely send the message to Bob, as follows: : c = E_k(m). \! In a symmetric-key system, Bob knows Alice's encryption key. Once the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Initialization Vector

In cryptography, an initialization vector (IV) or starting variable (SV) is an input to a cryptographic primitive being used to provide the initial state. The IV is typically required to be random or pseudorandom, but sometimes an IV only needs to be unpredictable or unique. Randomization is crucial for some encryption schemes to achieve semantic security, a property whereby repeated usage of the scheme under the same key does not allow an attacker to infer relationships between (potentially similar) segments of the encrypted message. For block ciphers, the use of an IV is described by the modes of operation. Some cryptographic primitives require the IV only to be non-repeating, and the required randomness is derived internally. In this case, the IV is commonly called a nonce (a number used only once), and the primitives (e.g. CBC) are considered ''stateful'' rather than ''randomized''. This is because an IV need not be explicitly forwarded to a recipient but may be derived from ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Probabilistic Encryption

Probabilistic encryption is the use of randomness in an encryption algorithm, so that when encrypting the same message several times it will, in general, yield different ciphertexts. The term "probabilistic encryption" is typically used in reference to public key encryption algorithms; however various symmetric key encryption algorithms achieve a similar property (e.g., block ciphers when used in a chaining mode such as CBC), and stream ciphers such as Freestyle which are inherently random. To be semantically secure, that is, to hide even partial information about the plaintext, an encryption algorithm must be probabilistic. History The first provably-secure probabilistic public-key encryption scheme was proposed by Shafi Goldwasser and Silvio Micali, based on the hardness of the quadratic residuosity problem and had a message expansion factor equal to the public key size. More efficient probabilistic encryption algorithms include Elgamal, Paillier, and various construction ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cocks Identity Based Encryption

Identity-based cryptography is a type of public-key cryptography in which a publicly known string representing an individual or organization is used as a public key. The public string could include an email address, domain name, or a physical IP address. The first implementation of identity-based signatures and an email-address based public-key infrastructure (PKI) was developed by Adi Shamir in 1984, which allowed users to verify digital signatures using only public information such as the user's identifier. Under Shamir's scheme, a trusted third party would deliver the private key to the user after verification of the user's identity, with verification essentially the same as that required for issuing a certificate in a typical PKI. Shamir similarly proposed identity-based encryption, which appeared particularly attractive since there was no need to acquire an identity's public key prior to encryption. However, he was unable to come up with a concrete solution, and identity-based ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding; encoding done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a signa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Error Correction Codes

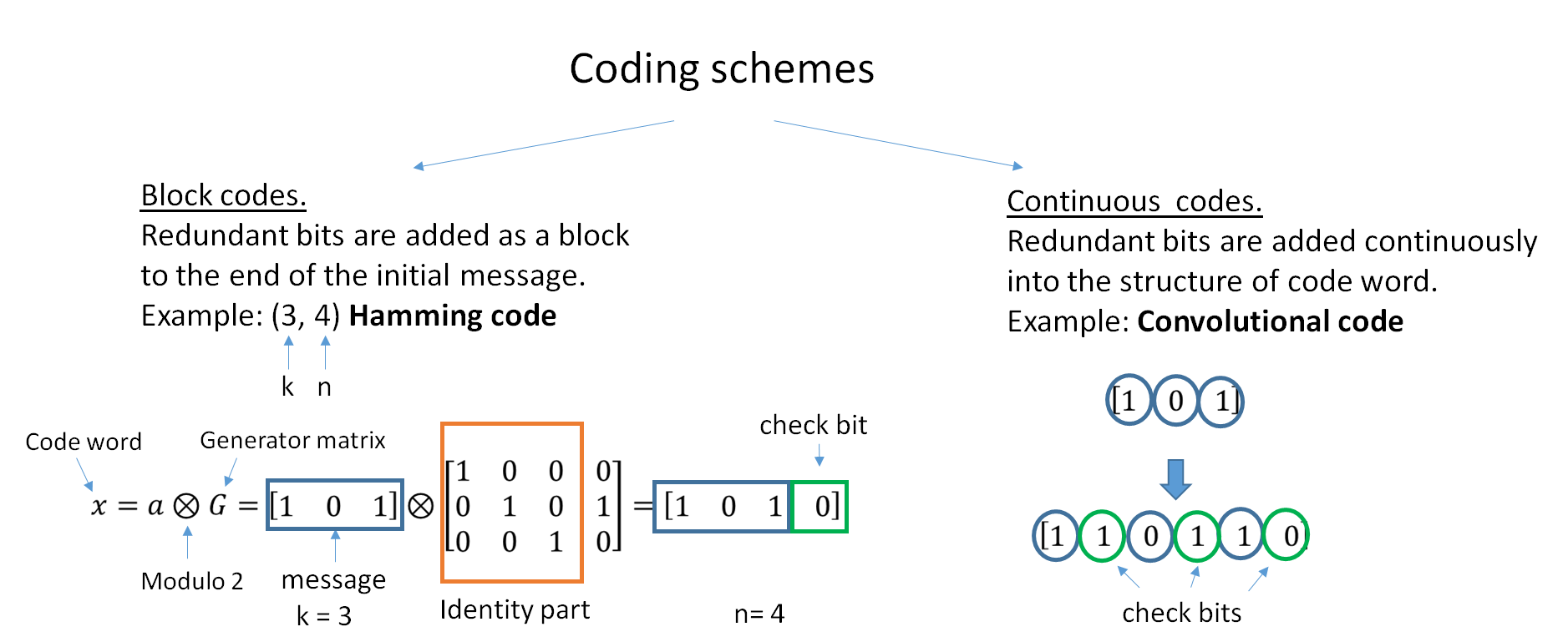

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a h ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |