
Canonical Decomposition

Unicode equivalence is the specification by the Unicode character encoding standard that some sequences of code points represent essentially the same character. This feature was introduced in the standard to allow compatibility with preexisting standard character sets, which often included similar or identical characters. Unicode provides two such notions: canonical equivalence and compatibility. Code point sequences that are defined as canonically equivalent are assumed to have the same appearance and meaning when printed or displayed. For example, the code point U+006E (the Latin lowercase "n") followed by U+0303 (the combining tilde "◌̃") is defined by Unicode to be canonically equivalent to the single code point U+00F1 (the lowercase letter "ñ" of the Spanish alphabet). Therefore, those sequences should be displayed in the same manner, should be treated in the same way by applications such as alphabetizing names or searching, and may be substituted for each other. Si ...
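A minimal Python sketch of the "n" + combining tilde example, assuming only the standard-library unicodedata module (its Unicode data version depends on the Python build). The helper canonically_equal is hypothetical; it compares strings after canonical decomposition (NFD), which is one common way to test canonical equivalence.

```python
import unicodedata

decomposed = "n\u0303"   # U+006E LATIN SMALL LETTER N + U+0303 COMBINING TILDE
precomposed = "\u00f1"   # U+00F1 LATIN SMALL LETTER N WITH TILDE


def canonically_equal(a: str, b: str) -> bool:
    """Hypothetical helper: compare strings after canonical decomposition (NFD)."""
    return unicodedata.normalize("NFD", a) == unicodedata.normalize("NFD", b)


# A plain comparison sees two different code point sequences...
print(decomposed == precomposed)          # False
print(len(decomposed), len(precomposed))  # 2 1
# ...but after normalization the two are interchangeable.
print(canonically_equal(decomposed, precomposed))               # True
print(unicodedata.normalize("NFC", decomposed) == precomposed)  # True
```

NFC recomposes the pair into the single code point, while NFD splits the precomposed letter into base letter plus combining mark; either works for comparison as long as both sides are normalized to the same form.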

Unicode

Unicode, formally The Unicode Standard, is an information technology standard for the consistent encoding, representation, and handling of text expressed in most of the world's writing systems. The standard is maintained by the Unicode Consortium; as of version 15.0 it defines 149,186 characters covering 161 modern and historic scripts, as well as symbols, emoji (including in color), and non-visual control and formatting codes. Unicode's success at unifying character sets has led to its widespread and predominant use in the internationalization and localization of computer software. The standard has been implemented in many recent technologies, including modern operating systems, XML, and most modern programming languages. The Unicode character repertoire is synchronized with ISO/IEC 10646 (the Universal Coded Character Set), each being code-for-code id ...
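To make "code point" and "encoding" concrete, here is a small Python sketch using only the standard library; describe is a hypothetical helper. It reports each character's code point in the conventional U+XXXX notation, its Unicode character name, and its UTF-8 byte encoding. Note that unicodedata uses the Unicode version bundled with the interpreter (unicodedata.unidata_version), which is not necessarily 15.0.

```python
from __future__ import annotations

import unicodedata


def describe(ch: str) -> tuple[str, str, bytes]:
    """Hypothetical helper: code point, Unicode name, and UTF-8 bytes of one character."""
    return f"U+{ord(ch):04X}", unicodedata.name(ch), ch.encode("utf-8")


print("Unicode data version:", unicodedata.unidata_version)
for ch in "A\u00f1\u30ab":  # "A", "ñ", "カ"
    print(*describe(ch))
# U+0041 LATIN CAPITAL LETTER A b'A'
# U+00F1 LATIN SMALL LETTER N WITH TILDE b'\xc3\xb1'
# U+30AB KATAKANA LETTER KA b'\xe3\x82\xab'
```

The same code point always has the same name; only the byte encoding (UTF-8 here) is a separate choice layered on top.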

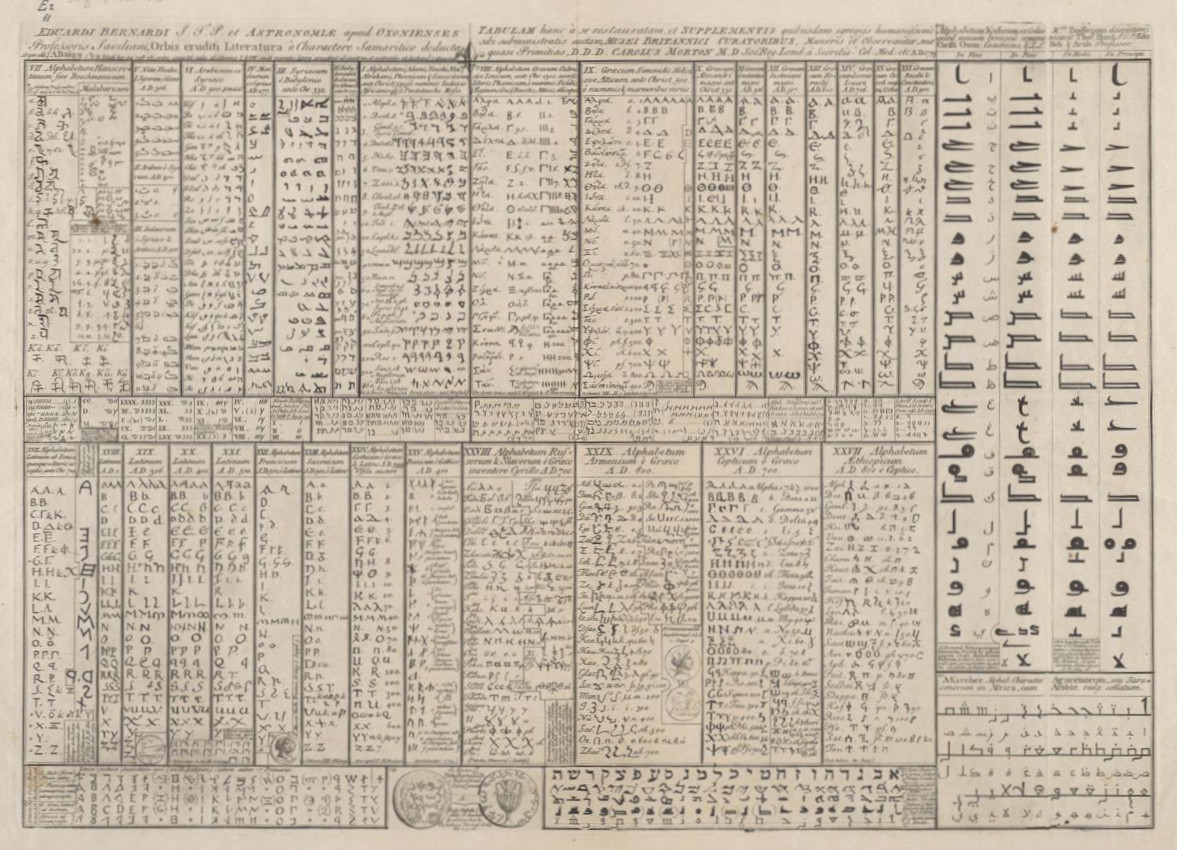

Alphabet

An alphabet is a standardized set of basic written graphemes (called letters) that represent the phonemes of certain spoken languages. Not all writing systems represent language in this way: in a syllabary, each character represents a syllable, and logographic systems use characters to represent words, morphemes, or other semantic units. The first fully phonemic script, the Proto-Sinaitic script, which later developed into the Phoenician alphabet, is considered to be the first alphabet and is the ancestor of most modern alphabets, including Arabic, Cyrillic, Greek, Hebrew, Latin, and possibly Brahmic. It was created by Semitic-speaking workers and slaves in the Sinai Peninsula, who selected a small number of hieroglyphs commonly seen in their Egyptian surroundings to represent the sounds, rather than the semantic values, of their Canaanite language. However, Peter T. Daniels distinguishes an abugida, a set of graphemes that represent consonantal base ...

Half-width Katakana

Half-width kana are katakana characters displayed compressed at half their normal width (a 1:2 aspect ratio), instead of the usual square (1:1) aspect ratio. For example, the usual (full-width) form of the katakana ka is カ (U+30AB), while the half-width form is ｶ (U+FF76). Half-width hiragana is not included in Unicode, although it can be used on the Web or in e-books via the CSS declaration font-feature-settings: "hwid" 1 with OpenType fonts based on Adobe-Japan1-6. Half-width kanji cannot be used on modern computers, but appear on some receipt printers, electronic bulletin boards, and old computers. Half-width kana were used in the early days of Japanese computing to allow Japanese characters to be displayed on the same grid as monospaced fonts of Latin characters; half-width kanji were not used for this purpose. Half-width kana characters are not generally used today, but find some use in specific settings, such as cash register displays, shop receipts, Japanese digital television and DVD subtitles, and mailing address labels. Their usage ...
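In Unicode, the half-width katakana carry compatibility (not canonical) decompositions to the ordinary full-width forms, so only the compatibility normal forms (NFKC/NFKD) fold them together. A minimal sketch with Python's standard unicodedata module; note that a half-width voiced syllable such as ｶﾞ is stored as two code points, ｶ followed by U+FF9E HALFWIDTH KATAKANA VOICED SOUND MARK.

```python
import unicodedata

half_ka = "\uff76"        # ｶ U+FF76 HALFWIDTH KATAKANA LETTER KA
full_ka = "\u30ab"        # カ U+30AB KATAKANA LETTER KA
half_ga = "\uff76\uff9e"  # ｶﾞ: half-width KA + U+FF9E HALFWIDTH KATAKANA VOICED SOUND MARK
full_ga = "\u30ac"        # ガ U+30AC KATAKANA LETTER GA

# The mapping is tagged <narrow>, i.e. it is a compatibility decomposition.
print(unicodedata.decomposition(half_ka))                 # <narrow> 30AB
# Canonical normalization leaves the half-width form unchanged...
print(unicodedata.normalize("NFC", half_ka) == half_ka)   # True
# ...while compatibility normalization maps it to the full-width form.
print(unicodedata.normalize("NFKC", half_ka) == full_ka)  # True
# NFKC also recombines the separate half-width voiced mark into one character.
print(unicodedata.normalize("NFKC", half_ga) == full_ga)  # True
```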

Typographic Ligature

In writing and typography, a ligature occurs where two or more graphemes or letters are joined to form a single glyph. Examples are the characters æ and œ used in English and French, in which the letters 'a' and 'e' are joined for the first ligature and the letters 'o' and 'e' are joined for the second. For stylistic and legibility reasons, 'f' and 'i' are often merged to create 'ﬁ' (where the tittle on the 'i' merges with the hood of the 'f'); the same is true of 's' and 't' to create 'ﬆ'. The common ampersand (&) developed from a ligature in which the handwritten Latin letters 'E' and 't' (spelling et, Latin for 'and') were combined. Historically, the earliest known scripts, Sumerian cuneiform and Egyptian hieratic, both include many cases of character combinations that gradually evolved from ligatures into separately recognizable characters. Other notable ligatures, such as the Brahmic abugidas and the Germanic bind rune, figure pr ...
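Unicode treats these two kinds of ligature differently: the typographic ﬁ ligature (U+FB01) is encoded only for compatibility and has a compatibility decomposition to plain f + i, whereas æ (U+00E6) is encoded as a letter in its own right with no decomposition at all. A minimal Python sketch with the standard unicodedata module:

```python
import unicodedata

fi_ligature = "\ufb01"  # ﬁ U+FB01 LATIN SMALL LIGATURE FI
ae = "\u00e6"           # æ U+00E6 LATIN SMALL LETTER AE

print(unicodedata.decomposition(fi_ligature))                    # <compat> 0066 0069
print(unicodedata.normalize("NFD", fi_ligature) == fi_ligature)  # True: no canonical decomposition
print(unicodedata.normalize("NFKC", fi_ligature))                # fi (two code points)
print(repr(unicodedata.decomposition(ae)))                       # '' (no decomposition)
print(unicodedata.normalize("NFKD", ae) == ae)                   # True
```

As a consequence, searching text after NFKC normalization matches "ﬁ" against "fi", but never "æ" against "ae".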

Normal Forms

Database normalization (or normalisation) is the process of structuring a relational database in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity. It was first proposed by British computer scientist Edgar F. Codd as part of his relational model. Normalization entails organizing the columns (attributes) and tables (relations) of a database to ensure that their dependencies are properly enforced by database integrity constraints. It is accomplished by applying some formal rules either by a process of synthesis (creating a new database design) or decomposition (improving an existing database design). A basic objective of the first normal form, defined by Codd in 1970, was to permit data to be queried and manipulated using a "universal data sub-language" grounded in first-order logic. An example of such a language is SQL, though it is one that Codd regarded as seriou ...
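As a toy illustration of normalization by decomposition, the Python sketch below uses sets of tuples as relations. The table and its columns (employee, department, location) are hypothetical, and the example assumes each department has exactly one location. Under that assumption, splitting the table stores each location once, and a natural join on department reconstructs the original rows, i.e. the decomposition is lossless.

```python
# Hypothetical relation (employee, department, location), where
# department -> location, so each location is repeated per employee.
rows = {
    ("Alice", "Sales", "Berlin"),
    ("Bob", "Sales", "Berlin"),
    ("Carol", "R&D", "Oslo"),
}

# Decompose into two relations by projection.
emp_dept = {(emp, dept) for emp, dept, _ in rows}
dept_loc = {(dept, loc) for _, dept, loc in rows}

# Natural join on department to check that no information was lost.
rejoined = {
    (emp, dept, loc)
    for emp, dept in emp_dept
    for dept2, loc in dept_loc
    if dept2 == dept
}

print(len(dept_loc))     # 2: "Berlin" is now stored once for Sales
print(rejoined == rows)  # True: the decomposition is lossless
```

If the assumed dependency did not hold (say, Sales had two locations), the join would produce spurious rows, which is why decomposition is guided by the table's dependencies.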

Precomposed Character

A precomposed character (alternatively composite character or decomposable character) is a Unicode entity that can also be defined as a sequence of one or more other characters. A precomposed character typically represents a letter with a diacritical mark, such as é (Latin small letter e with acute accent). Technically, é (U+00E9) is a character that can be decomposed into an equivalent string of the base letter e (U+0065) and the combining acute accent (U+0301). Similarly, ligatures are precompositions of their constituent letters or graphemes. Precomposed characters are the legacy solution for representing many special letters in various character sets. In Unicode, they are included primarily to aid computer systems with incomplete Unicode support, where equivalent decomposed characters may render incorrectly. To compare precomposed and decomposed characters, consider the common Swedish surname Åström, written in the two alternative me ...
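A minimal Python sketch of that comparison, using the standard unicodedata module. Both spellings of Åström are built explicitly from escape sequences so the difference is visible; the variable names are illustrative.

```python
import unicodedata

# Å (U+00C5) and ö (U+00F6) as single precomposed code points.
precomposed = "\u00c5str\u00f6m"
# A + U+030A COMBINING RING ABOVE, o + U+0308 COMBINING DIAERESIS.
decomposed = "A\u030astro\u0308m"

print(precomposed == decomposed)                                # False
print(len(precomposed), len(decomposed))                        # 6 8
print(unicodedata.normalize("NFC", decomposed) == precomposed)  # True
print(unicodedata.normalize("NFD", precomposed) == decomposed)  # True
# é decomposes canonically into e + combining acute accent.
print(unicodedata.decomposition("\u00e9"))                      # 0065 0301
```

A naive equality check or length count therefore treats the two spellings as different strings; normalizing both sides to the same form first (NFC is a common choice for storage) avoids this.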

Dakuten

The dakuten, colloquially ten-ten, is a diacritic most often used in the Japanese kana syllabaries to indicate that the consonant of a syllable should be pronounced voiced, for instance, on sounds that have undergone rendaku (sequential voicing). The handakuten, colloquially maru, is a diacritic used with the kana for syllables starting with h to indicate that they should instead be pronounced with [p]. The kun'yomi pronunciation of the character 濁 is nigori; hence the daku-ten may also be called the nigori-ten. This character, meaning "muddy" or "turbid", stems from historical Chinese phonology, where consonants were traditionally classified as "clear" (voiceless), "lesser-clear" (aspirated), and "muddy" (voiced) (see Middle Chinese § Initials). Dakuten have been used sporadically since the start of written Japanese, and their use tended to become more common over time. The modern practice of using dakuten in all cases of voicing in all writing only came ...
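Unicode encodes voiced kana such as が and ぱ as precomposed characters that canonically decompose into the base kana plus a combining voiced (U+3099) or semi-voiced (U+309A) sound mark. The separate spacing marks ゛ (U+309B) and ゜ (U+309C) have only compatibility decompositions, so NFC does not merge them with a preceding kana. A minimal sketch with Python's standard unicodedata module:

```python
import unicodedata

ka, ga = "\u304b", "\u304c"  # か HIRAGANA LETTER KA, が HIRAGANA LETTER GA
ha, pa = "\u306f", "\u3071"  # は HIRAGANA LETTER HA, ぱ HIRAGANA LETTER PA
DAKUTEN = "\u3099"           # COMBINING KATAKANA-HIRAGANA VOICED SOUND MARK
HANDAKUTEN = "\u309a"        # COMBINING KATAKANA-HIRAGANA SEMI-VOICED SOUND MARK
SPACING_DAKUTEN = "\u309b"   # ゛ KATAKANA-HIRAGANA VOICED SOUND MARK (spacing form)

print(unicodedata.decomposition(ga))                        # 304B 3099
print(unicodedata.normalize("NFC", ka + DAKUTEN) == ga)     # True
print(unicodedata.normalize("NFC", ha + HANDAKUTEN) == pa)  # True
# The spacing mark is not combined under canonical normalization.
print(unicodedata.normalize("NFC", ka + SPACING_DAKUTEN) == ga)  # False
```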

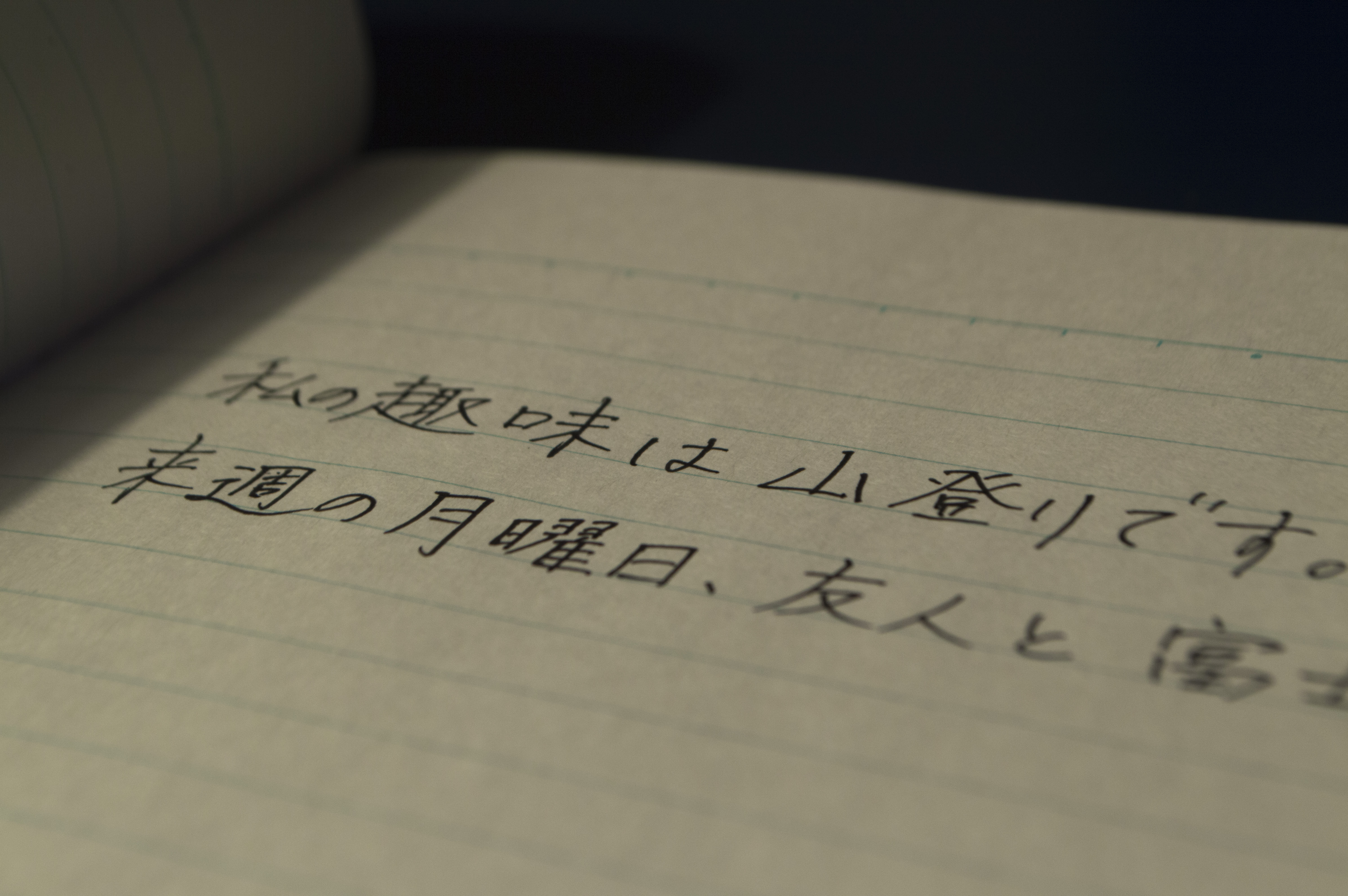

Japanese Script

The modern Japanese writing system uses a combination of logographic kanji, which are adopted Chinese characters, and syllabic kana. Kana itself consists of a pair of syllabaries: hiragana, used primarily for native or naturalised Japanese words and grammatical elements; and katakana, used primarily for foreign words and names, loanwords, onomatopoeia, scientific names, and sometimes for emphasis. Almost all written Japanese sentences contain a mixture of kanji and kana. Because of this mixture of scripts, in addition to a large inventory of kanji characters, the Japanese writing system is considered to be one of the most complicated currently in use. Several thousand kanji are in regular use, most of which originate from traditional Chinese characters. Others, made in Japan, are referred to as “Japanese kanji” (和製漢字, wasei kanji; also known as “country’s kanji”, 国字, kokuji). Each character has an intrinsic ...