
Cumulant Generating Function

In probability theory and statistics, the cumulants of a probability distribution are a set of quantities that provide an alternative to the ''moments'' of the distribution. Any two probability distributions whose moments are identical will have identical cumulants as well, and vice versa. The first cumulant is the mean, the second cumulant is the variance, and the third cumulant is the same as the third central moment. But fourth and higher-order cumulants are not equal to central moments. In some cases theoretical treatments of problems in terms of cumulants are simpler than those using moments. In particular, when two or more random variables are statistically independent, the ''n''-th-order cumulant of their sum is equal to the sum of their ''n''-th-order cumulants. Moreover, the third and higher-order cumulants of a normal distribution are zero, and it is the only distribution with this property. Just as for moments, where ''joint moments'' are used for collections of random var ...
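
The statements about low-order cumulants can be checked numerically. The following is a minimal sketch, not taken from the source: it assumes the standard moment-to-cumulant recursion κ_n = m_n − Σ_{k=1}^{n−1} C(n−1, k−1) κ_k m_{n−k}, and the function name `cumulants_from_moments` is hypothetical.

```python
from math import comb


def cumulants_from_moments(raw_moments):
    """Convert raw moments E[X], E[X^2], ... into cumulants kappa_1, kappa_2, ...

    raw_moments[i] is assumed to hold E[X**(i + 1)].
    """
    m = [1] + list(raw_moments)   # m[0] = E[X**0] = 1
    kappa = [0] * len(m)          # kappa[0] is an unused placeholder
    for n in range(1, len(m)):
        # Subtract the contribution of all lower-order cumulants.
        correction = sum(
            comb(n - 1, k - 1) * kappa[k] * m[n - k] for k in range(1, n)
        )
        kappa[n] = m[n] - correction
    return kappa[1:]


# Raw moments of the standard normal distribution: 0, 1, 0, 3, 0, 15.
normal_cumulants = cumulants_from_moments([0, 1, 0, 3, 0, 15])
print(normal_cumulants)  # mean 0, variance 1, all higher cumulants 0
```

For the standard normal the fourth raw (and central) moment is 3 while the fourth cumulant is 0, which illustrates why fourth and higher-order cumulants differ from central moments.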

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly p ...

Edgeworth Series

The Gram–Charlier A series (named in honor of Jørgen Pedersen Gram and Carl Charlier) and the Edgeworth series (named in honor of Francis Ysidro Edgeworth) are series that approximate a probability distribution in terms of its cumulants. The series are the same, but the arrangement of terms (and thus the accuracy of truncating the series) differs. The key idea of these expansions is to write the characteristic function of the distribution whose probability density function ''f'' is to be approximated in terms of the characteristic function of a distribution with known and suitable properties, and to recover ''f'' through the inverse Fourier transform. Gram–Charlier A series: we examine a continuous random variable. Let \hat{f} be the characteristic function of its distribution whose density function is ''f'', and \kappa_r its cumulants. We expand in terms of a known distribution with probability density function \psi, characteristic function \hat{\psi}, and cumulants \gamma_r. The density is generally ...

Bernoulli Number

In mathematics, the Bernoulli numbers are a sequence of rational numbers which occur frequently in analysis. The Bernoulli numbers appear in (and can be defined by) the Taylor series expansions of the tangent and hyperbolic tangent functions, in Faulhaber's formula for the sum of ''m''-th powers of the first ''n'' positive integers, in the Euler–Maclaurin formula, and in expressions for certain values of the Riemann zeta function. The values of the first 20 Bernoulli numbers are given in the adjacent table. Two conventions are used in the literature, denoted here by B^{-}_n and B^{+}_n; they differ only for ''n'' = 1, where B^{-}_1 = -1/2 and B^{+}_1 = +1/2. For every odd ''n'' > 1, B_n = 0. For every even ''n'' > 0, B_n is negative if ''n'' is divisible by 4 and positive otherwise. The Bernoulli numbers are special values of the Bernoulli polynomials B_n(x), with B^{-}_n = B_n(0) and B^{+}_n = B_n(1). The Bernoulli numbers were discovered around the same time by the Swiss mathematician Jacob Bernoulli, after whom they are named, and i ...
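
The sign and parity pattern described above can be reproduced with exact rational arithmetic. This is an illustrative sketch only: it assumes the B^{-} convention (B_1 = -1/2) and the standard recurrence Σ_{k=0}^{m} C(m+1, k) B_k = 0 for m ≥ 1, and the function name `bernoulli_numbers` is hypothetical.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(count):
    """Return [B_0, ..., B_{count-1}] in the convention where B_1 = -1/2."""
    B = [Fraction(1)]
    for m in range(1, count):
        # Solve sum_{k=0}^{m} C(m+1, k) * B_k = 0 for B_m.
        partial = sum(comb(m + 1, k) * B[k] for k in range(m))
        B.append(-partial / (m + 1))
    return B


B = bernoulli_numbers(13)
for n, value in enumerate(B):
    print(n, value)
```

Every odd index above 1 yields zero, and the even-indexed values alternate in sign, being negative exactly when the index is divisible by 4.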

Uniform Distribution (continuous)

In probability theory and statistics, the continuous uniform distribution or rectangular distribution is a family of symmetric probability distributions. The distribution describes an experiment where there is an arbitrary outcome that lies between certain bounds. The bounds are defined by the parameters ''a'' and ''b'', which are the minimum and maximum values. The interval can either be closed (e.g. [''a'', ''b'']) or open (e.g. (''a'', ''b'')). Therefore, the distribution is often abbreviated ''U''(''a'', ''b''), where ''U'' stands for uniform distribution. The difference between the bounds defines the interval length; all intervals of the same length on the distribution's support are equally probable. It is the maximum entropy probability distribution for a random variable ''X'' under no constraint other than that it is contained in the distribution's support. Definitions: Probability density function. The probability density function of the continuous uniform distribution is: : f(x)=\be ...

Expected Value

In probability theory, the expected value (also called expectation, expectancy, mathematical expectation, mean, average, or first moment) is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of a large number of independently selected outcomes of a random variable. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable ''X'' is often denoted by E(''X''), E[''X''], or E''X'', with E also often stylized as ''E'' or \mathbb{E}. History: The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes ''in a fair way'' between two players, who have to e ...

Eccentricity (mathematics)

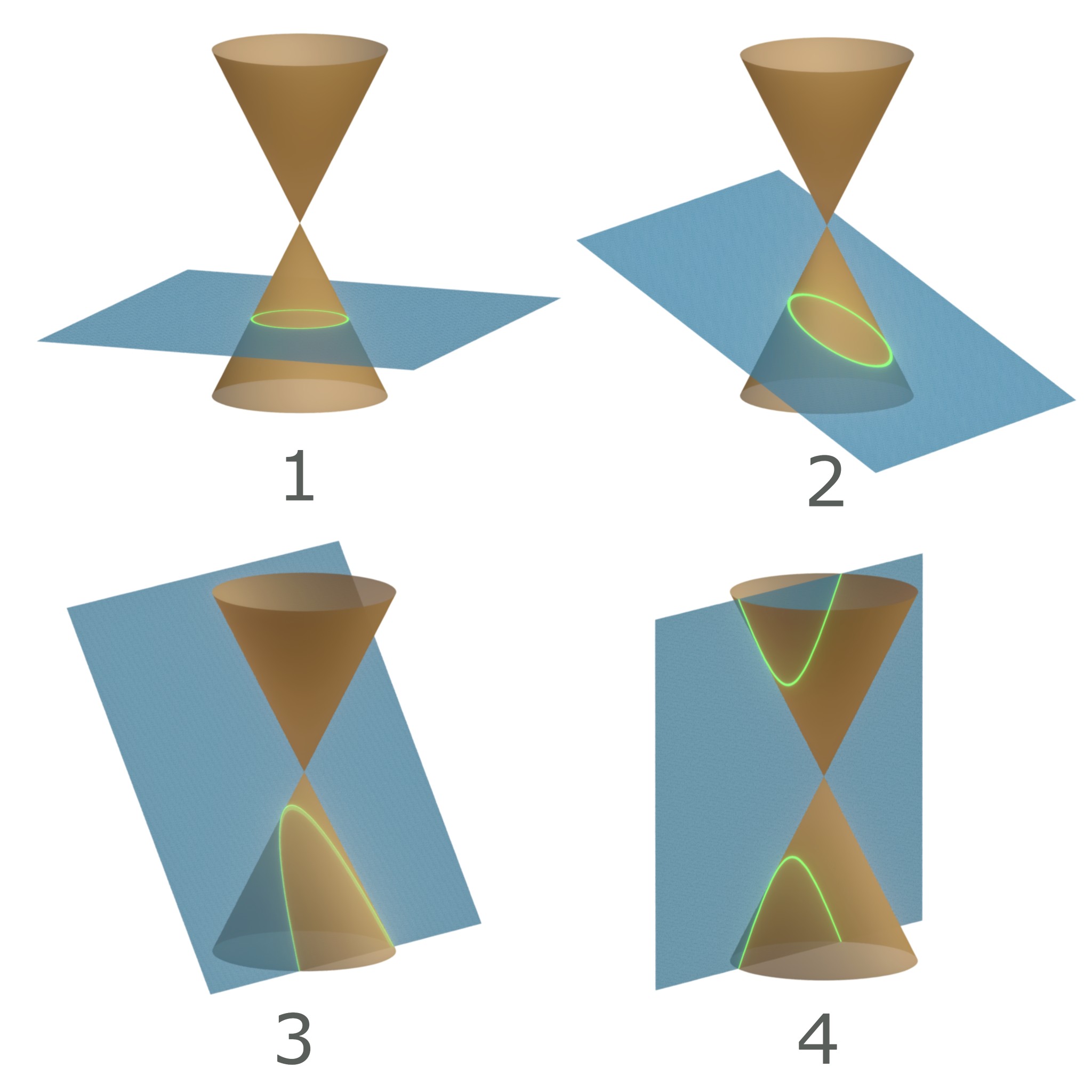

In mathematics, the eccentricity of a conic section is a non-negative real number that uniquely characterizes its shape. More formally, two conic sections are similar if and only if they have the same eccentricity. One can think of the eccentricity as a measure of how much a conic section deviates from being circular. In particular:
* The eccentricity of a circle is zero.
* The eccentricity of an ellipse which is not a circle is greater than zero but less than 1.
* The eccentricity of a parabola is 1.
* The eccentricity of a hyperbola is greater than 1.
* The eccentricity of a pair of lines is \infty.
Definitions: Any conic section can be defined as the locus of points whose distances to a point (the focus) and a line (the directrix) are in a constant ratio. That ratio is called the eccentricity, commonly denoted as ''e''. The eccentricity can also be defined in terms of the intersection of a plane and a double-napped cone associated with the conic section. If the cone is orie ...
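
The classification by eccentricity listed above can be written as a small lookup. This is only a sketch; the function name `classify_conic` is hypothetical, and exact comparisons against 0 and 1 are a simplification that a numerical application would likely replace with a tolerance.

```python
import math


def classify_conic(e):
    """Name the conic type for a given eccentricity e (non-negative, may be inf)."""
    if math.isnan(e) or e < 0:
        raise ValueError("eccentricity must be a non-negative number")
    if e == 0:
        return "circle"
    if e < 1:
        return "ellipse"
    if e == 1:
        return "parabola"
    if math.isinf(e):
        return "pair of lines"
    return "hyperbola"


for e in (0.0, 0.5, 1.0, 2.0, math.inf):
    print(e, classify_conic(e))
```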

Conic Sections

In mathematics, a conic section, quadratic curve or conic is a curve obtained as the intersection of the surface of a cone with a plane. The three types of conic section are the hyperbola, the parabola, and the ellipse; the circle is a special case of the ellipse, though historically it was sometimes called a fourth type. The ancient Greek mathematicians studied conic sections, culminating around 200 BC with Apollonius of Perga's systematic work on their properties. The conic sections in the Euclidean plane have various distinguishing properties, many of which can be used as alternative definitions. One such property defines a non-circular conic to be the set of those points whose distances to some particular point, called a ''focus'', and some particular line, called a ''directrix'', are in a fixed ratio, called the ''eccentricity''. The type of conic is determined by the value of the eccentricity. In analytic geometry, a conic may be defined as a plane algebraic ...

Variance-to-mean Ratio

In probability theory and statistics, the index of dispersion, dispersion index, coefficient of dispersion, relative variance, or variance-to-mean ratio (VMR), like the coefficient of variation, is a normalized measure of the dispersion of a probability distribution: it is a measure used to quantify whether a set of observed occurrences are clustered or dispersed compared to a standard statistical model. It is defined as the ratio of the variance \sigma^2 to the mean \mu: D = \sigma^2 / \mu. It is also known as the Fano factor, though this term is sometimes reserved for ''windowed'' data (the mean and variance are computed over a subpopulation), where the index of dispersion is used in the special case where the window is infinite. Windowing data is frequently done: the VMR is frequently computed over various intervals in time or small regions in space, which may be called "windows", and the resulting statistic called the Fano factor. It is only defined when the mean \mu is non-zero, and ...
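
As an illustration of the definition D = \sigma^2 / \mu, the sketch below computes the ratio for a small data set. The function name `variance_to_mean_ratio` and the sample counts are made up for this example, and the use of the population variance (rather than the sample variance) is an assumption.

```python
from statistics import fmean, pvariance


def variance_to_mean_ratio(data):
    """Return population variance divided by mean; undefined for a zero mean."""
    mu = fmean(data)
    if mu == 0:
        raise ValueError("VMR is only defined when the mean is non-zero")
    return pvariance(data) / mu


counts = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical counts per window
vmr = variance_to_mean_ratio(counts)
print(vmr)
```

Here the mean is 5 and the population variance is 4, so the ratio is 0.8.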

Limiting Case (mathematics)

In mathematics, a limiting case of a mathematical object is a special case that arises when one or more components of the object take on their most extreme possible values. For example:
* In statistics, the limiting case of the binomial distribution is the Poisson distribution. As the number of events tends to infinity in the binomial distribution, the random variable changes from the binomial to the Poisson distribution.
* A circle is a limiting case of various other figures, including the Cartesian oval, the ellipse, the superellipse, and the Cassini oval. Each type of figure is a circle for certain values of the defining parameters, and the generic figure appears more like a circle as the limiting values are approached.
* Archimedes calculated an approximate value of π by treating the circle as the limiting case of a regular polygon with 3 × 2^''n'' sides, as ''n'' gets large.
* In electricity and magnetism, the long wavelength limit is the limiting case when the wavelen ...
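
The polygon example can be sketched numerically. The code below is an illustration under stated assumptions, not a reconstruction of Archimedes' own procedure: it uses only inscribed polygons in a unit circle, starts from the hexagon (''n'' = 1, side length 1), and doubles the number of sides with the identity s_{2N} = s_N / sqrt(2 + sqrt(4 − s_N²)). The function name `archimedes_pi` is hypothetical.

```python
import math


def archimedes_pi(n):
    """Half-perimeter of the regular polygon with 3 * 2**n sides inscribed in a unit circle."""
    if n < 1:
        raise ValueError("n must be at least 1 (the hexagon)")
    sides, side_length = 6, 1.0  # n = 1: inscribed hexagon
    for _ in range(n - 1):
        # Side length after doubling the number of sides (numerically stable form).
        side_length = side_length / math.sqrt(2.0 + math.sqrt(4.0 - side_length**2))
        sides *= 2
    return sides * side_length / 2.0


for n in (1, 2, 5, 10, 20):
    print(3 * 2**n, archimedes_pi(n))
```

The values increase toward π as ''n'' grows; ''n'' = 5 corresponds to a 96-sided polygon.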

Negative Binomial Distribution

In probability theory and statistics, the negative binomial distribution is a discrete probability distribution that models the number of failures in a sequence of independent and identically distributed Bernoulli trials before a specified (non-random) number of successes (denoted ''r'') occurs. For example, we can define rolling a 6 on a die as a success, and rolling any other number as a failure, and ask how many failure rolls will occur before we see the third success (''r'' = 3). In such a case, the probability distribution of the number of failures that appear will be a negative binomial distribution. An alternative formulation is to model the number of total trials (instead of the number of failures). In fact, for a specified (non-ra ...
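
The die example can be made concrete with the failure-count formulation. The sketch below assumes the standard probability mass function P(K = k) = C(k + r − 1, k) p^r (1 − p)^k for the number of failures k before the r-th success; the function name `neg_binomial_pmf` is hypothetical.

```python
from fractions import Fraction
from math import comb


def neg_binomial_pmf(k, r, p):
    """Probability of exactly k failures before the r-th success (success probability p)."""
    return comb(k + r - 1, k) * p**r * (1 - p) ** k


p_six = Fraction(1, 6)  # rolling a 6 counts as a success
r = 3                   # stop at the third success
probs = [neg_binomial_pmf(k, r, p_six) for k in range(4)]
for k, prob in enumerate(probs):
    print(k, prob)
```

With zero failures the probability is (1/6)^3 = 1/216, the chance that the first three rolls are all sixes.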

Statistical Independence

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independenc ...

Binomial Distribution

In probability theory and statistics, the binomial distribution with parameters ''n'' and ''p'' is the discrete probability distribution of the number of successes in a sequence of ''n'' independent experiments, each asking a yes–no question, and each with its own Boolean-valued outcome: ''success'' (with probability ''p'') or ''failure'' (with probability ''q'' = 1 − ''p''). A single success/failure experiment is also called a Bernoulli trial or Bernoulli experiment, and a sequence of outcomes is called a Bernoulli process; for a single trial, i.e., ''n'' = 1, the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis for the popular binomial test of statistical significance. The binomial distribution is frequently used to model the number of successes in a sample of size ''n'' drawn with replacement from a population of size ''N''. If the sampling is carried out without replacement, the draws are not independent and so the resultin ...
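
A short sketch of the distribution described above, assuming the standard probability mass function P(K = k) = C(n, k) p^k q^(n − k); the function name `binomial_pmf` and the parameter values are chosen only for illustration.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials with success probability p."""
    q = 1 - p  # failure probability
    return comb(n, k) * p**k * q ** (n - k)


p = Fraction(1, 3)
distribution = [binomial_pmf(k, 5, p) for k in range(6)]
print(distribution, sum(distribution))

# With n = 1 the distribution reduces to a Bernoulli distribution.
bernoulli = [binomial_pmf(k, 1, p) for k in range(2)]
print(bernoulli)
```

Using `Fraction` keeps the probabilities exact, so the values over k = 0, ..., n sum to exactly 1.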